Reference: https://www.computervision.zone/topic/volumehandcontrol-py/

Main program:

VolumeHandControl.py

import cv2

import time

import numpy as np

import HandTrackingModule as htm

import math

from ctypes import cast, POINTER

from comtypes import CLSCTX_ALL

from pycaw.pycaw import AudioUtilities, IAudioEndpointVolume

################################

wCam, hCam = 640, 480

################################

cap = cv2.VideoCapture(0)

cap.set(3, wCam)

cap.set(4, hCam)

pTime = 0

detector = htm.handDetector(min_detection_confidence=0.7)

devices = AudioUtilities.GetSpeakers()

interface = devices.Activate(IAudioEndpointVolume._iid_, CLSCTX_ALL, None)

volume = cast(interface, POINTER(IAudioEndpointVolume))

# volume.GetMute()

# volume.GetMasterVolumeLevel()

volRange = volume.GetVolumeRange()

minVol = volRange[0]

maxVol = volRange[1]

vol = 0

volBar = 400

volPer = 0

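while True:

    success, img = cap.read()

    if not success:

        break  # stop when the camera returns no frame

    img = detector.findHands(img)

    # findPosition returns a tuple: (landmark list, bounding box)

    lmList = detector.findPosition(img, draw=False)

    # print(lmList)

    if len(lmList[0]) != 0:

        # print(lmList[4], lmList[8])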

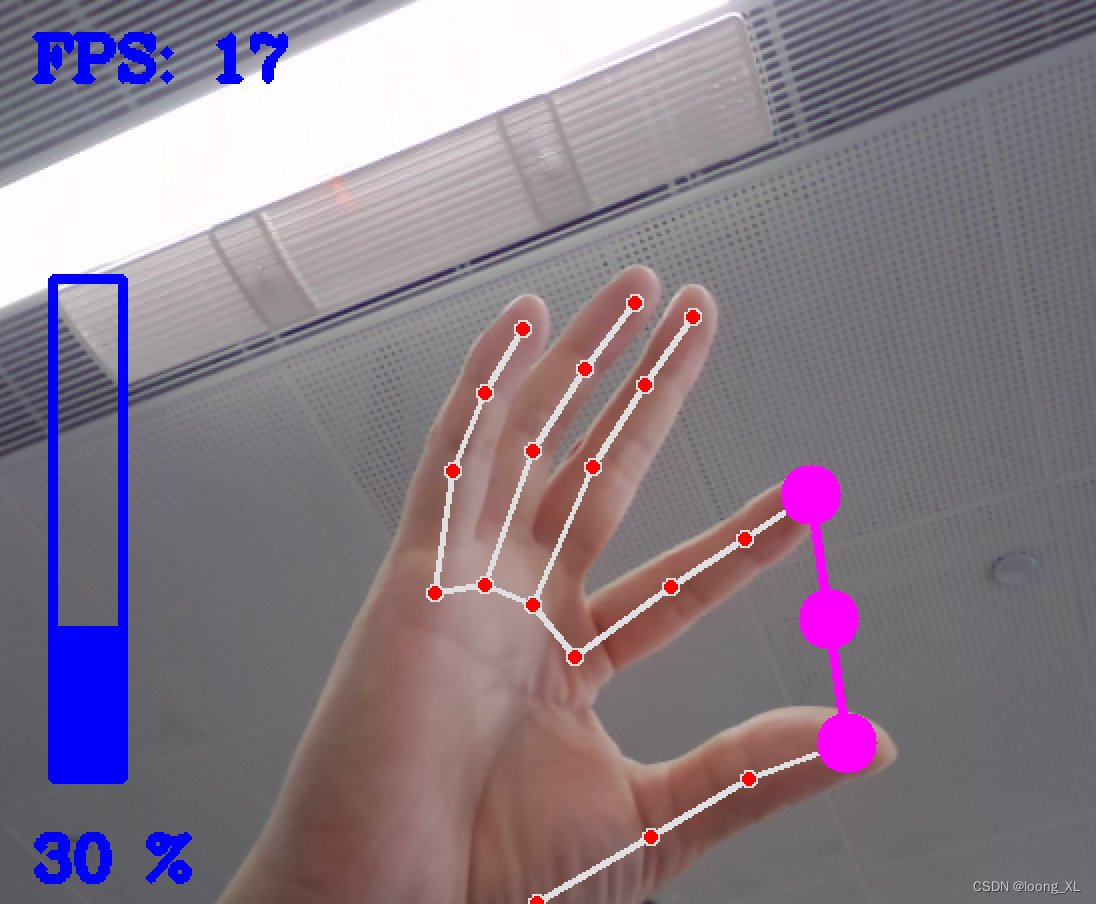

        # Landmark 4 is the thumb tip, landmark 8 is the index fingertip

        x1, y1 = lmList[0][4][1], lmList[0][4][2]

        x2, y2 = lmList[0][8][1], lmList[0][8][2]

        cx, cy = (x1 + x2) // 2, (y1 + y2) // 2

        cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)

        cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)

        cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)

        cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)

        length = math.hypot(x2 - x1, y2 - y1)

        print(x2 - x1, y2 - y1)

        # Hand range 50 - 300

        # Volume Range -65 - 0

        vol = np.interp(length, [50, 300], [minVol, maxVol])

        volBar = np.interp(length, [50, 300], [400, 150])

        volPer = np.interp(length, [50, 300], [0, 100])

        # print(int(length), vol)

        volume.SetMasterVolumeLevel(vol, None)

        if length < 50:

            cv2.circle(img, (cx, cy), 15, (0, 255, 0), cv2.FILLED)

    cv2.rectangle(img, (50, 150), (85, 400), (255, 0, 0), 3)

    cv2.rectangle(img, (50, int(volBar)), (85, 400), (255, 0, 0), cv2.FILLED)

    cv2.putText(img, f'{int(volPer)} %', (40, 450), cv2.FONT_HERSHEY_COMPLEX,

                1, (255, 0, 0), 3)

    cTime = time.time()

    fps = 1 / (cTime - pTime)

    pTime = cTime

    cv2.putText(img, f'FPS: {int(fps)}', (40, 50), cv2.FONT_HERSHEY_COMPLEX,

                1, (255, 0, 0), 3)

    cv2.imshow("Img", img)

    # A single waitKey call both refreshes the window and polls for 'q'

    if cv2.waitKey(1) & 0xFF == ord('q'):

        break

cap.release()

cv2.destroyAllWindows()
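The loop above relies on `np.interp` to turn the thumb-to-index distance into a volume level, a bar height, and a percentage. As a rough, dependency-free sketch of what that two-point mapping does: the helper name `map_range` is hypothetical and not part of the original script, and the 50-300 px hand range and -65..0 dB volume range are simply the values from the script's comments (a real audio device may report a different range).

```python
def map_range(value, src, dst):
    """Linearly map value from the src interval to the dst interval.

    Mirrors np.interp(value, src, dst) for the two-point case,
    including clamping at both ends. Assumes src[0] < src[1].
    """
    lo, hi = src
    # Clamp first, as np.interp does for inputs outside the source interval.
    clamped = min(max(value, lo), hi)
    t = (clamped - lo) / (hi - lo)
    return dst[0] + t * (dst[1] - dst[0])


# Assumed values taken from the comments in the script above.
length = 175  # halfway between 50 and 300 pixels
vol_db = map_range(length, (50, 300), (-65.0, 0.0))
vol_bar = map_range(length, (50, 300), (400, 150))
vol_per = map_range(length, (50, 300), (0, 100))
print(vol_db, vol_bar, vol_per)
```

Note that the bar interval is reversed (400 down to 150) because image y coordinates grow downward, so a larger distance gives a smaller y and therefore a taller bar.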

HandTrackingModule.py

import cv2

import mediapipe as mp

import time

import math

class handDetector():

    def __init__(self, mode=False, max_num_hands=2, min_detection_confidence=0.5, min_tracking_confidence=0.5):

        self.mode = mode

        self.max_num_hands = max_num_hands

        self.min_detection_confidence = min_detection_confidence

        self.min_tracking_confidence = min_tracking_confidence

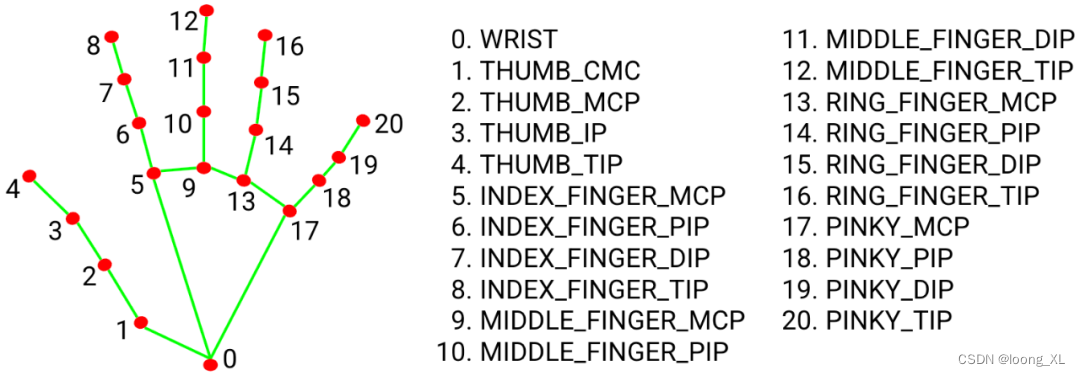

        self.mpHands = mp.solutions.hands

        self.hands = self.mpHands.Hands(static_image_mode=self.mode,

                                        max_num_hands=self.max_num_hands,

                                        min_detection_confidence=self.min_detection_confidence,

                                        min_tracking_confidence=self.min_tracking_confidence)

        self.mpDraw = mp.solutions.drawing_utils

        # Landmark ids of the five fingertips: thumb, index, middle, ring, pinky

        self.tipIds = [4, 8, 12, 16, 20]

    def findHands(self, img, draw=True):

        imgRGB = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

        self.results = self.hands.process(imgRGB)

        # print(results.multi_hand_landmarks)

        if self.results.multi_hand_landmarks:

            for handLms in self.results.multi_hand_landmarks:

                if draw:

                    self.mpDraw.draw_landmarks(img, handLms, self.mpHands.HAND_CONNECTIONS)

        return img

    def findPosition(self, img, handNo=0, draw=True):

        xList = []

        yList = []

        bbox = []

        self.lmList = []

        if self.results.multi_hand_landmarks:

            myHand = self.results.multi_hand_landmarks[handNo]

            for id, lm in enumerate(myHand.landmark):

                # print(id, lm)

                h, w, c = img.shape

                # Landmarks are normalized to [0, 1]; convert them to pixels

                cx, cy = int(lm.x * w), int(lm.y * h)

                xList.append(cx)

                yList.append(cy)

                # print(id, cx, cy)

                self.lmList.append([id, cx, cy])

                if draw:

                    cv2.circle(img, (cx, cy), 5, (255, 0, 255), cv2.FILLED)

            xmin, xmax = min(xList), max(xList)

            ymin, ymax = min(yList), max(yList)

            bbox = xmin, ymin, xmax, ymax

            if draw:

                cv2.rectangle(img, (bbox[0] - 20, bbox[1] - 20), (bbox[2] + 20, bbox[3] + 20), (0, 255, 0), 2)

        return self.lmList, bbox

    def fingersUp(self):

        fingers = []

        # Thumb

        if self.lmList[self.tipIds[0]][1] > self.lmList[self.tipIds[0] - 1][1]:

            fingers.append(1)

        else:

            fingers.append(0)

        # 4 Fingers

        for id in range(1, 5):

            if self.lmList[self.tipIds[id]][2] < self.lmList[self.tipIds[id] - 2][2]:

                fingers.append(1)

            else:

                fingers.append(0)

        return fingers

    def findDistance(self, p1, p2, img, draw=True):

        x1, y1 = self.lmList[p1][1], self.lmList[p1][2]

        x2, y2 = self.lmList[p2][1], self.lmList[p2][2]

        cx, cy = (x1 + x2) // 2, (y1 + y2) // 2

        if draw:

            cv2.circle(img, (x1, y1), 15, (255, 0, 255), cv2.FILLED)

            cv2.circle(img, (x2, y2), 15, (255, 0, 255), cv2.FILLED)

            cv2.line(img, (x1, y1), (x2, y2), (255, 0, 255), 3)

            cv2.circle(img, (cx, cy), 15, (255, 0, 255), cv2.FILLED)

        length = math.hypot(x2 - x1, y2 - y1)

        return length, img, [x1, y1, x2, y2, cx, cy]

def main():

    pTime = 0

    cap = cv2.VideoCapture(0)

    detector = handDetector()

    while True:

        success, img = cap.read()

        img = detector.findHands(img)

        # findPosition returns (landmark list, bounding box), so unpack both

        lmList, bbox = detector.findPosition(img)

        if len(lmList) != 0:

            print(lmList[4])

        cTime = time.time()

        fps = 1 / (cTime - pTime)

        pTime = cTime

        cv2.putText(img, str(int(fps)), (10, 70), cv2.FONT_HERSHEY_PLAIN, 3,

                    (255, 0, 255), 3)

        cv2.imshow("Image", img)

        cv2.waitKey(1)

if __name__ == "__main__":

    main()
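The `fingersUp` test in the module can be tried without a camera. The following is a minimal sketch that applies the same comparisons to hand-built landmark data; `fingers_up` and `synthetic_hand` are hypothetical stand-alone helpers written for this illustration, not part of the module. The thumb check compares x coordinates, so its result depends on which hand is shown and on whether the image is mirrored.

```python
TIP_IDS = [4, 8, 12, 16, 20]  # thumb, index, middle, ring, pinky tips


def fingers_up(lm_list):
    """Return five 0/1 flags from 21 landmarks of the form [id, x, y].

    Coordinates are pixels with y growing downward, as in findPosition.
    """
    fingers = []
    # Thumb: tip (4) further right than the joint below it (3).
    fingers.append(1 if lm_list[TIP_IDS[0]][1] > lm_list[TIP_IDS[0] - 1][1] else 0)
    # Other fingers: tip higher on screen (smaller y) than the joint two ids below.
    for tip in TIP_IDS[1:]:
        fingers.append(1 if lm_list[tip][2] < lm_list[tip - 2][2] else 0)
    return fingers


def synthetic_hand(raised):
    """Hypothetical helper: fake landmarks with the given finger indices raised."""
    lm = [[i, 100, 200] for i in range(21)]
    for finger, tip in enumerate(TIP_IDS):
        if finger == 0:
            lm[tip][1] = 120 if finger in raised else 80   # thumb tip x vs joint x = 100
        else:
            lm[tip][2] = 150 if finger in raised else 250  # tip y vs joint y = 200
    return lm


print(fingers_up(synthetic_hand({1, 2})))  # → [0, 1, 1, 0, 0]
```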

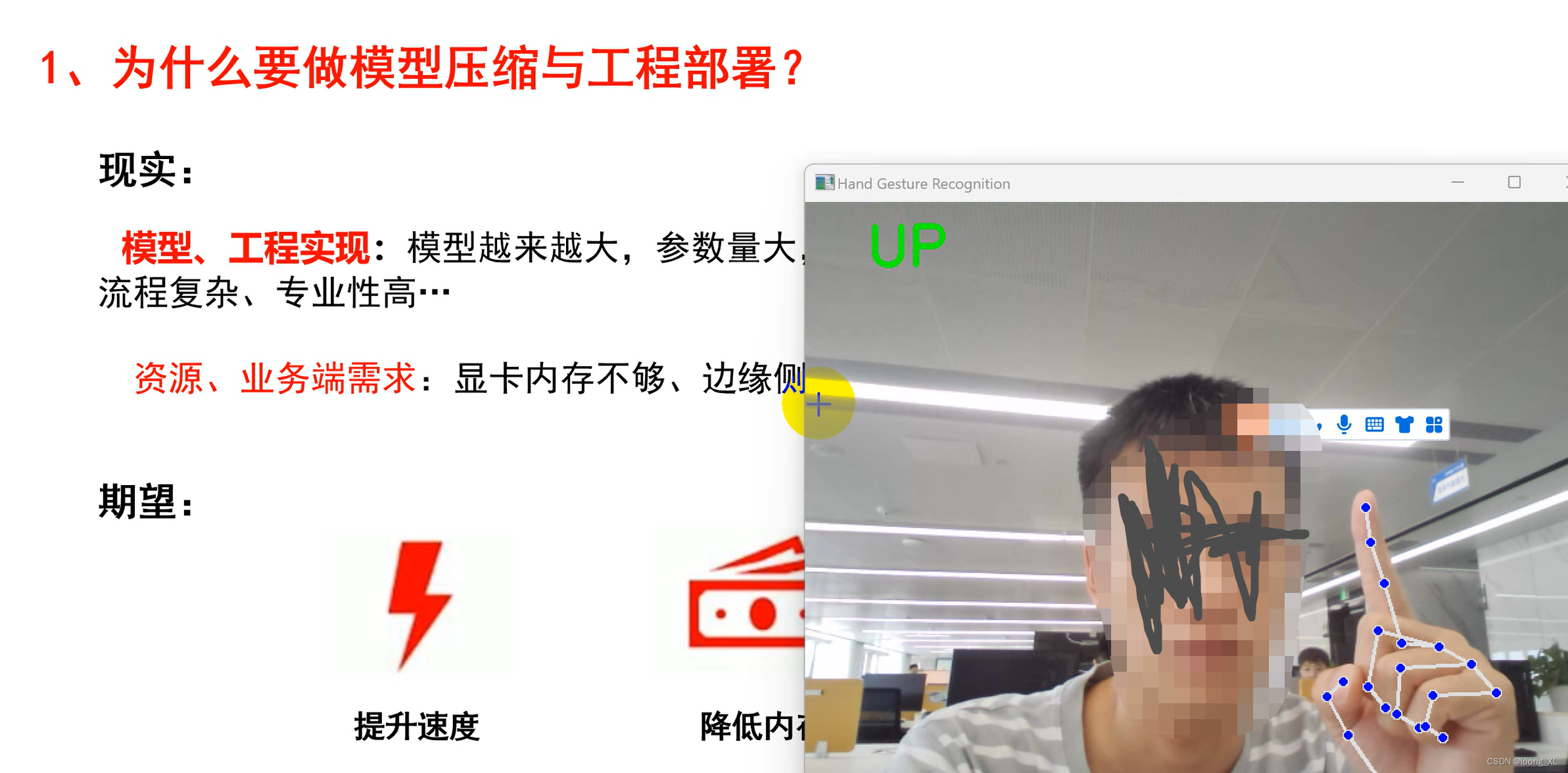

2. Controlling PPT page turns by recognizing up/down/left/right gestures (in practice only up and down are used)

# Import the required libraries

import cv2

import mediapipe as mp

import math

import win32com.client  # used to drive PowerPoint

import time  # used for the gesture hold timer

app = win32com.client.Dispatch("PowerPoint.Application")

presentation = app.Presentations.Open(FileName=r"C:\Users\loong\Documents\WeChat Files\sanhutang\FileStorage\File\2023-07\模型压缩与工程部署知识分享.pptx", ReadOnly=1)  # open the PowerPoint file

presentation.SlideShowSettings.Run()

# Create the hand detection object and the drawing helper

mp_hands = mp.solutions.hands

hands = mp_hands.Hands()

mp_drawing = mp.solutions.drawing_utils

# Direction dictionary: maps each index-finger direction to its feedback label

directions = {

    "up": "UP",

    "down": "DOWN",

    "left": "LEFT",

    "right": "RIGHT"

}

# Require repeated frames to reduce the effect of recognition errors

init = 0

direction_ = None

# A direction is only displayed once it has been recognized for `count` identical frames in a row

def counts(direction, count=10):

    global init, direction_

    if direction_ != direction:

        direction_ = direction

        init = 0

    else:

        init += 1

    if init >= count:

        return direction_

    return None

keys=[]

start_time = time.time()

# Open the camera and capture video frames

cap = cv2.VideoCapture(0)

while cap.isOpened():

    # Read one frame and convert it to RGB

    success, image = cap.read()

    if not success:

        break

    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    image = cv2.flip(image, 1)

    # Pass the image to the hand detector and get the results

    results = hands.process(image)

    # If at least one hand is detected, go through each hand and get the index finger's direction and feedback label

    if results.multi_hand_landmarks:

        for hand_landmarks in results.multi_hand_landmarks:

            # Get the coordinates of the index finger's MCP joint (index_finger_mcp) and PIP joint (index_finger_pip)

            x1 = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_MCP].x

            y1 = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_MCP].y

            x2 = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_PIP].x

            y2 = hand_landmarks.landmark[mp_hands.HandLandmark.INDEX_FINGER_PIP].y

            # Compute the index finger's slope and angle (in degrees)

            dx = x2 - x1

            # A perfectly vertical finger has dx == 0; use an infinite slope instead of dividing by zero

            slope = (y2 - y1) / dx if dx != 0 else math.inf

            angle = math.atan(slope) * 180 / math.pi

            # Determine the finger's direction from the angle and get the feedback label

            if (angle > 45 or angle < -45) and y1 > y2:

                direction = directions["up"]

            elif (angle > 45 or angle < -45) and y1 < y2:

                direction = directions["down"]

            elif (-45 < angle < 45) and x1 < x2:

                direction = directions["right"]

            else:

                direction = directions["left"]

            keys.append(direction)

            # Draw the hand landmarks and connections on the image, and show the feedback label

            mp_drawing.draw_landmarks(image, hand_landmarks, mp_hands.HAND_CONNECTIONS)

            if direction == counts(direction):

                cv2.putText(image, direction, (50, 50), cv2.FONT_HERSHEY_SIMPLEX, 1.5, (0, 255, 0), 3)

    elapsed_time = time.time() - start_time

    # print(keys)

    # Only turn the page after a single direction has been detected continuously for 1.2 seconds

    if len(set(keys))==1 and len(keys)>=2 and elapsed_time > 1.2:

        if list(set(keys))[0]=="UP":

            print("up")

            presentation.SlideShowWindow.View.Previous()  # go back to the previous slide

        elif list(set(keys))[0]=="DOWN":

            print("down")

            presentation.SlideShowWindow.View.Next()  # advance to the next slide

        keys=[]

        start_time = time.time()

    elif len(set(keys))>1:

        # Mixed directions were seen: discard them and restart the timer

        keys=[]

        start_time = time.time()

        # time.sleep(2)

    # Convert the image back to BGR and show it in the window

    image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

    cv2.imshow("Hand Gesture Recognition", image)

    # Press q to leave the loop and close the window

    if cv2.waitKey(5) & 0xFF == ord("q"):

        break

# Release the camera and close all windows

cap.release()

cv2.destroyAllWindows()
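The direction test and the repeated-frame filter in the script can be tried in isolation, without a camera or PowerPoint. The sketch below is a variation, not the script's own method: it swaps the slope-plus-`atan` computation for `math.atan2`, which handles a vertical finger without a special case, and replaces the global counter with a small class. `classify_direction` and `StableGesture` are hypothetical names introduced here; unlike `counts`, this filter reports a label on the `count`-th consecutive frame.

```python
import math


def classify_direction(x1, y1, x2, y2):
    """Classify the vector from (x1, y1) to (x2, y2) in image coordinates.

    Image y grows downward, so dy is negated to make "up" a positive angle.
    """
    dx, dy = x2 - x1, y2 - y1
    angle = math.degrees(math.atan2(-dy, dx))  # range (-180, 180]
    if 45 < angle <= 135:
        return "UP"
    if -135 <= angle < -45:
        return "DOWN"
    if -45 <= angle <= 45:
        return "RIGHT"
    return "LEFT"


class StableGesture:
    """Report a label only once it has been seen on `count` consecutive frames."""

    def __init__(self, count=10):
        self.count = count
        self.last = None
        self.seen = 0

    def update(self, label):
        if label != self.last:
            # A different label restarts the streak at one frame.
            self.last = label
            self.seen = 1
        else:
            self.seen += 1
        return label if self.seen >= self.count else None


# Simulated frames: the joint stays at (0.5, 0.5) while the next joint sits straight above it.
gesture = StableGesture(count=3)
for _ in range(3):
    stable = gesture.update(classify_direction(0.5, 0.5, 0.5, 0.4))
print(stable)  # → UP
```

Keeping the state in an object rather than in module-level globals makes it possible to track several hands independently, one `StableGesture` per hand.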

3. Rock-paper-scissors game

Reference: https://github.com/ntu-rris/google-mediapipe