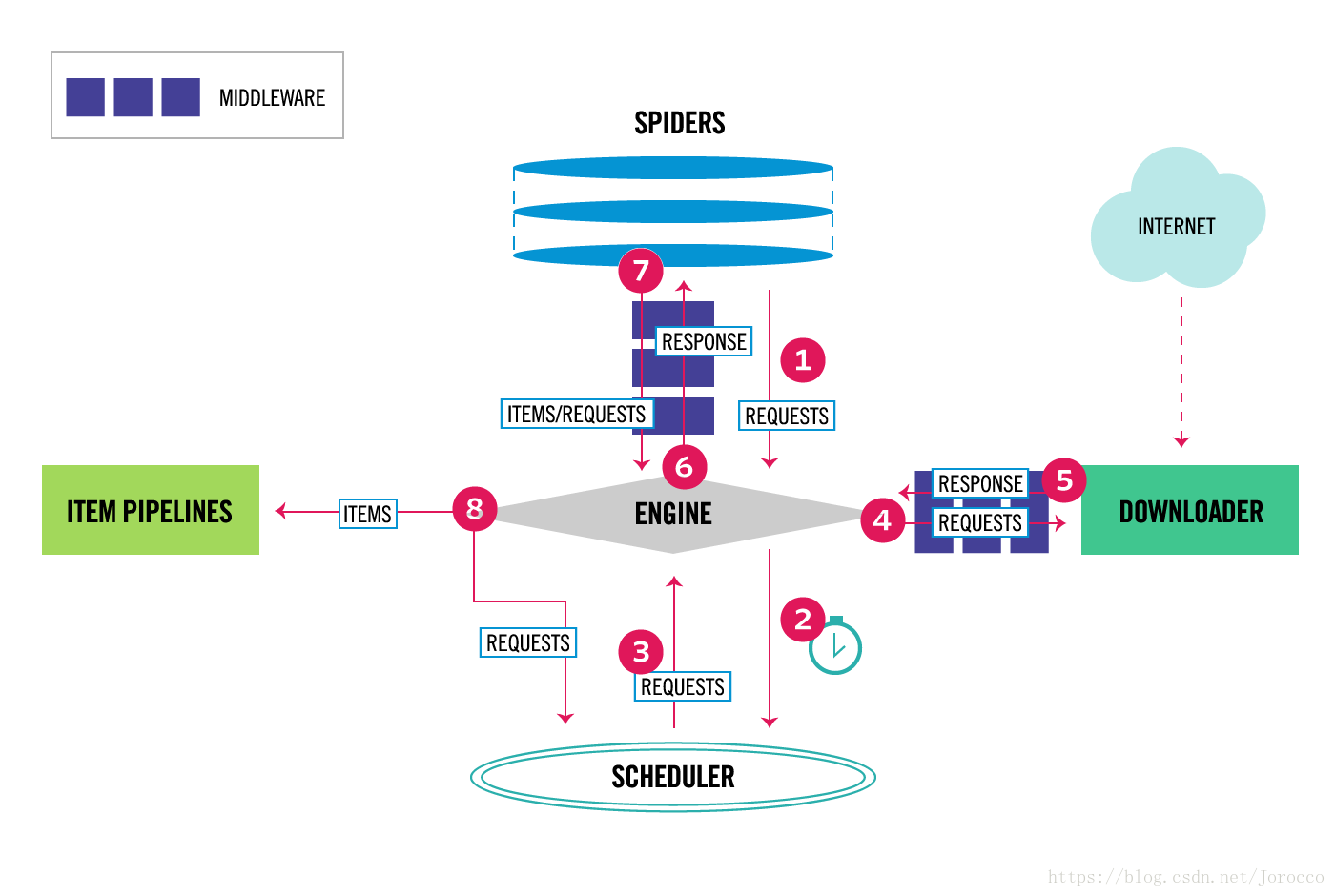

1、什么是scrapy

scrapy是Python开发的一个快速、高层次的屏幕抓取和web抓取的爬虫框架,用于抓取web站点并从页面中提取结构化的数据。

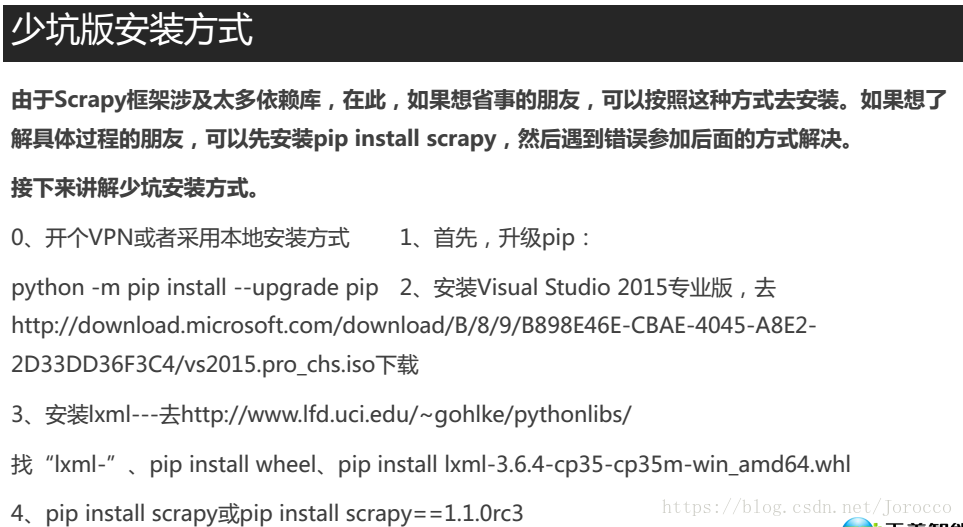

2、Scrapy的安装

也可以通过Anaconda安装可避免以上的坑

3、常用的基本命令

建立一个scrapy工程:

scrapy startproject pro

进入工程目录建立一个scrapy爬虫:

cd pro

以基础模板建立一个demo爬虫,域名为baidu.com

scrapy genspider -t basic demo baidu.com

以自动爬虫模板(也就是将一个网页上的所有链接的内容都依次爬取下来的)建立一个demo1爬虫,域名为baidu.com

scrapy genspider -t crawl demo1 baidu.com

两个模板的使用:一般做精准爬虫则用basic模板,做通用爬虫使用crawl模板

运行爬虫demo:

scrapy crawl demo

其他命令使用scrapy --help进行查看使用说明4、Xpath表达式

#!/usr/bin/python

# -*-coding:utf-8-*-

# __author__ = 'ShenJun'

'''

XPath表达式:

/:从顶端开始,一层一层的找

text():提取标签的内容

/html/head/title/text():从顶端开始搜索html/head/title标签下的内容

@:提取属性的值

//:寻找当前页中所有的标签 //li:寻找所有的li标签

//li[@class="hidden-xs"]/a/@href:提取属性class="hidden-xs"的标签下的a标签下的href属性的值

'''5、爬虫示例

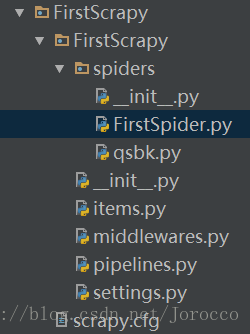

爬取百度的标题

在命令行建立好爬虫工程以及爬虫文件后,我们所需要编写的爬虫逻辑主要在spiders下的爬虫文件FirstSpider.py中,items.py用于设置爬取内容存储容器,pipelines.py则用于编写爬取到内容的后续处理,比如将其放到数据库或者存储到磁盘(这个文件可以不编写,只在爬虫文件中编写,但建议编写,为了更好的符合代码的低耦合),settings.py主要设置爬虫相应的配置,当我们使用了pipelines.py时需要在settings.py中开启,否则不能运行,同时需要关闭ROBOTSTXT_OBEY。其他文件一般不需要动。

init.py

# This package will contain the spiders of your Scrapy project

#

# Please refer to the documentation for information on how to create and manage

# your spiders.FirstSpider.py

# -*- coding: utf-8 -*-

import scrapy

from FirstScrapy.items import FirstscrapyItem

class FirstspiderSpider(scrapy.Spider):

name = "FirstSpider"

allowed_domains = ["baidu.com"]#目标地址的域名

#目标地址

start_urls = ['http://baidu.com/']

#parse是爬虫的主要逻辑,爬取到的内容都存在repsonse中

def parse(self, response):

#实例化类

item=FirstscrapyItem()

#将提取出的内容存储到content容器中

item["content"]=response.xpath("/html/head/title/text()").extract()

yield item

FirstScrapy__init__.py为空

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

'''

用于设置爬取的目标

'''

import scrapy

class FirstscrapyItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

content=scrapy.Field()#创建content容器用于存储爬取的内容

link=scrapy.Field()#创建link容器用于存储爬取的链接,容器创建了不一定要用,但用之前一定得创建

middlewares.py

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

class FirstscrapySpiderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the spider middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_spider_input(response, spider):

# Called for each response that goes through the spider

# middleware and into the spider.

# Should return None or raise an exception.

return None

def process_spider_output(response, result, spider):

# Called with the results returned from the Spider, after

# it has processed the response.

# Must return an iterable of Request, dict or Item objects.

for i in result:

yield i

def process_spider_exception(response, exception, spider):

# Called when a spider or process_spider_input() method

# (from other spider middleware) raises an exception.

# Should return either None or an iterable of Response, dict

# or Item objects.

pass

def process_start_requests(start_requests, spider):

# Called with the start requests of the spider, and works

# similarly to the process_spider_output() method, except

# that it doesn’t have a response associated.

# Must return only requests (not items).

for r in start_requests:

yield r

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

'''

用于设置爬取后信息的后续处理

'''

'''

class FirstscrapyPipeline(object):

def process_item(self, item, spider):

print(item["content"])#处理content容器中的东西

return item

'''

class FirstscrapyPipeline(object):

def process_item(self, item, spider):

for i in range(0,len(item["content"])):

print(item["content"][i])#打印爬取到的内容

print(item["link"][i])#打印对应内容的链接

return item

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for FirstScrapy project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

'''

用于设置配置信息

'''

BOT_NAME = 'FirstScrapy'

SPIDER_MODULES = ['FirstScrapy.spiders']

NEWSPIDER_MODULE = 'FirstScrapy.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'FirstScrapy (+http://www.yourdomain.com)'

# Obey robots.txt rules

#爬虫协议,不遵守则可以让它为false

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'FirstScrapy.middlewares.FirstscrapySpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'FirstScrapy.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

#默认是不开启pipelines,需要在settings中自己设置

'FirstScrapy.pipelines.FirstscrapyPipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

scrapy.cfg

# Automatically created by: scrapy startproject

#

# For more information about the [deploy] section see:

# https://scrapyd.readthedocs.org/en/latest/deploy.html

[settings]

default = FirstScrapy.settings

[deploy]

#url = http://localhost:6800/

project = FirstScrapy

为了方便,后面的默认文件将不进行展示

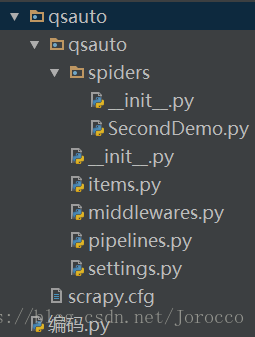

通用爬虫,即以crawl为模板的爬虫

爬取糗事百科的所有段子

SecondDemo

SecondDemo.py

# -*- coding: utf-8 -*-

'''

自动爬虫

使用自动爬虫模板建立自动爬虫:

crawl:自动爬虫模板

basic:基础爬虫模板

SecondDemo:爬虫文件

qiushibaike.com:需要爬取网页的域名

scrapy genspider -t crawl SecondDemo qiushibaike.com

'''

import scrapy

from scrapy.linkextractors import LinkExtractor

from scrapy.spiders import CrawlSpider, Rule

from qsauto.items import QsautoItem

from scrapy.http import Request

class SeconddemoSpider(CrawlSpider):

name = 'SecondDemo'

allowed_domains = ['qiushibaike.com']

# start_urls = ['http://qiushibaike.com/']

#模拟成浏览器的方式访问第一个url,但后续的还是不会模拟成浏览器,所以必须在setting中进行相应的设置

def start_requests(self):

ua={"User-Agent":'Mozilla/5.0 (Windows NT 6.1; W…) Gecko/20100101 Firefox/60.0'}

yield Request('https://qiushibaike.com/',headers=ua)

rules = (

#设置链接提取的规则,follow表示是否从爬取的页面中继续提取链接进行爬取

Rule(LinkExtractor(allow=r'article'), callback='parse_item', follow=True),

)

def parse_item(self, response):

i = []

#i['domain_id'] = response.xpath('//input[@id="sid"]/@value').extract()

#i['name'] = response.xpath('//div[@id="name"]').extract()

#i['description'] = response.xpath('//div[@id="description"]').extract()

i["content"]=response.xpath("//div[@class='content']/text()").extract()

i["link"]=response.xpath("//link[@rel='canonical']/@href").extract()

print(i["content"])

print(i["link"])

print("")

return i

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class QsautoItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

content=scrapy.Field()#创建content容器用于存储爬取的内容

link=scrapy.Field()#创建link容器用于存储爬取的链接,容器创建了不一定要用,但用之前一定得创建

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

class QsautoPipeline(object):

def process_item(self, item, spider):

return item

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for qsauto project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'qsauto'

SPIDER_MODULES = ['qsauto.spiders']

NEWSPIDER_MODULE = 'qsauto.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#设置的报文头

USER_AGENT ='Mozilla/5.0 (Windows NT 6.1; W…) Gecko/20100101 Firefox/60.0'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'qsauto.middlewares.QsautoSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'qsauto.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'qsauto.pipelines.QsautoPipeline': 300,

#}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

ThirdDemo.py

# -*- coding: utf-8 -*-

'''

爬取天善智能网站所有的课程、课程人数以及课程链接

'''

import scrapy

from project3.items import Project3Item

from scrapy.http import Request

class ThirddemoSpider(scrapy.Spider):

name = "ThirdDemo"

allowed_domains = ["hellobi.com"]

start_urls = ['https://edu.hellobi.com/course/10']

def parse(self, response):

items=Project3Item()

items["title"]=response.xpath('//ol[@class="breadcrumb"]/li[@class="active"]/text()').extract()

items["link"]=response.xpath('//ul[@class="nav nav-tabs"]/li[@class="active"]/a/@href').extract()

items["stu"]=response.xpath('//span[@class="course-view"]/text()').extract()

yield items

#爬取其他课程

for i in range(1,280):

#获取其他课程的链接

url="https://edu.hellobi.com/course/"+str(i)

#通过Request继续爬取其他课程的内容,callback是回调函数

yield Request(url,callback=self.parse)items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class Project3Item(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

title=scrapy.Field()

stu=scrapy.Field()

link=scrapy.Field()pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

class Project3Pipeline(object):

#初始化方法

def __init__(self):

self.file=open("J:\\Program\\Python\\Python爬虫\\scrapy\\FirstScrapy\\project3\\content1\\1.txt","a")

def process_item(self, item, spider):

print(item["title"])

print(item["link"])

print(item["stu"])

print("---------------")

#将内容写入文件中

self.file.write(item["title"][0]+"\n"+item["link"][0]+"\n"+item["stu"][0]+"\n"+"-------------"+"\n")

return item

#最后执行的方法

def close_spider(self):

self.file.close()settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for project3 project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'project3'

SPIDER_MODULES = ['project3.spiders']

NEWSPIDER_MODULE = 'project3.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'project3 (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'project3.middlewares.Project3SpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'project3.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'project3.pipelines.Project3Pipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

db.py

# -*- coding: utf-8 -*-

'''

自动模拟登陆豆瓣并解决验证码问题

'''

import scrapy

from scrapy.http import Request,FormRequest

import urllib.request

class DbSpider(scrapy.Spider):

name = "db"

allowed_domains = ["douban.com"]

# start_urls = ['http://douban.com/']

header={"User-Agent":'Mozilla/5.0 (Windows NT 6.1; W…) Gecko/20100101 Firefox/60.0'}

def start_requests(self):

#伪装成浏览器,回调函数,并设置cookie,保持登陆状态

return [Request("https://www.douban.com/accounts/login",callback=self.parse,meta={"cookiejar":1})]

def parse(self, response):

url="https://www.douban.com/accounts/login"

#提取出验证码

captcha=response.xpath("//img[@id='captcha_image']/@src").extract()

if len(captcha)>0:

print=("此时有验证码")

#将验证码提取到本地,手动输入,即半自动化方式

localpath="J:\\Program\\Python\\Python爬虫\\scrapy\\douban\\验证码\\captcha.png"

#将验证码图片下载下来

urllib.request.urlretrieve(captcha[0],filename=localpath)

print("请查看本地验证码图片并输入验证码")

captcha_value=input()

#需要发送给表单的数据,即自动登陆所需要账号密码等

data={

#账号字段

"form_email":"[email protected]",

#密码字段

"form_password":"sj528497934..",

#验证码字段

"captcha-solution":captcha_value,

#个人主页字段

"redir":"https://www.douban.com/people/174986115/",

}

else:

print=("此时没有验证码")

#需要发送给表单的数据,即自动登陆所需要账号密码等

data={

"form_email":"[email protected]",

"form_password":"sj528497934..",

"redir":"https://www.douban.com/people/174986115/",

}

print("登录中。。。。。")

return [FormRequest.from_response(response,

#设置cookie,当我们登陆成功后将会保存其cookie

meta={"cookiejar":response.meta["cookiejar"]},

#设置头,伪装成浏览器

headers=self.header,

#设置提交的表单信息

formdata=data,

#设置回调函数

callback=self.next,)]

def next(self,response):

print("登陆完成并爬取了个人中心的数据")

title=response.xpath("/html/head/title/text()").extract()

note=response.xpath("//div[@class='note']/text()").extract()

print(title[0])

print(note[0])

items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class DoubanItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

pass

pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

class DoubanPipeline(object):

def process_item(self, item, spider):

return item

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for douban project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'douban'

SPIDER_MODULES = ['douban.spiders']

NEWSPIDER_MODULE = 'douban.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'douban (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'douban.middlewares.DoubanSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'douban.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

#ITEM_PIPELINES = {

# 'douban.pipelines.DoubanPipeline': 300,

#}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

dd.py

# -*- coding: utf-8 -*-

'''

爬取当当网书籍信息并写入到mysql数据库

'''

import scrapy

from dangdang.items import DangdangItem

from scrapy.http import Request

class DdSpider(scrapy.Spider):

name = "dd"

allowed_domains = ["dangdang.com"]

start_urls = ['http://dangdang.com/']

def parse(self, response):

items=DangdangItem()

items["title"]=response.xpath("//a[@class='pic']/@title").extract()

items["link"]=response.xpath("//a[@class='pic']/@href").extract()

items["comment"]=response.xpath('//a[@class="search_comment_num"]/text()').extract()

yield items#交给pipelines中的item处理

for i in range(2,3):

url="http://search.dangdang.com/?key=python&act=input&page_index="+str(i)+"#J_tab"

yield Request(url,callback=self.parse)items.py

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class DangdangItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

title=scrapy.Field()

link=scrapy.Field()

comment=scrapy.Field()pipelines.py

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import pymysql

import chardet

class DangdangPipeline(object):

def process_item(self, item, spider):

#连接数据库

# conn=pymysql.connect(host="127.0.0.1",port=3306,user="root",passwd="123456",db="dd",charset="utf-8")

#不能写成utf-8,不认识,记得加上端口号

conn = pymysql.connect( host='127.0.0.1', port=3306, user='root', passwd='123456', db='dd', charset="utf8")

cur=conn.cursor()

for i in range(0,len(item["title"])):

title=(item["title"][i])

link=(item["link"][i])

comment=(item["comment"][i])

cur.execute('insert into book(title,link,comment) values(%s,%s,%s)',(""+title+"",""+link+"",""+comment+""))

conn.commit()

cur.close()

conn.close()

return item

settings.py

# -*- coding: utf-8 -*-

# Scrapy settings for dangdang project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# http://doc.scrapy.org/en/latest/topics/settings.html

# http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

# http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'dangdang'

SPIDER_MODULES = ['dangdang.spiders']

NEWSPIDER_MODULE = 'dangdang.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent

#USER_AGENT = 'dangdang (+http://www.yourdomain.com)'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See http://scrapy.readthedocs.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

#DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

#}

# Enable or disable spider middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# 'dangdang.middlewares.DangdangSpiderMiddleware': 543,

#}

# Enable or disable downloader middlewares

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html

#DOWNLOADER_MIDDLEWARES = {

# 'dangdang.middlewares.MyCustomDownloaderMiddleware': 543,

#}

# Enable or disable extensions

# See http://scrapy.readthedocs.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# 'scrapy.extensions.telnet.TelnetConsole': None,

#}

# Configure item pipelines

# See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

'dangdang.pipelines.DangdangPipeline': 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See http://doc.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See http://scrapy.readthedocs.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = 'httpcache'

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

PhantomJs的使用

#!/usr/bin/python

# -*-coding:utf-8-*-

# __author__ = 'ShenJun'

'''

PhantomJs主要用于抓取隐藏

'''

from selenium import webdriver

import time

import re

from lxml import etree

bs=webdriver.PhantomJS()#打开浏览器

time.sleep(3)

url="http://www.baidu.com"

bs.get(url)#访问url

#将访问到的网页保存为一个图片

# bs.get_screenshot_as_file("J:\\Program\\Python\\爬虫笔记\\图片\\phantomjs.png")

data=bs.page_source#获取访问到页面的源代码

'''

#通过正则表达式匹配title

pattitle="<title>(.*?)</title>"

title=re.compile(pattitle).findall(data)

print(title)

'''

'''

如何想在urllib或者phantomjs中使用xpath表达式

需要将data即源码转为tree,再进行xpath提取

'''

edata=etree.HTML(data)

title2=edata.xpath("/html/head/title/text()")

print(title2)

#将源代码保存到test

fh=open("J:\\Program\\Python\\爬虫笔记\\test.html","wb")

fh.write(data.encode("utf-8"))

fh.close()

bs.quit()#关闭浏览器

Bloom过滤器去重

#!/usr/bin/python

# -*-coding:utf-8-*-

# __author__ = 'ShenJun'

import bloom

'''

主要用于去重url

'''

url="www.baidu.com"

# 申请一个内存等于1000000k的容器,错误率等于0.001的bloomfilter对象

bloo=bloom.BloomFilter(0.001, 1000000)

element = 'weiwei'

bloo.insert_element(element)

print(bloo.is_element_exist('weiwei'))