I. Create a new project named microservice-simple-provider-user-trace-elk

II. Add the following dependencies to the project

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-jpa</artifactId>
    </dependency>
    <dependency>
        <groupId>com.h2database</groupId>
        <artifactId>h2</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-sleuth</artifactId>
    </dependency>
    <dependency>
        <groupId>net.logstash.logback</groupId>
        <artifactId>logstash-logback-encoder</artifactId>
        <version>4.6</version>
    </dependency>
</dependencies>

III. Create the logback-spring.xml file

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml" />

    <springProperty scope="context" name="springAppName" source="spring.application.name" />

    <!-- Example for logging into the build folder of your project -->
    <property name="LOG_FILE" value="${BUILD_FOLDER:-build}/${springAppName}" />

    <property name="CONSOLE_LOG_PATTERN"
              value="%clr(%d{yyyy-MM-dd HH:mm:ss.SSS}){faint} %clr(${LOG_LEVEL_PATTERN:-%5p}) %clr([${springAppName:-},%X{X-B3-TraceId:-},%X{X-B3-SpanId:-},%X{X-B3-ParentSpanId:-},%X{X-Span-Export:-}]){yellow} %clr(${PID:- }){magenta} %clr(---){faint} %clr([%15.15t]){faint} %clr(%-40.40logger{39}){cyan} %clr(:){faint} %m%n${LOG_EXCEPTION_CONVERSION_WORD:-%wEx}" />

    <!-- Appender to log to console -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <!-- Minimum logging level to be presented in the console logs -->
            <level>DEBUG</level>
        </filter>
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>

    <!-- Appender to log to file -->
    <appender name="flatfile" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_FILE}</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE}.%d{yyyy-MM-dd}.gz</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${CONSOLE_LOG_PATTERN}</pattern>
            <charset>utf8</charset>
        </encoder>
    </appender>

    <!-- Appender to log to file in a JSON format -->
    <appender name="logstash" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <file>${LOG_FILE}.json</file>
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE}.json.%d{yyyy-MM-dd}.gz</fileNamePattern>
            <maxHistory>7</maxHistory>
        </rollingPolicy>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <pattern>
                    <pattern>
                        {
                        "severity": "%level",
                        "service": "${springAppName:-}",
                        "trace": "%X{X-B3-TraceId:-}",
                        "span": "%X{X-B3-SpanId:-}",
                        "parent": "%X{X-B3-ParentSpanId:-}",
                        "exportable": "%X{X-Span-Export:-}",
                        "pid": "${PID:-}",
                        "thread": "%thread",
                        "class": "%logger{40}",
                        "rest": "%message"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>

    <root level="INFO">
        <appender-ref ref="console" />
        <appender-ref ref="logstash" />
        <!--<appender-ref ref="flatfile"/> -->
    </root>
</configuration>
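To make the field layout of the logstash appender concrete, the sketch below assembles, in plain Java and without any logging library, one line shaped like what the JSON pattern in logback-spring.xml would yield. The class name JsonLogLineSketch and every sample value (timestamp, IDs, PID, thread, message) are hypothetical. The real encoder does its own escaping and timestamp formatting, so this is only an illustration of which keys Logstash should expect, not a reimplementation of the encoder.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

final class JsonLogLineSketch {

    // Minimal quoting: escapes only backslashes and double quotes.
    // A real JSON encoder also handles control characters.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Renders the fields as a single-line JSON object, keeping insertion order.
    static String toJsonLine(Map<String, String> fields) {
        return fields.entrySet().stream()
                .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
                .collect(Collectors.joining(",", "{", "}"));
    }

    public static void main(String[] args) {
        Map<String, String> event = new LinkedHashMap<>();
        // The <timestamp> provider writes its value under "@timestamp" by default.
        event.put("@timestamp", "2018-03-10T12:00:00.123+00:00"); // hypothetical value
        event.put("severity", "INFO");
        event.put("service", "microservice-provider-user");
        event.put("trace", "5e8eeb6f3f0f3a1b");   // hypothetical trace ID
        event.put("span", "5e8eeb6f3f0f3a1b");    // hypothetical span ID
        event.put("parent", "");                  // empty for a root span
        event.put("exportable", "false");
        event.put("pid", "1234");                 // hypothetical PID
        event.put("thread", "http-nio-8000-exec-1");
        event.put("class", "c.e.UserController"); // hypothetical logger name
        event.put("rest", "Looking up user 1");   // hypothetical message
        System.out.println(toJsonLine(event));
    }
}
```

Because each event is already one JSON object per line, the Logstash file input can decode it with the json codec and expose severity, service, trace and the other keys as separate fields.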

IV. Write application.yml

server:
  port: 8000
spring:
  jpa:
    generate-ddl: false
    show-sql: true
    hibernate:
      ddl-auto: none
  datasource:                     # data source settings
    platform: h2                  # data source type
    schema: classpath:schema.sql  # table-creation script for the H2 database
    data: classpath:data.sql      # data script for the H2 database
logging:
  level:
    root: INFO
    org.springframework.cloud.sleuth: DEBUG
    # org.springframework.web.servlet.DispatcherServlet: DEBUG

V. Write bootstrap.yml

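spring:
  application:
    name: microservice-provider-user
# Note: in this example, spring.application.name must be set in a bootstrap.* file, not in an application.* file, because we use a custom logback-spring.xml.
# If it is placed in an application.* file, the custom logback file cannot read the property correctly.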

VI. Write the Logstash configuration file, named logstash.conf, with the following content

input {
  file {
    codec => json
    path => "F:/springcloud/temp/microservice-simple-provider-user-trace-elk/build/*.json"
  }
}
filter {
  # Matches the plain-text layout of CONSOLE_LOG_PATTERN: [service,trace,span,parent,exportable]
  grok {
    match => { "message" => "%{TIMESTAMP_ISO8601:timestamp}\s+%{LOGLEVEL:severity}\s+\[%{DATA:service},%{DATA:trace},%{DATA:span},%{DATA:parent},%{DATA:exportable}\]\s+%{DATA:pid}---\s+\[%{DATA:thread}\]\s+%{DATA:class}\s+:\s+%{GREEDYDATA:rest}" }
  }
}
output {
  elasticsearch {
    hosts => "localhost:9200"
  }
}
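To see what the grok filter is meant to extract, here is a minimal Java sketch that applies a comparable regular expression to one plain-text log line. It is modeled on the five bracketed fields of CONSOLE_LOG_PATTERN in logback-spring.xml (service, trace, span, parent, exportable). The class name SleuthLogLineParser and the sample line are hypothetical, and the timestamp and level sub-patterns are simplified stand-ins for grok's TIMESTAMP_ISO8601 and LOGLEVEL, so this is an approximation rather than an exact port of the grok expression.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class SleuthLogLineParser {

    private static final String[] FIELDS = {
        "timestamp", "severity", "service", "trace", "span",
        "parent", "exportable", "pid", "thread", "class", "rest"
    };

    // ".*?" plays the role of grok's DATA, ".*" the role of GREEDYDATA.
    private static final Pattern LINE = Pattern.compile(
          "(?<timestamp>\\d{4}-\\d{2}-\\d{2}[T ]\\d{2}:\\d{2}:\\d{2}[.,]\\d{3})\\s+"
        + "(?<severity>[A-Za-z]+)\\s+"
        + "\\[(?<service>.*?),(?<trace>.*?),(?<span>.*?),(?<parent>.*?),(?<exportable>.*?)\\]\\s+"
        + "(?<pid>.*?)\\s*---\\s+"
        + "\\[(?<thread>.*?)\\]\\s+"
        + "(?<class>.*?)\\s+:\\s+"
        + "(?<rest>.*)");

    // Returns the named fields, or an empty Optional if the line does not match.
    static Optional<Map<String, String>> parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            return Optional.empty();
        }
        Map<String, String> fields = new LinkedHashMap<>();
        for (String name : FIELDS) {
            fields.put(name, m.group(name));
        }
        return Optional.of(fields);
    }

    public static void main(String[] args) {
        // Hypothetical line in the console layout, colour codes omitted.
        String line = "2018-03-10 12:00:00.123  INFO "
            + "[microservice-provider-user,5e8eeb6f3f0f3a1b,5e8eeb6f3f0f3a1b,,false] "
            + "1234 --- [nio-8000-exec-1] c.e.UserController"
            + "                       : Looking up user 1";
        parse(line).ifPresent(fields -> fields.forEach((k, v) -> System.out.println(k + " = " + v)));
    }
}
```

The lazy groups stop at the first comma or bracket, which is why an empty parent span ID (a root span) still parses cleanly.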

VII. Test

1 Start ELK

1.1 Starting Elasticsearch: start it as a service.

1.2 Starting Logstash

D:\Logstash\logstash-6.2.2\bin>logstash -f D:/Logstash/logstash-6.2.2/conf/logstash.conf

1.3 Starting Kibana

D:\kinana\kibana-6.2.2\bin>kibana.bat

2 Start the project microservice-simple-provider-user-trace-elk

3 Request http://localhost:8000/1 several times to generate some log entries

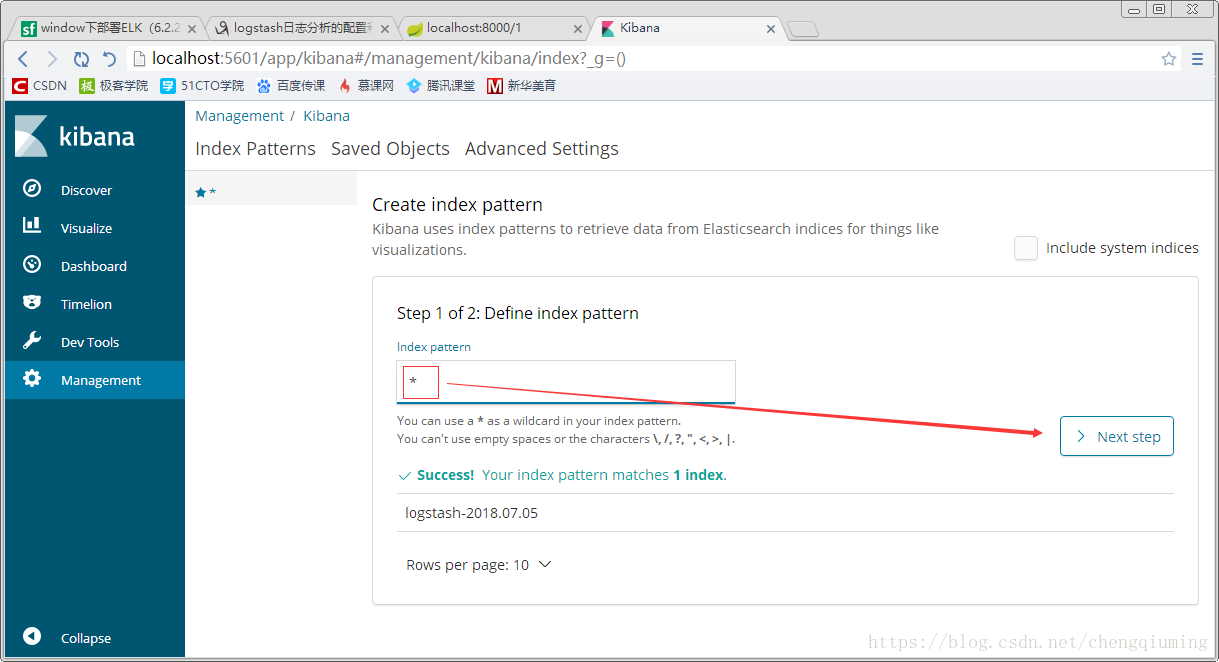

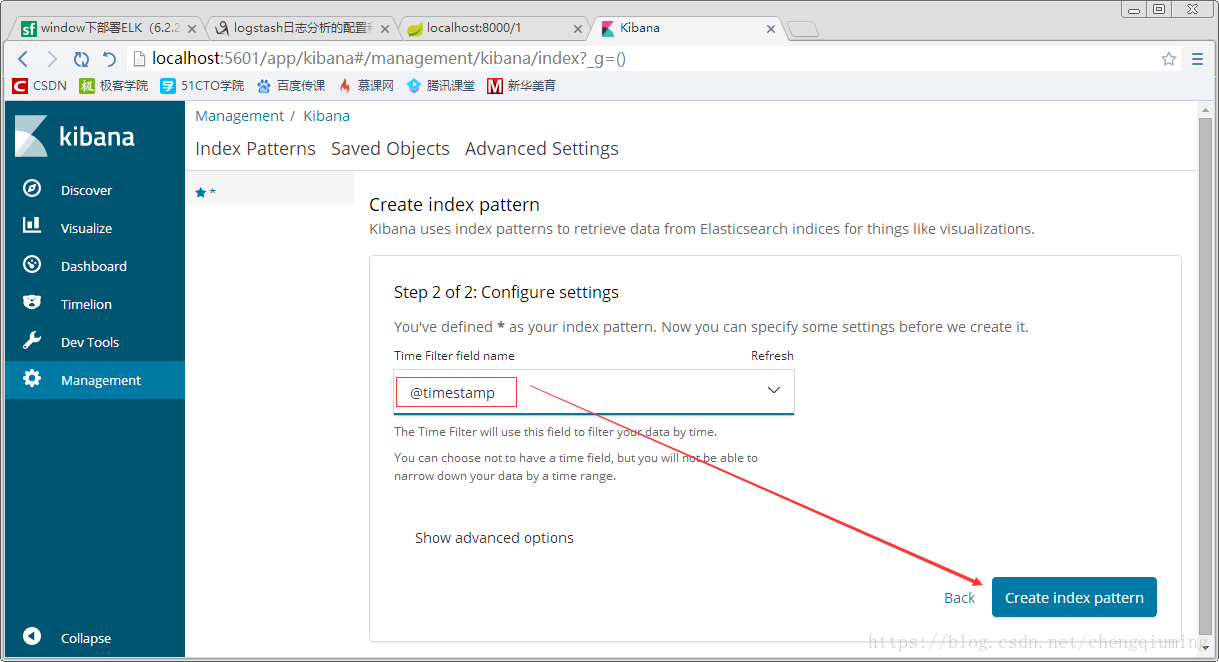

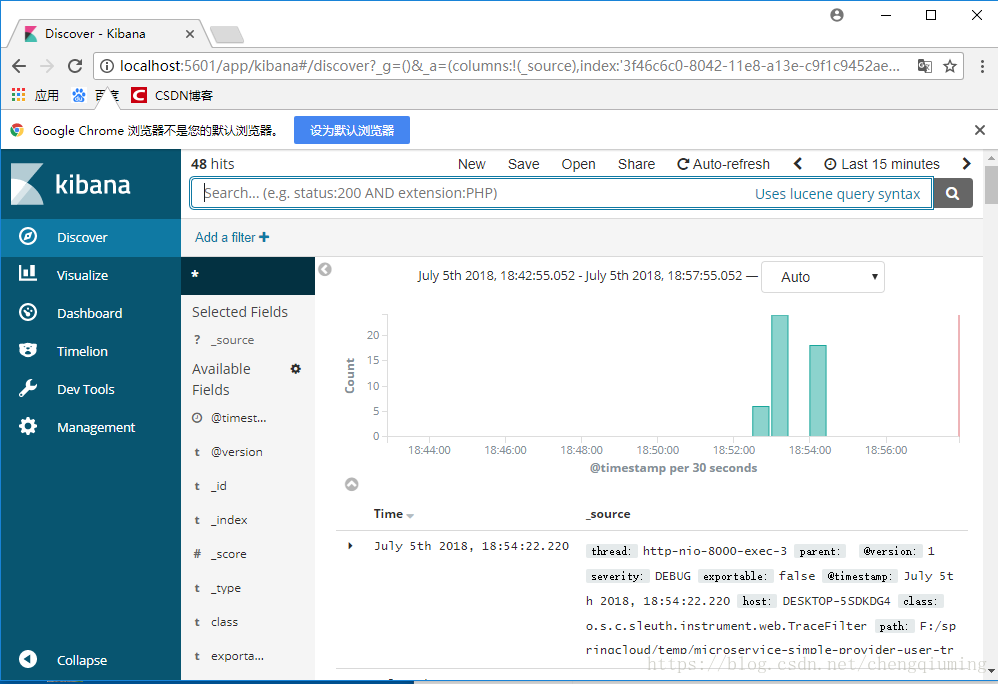
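Step 3 can also be scripted instead of refreshing a browser. The sketch below uses the JDK 11+ HttpClient to request a URL a fixed number of times and collect the HTTP status codes. The class name RequestRepeater, the timeouts, and the convention of recording -1 for a failed request are assumptions made for illustration only.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

final class RequestRepeater {

    // Sends `times` GET requests to `uri` and returns one status code per attempt.
    // A failed attempt (connection refused, timeout, interruption) is recorded as -1.
    static List<Integer> requestRepeatedly(URI uri, int times) {
        HttpClient client = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(5))
                .GET()
                .build();
        List<Integer> statusCodes = new ArrayList<>();
        for (int i = 0; i < times; i++) {
            try {
                HttpResponse<Void> response =
                        client.send(request, HttpResponse.BodyHandlers.discarding());
                statusCodes.add(response.statusCode());
            } catch (IOException e) {
                statusCodes.add(-1);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt flag
                statusCodes.add(-1);
            }
        }
        return statusCodes;
    }

    public static void main(String[] args) {
        // Each successful request should make the service write new log entries.
        System.out.println(requestRepeatedly(URI.create("http://localhost:8000/1"), 5));
    }
}
```

With the service running, each successful call should append new entries to the JSON log file that Logstash is watching.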

4 Open http://localhost:5601 to see the Kibana home page, then click Discover. The log data now appears there, as shown in the figure below.

The test succeeds!

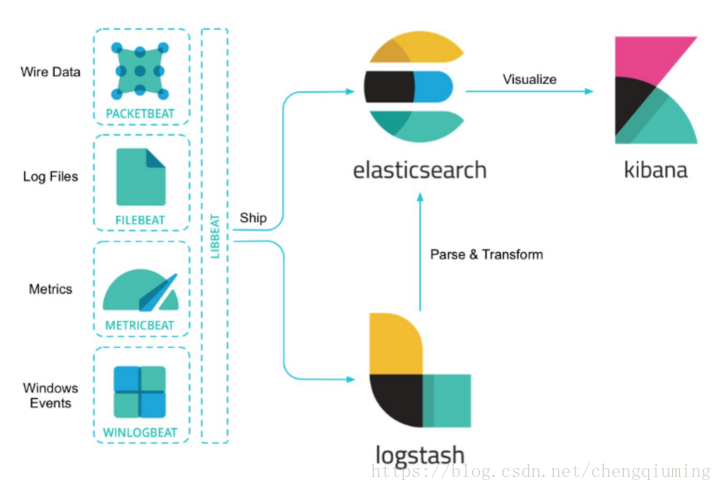

VIII. ELK architecture diagram