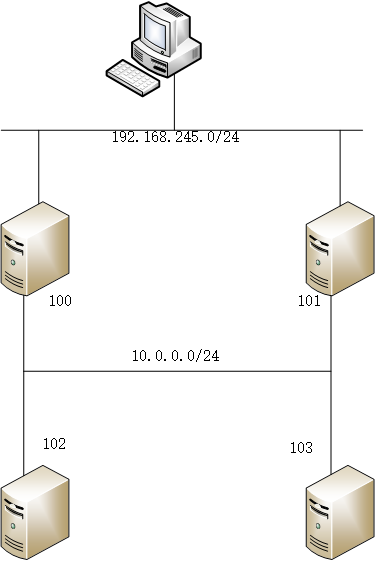

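As shown in the figure above, hosts are referred to by the last octet of their addresses: 102 and 103 are the internal nginx servers, 100 and 101 are the edge LBs, and the client is 1. The goal of this lab is to create a VIP on the LBs so that a client accessing the VIP is dynamically load-balanced across the two internal nginx servers. Traffic in both directions has to pass through the edge LB.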

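Let's analyze this first. This is NAT mode, so traffic in both directions passes through the edge LB. Recall how NAT mode works: when the LB receives a request from the client, it picks an RS according to its scheduling algorithm, rewrites the destination IP to that RS's internal address, and sends the packet out of its internal interface. The RS receives a packet whose destination MAC and IP are its own and whose source address is the client, so it processes the request and replies. In the reply, the source address is the RS and the destination is the client. As the reply passes through the LB it is translated again: the source becomes the VIP, the destination stays the client address, and the client receives the response.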
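The address rewriting described above can be sketched as a small simulation. This is only an illustrative model, not how IPVS is implemented: the `Packet` and `NatLB` names are made up for this example, the client address `192.168.245.1` is an assumption based on "the client is 1", and connections are tracked per client IP only (real IPVS tracks ports and protocol as well).

```python
from dataclasses import dataclass, replace
from itertools import cycle


@dataclass(frozen=True)
class Packet:
    src: str  # source IP address
    dst: str  # destination IP address


class NatLB:
    """Toy model of a NAT-mode load balancer (hypothetical helper)."""

    def __init__(self, vip, real_servers):
        self.vip = vip
        self._rr = cycle(real_servers)  # round-robin scheduler
        self._conn = {}                 # client IP -> chosen RS

    def inbound(self, pkt):
        """Client -> VIP: rewrite the destination to the chosen RS (DNAT)."""
        if pkt.dst != self.vip:
            raise ValueError("packet is not addressed to the VIP")
        if pkt.src not in self._conn:
            self._conn[pkt.src] = next(self._rr)
        return replace(pkt, dst=self._conn[pkt.src])

    def outbound(self, pkt):
        """RS -> client: rewrite the source back to the VIP."""
        return replace(pkt, src=self.vip)


lb = NatLB("192.168.245.200", ["10.0.0.102", "10.0.0.103"])
request = Packet(src="192.168.245.1", dst="192.168.245.200")
forwarded = lb.inbound(request)                         # what the RS sees
reply = Packet(src=forwarded.dst, dst=forwarded.src)    # what the RS sends back
translated = lb.outbound(reply)                         # what the client sees
print(forwarded, translated)
```

The point of the model is that the RS always sees the real client address, and the client only ever sees the VIP, which is why the reply has to travel back through the LB.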

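This matches the transparent mode of hardware LBs; transparent mode is NAT mode. The reverse-proxy mode of hardware LBs is different: after receiving the client's request, the LB rewrites the whole packet so that the source is the LB's internal address and the destination is one of the RS addresses, and it translates again on the way back. It is sometimes compared to LVS tunnel mode, but the two are not the same: TUN mode encapsulates the original packet in IP-in-IP and lets the RS reply to the client directly, whereas reverse-proxy mode is closer to a full NAT (source and destination both rewritten). In reverse-proxy mode the server normally cannot see the real client address; it only sees its own LB connecting to it. The LB can be configured to add a field (for HTTP, typically a header such as X-Forwarded-For) that carries the client address to the RS.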

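The triangle mode of hardware LBs corresponds to LVS DR mode. It requires configuring the lo interface on the servers (the VIP is bound to lo on each RS).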

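Back to the lab environment. NAT mode is used here, which means traffic must pass through the LB. The simplest way to guarantee that is to point the default gateway of the two nginx servers at the LB, in the form of a VIP. Toward the outside, the LB also exposes a VIP as the entry point for clients. In other words, there are two VRRP instances on the LB, and their states must stay consistent. Only then is the setup highly available (HA).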

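Now for the configuration. The main work is configuring keepalived on the two LBs. keepalived is installed by default here; its configuration files live in /etc/keepalived/ and the init script is /etc/init.d/keepalived. 100 is defined as the master and 101 as the backup. Below is the keepalived configuration file of 100.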

    ! Configuration File for keepalived
    ! The global defines, including notification server and target, router-id and others
    global_defs {
        notification_email {
            [email protected]
            0633225522@domain.com
        }
        notification_email_from [email protected]
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id LB_100
    }
    ! The vrrp sync group: all vrrp instances in the sync group keep the same status,
    ! no matter the priority. For example, if VI_1 is master and VI_2 is backup, the sync group status is backup
    vrrp_sync_group VG1 {
        group {
            VI_To_client
            VI_To_server
        }
    }
    ! The VRRP instance for clients to connect; clients connect to the VIPs
    vrrp_instance VI_To_client {
        state MASTER
        interface eth0
        virtual_router_id 192
        priority 150
        nopreempt
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.245.200
        }
    }
    ! The VRRP instance for real servers; it is the real servers' default gateway
    vrrp_instance VI_To_server {
        state MASTER
        interface eth1
        virtual_router_id 10
        priority 150
        nopreempt
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            10.0.0.254
        }
    }
    ! The LB configuration, including VS and RS, scheduling arguments, other options, and health checks
    virtual_server 192.168.245.200 80 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        persistence_timeout 10
        protocol TCP
        real_server 10.0.0.102 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 3
            }
        }
        real_server 10.0.0.103 80 {
            weight 1
            TCP_CHECK {
                connect_timeout 3
            }
        }
    }

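The configuration file on 100 mainly defines one vrrp_sync_group containing two VRRP instances, one facing the clients and one facing the RSs. The two instances have different virtual_router_id values (and, naturally, different interfaces and VIPs); their priority, advert_int and authentication settings are identical. One caveat: keepalived's documentation states that nopreempt requires the initial state to be BACKUP, so combined with state MASTER as written here it may not take effect. The LB part of the configuration defines the VS and the RSs and enables a health check for each RS. The check is simple: a TCP connect. When the connection succeeds, the RS is dynamically added to ipvs; when it fails, the RS is removed from ipvs. This behaviour can be observed in the logs.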
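To make the TCP connect check concrete, here is a minimal sketch of the same idea in Python. It is not keepalived's implementation: `tcp_check` and `refresh_pool` are hypothetical names for this example, and the 3-second default simply mirrors `connect_timeout 3` from the configuration.

```python
import socket


def tcp_check(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, ...
        return False


def refresh_pool(pool, servers, check=tcp_check):
    """Return a new pool: healthy servers are added, failed ones removed."""
    new_pool = set(pool)
    for host, port in servers:
        if check(host, port):
            new_pool.add((host, port))
        else:
            new_pool.discard((host, port))
    return new_pool
```

A caller would run `refresh_pool` periodically (keepalived uses `delay_loop` for that interval) with `servers = [("10.0.0.102", 80), ("10.0.0.103", 80)]`. The `check` parameter is injectable so the pool logic can be exercised without touching the network.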

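The backup's configuration file is almost the same as the master's; apart from the fields that necessarily differ, everything is identical. Now let's look at the keepalived log output.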

    Jul 15 13:57:01 CentOS_101 Keepalived[26012]: Starting Keepalived v1.2.13 (03/19,2015)
    Jul 15 13:57:01 CentOS_101 Keepalived[26013]: Starting Healthcheck child process, pid=26015
    Jul 15 13:57:01 CentOS_101 Keepalived[26013]: Starting VRRP child process, pid=26016
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Netlink reflector reports IP 192.168.245.101 added
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Netlink reflector reports IP 192.168.245.101 added
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Netlink reflector reports IP 10.0.0.101 added
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Netlink reflector reports IP fe80::20c:29ff:fe88:718 added
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Netlink reflector reports IP fe80::20c:29ff:fe88:722 added
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Registering Kernel netlink reflector
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Registering Kernel netlink command channel
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Opening file '/etc/keepalived/keepalived.conf'.
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Configuration is using : 15025 Bytes
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Using LinkWatch kernel netlink reflector...
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Netlink reflector reports IP 10.0.0.101 added
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Netlink reflector reports IP fe80::20c:29ff:fe88:718 added
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Netlink reflector reports IP fe80::20c:29ff:fe88:722 added
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Registering Kernel netlink reflector
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Registering Kernel netlink command channel
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Registering gratuitous ARP shared channel
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Opening file '/etc/keepalived/keepalived.conf'.
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Configuration is using : 69565 Bytes
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: Using LinkWatch kernel netlink reflector...
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Activating healthchecker for service [10.0.0.102]:80
    Jul 15 13:57:01 CentOS_101 Keepalived_healthcheckers[26015]: Activating healthchecker for service [10.0.0.103]:80
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: VRRP_Instance(VI_To_client) Entering BACKUP STATE
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: VRRP_Instance(VI_To_server) Entering BACKUP STATE
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
    Jul 15 13:57:01 CentOS_101 Keepalived_vrrp[26016]: VRRP sockpool: [ifindex(3), proto(112), unicast(0), fd(12,13)]

This is the startup log. As soon as keepalived starts, health checking is activated for both RSs.

    Jul 15 13:57:19 CentOS_101 Keepalived_healthcheckers[26015]: TCP connection to [10.0.0.102]:80 failed !!!
    Jul 15 13:57:19 CentOS_101 Keepalived_healthcheckers[26015]: Removing service [10.0.0.102]:80 from VS [192.168.245.200]:80
    Jul 15 13:57:49 CentOS_101 Keepalived_healthcheckers[26015]: TCP connection to [10.0.0.102]:80 success.
    Jul 15 13:57:49 CentOS_101 Keepalived_healthcheckers[26015]: Adding service [10.0.0.102]:80 to VS [192.168.245.200]:80
    Jul 15 13:57:59 CentOS_101 Keepalived_healthcheckers[26015]: TCP connection to [10.0.0.103]:80 failed !!!
    Jul 15 13:57:59 CentOS_101 Keepalived_healthcheckers[26015]: Removing service [10.0.0.103]:80 from VS [192.168.245.200]:80
    Jul 15 13:58:29 CentOS_101 Keepalived_healthcheckers[26015]: TCP connection to [10.0.0.103]:80 success.
    Jul 15 13:58:29 CentOS_101 Keepalived_healthcheckers[26015]: Adding service [10.0.0.103]:80 to VS [192.168.245.200]:80

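These are the keepalived logs from testing an RS failure. The log is quite clear: when a host fails it is removed from the VS, and when it recovers it is added back. The same change is visible in the ipvsadm output.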

    [root@CentOS_100 keepalived]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.245.200:80 rr persistent 10
      -> 10.0.0.102:80                Masq    1      0          0
    [root@CentOS_100 keepalived]# ipvsadm -L -n
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.245.200:80 rr persistent 10
      -> 10.0.0.102:80                Masq    1      0          0
      -> 10.0.0.103:80                Masq    1      0          3
    [root@CentOS_100 keepalived]# ipvsadm -L -n
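If you want to watch this table from a script rather than by eye, the output of `ipvsadm -L -n` is easy to parse. The following is a rough sketch that assumes the column layout shown above; `parse_ipvsadm` is a hypothetical helper written for this example, not part of ipvsadm or keepalived.

```python
def parse_ipvsadm(text):
    """Map each virtual service to the list of real servers behind it."""
    services = {}
    current = None
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] in ("TCP", "UDP"):
            # Virtual service line, e.g. "TCP 192.168.245.200:80 rr persistent 10"
            current = parts[1]
            services[current] = []
        elif (parts[0] == "->" and current is not None
              and len(parts) == 6 and parts[3].isdigit()):
            # Real server line: -> addr forward weight active inactive
            _, addr, forward, weight, active, inactive = parts
            services[current].append({
                "addr": addr,
                "forward": forward,
                "weight": int(weight),
                "active": int(active),
                "inactive": int(inactive),
            })
    return services


SAMPLE = """\
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.245.200:80 rr persistent 10
  -> 10.0.0.102:80                Masq    1      0          0
  -> 10.0.0.103:80                Masq    1      0          3
"""

for vs, servers in parse_ipvsadm(SAMPLE).items():
    print(vs, [rs["addr"] for rs in servers])
```

The header line that also begins with `->` is skipped because its weight column is not numeric and no virtual service has been seen yet.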

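Clients only need to access the virtual address 200. If you do not want the LB to be the RSs' default gateway, you can instead add a route on each RS that sends traffic destined for the client network through the LB's internal VIP.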
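Why a single static route on the RS is enough can be seen from a longest-prefix-match lookup. The sketch below is illustrative only: the /24 prefix lengths and the alternative default gateway `10.0.0.1` are assumptions that do not appear in the lab, and `next_hop` is a hypothetical helper.

```python
import ipaddress


def next_hop(routes, dst):
    """Pick the gateway of the most specific route that contains dst."""
    dst_ip = ipaddress.ip_address(dst)
    matches = []
    for prefix, gateway in routes:
        network = ipaddress.ip_network(prefix)
        if dst_ip in network:
            matches.append((network.prefixlen, gateway))
    if not matches:
        return None
    return max(matches)[1]  # longest prefix wins


# Routing table on an RS whose default gateway is NOT the LB (assumed values).
rs_routes = [
    ("10.0.0.0/24", "direct"),            # local network
    ("192.168.245.0/24", "10.0.0.254"),   # client network via the LB's internal VIP
    ("0.0.0.0/0", "10.0.0.1"),            # some other default gateway (assumption)
]

print(next_hop(rs_routes, "192.168.245.1"))  # reply to the client
print(next_hop(rs_routes, "8.8.8.8"))        # unrelated traffic
```

Replies to the client network match the more specific route and go back through the LB, where the source address can be rewritten to the VIP; everything else follows the default route. This only helps when the client networks are known in advance.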

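In NAT mode, IP forwarding must be enabled on the LB. Check the current value first, for example with sysctl net.ipv4.ip_forward (sysctl -p reloads /etc/sysctl.conf and prints the values it applies). If forwarding is off, set net.ipv4.ip_forward to 1 in /etc/sysctl.conf and run sysctl -p to apply it. The default is 0, and with 0 packets passing through the LB are not forwarded. In this setup the LB is acting as a router, and using Linux as a router requires this setting to be enabled.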
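The same check can be scripted by reading the value the kernel exposes under /proc. This is a minimal sketch; `ip_forward_enabled` is a hypothetical helper name, and the `path` parameter exists only so the function can be pointed at another file for testing.

```python
from pathlib import Path


def ip_forward_enabled(path="/proc/sys/net/ipv4/ip_forward"):
    """Return True if the file at path contains 1, i.e. IPv4 forwarding is on."""
    return Path(path).read_text().strip() == "1"
```

On the LB, calling `ip_forward_enabled()` with no arguments reads the live kernel setting, which reflects what `sysctl net.ipv4.ip_forward` reports.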