摘要:本文详细讲解了python网络爬虫,并介绍抓包分析等技术,实战训练三个网络爬虫案例,并简单补充了常见的反爬策略与反爬攻克手段。通过本文的学习,可以快速掌握网络爬虫基础,结合实战练习,写出一些简单的爬虫项目。

数十款阿里云产品限时折扣中,赶紧点击这里,领劵开始云上实践吧!

演讲嘉宾简介:

韦玮,企业家,资深IT领域专家/讲师/作家,畅销书《精通Python网络爬虫》作者,阿里云社区技术专家。

以下内容根据演讲嘉宾视频分享以及PPT整理而成。

本次的分享主要围绕以下五个方面:

一、数据采集与网络爬虫技术简介

二、网络爬虫技术基础

三、抓包分析

四、挑战案例

五、推荐内容

一、数据采集与网络爬虫技术简介

网络爬虫是用于数据采集的一门技术,可以帮助我们自动地进行信息的获取与筛选。从技术手段来说,网络爬虫有多种实现方案,如PHP、Java、Python ...。那么用python 也会有很多不同的技术方案(Urllib、requests、scrapy、selenium...),每种技术各有各的特点,只需掌握一种技术,其它便迎刃而解。同理,某一种技术解决不了的难题,用其它技术或方依然无法解决。网络爬虫的难点并不在于网络爬虫本身,而在于网页的分析与爬虫的反爬攻克问题。希望在本次课程中大家可以领会爬虫中相对比较精髓的内容。

二、网络爬虫技术基础

在本次课中,将使用Urllib技术手段进行项目的编写。同样,掌握了该技术手段,其他的技术手段也不难掌握,因为爬虫的难点不在于技术手段本身。本知识点包括如下内容:

·Urllib基础

·浏览器伪装

·用户代理池

·糗事百科爬虫实战

需要提前具备的基础知识:正则表达式

1)Urllib基础

爬网页

打开python命令行界面,两种方法:ulropen()爬到内存,urlretrieve()爬到硬盘文件。

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> <span style="color:#66d9ef">import</span> urllib<span style="color:#f8f8f2">.</span>request

<span style="color:slategray">#open百度,读取并爬到内存中,解码(ignore可忽略解码中的细微错误), 并赋值给data</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>ulropen<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"http://www.baidu.com"</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8”, “ignore"</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#判断网页内的数据是否存在,通过查看data长度</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> len<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

提取网页标题

<span style="color:slategray">#首先导入正则表达式, .*?代表任意信息,()代表要提取括号内的内容</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> <span style="color:#66d9ef">import</span> re

<span style="color:slategray">#正则表达式</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> pat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"<title>(.*?)</title>"</span>

<span style="color:slategray">#re.compile()指编译正则表达式</span>

<span style="color:slategray">#re.S是模式修正符,网页信息往往包含多行内容,re.S可以消除多行影响</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> rst<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>pat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> <span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span>rst<span style="color:#f8f8f2">)</span>

<span style="color:slategray">#[‘百度一下,你就知道’]</span></code></span>同理,只需换掉网址可爬取另一个网页内容

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>ulropen<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"http://www.jd.com"</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">,</span> <span style="color:#a6e22e">"ignore"</span><span style="color:#f8f8f2">)</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> rst<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>pat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

<span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> <span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span>rst<span style="color:#f8f8f2">)</span></code></span>上面是将爬到的内容存在内存中,其实也可以存在硬盘文件中,使用urlretrieve()方法

<span style="color:#333333"><span style="color:#f8f8f2"><code class="language-python"><span style="color:#f8f8f2">>></span><span style="color:#f8f8f2">></span> urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlretrieve<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"http://www.jd.com"</span><span style="color:#f8f8f2">,</span>filename<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"D:/我的教学/Python/阿里云系列直播/第2次直播代码/test.html"</span><span style="color:#f8f8f2">)</span></code></span></span>之后可以打开test.html,京东网页就出来了。由于存在隐藏数据,有些数据信息和图片无法显示,可以使用抓包分析进行获取。

2)浏览器伪装

尝试用上面的方法去爬取糗事百科网站url="https://www.qiushibaike.com/",会返回拒绝访问的回复,但使用浏览器却可以正常打开。那么问题肯定是出在爬虫程序上,其原因在于爬虫发送的请求头所导致。

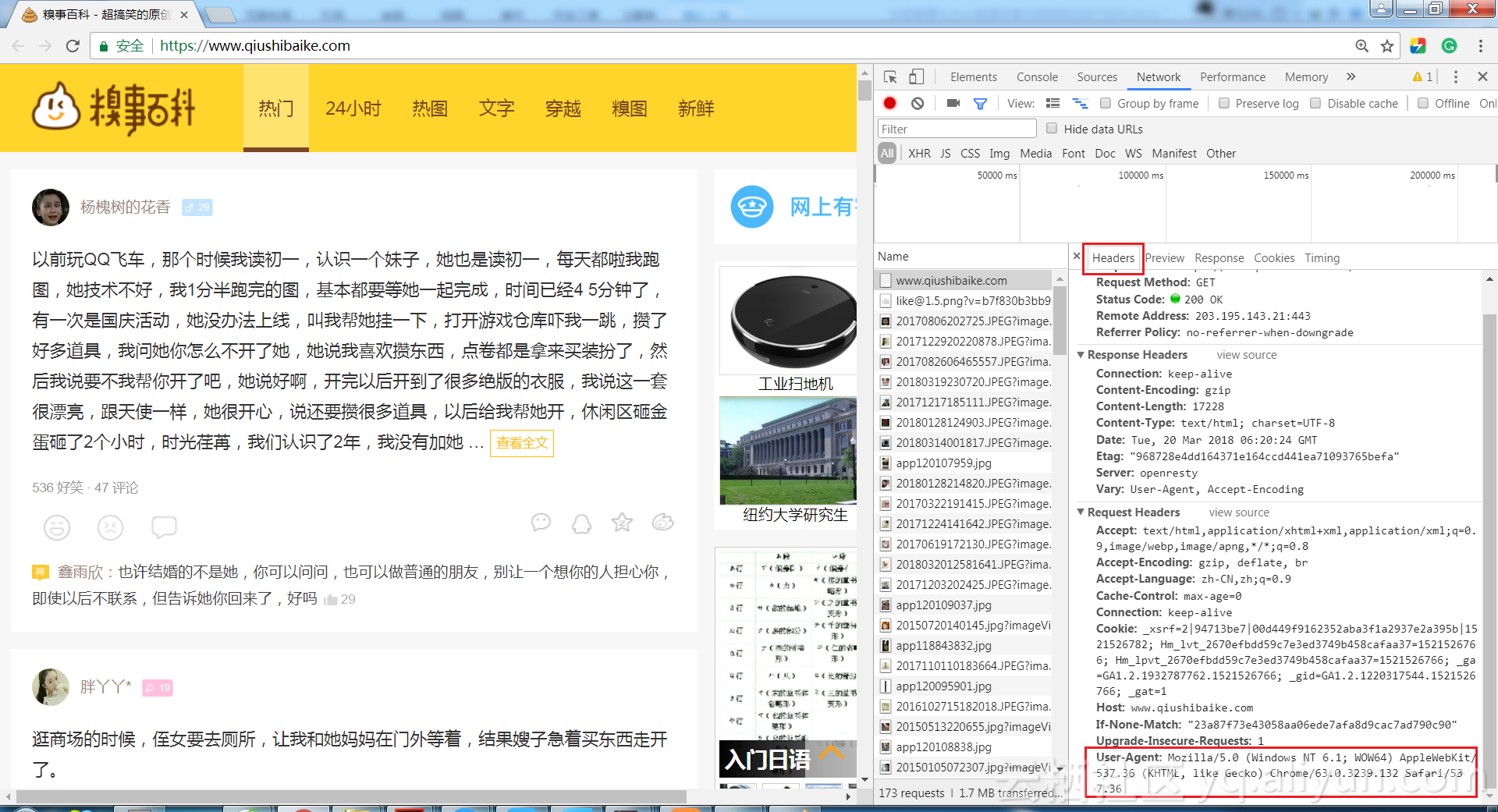

打开糗事百科页面,如下图,通过F12,找到headers,这里主要关注用户代理User-Agent字段。User-Agent代表是用什么工具访问糗事百科网站的。不同浏览器的User-Agent值是不同的。那么就可以在爬虫程序中,将其伪装成浏览器。

将User-Agent设置为浏览器中的值,虽然urlopen()不支持请求头的添加,但是可以利用opener进行addheaders,opener是支持高级功能的管理对象。代码如下:

<span style="color:#f8f8f2"><code class="language-python"><span style="color:slategray">#浏览器伪装</span>

url<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"https://www.qiushibaike.com/"</span>

<span style="color:slategray">#构建opener</span>

opener<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>build_opener<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#User-Agent设置成浏览器的值</span>

UA<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"User-Agent"</span><span style="color:#f8f8f2">,</span><span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0"</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#将UA添加到headers中</span>

opener<span style="color:#f8f8f2">.</span>addheaders<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span>UA<span style="color:#f8f8f2">]</span>

urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>install_opener<span style="color:#f8f8f2">(</span>opener<span style="color:#f8f8f2">)</span>

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>url<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">,</span><span style="color:#a6e22e">"ignore"</span><span style="color:#f8f8f2">)</span></code></span>3)用户代理池

在爬取过程中,一直用同样一个地址爬取是不可取的。如果每一次访问都是不同的用户,对方就很难进行反爬,那么用户代理池就是一种很好的反爬攻克的手段。

第一步,收集大量的用户代理User-Agent

<span style="color:#f8f8f2"><code class="language-python"><span style="color:slategray">#用户代理池</span>

uapools<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span>

<span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36 Edge/14.14393"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)"</span><span style="color:#f8f8f2">,</span>

<span style="color:#f8f8f2">]</span></code></span>第二步,建立函数UA(),用于切换用户代理User-Agent

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#66d9ef">def</span> <span style="color:#e6db74">UA</span><span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

opener<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>build_opener<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#从用户代理池中随机选择一个</span>

thisua<span style="color:#f8f8f2">=</span>random<span style="color:#f8f8f2">.</span>choice<span style="color:#f8f8f2">(</span>uapools<span style="color:#f8f8f2">)</span>

ua<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"User-Agent"</span><span style="color:#f8f8f2">,</span>thisua<span style="color:#f8f8f2">)</span>

opener<span style="color:#f8f8f2">.</span>addheaders<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span>ua<span style="color:#f8f8f2">]</span>

urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>install_opener<span style="color:#f8f8f2">(</span>opener<span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"当前使用UA:"</span><span style="color:#f8f8f2">+</span>str<span style="color:#f8f8f2">(</span>thisua<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span></code></span>for循环,每访问一次切换一次UA

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#66d9ef">for</span> i <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span><span style="color:#ae81ff">10</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

UA<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>url<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">,</span><span style="color:#a6e22e">"ignore"</span><span style="color:#f8f8f2">)</span></code></span>每爬3次换一次UA

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#66d9ef">for</span> i <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span><span style="color:#ae81ff">10</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">if</span><span style="color:#f8f8f2">(</span>i<span style="color:#f8f8f2">%</span><span style="color:#ae81ff">3</span><span style="color:#f8f8f2">==</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

UA<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>url<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">,</span><span style="color:#a6e22e">"ignore"</span><span style="color:#f8f8f2">)</span></code></span>(*每几次做某件事情,利用求余运算)

4)第一项练习-糗事百科爬虫实战

目标网站:https://www.qiushibaike.com/

需要把糗事百科中的热门段子爬取下来,包括翻页之后内容,该如何获取?

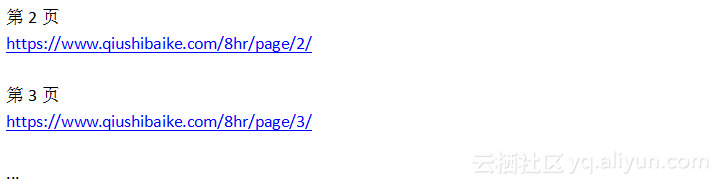

第一步,对网址进行分析,如下图所示,发现翻页之后变化的部分只是page后面的页面数字。

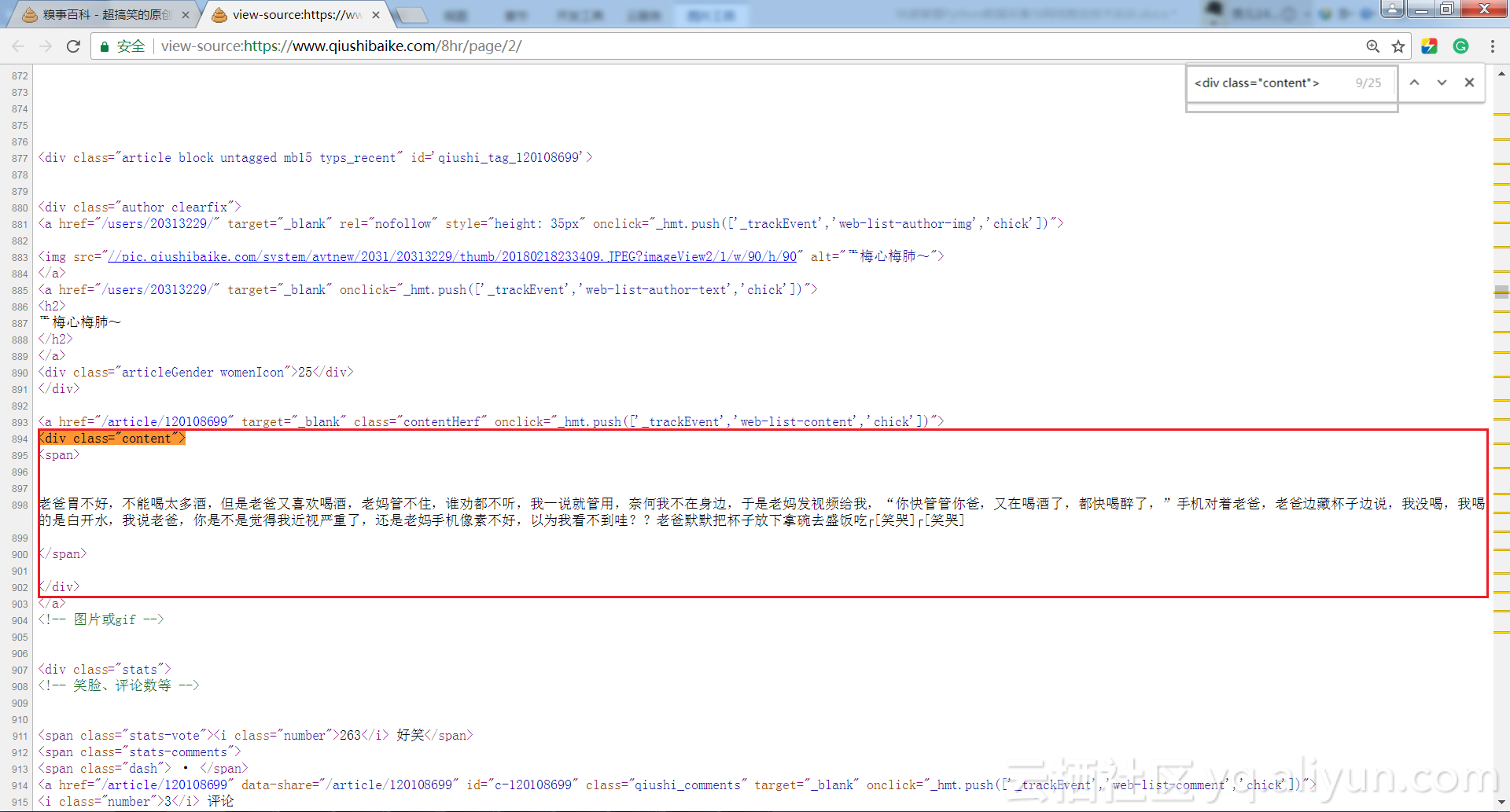

第二步,思考如何提取某个段子?查看网页代码,如下图所示,可以发现<div class="content">的数量和每页段子数量相同,可以用<div class="content">这个标识提取出每条段子信息。

第三步,利用上面所提到的用户代理池进行爬取。首先建立用户代理池,从用户代理池中随机选择一项,设置UA。

<span style="color:#f8f8f2"><code class="language-python"><span style="color:#66d9ef">import</span> urllib<span style="color:#f8f8f2">.</span>request

<span style="color:#66d9ef">import</span> re

<span style="color:#66d9ef">import</span> random

<span style="color:slategray">#用户代理池</span>

uapools<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span>

<span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.79 Safari/537.36 Edge/14.14393"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1; Maxthon 2.0)"</span><span style="color:#f8f8f2">,</span>

<span style="color:#f8f8f2">]</span>

<span style="color:#66d9ef">def</span> <span style="color:#e6db74">UA</span><span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

opener<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>build_opener<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

thisua<span style="color:#f8f8f2">=</span>random<span style="color:#f8f8f2">.</span>choice<span style="color:#f8f8f2">(</span>uapools<span style="color:#f8f8f2">)</span>

ua<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"User-Agent"</span><span style="color:#f8f8f2">,</span>thisua<span style="color:#f8f8f2">)</span>

opener<span style="color:#f8f8f2">.</span>addheaders<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span>ua<span style="color:#f8f8f2">]</span>

urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>install_opener<span style="color:#f8f8f2">(</span>opener<span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"当前使用UA:"</span><span style="color:#f8f8f2">+</span>str<span style="color:#f8f8f2">(</span>thisua<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#for循环,爬取第1页到第36页的段子内容</span>

<span style="color:#66d9ef">for</span> i <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span><span style="color:#ae81ff">35</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

UA<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#构造不同页码对应网址</span>

thisurl<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"http://www.qiushibaike.com/8hr/page/"</span><span style="color:#f8f8f2">+</span>str<span style="color:#f8f8f2">(</span>i<span style="color:#f8f8f2">+</span><span style="color:#ae81ff">1</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">"/"</span>

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>thisurl<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">,</span><span style="color:#a6e22e">"ignore"</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#利用<div class="content">提取段子内容</span>

pat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'<div class="content">.*?<span>(.*?)</span>.*?</div>'</span>

rst<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>pat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">for</span> j <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span>len<span style="color:#f8f8f2">(</span>rst<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span>rst<span style="color:#f8f8f2">[</span>j<span style="color:#f8f8f2">]</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"-------"</span><span style="color:#f8f8f2">)</span></code></span>还可以定时的爬取:

<span style="color:#f8f8f2"><code class="language-python">Import time

<span style="color:slategray">#然后在后面调用time.sleep()方法</span></code></span>换言之,学习爬虫需要灵活变通的思想,针对不同的情况,不同的约束而灵活运用。

三、抓包分析

抓包分析可以将网页中的访问细节信息取出。有时会发现直接爬网页时是无法获取到目标数据的,因为这些数据做了隐藏,此时可以使用抓包分析的手段进行分析,并获取隐藏数据。

1)Fiddler简介

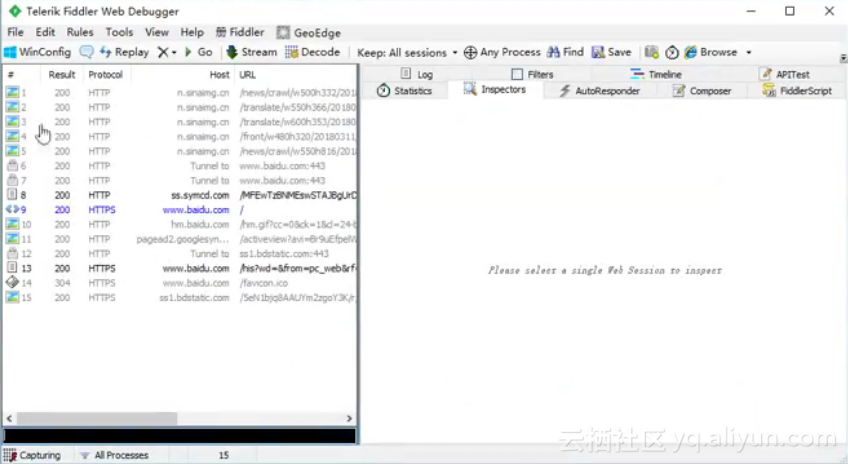

抓包分析可以直接使用浏览器F12进行,也可以使用一些抓包工具进行,这里推荐Fiddler。Fiddler下载安装。假设给Fiddler配合的是火狐浏览器,打开浏览器,如下图,找到连接设置,选择手动代理设置并确定。

假设打开百度,如下图,加载的数据包信息就会在Fiddler中左侧列表中列出来,那么网站中隐藏相关的数据可以从加载的数据包中找到。

2)第二项练习-腾讯视频评论爬虫实战

目标网站:https://v.qq.com/

需要获取的数据:某部电影的评论数据,实现自动加载。

首先可以发现腾讯视频中某个视频的评论,在下面的图片中,如果点击”查看更多评论”,网页地址并无变化,与上面提到的糗事百科中的页码变化不同。而且通过查看源代码,只能看到部分评论。即评论信息是动态加载的,那么该如何爬取多页的评论数据信息?

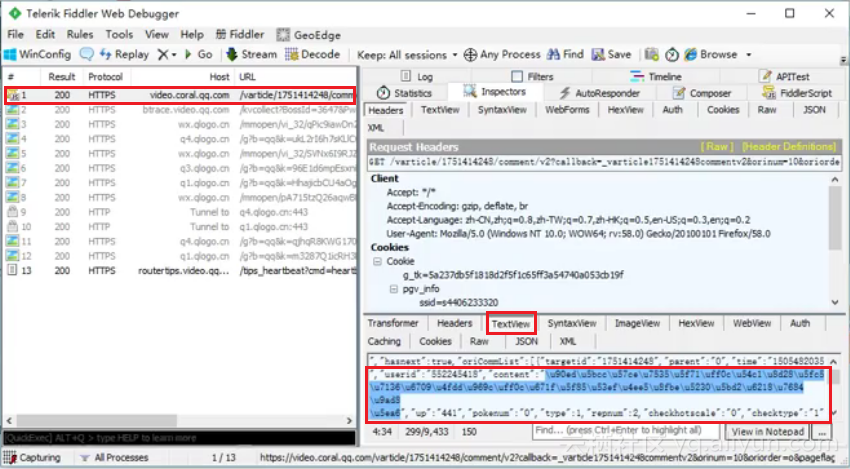

第一步,分析腾讯视频评论网址变化规律。点击”查看更多评论”,同时打开Fiddler,第一条信息的TextView中,TextView中可以看到对应的content内容是unicode编码,刚好对应的是某条评论的内容。

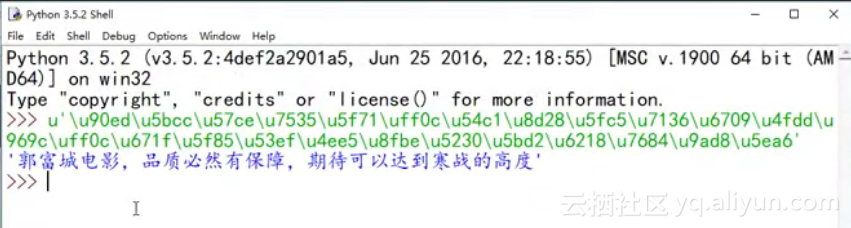

解码出来可以看到对应评论内容。

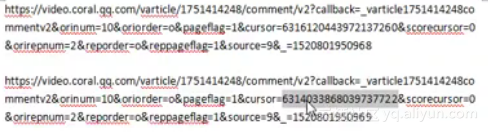

将第一条信息的网址复制出来进行分析,观察其中的规律。下图是两个紧连着的不同评论的url地址,如下图,可以发现只有cursor字段发生变化,只要得到cursor,那么评论的地址就可以轻松获得。如何找到cursor值?

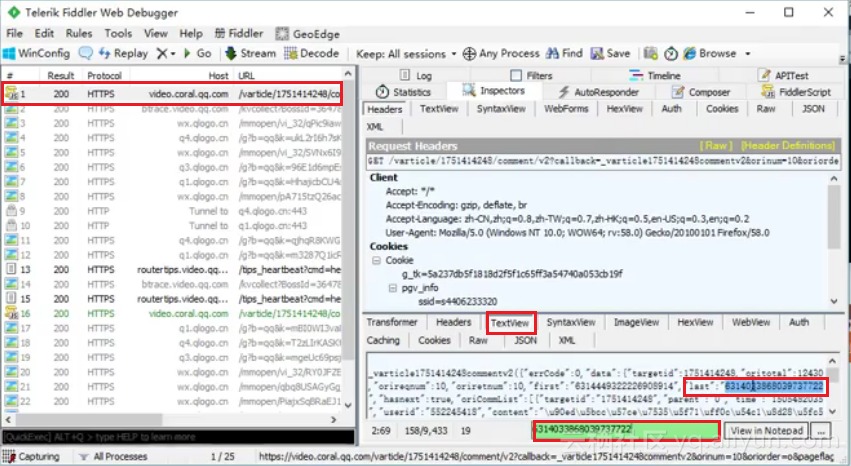

第二步,查找网址中变化的cursor字段值。从上面的第一条评论信息里寻找,发现恰好在last字段值与后一条评论的cursor值相同。即表示cursor的值是迭代方式生成的,每条评论的cursor信息在其上一条评论的数据包中寻找即可。

第三步,完整代码

a.腾讯视频评论爬虫:获取”深度解读”评论内容(单页评论爬虫)

<span style="color:#f8f8f2"><code class="language-python"><span style="color:slategray">#单页评论爬虫</span>

<span style="color:#66d9ef">import</span> urllib<span style="color:#f8f8f2">.</span>request

<span style="color:#66d9ef">import</span> re

<span style="color:slategray">#https://video.coral.qq.com/filmreviewr/c/upcomment/[视频id]?commentid=[评论id]&reqnum=[每次提取的评论的个数]</span>

<span style="color:slategray">#视频id</span>

vid<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"j6cgzhtkuonf6te"</span>

<span style="color:slategray">#评论id</span>

cid<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"6233603654052033588"</span>

num<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"20"</span>

<span style="color:slategray">#构造当前评论网址</span>

url<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"https://video.coral.qq.com/filmreviewr/c/upcomment/"</span><span style="color:#f8f8f2">+</span>vid<span style="color:#f8f8f2">+</span><span style="color:#a6e22e">"?commentid="</span><span style="color:#f8f8f2">+</span>cid<span style="color:#f8f8f2">+</span><span style="color:#a6e22e">"&reqnum="</span><span style="color:#f8f8f2">+</span>num

headers<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">{</span><span style="color:#a6e22e">"User-Agent"</span><span style="color:#f8f8f2">:</span><span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Content-Type"</span><span style="color:#f8f8f2">:</span><span style="color:#a6e22e">"application/javascript"</span><span style="color:#f8f8f2">,</span>

<span style="color:#f8f8f2">}</span>

opener<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>build_opener<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

headall<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span><span style="color:#f8f8f2">]</span>

<span style="color:#66d9ef">for</span> key<span style="color:#f8f8f2">,</span>value <span style="color:#66d9ef">in</span> headers<span style="color:#f8f8f2">.</span>items<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

item<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">(</span>key<span style="color:#f8f8f2">,</span>value<span style="color:#f8f8f2">)</span>

headall<span style="color:#f8f8f2">.</span>append<span style="color:#f8f8f2">(</span>item<span style="color:#f8f8f2">)</span>

opener<span style="color:#f8f8f2">.</span>addheaders<span style="color:#f8f8f2">=</span>headall

urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>install_opener<span style="color:#f8f8f2">(</span>opener<span style="color:#f8f8f2">)</span>

<span style="color:slategray">#爬取当前评论页面</span>

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>url<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">)</span>

titlepat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'"title":"(.*?)"'</span>

commentpat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'"content":"(.*?)"'</span>

titleall<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>titlepat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

commentall<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>commentpat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">for</span> i <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span>len<span style="color:#f8f8f2">(</span>titleall<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">try</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"评论标题是:"</span><span style="color:#f8f8f2">+</span>eval<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">'u"'</span><span style="color:#f8f8f2">+</span>titleall<span style="color:#f8f8f2">[</span>i<span style="color:#f8f8f2">]</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">'"'</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"评论内容是:"</span><span style="color:#f8f8f2">+</span>eval<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">'u"'</span><span style="color:#f8f8f2">+</span>commentall<span style="color:#f8f8f2">[</span>i<span style="color:#f8f8f2">]</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">'"'</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"------"</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">except</span> Exception <span style="color:#66d9ef">as</span> err<span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span>err<span style="color:#f8f8f2">)</span></code></span>b.腾讯视频评论爬虫:获取”深度解读”评论内容(自动切换下一页评论的爬虫)

<span style="color:#f8f8f2"><code class="language-python"><span style="color:slategray">#自动切换下一页评论的爬虫</span>

<span style="color:#66d9ef">import</span> urllib<span style="color:#f8f8f2">.</span>request

<span style="color:#66d9ef">import</span> re

<span style="color:slategray">#https://video.coral.qq.com/filmreviewr/c/upcomment/[视频id]?commentid=[评论id]&reqnum=[每次提取的评论的个数]</span>

vid<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"j6cgzhtkuonf6te"</span>

cid<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"6233603654052033588"</span>

num<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">"3"</span>

headers<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">{</span><span style="color:#a6e22e">"User-Agent"</span><span style="color:#f8f8f2">:</span><span style="color:#a6e22e">"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.22 Safari/537.36 SE 2.X MetaSr 1.0"</span><span style="color:#f8f8f2">,</span>

<span style="color:#a6e22e">"Content-Type"</span><span style="color:#f8f8f2">:</span><span style="color:#a6e22e">"application/javascript"</span><span style="color:#f8f8f2">,</span>

<span style="color:#f8f8f2">}</span>

opener<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>build_opener<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span>

headall<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">[</span><span style="color:#f8f8f2">]</span>

<span style="color:#66d9ef">for</span> key<span style="color:#f8f8f2">,</span>value <span style="color:#66d9ef">in</span> headers<span style="color:#f8f8f2">.</span>items<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

item<span style="color:#f8f8f2">=</span><span style="color:#f8f8f2">(</span>key<span style="color:#f8f8f2">,</span>value<span style="color:#f8f8f2">)</span>

headall<span style="color:#f8f8f2">.</span>append<span style="color:#f8f8f2">(</span>item<span style="color:#f8f8f2">)</span>

opener<span style="color:#f8f8f2">.</span>addheaders<span style="color:#f8f8f2">=</span>headall

urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>install_opener<span style="color:#f8f8f2">(</span>opener<span style="color:#f8f8f2">)</span>

<span style="color:slategray">#for循环,多个页面切换</span>

<span style="color:#66d9ef">for</span> j <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span><span style="color:#ae81ff">100</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

<span style="color:slategray">#爬取当前评论页面</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"第"</span><span style="color:#f8f8f2">+</span>str<span style="color:#f8f8f2">(</span>j<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">"页"</span><span style="color:#f8f8f2">)</span>

<span style="color:slategray">#构造当前评论网址thisurl="https://video.coral.qq.com/filmreviewr/c/upcomment/"+vid+"?commentid="+cid+</span>

<span style="color:#a6e22e">"&reqnum="</span><span style="color:#f8f8f2">+</span>num

data<span style="color:#f8f8f2">=</span>urllib<span style="color:#f8f8f2">.</span>request<span style="color:#f8f8f2">.</span>urlopen<span style="color:#f8f8f2">(</span>thisurl<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>read<span style="color:#f8f8f2">(</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>decode<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"utf-8"</span><span style="color:#f8f8f2">)</span>

titlepat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'"title":"(.*?)","abstract":"'</span>

commentpat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'"content":"(.*?)"'</span>

titleall<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>titlepat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

commentall<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>commentpat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span>

lastpat<span style="color:#f8f8f2">=</span><span style="color:#a6e22e">'"last":"(.*?)"'</span>

<span style="color:slategray">#获取last值,赋值给cid,进行评论id切换</span>

cid<span style="color:#f8f8f2">=</span>re<span style="color:#f8f8f2">.</span>compile<span style="color:#f8f8f2">(</span>lastpat<span style="color:#f8f8f2">,</span>re<span style="color:#f8f8f2">.</span>S<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">.</span>findall<span style="color:#f8f8f2">(</span>data<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">[</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">]</span>

<span style="color:#66d9ef">for</span> i <span style="color:#66d9ef">in</span> range<span style="color:#f8f8f2">(</span><span style="color:#ae81ff">0</span><span style="color:#f8f8f2">,</span>len<span style="color:#f8f8f2">(</span>titleall<span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">try</span><span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"评论标题是:"</span><span style="color:#f8f8f2">+</span>eval<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">'u"'</span><span style="color:#f8f8f2">+</span>titleall<span style="color:#f8f8f2">[</span>i<span style="color:#f8f8f2">]</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">'"'</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"评论内容是:"</span><span style="color:#f8f8f2">+</span>eval<span style="color:#f8f8f2">(</span><span style="color:#a6e22e">'u"'</span><span style="color:#f8f8f2">+</span>commentall<span style="color:#f8f8f2">[</span>i<span style="color:#f8f8f2">]</span><span style="color:#f8f8f2">+</span><span style="color:#a6e22e">'"'</span><span style="color:#f8f8f2">)</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span><span style="color:#a6e22e">"------"</span><span style="color:#f8f8f2">)</span>

<span style="color:#66d9ef">except</span> Exception <span style="color:#66d9ef">as</span> err<span style="color:#f8f8f2">:</span>

<span style="color:#66d9ef">print</span><span style="color:#f8f8f2">(</span>err<span style="color:#f8f8f2">)</span>

</code></span>四、挑战案例

1)第三项练习-中国裁判文书网爬虫实战

目标网站:http://wenshu.court.gov.cn/

需要获取的数据:2018年上海市的刑事案件接下来进入实战讲解。

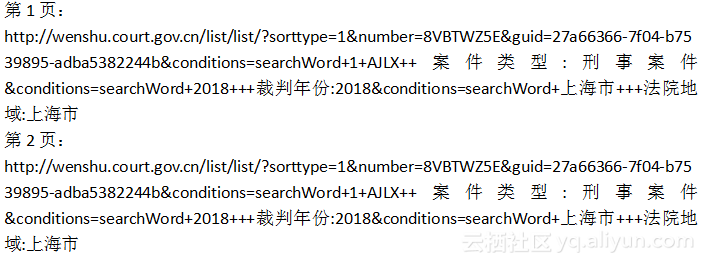

第一步,观察换页之后的网页地址变化规律。打开中国裁判文书网2018年上海市刑事案件的第一页,在换页时,如下图中的地址,发现网址是完全不变的,这种情况就是属于隐藏,使用抓包分析进行爬取。

第二步,查找变化字段。从Fiddler中可以找到,获取某页的文书数据的地址:http://wenshu.court.gov.cn/List/ListContent

可以发现没有对应的网页变换,意味着中国裁判文书网换页是通过POST进行请求,对应的变化数据不显示在网址中。通过F12查看网页代码,再换页操作之后,如下图,查看ListContent,其中有几个字段需要了解:

Param:检索条件

Index:页码

Page:每页展示案件数量

...