本文实现AlexNet,用于识别MNIST手写数字体。所有代码的框架基于tensorflow。看了几篇论文的源代码之后,觉得tensorflow 确实很难,学习编程还是靠实践。这篇博客留着给自己以及学习深度学习道路上的小伙伴们一些参考吧,希望能对大家有所帮助!

环境:

Ubuntu 16.04

tensorflow-gpu

一.数据集加载

tensor flow examples 中自带mnist数据集,可以自动下载和进行处理。由于笔者的tensorflow版本的原因吧,使用自带的read_data_sets()没有任何效果,那就把数据集下载下来,自己解压处理。mnist数据解压后格式是二进制文件,需要进行特殊的处理变成numpy数组,这里这是参考的网上的代码,觉得这个处理过程如果大家明白最好,不明白也不需要太纠结。加载数据集代码如下:(注意这里需要把下载的数据集先解压之后,在处理)

# laod mnist dataset to npy

import os

import struct

import numpy as np

# 加载数据集

def load_mnist(path, kind='train'):

"""Load MNIST data from `path`"""

labels_path = os.path.join(path,

'%s-labels.idx1-ubyte'

% kind)

images_path = os.path.join(path,

'%s-images.idx3-ubyte'

% kind)

with open(labels_path, 'rb') as lbpath:

magic, n = struct.unpack('>II',

lbpath.read(8))

labels = np.fromfile(lbpath,

dtype=np.uint8)

with open(images_path, 'rb') as imgpath:

magic, num, rows, cols = struct.unpack('>IIII',

imgpath.read(16))

images = np.fromfile(imgpath,

dtype=np.uint8).reshape(len(labels), 784)

return images, labels

path = './MNIST_data'

images,labels = load_mnist(path=path,kind='t10k') 上面的代码,可以把数据预处理,然后自己保存成npy格式的文件。原始的mnist数据灰度值是0-255,在feed给网络的时候,注意数据归一化处理

二.网络搭建

由于最原始的AlexNet是处理ImageNet数据集的,图片大小是224x224x3,而mnist图片大小可以看作是28x28x1.所以网络的形式上会有差别,包括卷积核的参数。但是思想是一样的,学习这个思想很重要。代码如下:

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Sat Mar 24 16:37:00 2018

@author: wsw

"""

import tensorflow as tf

class Alexnet():

def __init__(self):

self.lr_based = 0.01

self.wd = 1e-4

self.epochs = 100

def build(self,input_tensor,train=True,num_class=10):

self.conv1 = self.conv_layer(input_tensor,[3,3,1,16],name='conv1')

self.pool1 = self.max_pool_2x2(self.conv1,name='pool1')

self.conv2_1 = self.conv_layer(self.pool1,[3,3,16,32],name='conv2_1')

self.conv2_2 = self.conv_layer(self.conv2_1,[3,3,32,64],name='conv2_2')

self.pool2 = self.max_pool_2x2(self.conv2_2,name='pool2')

self.fc3 = self.fc_layer(self.pool2,120,relu=train,name='fc3')

self.fc4 = self.fc_layer(self.fc3,120,relu=train,name='fc4')

self.fc5 = self.fc_layer(self.fc4,num_class,relu=False,name='fc5')

return self.fc5

pass

def variables_weights(self,shape,name):

in_num = shape[0]*shape[1]*shape[2]

out_num = shape[3]

with tf.variable_scope(name):

stddev = (2/(in_num+out_num))**0.5

init = tf.random_normal_initializer(stddev=stddev)

weights = tf.get_variable('weights',initializer=init,shape=shape)

return weights

def variables_biases(self,shape,name):

with tf.variable_scope(name):

init = tf.constant_initializer(0.1)

biases = tf.get_variable('biases',initializer=init,shape=shape)

return biases

def conv_layer(self,bottom,shape,name):

with tf.name_scope(name):

kernel = self.variables_weights(shape,name)

conv = tf.nn.conv2d(bottom,kernel,strides=[1,1,1,1],padding='SAME')

bias = self.variables_biases(shape[3],name)

biases = tf.nn.bias_add(conv,bias)

conv_out = tf.nn.relu(biases)

return conv_out

def max_pool_2x2(self,bottom,name):

with tf.name_scope(name):

pool = tf.nn.max_pool(bottom,ksize=[1,2,2,1],strides=[1,2,2,1],padding='SAME')

return pool

def fc_layer(self,bottom,out_num,name,relu=True):

bottom_shape = bottom.get_shape().as_list()

if name=='fc3':

in_num = bottom_shape[1]*bottom_shape[2]*bottom_shape[3]

bottom = tf.reshape(bottom,shape=(-1,in_num))

else:

in_num = bottom_shape[1]

with tf.name_scope(name):

weights = self.fc_weights(in_num,out_num,name)

biases = self.variables_biases(out_num,name)

output = tf.matmul(bottom,weights) + biases

if relu:

output = tf.nn.relu(output)

return output

def fc_weights(self,in_num,out_num,name):

with tf.variable_scope(name):

stddev = (2/(in_num+out_num))**0.5

init = tf.random_normal_initializer(stddev=stddev)

return tf.get_variable('weights',initializer=init,shape=[in_num,out_num])

这个简易版的网络有3个卷积层,2个池化层,3个全连接层。但是麻雀虽小,五脏俱全。这个网络完整的示范了一个深度学习的框架搭建,从数据预处理,从队列获取batch个训练样本,到模型参数的保存,输出到tensorboard可视化训练过程,最后加载训练好的参数,在测试数据上进行测试。

三.训练过程

先给出训练的代码,然后在说说一些细节问题。

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Sat Mar 24 22:21:35 2018

@author: wsw

"""

import tensorflow as tf

import numpy as np

import mnist_CNN

import time

# from sklearn.preprocessing import StandardScaler

logdir = './log/'

model = './model/'

datapath = './MNIST_data/'

train_path = datapath+'train.npy'

train_label_path = datapath+'train-label.npy'

train_data = np.load(train_path)

train_label = np.load(train_label_path)

#ss = StandardScaler()

## normalization

#train_data = ss.fit_transform(train_data)

# random produce one instance

# 产生一个队列,随机产生一个实例

image,label = tf.train.slice_input_producer([train_data,train_label])

with tf.name_scope('reshape'):

# reshape iamge

image = tf.reshape(image,shape=(28,28,1))

# image = tf.transpose(image,[1,2,0])

image = tf.image.per_image_standardization(image)

label = tf.one_hot(label,depth=10)

# 随机生成一个batch的训练数据

with tf.name_scope('get_bacth'):

batch_size = 100

min_after_dequeue = 10000

capacity = 3*min_after_dequeue+batch_size

img_batch,lab_batch = tf.train.shuffle_batch([image,label],batch_size=batch_size,

min_after_dequeue=min_after_dequeue,

capacity=capacity)

#print(img_batch.shape)

#print(lab_batch.shape)

# create model

with tf.name_scope('placeholder'):

xs = tf.placeholder(dtype=tf.float32,shape=[None,28,28,1])

ys = tf.placeholder(dtype=tf.float32,shape=[None,10])

with tf.name_scope('losses'):

alexnet = mnist_CNN.Alexnet()

logits = alexnet.build(xs,train=True)

labels = tf.argmax(ys,axis=1)

cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=logits,

labels=labels)

losses = tf.reduce_mean(cross_entropy)

tf.summary.scalar(logdir+'losses',losses)

global_step = tf.Variable(0,trainable=False)

with tf.name_scope('train'):

optimizer = tf.train.GradientDescentOptimizer(0.01)

train_op = optimizer.minimize(losses,global_step=global_step)

with tf.name_scope('accuracy'):

accu_predict = tf.nn.in_top_k(logits,labels,k=1)

accuracy = tf.reduce_mean(tf.cast(accu_predict,dtype=tf.float32))

tf.summary.scalar(logdir+'train_accuracy',accuracy)

with tf.name_scope('configuration'):

saver = tf.train.Saver(max_to_keep=3)

merge_op = tf.summary.merge_all()

with tf.Session() as sess:

writer = tf.summary.FileWriter(logdir,sess.graph)

tf.local_variables_initializer().run()

tf.global_variables_initializer().run()

coord = tf.train.Coordinator()

thread = tf.train.start_queue_runners(sess,coord)

try:

while not coord.should_stop():

start = time.time()

imgs,labs = sess.run([img_batch,lab_batch])

_,loss_value = sess.run([train_op,losses],

feed_dict={xs:imgs,ys:labs})

end = time.time()

duration = end - start

print('duration time:',duration)

if global_step.eval() %100 == 0:

result = sess.run(merge_op,feed_dict={xs:imgs,

ys:labs})

writer.add_summary(result,global_step.eval())

saver.save(sess,model,global_step=global_step.eval())

if global_step.eval() == alexnet.epochs*600:

break

except tf.errors.OutOfRangeError:

print('done!');

finally:

coord.request_stop()

coord.join(thread)

首先说说tensorflow 队列的问题,个人理解也没有多通透,昨天这个代码这里还出现错误,一直在网上找原因。tf.train.slice_input_producer()函数,可以随机从输入的tensorlist中输出一个tensor,把所有数据集和标签组合输入[images,labels],随机选择一个进行输出,这个函数实际上生成了一个队列,由子线程运行的。这里在主线程里面也就是with tf.Session() as sess 这个上下文管理器中开启子线程 tf.train.start_queue_runners(),不然队列始终为空,线程一直挂起。还有一个参数问题,tf.train.slice_input_producer()中num_epochs=None,默认为空,查看其他的资料说循环迭代的次数,一般使用不设置。之前设置了程序有问题,后来参考网上相关资料,不设置比较好。然后,tf.train.slice_input_producer()函数每次都只是产生一个实例,我们需要一个batch的数据,然后使用tf.train.shuffle_batch([image,label]),该函数使用开启的子线程自动进行队列填充,当达到batch_size时就输出feed给网络。还有一个问题是,tensorflow中多个线程是异步进行的,我们需要使线程同步结束,这个时候需要tf.train.Coordinator() 建立一个线程协调器,使多个异步线程之间同步结束。上面代码快儿中try............except.............finally,是固定的经典的套路,照搬就行。训练100步之后,保存一次模型的参数,输出到tensorboard日志文件中,查看一次训练数据集的准确率。

四.在数据集测试

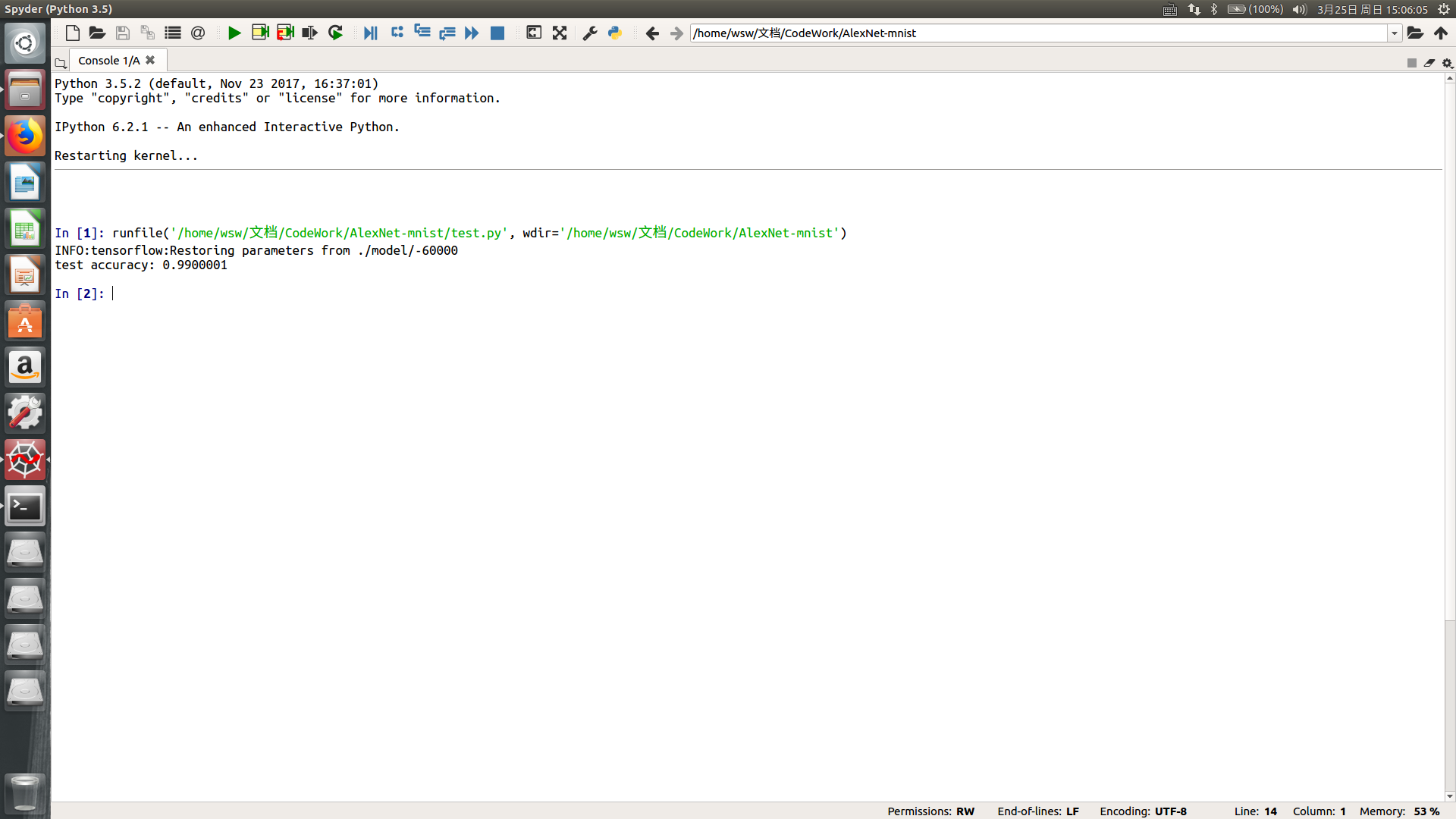

以上训练了60000步。训练完成后,加载模型参数进行测试。测试代码如下:

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

"""

Created on Sun Mar 25 13:58:37 2018

@author: wsw

"""

import tensorflow as tf

import mnist_CNN

import numpy as np

model = './model/'

with tf.name_scope('load_data'):

datapath = './MNIST_data/'

testdata = datapath+'test.npy'

testlabel = datapath+'test-label.npy'

test_data = np.load(testdata)

labels = np.load(testlabel)

with tf.name_scope('preprocessing'):

mean = np.mean(test_data,axis=1)

std = np.std(test_data,axis=1)

data = np.zeros(shape=(test_data.shape))

for i in range(len(mean)):

data[i,:] = (test_data[i,:]-mean[i])/std[i]

data = data.reshape(-1,28,28,1).astype(np.float32)

with tf.name_scope('create_model'):

alexnet = mnist_CNN.Alexnet()

logits = alexnet.build(data,train=True)

with tf.name_scope('test_accuracy'):

# labels is not need to one-hot encode

# and the dtype is int32 or int64

accuracy_op = tf.nn.in_top_k(logits,labels,k=1)

accuracy = tf.reduce_mean(tf.cast(accuracy_op,dtype=tf.float32))

saver = tf.train.Saver()

with tf.Session() as sess:

# get the newest model parameter

ckpt = tf.train.get_checkpoint_state(model)

path = ckpt.model_checkpoint_path

if ckpt and path:

saver.restore(sess,path)

accu_score = sess.run(accuracy)

print('test accuracy:',accu_score)在测试时需要对每张图片进行归一化操作。计算准确率可以使用tensorflow tf.nn.in_top_k()函数,注意这里的标签值不需要进行one_hot编码。tf.train.get_checkpoint_state()可以得到最新保存的模型参数,然后进行恢复加载,最后利用加载的参数进行预测。在测试代码中,不需要进行变量的初始化。

最后简单看一下,模型的准确率为99%。期望和大家交流与学习。