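Read two datasets: one holds each user's id, gender, and age; the other holds movie ratings with the user's uid, the movie id, and so on, but no other user information. The goal is to join the two.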

This post does the join in the reduce phase; it could also be done in the map phase.
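
For comparison, here is a minimal plain-Java sketch of the map-side idea, with no Hadoop classes involved. It assumes the users table is small enough to hold in memory (in a real job it would typically be shipped to every mapper, for example via the distributed cache). The class name `MapSideJoinSketch`, its methods, and the output layout are hypothetical and only illustrate the lookup.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of a map-side join in plain Java (no Hadoop classes).
class MapSideJoinSketch {

    // Build uid -> "gender::age" from lines shaped like "uid::gender::age::...".
    static Map<String, String> loadUsers(List<String> userLines) {
        Map<String, String> users = new HashMap<>();
        for (String line : userLines) {
            String[] f = line.split("::");
            users.put(f[0], f[1] + "::" + f[2]);
        }
        return users;
    }

    // Join each rating line "uid::movieId::rating::..." with its user, if any.
    static List<String> join(Map<String, String> users, List<String> ratingLines) {
        List<String> joined = new ArrayList<>();
        for (String line : ratingLines) {
            String[] f = line.split("::");
            String user = users.get(f[0]);
            if (user != null) { // inner join: ratings without a user are skipped
                joined.add(f[0] + "::" + user + "::" + f[1] + "::" + f[2]);
            }
        }
        return joined;
    }

    public static void main(String[] args) {
        Map<String, String> users = loadUsers(Arrays.asList("1::F::1::10::48067"));
        List<String> ratings = Arrays.asList("1::1193::5::978300760", "9::661::3::978302109");
        System.out.println(join(users, ratings)); // [1::F::1::1193::5]
    }
}
```

Because every rating is matched with a hash lookup inside the mapper, this variant needs no shuffle, but it only works when one side fits in memory.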

1.JoinBean

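I ran into an embarrassing problem today: readFields and write must handle the fields in exactly the same order, otherwise the data gets scrambled. It took me a long time to track down, so watch out for it.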
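
The ordering problem can be reproduced without Hadoop. The sketch below is an illustration only: `FieldOrderDemo` and its methods are hypothetical names, and it uses the standard `DataOutputStream`/`DataInputStream` classes to stand in for what a Writable sees. It writes two fields and then reads them back once in the same order and once swapped.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;

// Hypothetical demo class (not part of the original job): shows why the
// read order must mirror the write order when serializing field by field.
class FieldOrderDemo {

    // Serialize two fields in the order uid, age.
    static byte[] write(String uid, String age) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeUTF(uid);
            out.writeUTF(age);
        }
        return bytes.toByteArray();
    }

    // Correct: read in the same order as written. Returns {uid, age}.
    static String[] readSameOrder(byte[] data) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            String uid = in.readUTF();
            String age = in.readUTF();
            return new String[] {uid, age};
        }
    }

    // Wrong: reads age first, so the two values end up swapped.
    static String[] readSwappedOrder(byte[] data) throws IOException {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            String age = in.readUTF();
            String uid = in.readUTF();
            return new String[] {uid, age};
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] data = write("42", "25");
        System.out.println(String.join(",", readSameOrder(data)));    // 42,25
        System.out.println(String.join(",", readSwappedOrder(data))); // 25,42
    }
}
```

Nothing fails at runtime in the swapped case because both fields are strings; the values simply land in the wrong variables, which is why the bug is hard to spot.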

package nuc.edu.ls.extend;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.Writable;

// Value object passed from map to reduce. The table field records which
// input a record came from ("user" or "rating").
public class JoinBean implements Writable {

    private String uid;
    private String age;
    private String gender;
    private String movieId;
    private String rating;
    private String table;

    public String getUid() {
        return uid;
    }

    public void setUid(String uid) {
        this.uid = uid;
    }

    public String getAge() {
        return age;
    }

    public void setAge(String age) {
        this.age = age;
    }

    public String getGender() {
        return gender;
    }

    public void setGender(String gender) {
        this.gender = gender;
    }

    public String getMovieId() {
        return movieId;
    }

    public void setMovieId(String movieId) {
        this.movieId = movieId;
    }

    public String getRating() {
        return rating;
    }

    public void setRating(String rating) {
        this.rating = rating;
    }

    public String getTable() {
        return table;
    }

    public void setTable(String table) {
        this.table = table;
    }

    @Override
    public String toString() {
        return "JoinBean [uid=" + uid + ", age=" + age + ", gender=" + gender + ", movieId=" + movieId + ", rating="
                + rating + ", table=" + table + "]";
    }

    // Fields must be read in exactly the order that write() emits them.
    @Override
    public void readFields(DataInput in) throws IOException {
        uid = in.readUTF();
        age = in.readUTF();
        gender = in.readUTF();
        movieId = in.readUTF();
        rating = in.readUTF();
        table = in.readUTF();
    }

    // Fields must be written in exactly the order that readFields() expects.
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(uid);
        out.writeUTF(age);
        out.writeUTF(gender);
        out.writeUTF(movieId);
        out.writeUTF(rating);
        out.writeUTF(table);
    }

    // Convenience setter for all fields at once.
    public void set(String uid, String age, String gender, String movieId, String rating, String table) {
        this.uid = uid;
        this.age = age;
        this.gender = gender;
        this.movieId = movieId;
        this.rating = rating;
        this.table = table;
    }

}

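2.The job

The map phase keys every record by user id so that all records with the same uid end up together; uid is the only link between the two datasets.

The setup method looks up the name of the input file being read. Doing this in setup means it happens once per map task instead of once per record, which is more efficient.

In the reduce phase, once the user record has been found (identified by table, which is derived from the input file name), it can be joined to every movie record collected in the list.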
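
The reduce-side logic for a single uid can be modelled in plain Java as below. This is a sketch under assumptions, not the job's code: `ReduceJoinSketch` and `Rec` are hypothetical stand-ins for the reducer and JoinBean, the comma-separated output is made up for the example, and the sketch emits nothing when no user record is present, whereas the job in this post would still emit the ratings with empty user fields.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical plain-Java model of one reduce() call (no Hadoop classes).
class ReduceJoinSketch {

    // Minimal stand-in for JoinBean.
    static final class Rec {
        final String table, uid, age, gender, movieId, rating;

        Rec(String table, String uid, String age, String gender, String movieId, String rating) {
            this.table = table;
            this.uid = uid;
            this.age = age;
            this.gender = gender;
            this.movieId = movieId;
            this.rating = rating;
        }
    }

    // All records passed in share the same uid, as they would in one reduce() call.
    static List<String> reduce(List<Rec> values) {
        Rec user = null;
        List<Rec> ratings = new ArrayList<>();
        for (Rec r : values) {
            if ("user".equals(r.table)) {
                user = r;       // the single user record for this uid
            } else {
                ratings.add(r); // buffer ratings until the user is known
            }
        }
        List<String> out = new ArrayList<>();
        if (user == null) {
            return out;         // sketch-only choice: no user, nothing to join
        }
        for (Rec r : ratings) {
            out.add(r.uid + "," + user.age + "," + user.gender + "," + r.movieId + "," + r.rating);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Rec> values = Arrays.asList(
                new Rec("rating", "1", null, null, "1193", "5"),
                new Rec("user", "1", "1", "F", null, null),
                new Rec("rating", "1", null, null, "661", "3"));
        System.out.println(reduce(values)); // [1,1,F,1193,5, 1,1,F,661,3]
    }
}
```

The ratings have to be buffered because the user record can arrive at any position among the values for a key, so the join can only be emitted after the whole group has been scanned.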

package nuc.edu.ls.extend;

import java.io.File;
import java.io.IOException;
import java.util.ArrayList;

import org.apache.commons.io.FileUtils;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class JoinMR {

    public static class MapTask extends Mapper<LongWritable, Text, Text, JoinBean> {

        // Name of the input file this map task is reading.
        String table;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            // Runs once per map task, so the file name is looked up only once.
            FileSplit fileSplit = (FileSplit) context.getInputSplit();
            table = fileSplit.getPath().getName();
        }

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String[] split = value.toString().split("::");
            JoinBean jb = new JoinBean();
            if (table.startsWith("users")) {
                // User line: uid, gender, age. Movie fields get the placeholder "null".
                jb.set(split[0], split[2], split[1], "null", "null", "user");
            } else {
                // Rating line: uid, movieId, rating. User fields get the placeholder "null".
                jb.set(split[0], "null", "null", split[1], split[2], "rating");
            }
            // System.out.print(jb.getUid()+"\\");
            // System.out.println(jb);
            // Key by uid so that a user and all of that user's ratings meet in one reduce call.
            context.write(new Text(jb.getUid()), jb);
        }

    }

    public static class ReduceTask extends Reducer<Text, JoinBean, JoinBean, NullWritable> {

        @Override
        protected void reduce(Text key, Iterable<JoinBean> values, Context context)
                throws IOException, InterruptedException {
            JoinBean user = new JoinBean();
            ArrayList<JoinBean> list = new ArrayList<>();
            for (JoinBean joinBean2 : values) {
                String table = joinBean2.getTable();
                if ("user".equals(table)) {
                    user.set(joinBean2.getUid(), joinBean2.getAge(), joinBean2.getGender(), joinBean2.getMovieId(), joinBean2.getRating(), joinBean2.getTable());
                } else {
                    // Hadoop reuses the value object while iterating, so copy it before storing.
                    JoinBean joinBean3 = new JoinBean();
                    joinBean3.set(joinBean2.getUid(), joinBean2.getAge(), joinBean2.getGender(), joinBean2.getMovieId(), joinBean2.getRating(), joinBean2.getTable());
                    list.add(joinBean3);
                }
            }
            // Debug output
            System.out.print(key.toString()+"\\\\\\");
            System.out.println(user+"\\\\\\");
            // Join the data: copy the user's age and gender into each rating record
            for (JoinBean join : list) {
                join.setAge(user.getAge());
                join.setGender(user.getGender());
                context.write(join, NullWritable.get());
            }
        }

    }

    public static void main(String[] args) throws Exception {
        System.setProperty("HADOOP_USER_NAME", "root");
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf, "eclipseToCluster");
        job.setMapperClass(MapTask.class);
        job.setReducerClass(ReduceTask.class);
        job.setJarByClass(JoinMR.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(JoinBean.class);
        job.setOutputKeyClass(JoinBean.class);
        job.setOutputValueClass(NullWritable.class);
        // job.setCombinerClass(ReduceTask.class);
        FileInputFormat.addInputPath(job, new Path("d:/indata/"));
        FileOutputFormat.setOutputPath(job, new Path("d:/outdata/movie"));
        // Delete any previous output directory, since the job fails if it already exists.
        File file = new File("d:/outdata/movie");
        if(file.exists()) {
            FileUtils.deleteDirectory(file);
        }
        boolean completion = job.waitForCompletion(true);
        System.out.println(completion ? 0 : 1);
    }

}

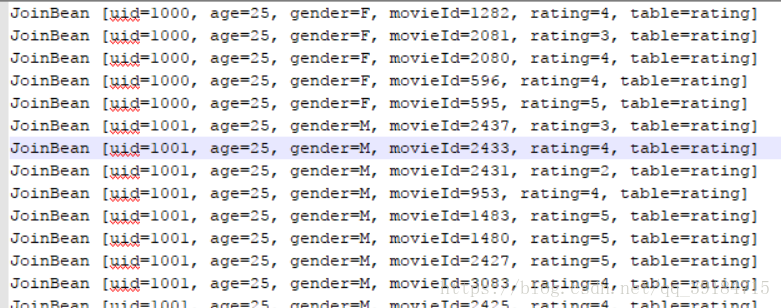

3.Result:

For nicer-looking output, change the toString method of JoinBean.
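
As one possible way to do that, the sketch below prints the joined fields as a single tab-separated line. `JoinedRecord` is a hypothetical, trimmed-down stand-in for JoinBean, and both the choice of tab as the delimiter and the decision to leave out the table field are assumptions of this example.

```java
// Hypothetical stand-in for JoinBean with a compact toString().
class JoinedRecord {
    private final String uid;
    private final String age;
    private final String gender;
    private final String movieId;
    private final String rating;

    JoinedRecord(String uid, String age, String gender, String movieId, String rating) {
        this.uid = uid;
        this.age = age;
        this.gender = gender;
        this.movieId = movieId;
        this.rating = rating;
    }

    // One tab-separated line per record, which is easy to load into other tools.
    @Override
    public String toString() {
        return String.join("\t", uid, age, gender, movieId, rating);
    }

    public static void main(String[] args) {
        System.out.println(new JoinedRecord("1", "1", "F", "1193", "5"));
    }
}
```

Since the job writes the bean as its output key through the default text output, whatever toString returns is what appears in the result file.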