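Copyright notice: "Picking chrysanthemums beneath the eastern fence, the southern mountain leisurely comes into view!" https://blog.csdn.net/ChaosJ/article/details/53106524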

MapTask Timeout Problem (1)

1. Description of the MapTask timeout problem

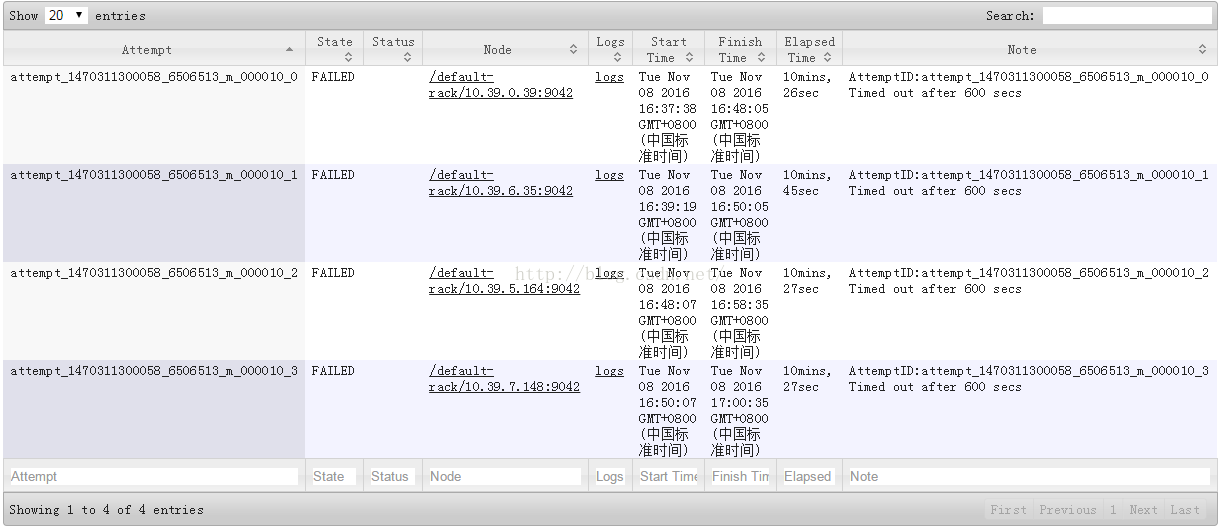

- A map task was retried four times, after which the job failed. The cause of the failure was a task timeout, as shown here: `AttemptID:attempt_1470311300058_6506513_m_000010_0 Timed out after 600 secs`

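- Description of the `mapred.task.timeout` parameter

The number of milliseconds before a task will be terminated if it neither reads an input, writes an output, nor updates its status string.

This parameter defaults to 600000 milliseconds (i.e. 600 seconds). This means that if the map or reduce method does not return or produce any output within 600 seconds, the TaskTracker considers the corresponding map or reduce task dead and reports the error to the JobTracker. The JobTracker then kills that attempt and re-executes it on another node.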
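The timeout rule described above can be sketched in a few lines of Java. This is only an illustration of the rule, not Hadoop's actual implementation: the class `TaskTimeoutSketch` and its methods are hypothetical names, and the strict "greater than" comparison is an assumption. It uses the documented default of 600000 ms, which matches the `Timed out after 600 secs` message in the error above.

```java
import java.util.concurrent.TimeUnit;

/** Illustrative only: mimics the timeout rule, not Hadoop's real code. */
final class TaskTimeoutSketch {
    // Default value of mapred.task.timeout: 600000 ms = 600 s.
    static final long DEFAULT_TIMEOUT_MS = 600_000L;

    private final long timeoutMs;
    private long lastProgressMs;

    TaskTimeoutSketch(long timeoutMs, long startMs) {
        this.timeoutMs = timeoutMs;
        this.lastProgressMs = startMs;
    }

    /** Call whenever the task reads input, writes output, or updates its status. */
    void reportProgress(long nowMs) {
        lastProgressMs = nowMs;
    }

    /** True once the task has been silent for longer than the timeout (assumed strict). */
    boolean isTimedOut(long nowMs) {
        return nowMs - lastProgressMs > timeoutMs;
    }

    public static void main(String[] args) {
        System.out.println(TimeUnit.MILLISECONDS.toSeconds(DEFAULT_TIMEOUT_MS) + " secs");
        TaskTimeoutSketch task = new TaskTimeoutSketch(DEFAULT_TIMEOUT_MS, 0L);
        task.reportProgress(100_000L);
        System.out.println(task.isTimedOut(650_000L)); // false: silent for 550000 ms
        System.out.println(task.isTimedOut(750_000L)); // true: silent for 650000 ms
    }
}
```

A task that is stuck inside a single record never calls anything like `reportProgress`, so it is eventually killed no matter how the rest of the split would have behaved.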

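2. Problem analysis

2.1 Possible causes of the problem

- The processing program contains an infinite loop

- Network problems that cause packet loss

2.2 Detailed analysis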

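- The application was resubmitted several times and the problem reproduced every time, so a network problem is unlikely to be the cause; even if the cluster did have network problems, they would not reproduce this reliably;

- On every resubmission, the stuck map task was reading the same file: `hdfs://ns1/user/sinadata/warehouse/f_tblog_hotwordsv3_classify_preprocess3/dt=20161107/hour=10/000004_0`, so this data file may be the problem;

- Repeated jstack dumps of the stuck map task show the process always stuck at the place shown below (the topmost Hive frame is `org.apache.hadoop.hive.ql.udf.UDFLike.evaluate`, which calls `java.util.regex.Matcher.matches`). The analysis suggests that the UDF may contain an infinite loop, i.e. abnormal data drives the UDF into an infinite loop! To take the dump, use `ps -ef | grep application_1470311300058_6589239` to find the pid of the map task process, then run `jstack -F pid`: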

"main" prio=10 tid=0x00007fa9a800f800 nid=0x1bac runnable [0x00007fa9ae992000]

java.lang.Thread.State: RUNNABLE

at java.util.regex.Pattern$CharProperty.match(Pattern.java:3694)

at java.util.regex.Pattern$Curly.match0(Pattern.java:4158)

at java.util.regex.Pattern$Curly.match(Pattern.java:4132)

at java.util.regex.Pattern$Curly.match0(Pattern.java:4170)

at java.util.regex.Pattern$Curly.match(Pattern.java:4132)

at java.util.regex.Pattern$Slice.match(Pattern.java:3870)

at java.util.regex.Pattern$Curly.match0(Pattern.java:4170)

at java.util.regex.Pattern$Curly.match(Pattern.java:4132)

at java.util.regex.Pattern$Curly.match0(Pattern.java:4170)

at java.util.regex.Pattern$Curly.match(Pattern.java:4132)

at java.util.regex.Pattern$Slice.match(Pattern.java:3870)

at java.util.regex.Pattern$Curly.match0(Pattern.java:4170)

at java.util.regex.Pattern$Curly.match(Pattern.java:4132)

at java.util.regex.Matcher.match(Matcher.java:1221)

at java.util.regex.Matcher.matches(Matcher.java:559)

at org.apache.hadoop.hive.ql.udf.UDFLike.evaluate(UDFLike.java:192)

at sun.reflect.GeneratedMethodAccessor4.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.hive.ql.exec.FunctionRegistry.invoke(FunctionRegistry.java:1219)

at org.apache.hadoop.hive.ql.udf.generic.GenericUDFBridge.evaluate(GenericUDFBridge.java:182)

at org.apache.hadoop.hive.ql.exec.ExprNodeGenericFuncEvaluator._evaluate(ExprNodeGenericFuncEvaluator.java:166)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluator.evaluate(ExprNodeEvaluator.java:77)

at org.apache.hadoop.hive.ql.exec.ExprNodeGenericFuncEvaluator$DeferredExprObject.get(ExprNodeGenericFuncEvaluator.java:77)

at org.apache.hadoop.hive.ql.udf.generic.GenericUDFOPOr.evaluate(GenericUDFOPOr.java:69)

at org.apache.hadoop.hive.ql.exec.ExprNodeGenericFuncEvaluator._evaluate(ExprNodeGenericFuncEvaluator.java:166)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluator.evaluate(ExprNodeEvaluator.java:77)

at org.apache.hadoop.hive.ql.exec.ExprNodeGenericFuncEvaluator$DeferredExprObject.get(ExprNodeGenericFuncEvaluator.java:77)

at org.apache.hadoop.hive.ql.udf.generic.GenericUDFWhen.evaluate(GenericUDFWhen.java:75)

at org.apache.hadoop.hive.ql.exec.ExprNodeGenericFuncEvaluator._evaluate(ExprNodeGenericFuncEvaluator.java:166)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluator.evaluate(ExprNodeEvaluator.java:77)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluatorHead._evaluate(ExprNodeEvaluatorHead.java:44)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluator.evaluate(ExprNodeEvaluator.java:77)

at org.apache.hadoop.hive.ql.exec.ExprNodeEvaluator.evaluate(ExprNodeEvaluator.java:65)

at org.apache.hadoop.hive.ql.exec.SelectOperator.processOp(SelectOperator.java:79)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:793)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:92)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:793)

at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:540)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:177)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:430)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:342)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:167)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1550)

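at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

- Another piece of evidence that the program is stuck in an infinite loop: the process's CPU usage stays close to 100%, as seen with `top -p pid`.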
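The stack trace above shows the thread inside `java.util.regex.Matcher.matches`. One way to check offline whether a suspect record makes a given pattern run for a very long time is to give the match a time budget. The following is a minimal sketch under stated assumptions: `TimeBoxedRegex`, `matchesWithin`, and `RegexTimeoutException` are hypothetical names that are not part of Hive or the JDK, and the trick relies on the regex engine reading its input through `CharSequence.charAt`.

```java
import java.util.regex.Pattern;

/** Hypothetical helper: full-string regex matching with a time budget. */
final class TimeBoxedRegex {

    /** Thrown when a match runs past its deadline. */
    static final class RegexTimeoutException extends RuntimeException {
        RegexTimeoutException(String message) {
            super(message);
        }
    }

    /** Wraps the input so that every character access checks the deadline. */
    private static final class DeadlineCharSequence implements CharSequence {
        private final CharSequence inner;
        private final long deadlineNanos;

        DeadlineCharSequence(CharSequence inner, long deadlineNanos) {
            this.inner = inner;
            this.deadlineNanos = deadlineNanos;
        }

        @Override
        public char charAt(int index) {
            // A match that keeps backtracking keeps calling charAt,
            // so it eventually reaches this check and is aborted.
            if (System.nanoTime() - deadlineNanos >= 0) {
                throw new RegexTimeoutException("regex match exceeded its time budget");
            }
            return inner.charAt(index);
        }

        @Override
        public int length() {
            return inner.length();
        }

        @Override
        public CharSequence subSequence(int start, int end) {
            return new DeadlineCharSequence(inner.subSequence(start, end), deadlineNanos);
        }

        @Override
        public String toString() {
            return inner.toString();
        }
    }

    /** Like Matcher.matches(), but throws RegexTimeoutException after budgetMillis. */
    static boolean matchesWithin(Pattern pattern, CharSequence input, long budgetMillis) {
        long deadlineNanos = System.nanoTime() + budgetMillis * 1_000_000L;
        return pattern.matcher(new DeadlineCharSequence(input, deadlineNanos)).matches();
    }
}
```

Calling `TimeBoxedRegex.matchesWithin(pattern, record, 1000L)` on each suspect record, and logging the records that throw, narrows the search without waiting 600 seconds for every attempt. Checking the clock on every character access adds overhead, so this is meant for diagnosis rather than for the production job.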

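3. Solutions

- Use bisection to split the problematic data, resubmitting repeatedly until the offending record is found;

- Print the data being processed inside the UDF to see which record it gets stuck on;

- Increasing the `mapred.task.timeout` value is not the way to fix this problem!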
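The bisection approach from the first bullet can be sketched as follows. This is a minimal illustration, not the procedure actually used on the cluster: `BadRecordBisect`, `findBadRecord`, and `failsOn` are hypothetical names, and `failsOn` stands in for "resubmit the job on this subset and see whether it times out". It assumes the failure is deterministic and that a subset fails exactly when it contains a bad record.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

/** Hypothetical helper: locates a bad record by repeatedly halving the data. */
final class BadRecordBisect {

    /**
     * Returns the index of a bad record.
     * Precondition (assumed): records is non-empty and failsOn.test(records) is true.
     */
    static <T> int findBadRecord(List<T> records, Predicate<List<T>> failsOn) {
        int lo = 0;
        int hi = records.size(); // invariant: a bad record lies in [lo, hi)
        while (hi - lo > 1) {
            int mid = lo + (hi - lo) / 2;
            if (failsOn.test(records.subList(lo, mid))) {
                hi = mid; // the first half still fails, keep it
            } else {
                lo = mid; // otherwise the bad record is in the second half
            }
        }
        return lo;
    }

    public static void main(String[] args) {
        List<String> records = Arrays.asList("ok1", "ok2", "BAD", "ok3", "ok4");
        // Stand-in for "run the job on this subset and check for a timeout".
        int index = findBadRecord(records, subset -> subset.contains("BAD"));
        System.out.println(index); // prints 2
    }
}
```

Each call to `failsOn` costs one job run, so a file with n records needs about log2(n) resubmissions. Combining this with a short per-run time limit keeps each round cheaper than waiting for the full 600-second timeout.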