Building an LVS-DR + keepalived cluster: a worked example

I. Hands-on: an ipvs-based cluster

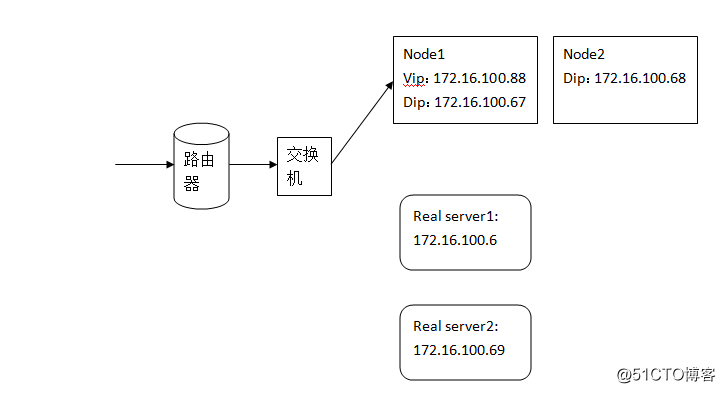

Cluster topology

node1:172.16.100.67

node2:172.16.100.68

VIP:172.16.100.88

RS1:172.16.100.6

RS2:172.16.100.69

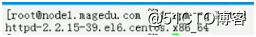

1. Check and configure the web service on real server 1 (172.16.100.6).

~] rpm -q httpd

~] echo "<h1>RS1 CentOS 6</h1>" > /var/www/html/index.html

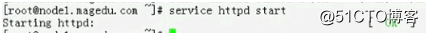

Start the Apache service.

~] service httpd start

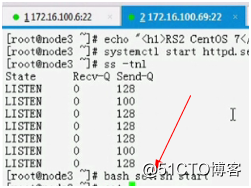

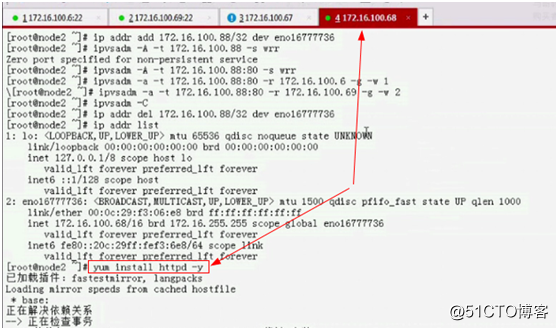

2. Check and configure the web service on real server 2 (172.16.100.69).

~] rpm -q httpd

The check shows that httpd is not installed on RS2, so install it.

~] yum install httpd -y

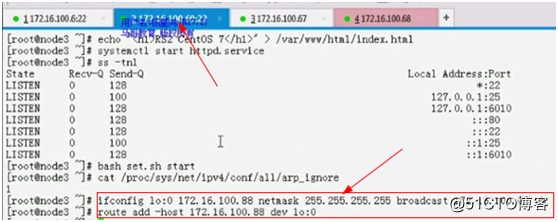

~] echo "<h1>RS2 CentOS 7</h1>" > /var/www/html/index.html

Start the Apache service.

~] systemctl start httpd.service

Check that the service is listening.

~] ss -tnl

3. Install ipvsadm on the director.

Make sure ipvs support is present. (No check is needed here, because the kernel already includes ipvs.)

Install ipvsadm.

~] yum install ipvsadm -y
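If you do want to confirm ipvs support rather than take it on trust, one option is to look for `CONFIG_IP_VS` in the kernel build config. The sketch below is only an illustration: the function names `has_ipvs_support` and `report_ipvs` are made up here, and the default path `/boot/config-$(uname -r)` is an assumption about where the distribution keeps its kernel config.

```shell
#!/bin/sh
# Report whether a kernel build config enables IPVS.
# $1: path to a kernel config file. The default path below is an
#     assumption about the distribution layout.
has_ipvs_support() {
    cfg="${1:-/boot/config-$(uname -r)}"
    # CONFIG_IP_VS=y means built in, =m means available as a module.
    grep -Eq '^CONFIG_IP_VS=(y|m)$' "$cfg"
}

# Print a one-line verdict for a given config file.
report_ipvs() {
    if has_ipvs_support "$1"; then
        echo "ipvs: available"
    else
        echo "ipvs: not found"
    fi
}
```

Calling `report_ipvs` with no argument checks the running kernel's config.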

4. Configure the VIP address on director1.

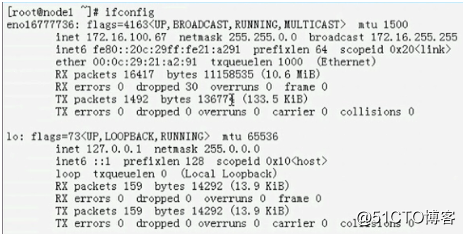

~] ifconfig

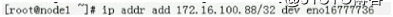

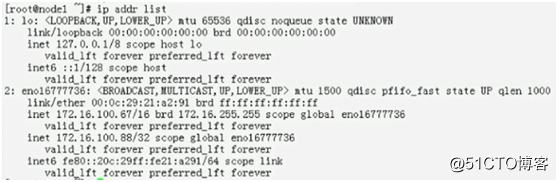

~] ip addr add 172.16.100.88/32 dev eno16777736

~] ip addr list

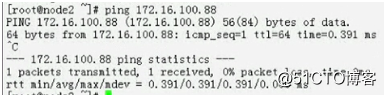

5. From node2, check that the VIP on node1 (director1) is reachable.

~] ping 172.16.100.88

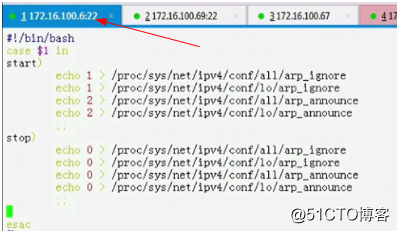

6. Configure arp_announce and arp_ignore on real server 1.

On real server 1, write a script that sets the two kernel parameters arp_announce and arp_ignore.

~] vim set.sh

#!/bin/bash

case $1 in

start)

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

stop)

echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore

echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce

echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

;;

esac

Run the script.

~] bash set.sh start

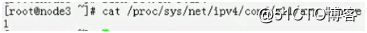

Check that the kernel parameters were changed.

~] cat /proc/sys/net/ipv4/conf/all/arp_ignore

~] cat /proc/sys/net/ipv4/conf/all/arp_announce

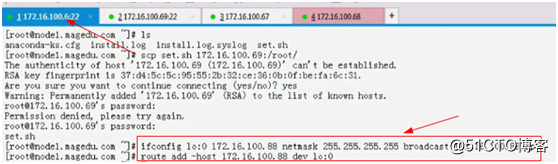

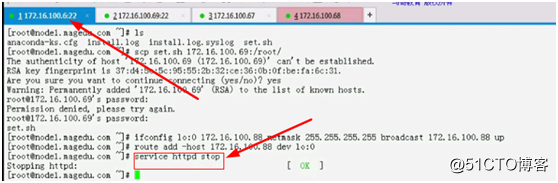
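The script sets four values, but the two `cat` commands only read back the `all` scope. As a rough sketch, a small helper can print all four at once. The name `show_arp_params` and its optional base-directory argument are illustrative additions, not part of the original procedure; the argument exists only so the function can be tried against a scratch directory.

```shell
#!/bin/sh
# Print arp_ignore and arp_announce for the "all" and "lo" scopes.
# $1: base directory (defaults to the real procfs path).
show_arp_params() {
    base="${1:-/proc/sys/net/ipv4/conf}"
    for scope in all lo; do
        for key in arp_ignore arp_announce; do
            printf '%s/%s=%s\n' "$scope" "$key" "$(cat "$base/$scope/$key")"
        done
    done
}
```

After `bash set.sh start`, every `arp_ignore` line should read 1 and every `arp_announce` line should read 2.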

7. Copy the set.sh script to the other real server, RS2.

~] scp set.sh 172.16.100.69:/root/

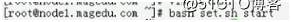

8. On RS2 (172.16.100.69), run set.sh to change the kernel parameters.

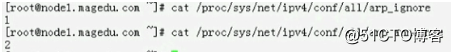

~] bash set.sh start

Verify that the kernel parameters were changed.

~] cat /proc/sys/net/ipv4/conf/all/arp_ignore

9. Configure the VIP on RS1 (172.16.100.6).

~] ifconfig lo:0 172.16.100.88 netmask 255.255.255.255 broadcast 172.16.100.88 up

~] route add -host 172.16.100.88 dev lo:0

10. Configure the VIP on RS2 (172.16.100.69).

~] ifconfig lo:0 172.16.100.88 netmask 255.255.255.255 broadcast 172.16.100.88 up

~] route add -host 172.16.100.88 dev lo:0

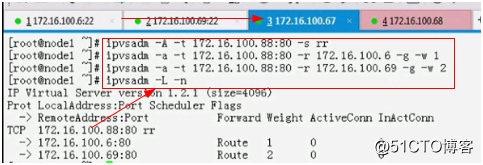
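The ifconfig and route tools come from net-tools, which may not be installed on a CentOS 7 host such as RS2. The sketch below prints what I believe are the corresponding iproute2 commands; treat the mapping as an assumption to verify on your own system. The function name `rs_vip_commands` is hypothetical, and the function only prints the commands so it is safe to try without root.

```shell
#!/bin/sh
# Print (not run) iproute2 commands that bind the VIP to loopback on a
# real server.
# $1: VIP address (default taken from this walkthrough)
rs_vip_commands() {
    vip="${1:-172.16.100.88}"
    echo "ip addr add $vip/32 broadcast $vip dev lo label lo:0"
    echo "ip route add $vip/32 dev lo"
}
```

Review the printed lines first; to apply them, pipe the output to `sh` as root.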

11. Add the ipvs rules on node1 (172.16.100.67). (-s selects the scheduling algorithm; -g selects the DR forwarding type.)

~] ipvsadm -A -t 172.16.100.88:80 -s rr

~] ipvsadm -a -t 172.16.100.88:80 -r 172.16.100.6 -g -w 1

~] ipvsadm -a -t 172.16.100.88:80 -r 172.16.100.69 -g -w 2

List the ipvs rules.

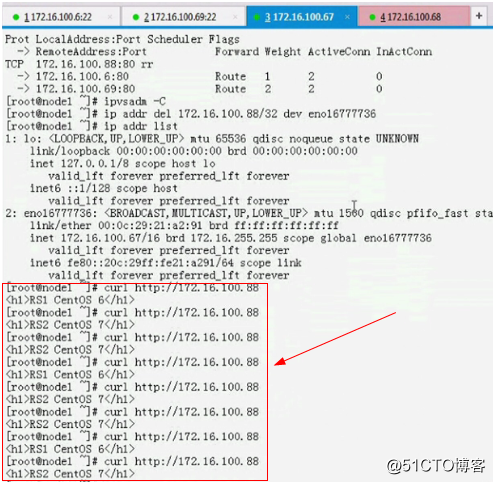

~] ipvsadm -Ln

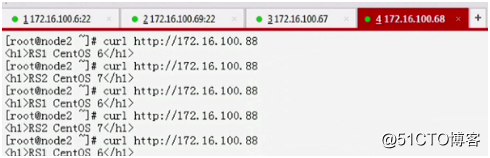

12. Use node2 as a client and request the VIP to see the result.

~] curl http://172.16.100.88

This confirms that the first director, director1 (node1), works.

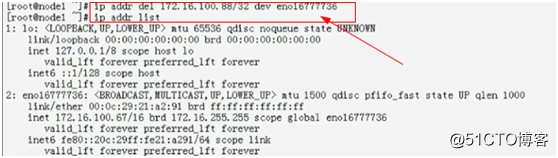

13. On node1, flush the ipvs rules and remove the VIP from interface eno16777736.

~] ipvsadm -C

~] ip addr del 172.16.100.88/32 dev eno16777736

~] ip addr list

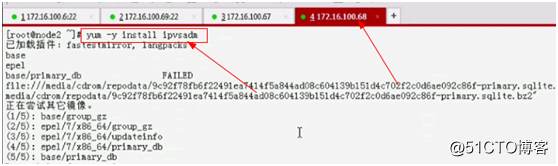

14. Set up the second director, director2 (node2, 172.16.100.68).

~] yum -y install ipvsadm

15. Configure the VIP on node2.

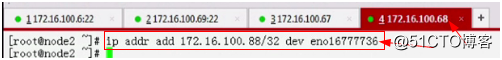

~] ip addr add 172.16.100.88/32 dev eno16777736

16. Create the ipvs rules.

~] ipvsadm -A -t 172.16.100.88:80 -s wrr

~] ipvsadm -a -t 172.16.100.88:80 -r 172.16.100.6 -g -w 1

~] ipvsadm -a -t 172.16.100.88:80 -r 172.16.100.69 -g -w 2
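The same three rules are typed by hand on each director, so it can help to generate them once. The sketch below emits the rules in the line format that `ipvsadm -R` (restore) reads from stdin. The function name `gen_ipvs_rules` and its `address:weight` argument convention are assumptions made for this example only.

```shell
#!/bin/sh
# Emit IPVS rules in the line format that `ipvsadm -R` reads from stdin.
# $1: virtual service as VIP:port
# $2: scheduler (rr, wrr, ...)
# remaining args: real servers as address:weight
gen_ipvs_rules() {
    vs="$1"; sched="$2"; shift 2
    echo "-A -t $vs -s $sched"
    for rs in "$@"; do
        addr="${rs%%:*}"     # text before the first colon
        weight="${rs##*:}"   # text after the last colon
        # Real servers reuse the virtual service port; -g selects DR.
        echo "-a -t $vs -r $addr:${vs##*:} -g -w $weight"
    done
}
```

For the rules in this step, the call would be `gen_ipvs_rules 172.16.100.88:80 wrr 172.16.100.6:1 172.16.100.69:2 | ipvsadm -R`, run as root on the director.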

17. From node1, check that load balancing on node2 (172.16.100.68) works.

~] curl http://172.16.100.88

This confirms that director2 works.

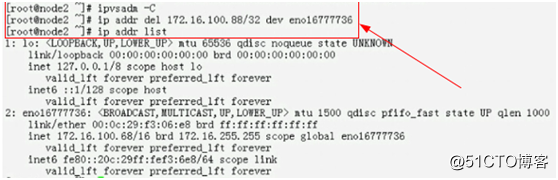
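A single curl shows only one response. To see how requests are spread, repeat the request and count the distinct pages returned. With the wrr weights of 1 and 2 from step 16, one would expect RS2 to answer roughly twice as often as RS1. The helper name `tally` below is made up for this sketch.

```shell
#!/bin/sh
# Count how many times each distinct response line appears on stdin.
# Output: "<count> <line>", most frequent first.
tally() {
    sort | uniq -c | sort -rn |
        awk '{ n = $1; $1 = ""; sub(/^ /, ""); print n, $0 }'
}
```

A possible invocation from the client node: `for i in 1 2 3 4 5 6; do curl -s http://172.16.100.88; done | tally`.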

18. On node2, flush the ipvs rules and remove the VIP from interface eno16777736.

~] ipvsadm -C

~] ip addr del 172.16.100.88/32 dev eno16777736

~] ip addr list

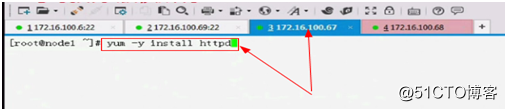

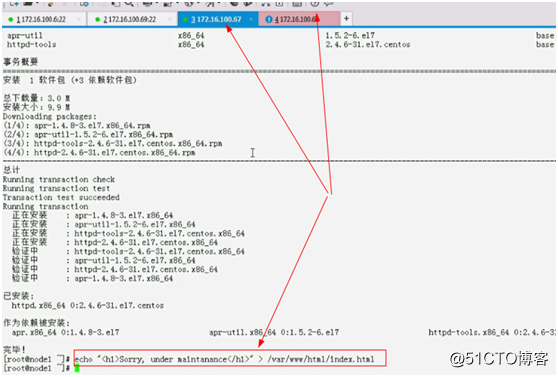

19. Both nodes need to act as a sorry server, so install httpd on both of them.

~] yum -y install httpd

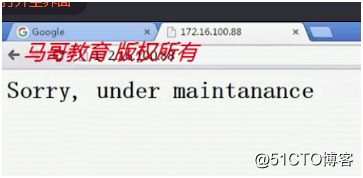

20. Give each node its own sorry page.

root@node1 ~] echo "<h1>Sorry, under maintenance</h1>" > /var/www/html/index.html

root@node2 ~] echo "<h1>Sorry, under maintenance(68)</h1>" > /var/www/html/index.html

21. Start the Apache service on both nodes.

Start Apache on node1 (172.16.100.67).

root@node1 ~] systemctl start httpd.service

Start Apache on node2 (172.16.100.68).

root@node2 ~] systemctl start httpd.service

22. Install keepalived on both nodes.

root@node1 ~] yum install keepalived -y

root@node2 ~] yum install keepalived -y

23. Back up the keepalived.conf file on both nodes.

root@node1 ~] cd /etc/keepalived/

root@node1 ~] cp keepalived.conf{,.backup}

root@node2 ~] cd /etc/keepalived/

root@node2 ~] cp keepalived.conf{,.backup}

24. Edit keepalived.conf and the notify.sh script on node1.

The keepalived.conf file on node1:

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_connect_timeout 3

smtp_server 127.0.0.1

router_id LVS_DEVEL

}

vrrp_script chk_mantaince_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1 # run the check every 1 second

weight -2 # while /etc/keepalived/down exists, lower the priority by 2

}

vrrp_instance VI_1 {

interface eno16777736

state MASTER # BACKUP for slave routers

priority 101 # 100 for BACKUP

virtual_router_id 51

garp_master_delay 1

advert_int 1

authentication {

auth_type PASS

auth_pass 2231da37af98 # random string generated with: openssl rand -hex 6

}

virtual_ipaddress {

172.16.100.88/32 dev eno16777736 label eno16777736:1

}

track_script {

chk_mantaince_down

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 172.16.100.88 80 {

delay_loop 6 # interval between health checks, in seconds

lb_algo wrr

lb_kind DR

nat_mask 255.255.0.0

persistence_timeout 50 # persistent connections: a client stays on the same RS for 50 seconds

protocol TCP

sorry_server 127.0.0.1 80 # the node's own sorry server, used when every RS is down

real_server 172.16.100.6 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 172.16.100.69 80 {

weight 2

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
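A nested configuration like this is easy to break with a missing or extra brace when typing it in by hand. As a rough sanity check before starting the service, the sketch below compares the number of opening and closing braces in a file. The function name `check_braces` is made up here, and the check is deliberately naive: it also counts braces that appear inside comments or quoted strings, so it is not a substitute for a real parser.

```shell
#!/bin/sh
# Compare the number of "{" and "}" characters in a config file.
# Prints "balanced" or "unbalanced: <open> open, <close> close".
# $1: path to the file to check
check_braces() {
    open=$(tr -cd '{' < "$1" | wc -c | tr -d ' ')
    close=$(tr -cd '}' < "$1" | wc -c | tr -d ' ')
    if [ "$open" -eq "$close" ]; then
        echo "balanced"
    else
        echo "unbalanced: $open open, $close close"
    fi
}
```

For example: `check_braces /etc/keepalived/keepalived.conf`.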

Edit the /etc/keepalived/notify.sh script on node1.

#!/bin/bash

vip=172.16.100.88

contact='root@localhost'

notify() {

mailsubject="$(hostname) to be $1: $vip floating"

mailbody="$(date '+%F %H:%M:%S'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case "$1" in

master)

notify master

exit 0

;;

backup)

notify backup

exit 0

;;

fault)

notify fault

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac

Make notify.sh executable.

~] chmod +x notify.sh

25. Edit keepalived.conf and the notify.sh script on node2.

The keepalived.conf file on node2:

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

[email protected]

}

notification_email_from [email protected]

smtp_connect_timeout 3

smtp_server 127.0.0.1

router_id LVS_DEVEL

}

vrrp_script chk_mantaince_down {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1 # run the check every 1 second

weight -2 # while /etc/keepalived/down exists, lower the priority by 2

}

vrrp_instance VI_1 {

interface eno16777736

state BACKUP # BACKUP for slave routers

priority 100 # 100 for BACKUP

virtual_router_id 51

garp_master_delay 1

advert_int 1

authentication {

auth_type PASS

auth_pass 2231da37af98 # random string generated with: openssl rand -hex 6

}

virtual_ipaddress {

172.16.100.88/32 dev eno16777736 label eno16777736:1

}

track_script {

chk_mantaince_down

}

notify_master "/etc/keepalived/notify.sh master"

notify_backup "/etc/keepalived/notify.sh backup"

notify_fault "/etc/keepalived/notify.sh fault"

}

virtual_server 172.16.100.88 80 {

delay_loop 6 # interval between health checks, in seconds

lb_algo wrr

lb_kind DR

nat_mask 255.255.0.0

persistence_timeout 50 # persistent connections: a client stays on the same RS for 50 seconds

protocol TCP

sorry_server 127.0.0.1 80 # the node's own sorry server, used when every RS is down

real_server 172.16.100.6 80 {

weight 1

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 172.16.100.69 80 {

weight 2

HTTP_GET {

url {

path /

status_code 200

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

Edit the /etc/keepalived/notify.sh script on node2.

#!/bin/bash

vip=172.16.100.88

contact='root@localhost'

notify() {

mailsubject="$(hostname) to be $1: $vip floating"

mailbody="$(date '+%F %H:%M:%S'): vrrp transition, $(hostname) changed to be $1"

echo "$mailbody" | mail -s "$mailsubject" "$contact"

}

case "$1" in

master)

notify master

exit 0

;;

backup)

notify backup

exit 0

;;

fault)

notify fault

exit 0

;;

*)

echo "Usage: $(basename "$0") {master|backup|fault}"

exit 1

;;

esac

Make notify.sh executable.

~] chmod +x notify.sh

26. Start the keepalived service on both nodes.

root@node1 ~] systemctl start keepalived.service

root@node2 ~] systemctl start keepalived.service

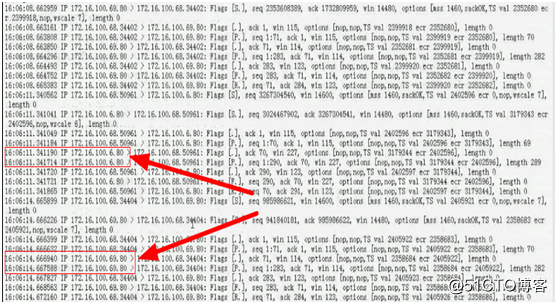

27. Check that keepalived is working on node1.

Run tcpdump on node1 (172.16.100.67).

~] tcpdump -i eno16777736 -nn host 172.16.100.68

The capture shows health checks being made against RS1 (172.16.100.6) and RS2 (172.16.100.69), which indicates that the keepalived service is running.

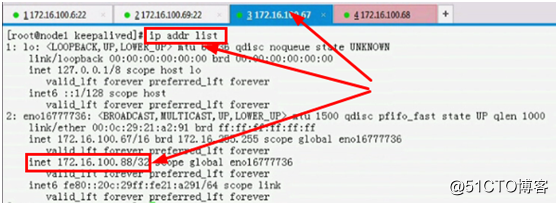

On node1, use ip addr list to check the VIP.

root@node1 ~] ip addr list

Then use ipvsadm -Ln to check that the ipvs rules were generated.

root@node1 ~] ipvsadm -Ln

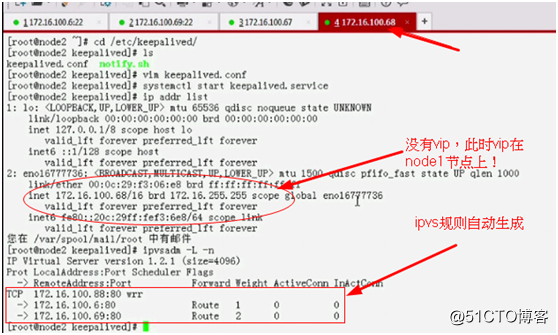

28. Check the result of keepalived on node2.

On node2, use ip addr list to check the VIP, and ipvsadm -Ln to check the ipvs rules.

root@node2 ~] ip addr list

root@node2 ~] ipvsadm -Ln
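Reading the full `ip addr list` output on two nodes to find one address gets tedious. The sketch below filters that output for the VIP. The function name `has_vip` is an invention for this example, and it assumes the usual `inet <address>/<prefix>` layout of `ip addr` output.

```shell
#!/bin/sh
# Read `ip addr list` output on stdin and report whether the VIP is bound.
# $1: VIP address to look for
has_vip() {
    # -F matches the text literally. The trailing "/" prevents a search
    # for 172.16.100.8 from matching 172.16.100.88.
    if grep -Fq "inet $1/"; then
        echo "VIP present"
    else
        echo "VIP absent"
    fi
}
```

Usage: `ip addr list | has_vip 172.16.100.88`. While node1 is MASTER, this should report the VIP as present on node1 and absent on node2.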

29. Use mail to check that the custom notify script delivered its messages.

root@node1 ~] mail

root@node2 ~] mail

The mailbox shows that the notify mails were delivered.

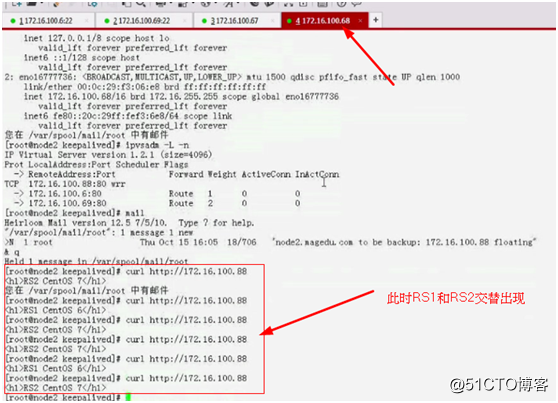

30. From node2, access the VIP to check that the cluster works.

root@node2 ~] curl http://172.16.100.88

31. Now take RS1 (172.16.100.6) offline and see how requests are distributed.

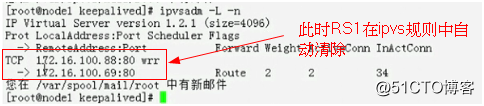

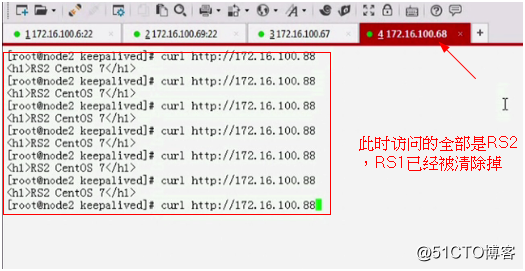

root@RS1 ~] service httpd stop

On node1 (MASTER), list the ipvs rules and check whether RS1 has been removed automatically.

root@node1 ~] ipvsadm -Ln

On node2, run curl to see the effect.

root@node2 ~] curl http://172.16.100.88

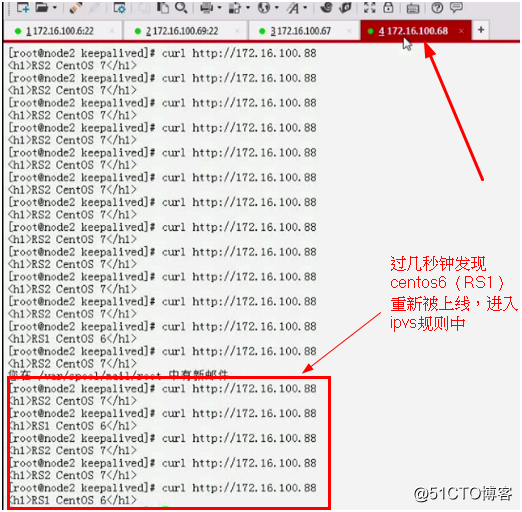

32. Now bring RS1 (172.16.100.6) back online, and run curl on node2 again to check whether RS1 has been added back to the ipvs rules.

root@node2 ~] curl http://172.16.100.88
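Whether a real server was removed or re-added is easiest to confirm on the director itself. As a sketch, the helper below extracts just the real-server addresses from `ipvsadm -Ln` output. The name `list_real_servers` is hypothetical, and the parsing assumes the usual table layout in which real-server rows begin with `->`.

```shell
#!/bin/sh
# Read `ipvsadm -Ln` output on stdin and print each real server address.
list_real_servers() {
    # Real-server rows start with "->"; the header row has the literal
    # text "RemoteAddress:Port" in the second column and is skipped.
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2 }'
}
```

Running `ipvsadm -Ln | list_real_servers` on the MASTER before and after stopping httpd on RS1 should show 172.16.100.6:80 disappear and later return.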

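33. When every RS is down, the node should answer with its sorry server page. The sorry server is defined inside the virtual_server block.

34. Take node1 out of service and check whether the VIP moves. You can either stop the keepalived service, or create the file /etc/keepalived/down on node1 so that the VIP moves from the MASTER to the BACKUP. Then delete the down file on node1 and watch the VIP move back. This verifies that the HA setup works.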
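The reason the down file moves the VIP is simple arithmetic on the VRRP priorities. node1 has priority 101 and node2 has 100. While the tracked script fails, its weight of -2 is added to node1's priority, giving 99, which is lower than node2's 100. The sketch below just reproduces that calculation; the function name `effective_priority` is an illustration, not a keepalived command.

```shell
#!/bin/sh
# Compute a node's effective VRRP priority under a tracked script with a
# negative weight: the weight is added only while the script is failing.
# $1: configured priority, $2: script weight, $3: 1 if failing, else 0
effective_priority() {
    if [ "$3" -eq 1 ]; then
        echo $(( $1 + $2 ))
    else
        echo "$1"
    fi
}
```

With the down file present, `effective_priority 101 -2 1` gives 99, so node2 takes over the VIP. Once the file is deleted, node1 returns to 101 and takes the VIP back.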

35. Stop httpd on both real servers, then access the VIP (172.16.100.88) and check that the sorry server answers.