This post is based mainly on the work of another blogger, https://blog.csdn.net/hellohaibo, whom I would like to thank here.

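First of all, my Caffe installation broke today, with no warning at all. I wanted to build a larger dataset, but every time I generated the LMDB, the result was a folder with no name, even though I had written the file name under the designated example directory. Searching Baidu turned up no answer, so my first guess was that Caffe itself had broken...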

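So I reinstalled it, and that was a real trap: errors I had never seen before all showed up, and I ended up asking people abroad for help online... a genuinely painful experience.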

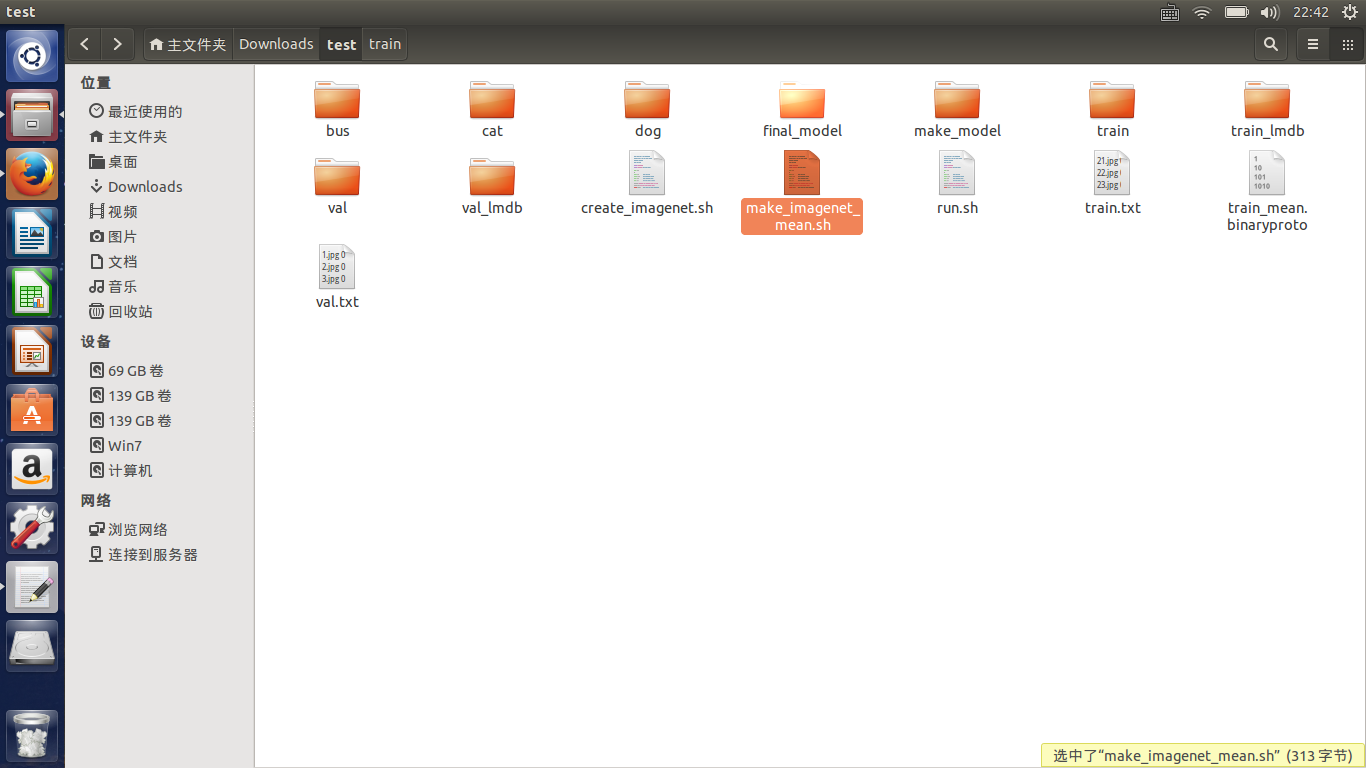

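Once Caffe is reinstalled, we can train on our own dataset. The previous chapter covered how to build your own dataset, so I skip that here and go straight to generating the mean file. It is simple: find the script make_imagenet_mean.sh in the Caffe source tree (it sits under examples/imagenet/) and copy it into your own test directory. My working directory is /home/f/Downloads/test.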

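Modify the script as follows:

```sh
#!/usr/bin/env sh
# Compute the mean image from the training lmdb
# (adapted from caffe/examples/imagenet/make_imagenet_mean.sh)

EXAMPLE=/home/f/Downloads/test
DATA=/home/f/Downloads/test
TOOLS=/home/f/Downloads/caffe/build/tools

# The trailing backslash continues the command, so no blank line may follow it
$TOOLS/compute_image_mean $EXAMPLE/train_lmdb \
  $DATA/train_mean.binaryproto

echo "Done."
```

Then run the script from a terminal; it produces the mean file train_mean.binaryproto.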

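Copy one of the models under caffe/models into our test directory (the configuration below is CaffeNet's) and modify the following parameters. First, in the train_val prototxt, change the first two layers to: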

```protobuf
name: "CaffeNet"
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TRAIN
  }
  transform_param {
    mirror: true
    crop_size: 227
    mean_file: "/home/f/Downloads/test/train_mean.binaryproto"
  }
# mean pixel / channel-wise mean instead of mean image
#  transform_param {
#    crop_size: 227
#    mean_value: 104
#    mean_value: 117
#    mean_value: 123
#    mirror: true
#  }
  data_param {
    source: "/home/f/Downloads/test/train_lmdb"
    batch_size: 10
    backend: LMDB
  }
}
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  include {
    phase: TEST
  }
  transform_param {
    mirror: false
    crop_size: 227
    mean_file: "/home/f/Downloads/test/train_mean.binaryproto"
  }
# mean pixel / channel-wise mean instead of mean image
#  transform_param {
#    crop_size: 227
#    mean_value: 104
#    mean_value: 117
#    mean_value: 123
#    mirror: false
#  }
  data_param {
    source: "/home/f/Downloads/test/val_lmdb"
    batch_size: 5
    backend: LMDB
  }
}
```

The changes here are to the paths: `source` is the directory of the LMDB, and `mean_file` is the path of the mean file.

Set batch_size according to the size of your dataset; I only have a few hundred images, so I set it quite small.

Next, modify the final output layer in the same file:

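```protobuf
layer {
  name: "fc8"
  type: "InnerProduct"
  bottom: "fc7"
  top: "fc8"
  # ... any other fields of fc8 stay as they were ...
  inner_product_param {
    num_output: 3
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0
    }
  }
}
layer {
  name: "accuracy"
  type: "Accuracy"
  bottom: "fc8"
  bottom: "label"
  top: "accuracy"
  include {
    phase: TEST
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "fc8"
  bottom: "label"
  top: "loss"
}
```

Change num_output to your number of classes (3 in my case).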

In the deploy prototxt, only the output of the last layer needs to change, in the same way:

```protobuf
layer {
  name: "fc8"
  type: "InnerProduct"
  bottom: "fc7"
  top: "fc8"
  inner_product_param {
    num_output: 3
  }
}
layer {
  name: "prob"
  type: "Softmax"
  bottom: "fc8"
  top: "prob"
}
```

Then modify the solver file; mine looks like this:

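```protobuf
net: "/home/f/Downloads/test/make_model/train_val.prototxt"
test_iter: 24
test_interval: 50
base_lr: 0.001
lr_policy: "step"
gamma: 0.1
stepsize: 100
display: 20
max_iter: 500
momentum: 0.9
weight_decay: 0.0005
snapshot: 50
# Snapshots are saved as <prefix>_iter_<N>.caffemodel / .solverstate,
# so this prefix yields final_model/solver_iter_500.caffemodel;
# the final_model/ directory must already exist
snapshot_prefix: "/home/f/Downloads/test/final_model/solver"
solver_mode: CPU
```

Then cd to the directory that contains caffe/ (here /home/f/Downloads) and run: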

```sh
./caffe/build/tools/caffe train --solver=/home/f/Downloads/test/make_model/solver.prototxt
```

Then you can sit back and wait...

Note: it is best to use absolute paths everywhere here. Caffe often complains about paths, so be careful...

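Note: if training takes too long and we want to interrupt it and resume later, we can restart from a snapshot with the following command:

```sh
./caffe/build/tools/caffe train \
  --solver=test/make_model/solver.prototxt \
  --snapshot=test/final_model/solver_iter_100.solverstate
```

We can test the trained model through Caffe's own C++ classification example: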

```sh
./caffe/build/examples/cpp_classification/classification.bin \
  /home/f/Downloads/test/make_model/deploy.prototxt \
  /home/f/Downloads/test/final_model/solver_iter_500.caffemodel \
  /home/f/Downloads/test/train_mean.binaryproto \
  /home/f/Downloads/test/label.txt \
  /home/f/Downloads/test/val/101.jpg
```

The output:

Next, we test the model with OpenCV.

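Put the deploy file, the caffemodel file, and the label file we made into the test program's directory, and change the first layer of deploy to:

```protobuf
name: "CaffeNet"
input: "data"
input_dim: 10
input_dim: 3
input_dim: 227
input_dim: 227
```

The test program is: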

```cpp
#include <opencv2/dnn.hpp>
#include <opencv2/imgproc.hpp>
#include <opencv2/highgui.hpp>
#include <opencv2/core/utils/trace.hpp>

#include <cstdlib>
#include <fstream>
#include <iostream>
#include <string>
#include <vector>

using namespace cv;
using namespace cv::dnn;
using namespace std;

// Find the class with the highest probability
static void getMaxClass(const Mat &probBlob, int *classId, double *classProb)
{
    Mat probMat = probBlob.reshape(1, 1);  // flatten the probability blob into one row
    Point classNumber;
    minMaxLoc(probMat, NULL, classProb, NULL, &classNumber);
    *classId = classNumber.x;
}

// Read class names from the label file: one class per line, and the text
// after the first space is the name (e.g. "0 cat" -> "cat")
static std::vector<String> readClassNames(const char *filename = "label.txt")
{
    std::vector<String> classNames;
    std::ifstream fp(filename);
    if (!fp.is_open())
    {
        std::cerr << "File with classes labels not found: " << filename << std::endl;
        exit(-1);
    }
    std::string name;
    while (std::getline(fp, name))  // stops cleanly at end of file
    {
        if (name.length())
            classNames.push_back(name.substr(name.find(' ') + 1));
    }
    return classNames;
}

int main(int argc, char **argv)
{
    CV_TRACE_FUNCTION();

    // Network structure and trained weights (copy or rename your
    // solver_iter_500.caffemodel to this name, or change the string)
    String modelTxt = "deploy.prototxt";
    String modelBin = "caffenet.caffemodel";
    // Image to classify: first command-line argument, default 1.jpg
    String imageFile = (argc > 1) ? argv[1] : "1.jpg";

    // Build the network
    Net net = dnn::readNetFromCaffe(modelTxt, modelBin);
    if (net.empty())
    {
        std::cerr << "Can't load network by using the following files: "
                  << modelTxt << ", " << modelBin << std::endl;
        exit(-1);
    }
    cerr << "net read successfully" << endl;

    // Read the image and check it before showing it (imshow fails on an empty Mat)
    Mat img = imread(imageFile);
    if (img.empty())
    {
        std::cerr << "Can't read image from the file: " << imageFile << std::endl;
        exit(-1);
    }
    imshow("image", img);
    cerr << "image read successfully" << endl;

    // Pack the image into a 1x3x227x227 blob; an image cannot be fed to the net directly.
    // swapRB=false keeps OpenCV's BGR order (Caffe's convert_imageset also stores BGR).
    // Note: training subtracted train_mean.binaryproto, while no mean is subtracted here.
    Mat inputBlob = blobFromImage(img, 1.0, Size(227, 227), Scalar(), false);
    // Alternative with per-channel mean subtraction (ImageNet BGR means, not this dataset's):
    // Mat inputBlob = blobFromImage(img, 1.0, Size(227, 227), Scalar(104, 117, 123), false);

    Mat prob;
    cv::TickMeter t;
    for (int i = 0; i < 10; i++)  // run 10 times to get an average timing
    {
        CV_TRACE_REGION("forward");
        net.setInput(inputBlob, "data");  // feed the blob to the "data" input
        t.start();
        prob = net.forward("prob");       // forward pass up to the softmax output
        t.stop();
    }

    int classId;
    double classProb;
    // Index of the highest probability goes to classId, the probability itself to classProb
    getMaxClass(prob, &classId, &classProb);

    // Print the result
    std::vector<String> classNames = readClassNames();
    std::cout << "Best class: #" << classId << " '" << classNames.at(classId) << "'" << std::endl;
    std::cout << "Probability: " << classProb * 100 << "%" << std::endl;
    // Print the average time per forward pass
    std::cout << "Time: " << (double)t.getTimeMilli() / t.getCounter()
              << " ms (average from " << t.getCounter() << " iterations)" << std::endl;

    waitKey(0);  // keep the window open to look at the result
    return 0;
}
```

Then look at the result.

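Because my experimental data was poor, the result came out wrong: I gave it a cat and it said dog... But at least it shows the whole pipeline works, and it also shows how much the dataset matters...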