Series index

- See section 4.1 of the blog post 《Paper》: 【Keras】Classification in CIFAR-10 series

Learned from

- GitHub: BIGBALLON/cifar-10-cnn

- Zhihu column: 写给妹子的深度学习教程

- Inception v4 Caffe code: https://github.com/soeaver/caffe-model/blob/master/cls/inception/deploy_inception-v4.prototxt

- GoogLeNet explained, with a Keras implementation: https://blog.csdn.net/qq_25491201/article/details/78367696

References

Code download

- Link: https://pan.baidu.com/s/1fbIu0DuZ9iMtMRslp1asSw

Extraction code: vlq9

Hardware

- TITAN XP


1 Theoretical background

See 【Inception-v4】《Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning》

2 Inception_v4 implementation

[D] Why aren’t Inception-style networks successful on CIFAR-10/100?

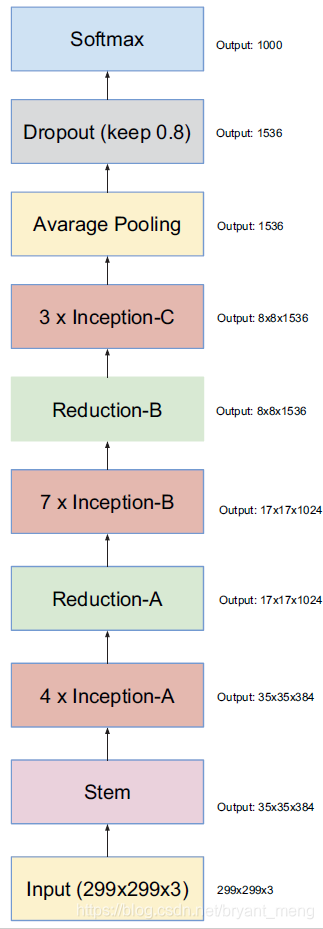

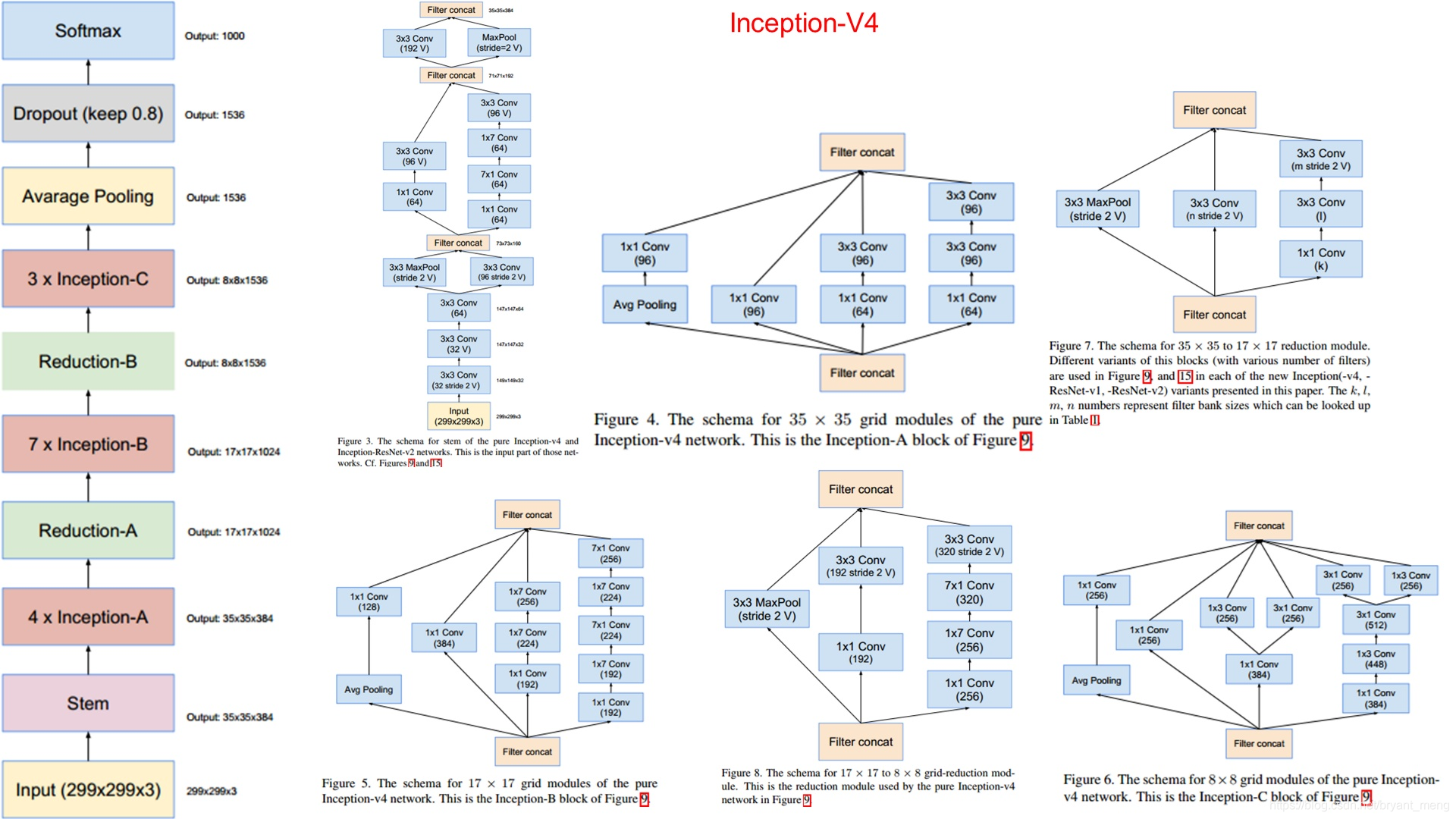

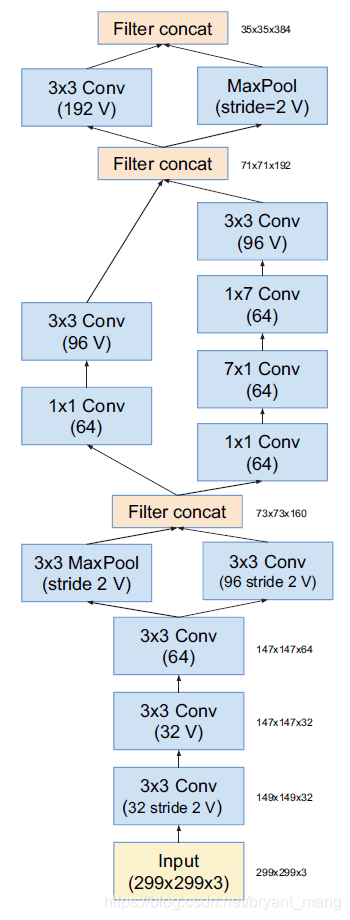

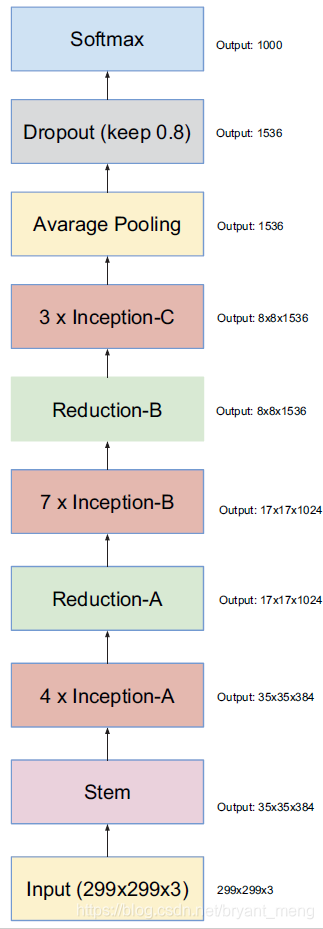

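Overall architecture (left side of Figure 2 in the paper)

Image source: https://www.zhihu.com/question/50370954 (★★★★★)

This figure is a classic. The leftmost column is the inception_v4 pipeline; the six diagrams, read left to right and top to bottom, are the pipeline's stem, Inception-A, Reduction-A, Inception-B, Reduction-B, and Inception-C.

2.1 Inception_v4

1) Import libraries and set hyperparameters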

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="0"

import keras

import numpy as np

import math

from keras.datasets import cifar10

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, ZeroPadding2D, GlobalAveragePooling2D

from keras.layers import Flatten, Dense, Dropout,BatchNormalization,Activation, Convolution2D

from keras.models import Model

from keras.layers import Input, concatenate

from keras import optimizers, regularizers

from keras.preprocessing.image import ImageDataGenerator

from keras.initializers import he_normal

from keras.callbacks import LearningRateScheduler, TensorBoard, ModelCheckpoint

num_classes = 10

batch_size = 64 # 64 or 32 or other

epochs = 300

iterations = 782

USE_BN=True

DROPOUT=0.2 # keep 80%

CONCAT_AXIS=3

weight_decay=1e-4

DATA_FORMAT='channels_last' # Theano:'channels_first' Tensorflow:'channels_last'

log_filepath = './inception_v4'
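
The value `iterations = 782` appears to be the number of batches per epoch. A minimal sketch of that arithmetic, assuming the standard CIFAR-10 training split of 50,000 images (`num_train_images` is a name introduced here for illustration):

```python
import math

num_train_images = 50000  # CIFAR-10 training set size (assumed standard split)
batch_size = 64

# Number of batches needed to see every training image once per epoch.
steps_per_epoch = math.ceil(num_train_images / batch_size)
print(steps_per_epoch)
```

If `batch_size` is changed, `iterations` would need to be recomputed the same way.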

2) Preprocess the data and set the learning-rate schedule

def color_preprocessing(x_train,x_test):
    x_train = x_train.astype('float32')
    x_test = x_test.astype('float32')
    # per-channel mean and standard deviation
    mean = [125.307, 122.95, 113.865]
    std = [62.9932, 62.0887, 66.7048]
    for i in range(3):
        x_train[:,:,:,i] = (x_train[:,:,:,i] - mean[i]) / std[i]
        x_test[:,:,:,i] = (x_test[:,:,:,i] - mean[i]) / std[i]
    return x_train, x_test

def scheduler(epoch):
    # step decay: 0.01 for epochs 0-99, 0.001 for 100-199, 0.0001 afterwards
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001

# load data

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

x_train, x_test = color_preprocessing(x_train, x_test)
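
To make the step schedule concrete, here is a small standalone check of where the learning rate changes. It re-declares `scheduler` so the snippet runs on its own. As far as I know, Keras's `LearningRateScheduler` calls the function with a 0-indexed epoch and sets the optimizer's learning rate at the start of every epoch, so the schedule takes precedence over the `lr` passed to the optimizer.

```python
def scheduler(epoch):
    # Same step schedule as in the text.
    if epoch < 100:
        return 0.01
    if epoch < 200:
        return 0.001
    return 0.0001

# Probe the epochs on either side of each boundary.
boundaries = [(e, scheduler(e)) for e in (0, 99, 100, 199, 200, 299)]
for epoch, lr in boundaries:
    print(epoch, lr)
```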

3) Define the network structure

- Factorization into smaller convolutions

e.g. 3×3 → 3×1 + 1×3

def conv_block(x, nb_filter, nb_row, nb_col, border_mode='same', subsample=(1,1), bias=False):
    # Conv2D with Keras 2 argument names (kernel_size, strides, padding, use_bias, ...),
    # matching the rest of the code; followed by BN and ReLU
    x = Conv2D(nb_filter, kernel_size=(nb_row, nb_col), strides=subsample, padding=border_mode, use_bias=bias,
               kernel_initializer="he_normal", data_format=DATA_FORMAT, kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)
    x = Activation('relu')(x)
    return x
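
To see why factorization helps, the sketch below counts the weights of a full n×n convolution against the 1×n + n×1 pair. The channel count `c = 64` is a hypothetical value chosen only for illustration, and biases are ignored.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution without bias."""
    return kh * kw * c_in * c_out

c = 64  # hypothetical channel count, same for input and output

full_7x7 = conv_weights(7, 7, c, c)
factorized_7 = conv_weights(1, 7, c, c) + conv_weights(7, 1, c, c)

full_3x3 = conv_weights(3, 3, c, c)
factorized_3 = conv_weights(3, 1, c, c) + conv_weights(1, 3, c, c)

print(full_7x7, factorized_7)  # the pair needs 2/7 of the weights
print(full_3x3, factorized_3)  # the pair needs 2/3 of the weights
```

In general the factorized pair needs 2/n of the weights of the full n×n kernel, so the saving grows with kernel size.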

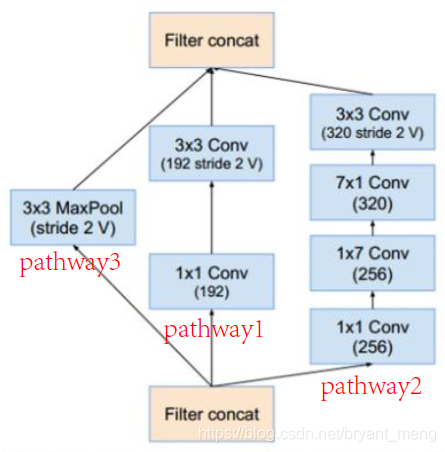

- stem (right side of Figure 2 in the paper)

In the figure above, V marks valid padding and unmarked layers use same padding. Because this dataset has a different resolution, the code below uses same padding throughout:

def create_stem(img_input,concat_axis):
    x = Conv2D(32,kernel_size=(3,3),strides=(2,2),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    x = Conv2D(32,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    x = Conv2D(64,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    # stem1
    x_1 = Conv2D(96,kernel_size=(3,3),strides=(2,2),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = MaxPooling2D(pool_size=(3,3),strides=2,padding='same',data_format=DATA_FORMAT)(x)
    x = concatenate([x_1,x_2],axis=concat_axis)
    # stem2
    x_1 = Conv2D(64,kernel_size=(1,1),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_1 = Conv2D(96,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x_1)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = Conv2D(96,kernel_size=(1,1),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_2))
    x_2 = conv_block(x_2,64,1,7)
    x_2 = conv_block(x_2,64,7,1)
    x_2 = Conv2D(96,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x_2)
    x_2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_2))
    x = concatenate([x_1,x_2],axis=concat_axis)
    # stem3
    x_1 = Conv2D(192,kernel_size=(3,3),strides=(2,2),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = MaxPooling2D(pool_size=(3,3),strides=2,padding='same',data_format=DATA_FORMAT)(x)
    x = concatenate([x_1,x_2],axis=concat_axis)
    return x

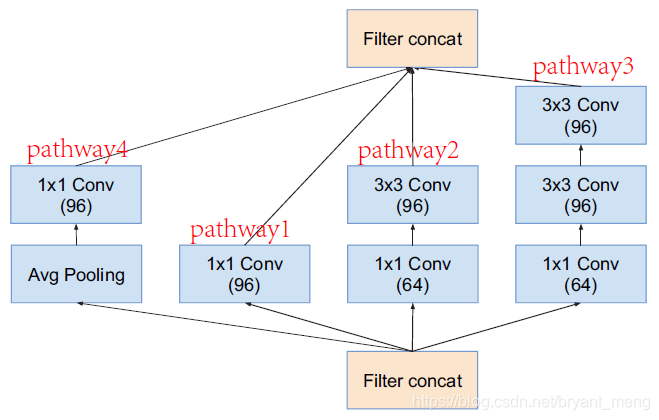

- inception module A (left side of Figure 3 in the paper)

def inception_A(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,weight_decay=weight_decay):
    (branch1,branch2,branch3,branch4)=params
    if weight_decay:
        kernel_regularizer=regularizers.l2(weight_decay)
        bias_regularizer=regularizers.l2(weight_decay)
    else:
        kernel_regularizer=None
        bias_regularizer=None
    # keyword arguments shared by every Conv2D in this module
    conv_args=dict(padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)
    def conv_bn_relu(t,filters,kernel_size,strides=1):
        # Conv2D -> BatchNormalization -> ReLU
        t=Conv2D(filters=filters,kernel_size=kernel_size,strides=strides,**conv_args)(t)
        return Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(t))
    #1x1
    pathway1=conv_bn_relu(x,branch1[0],(1,1))
    #1x1->3x3
    pathway2=conv_bn_relu(x,branch2[0],(1,1))
    pathway2=conv_bn_relu(pathway2,branch2[1],(3,3))
    #1x1->3x3+3x3
    pathway3=conv_bn_relu(x,branch3[0],(1,1))
    pathway3=conv_bn_relu(pathway3,branch3[1],(3,3))
    pathway3=conv_bn_relu(pathway3,branch3[1],(3,3))
    #3x3 average pooling->1x1
    pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)
    pathway4=conv_bn_relu(pathway4,branch4[0],(1,1))
    return concatenate([pathway1,pathway2,pathway3,pathway4],axis=concat_axis)

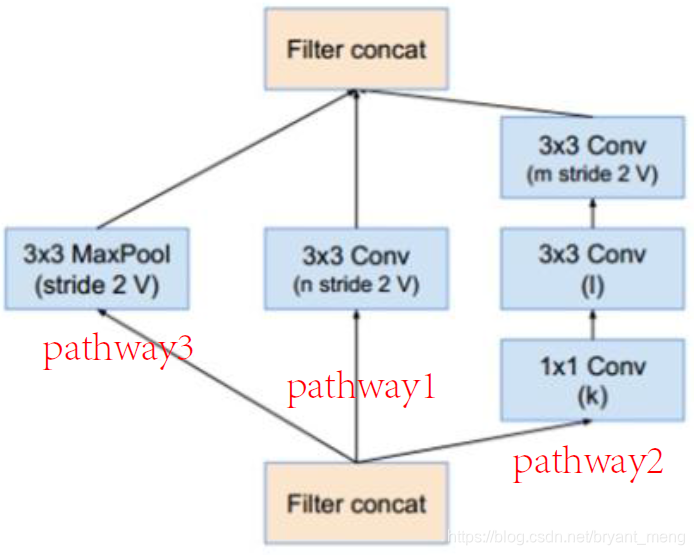

- reduction A (same as in inception-resnet-v1)

Image source: https://www.zhihu.com/question/50370954

def reduce_A(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,weight_decay=weight_decay):
    (branch1,branch2)=params
    if weight_decay:
        kernel_regularizer=regularizers.l2(weight_decay)
        bias_regularizer=regularizers.l2(weight_decay)
    else:
        kernel_regularizer=None
        bias_regularizer=None
    # keyword arguments shared by every Conv2D in this module
    conv_args=dict(padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)
    def conv_bn_relu(t,filters,kernel_size,strides=1):
        # Conv2D -> BatchNormalization -> ReLU
        t=Conv2D(filters=filters,kernel_size=kernel_size,strides=strides,**conv_args)(t)
        return Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(t))
    #3x3 (stride 2)
    pathway1=conv_bn_relu(x,branch1[0],(3,3),strides=2)
    #1x1->3x3->3x3 (stride 2)
    pathway2=conv_bn_relu(x,branch2[0],(1,1))
    pathway2=conv_bn_relu(pathway2,branch2[1],(3,3))
    pathway2=conv_bn_relu(pathway2,branch2[2],(3,3),strides=2)
    #3x3 max pooling (stride 2)
    pathway3=MaxPooling2D(pool_size=(3,3),strides=2,padding=padding,data_format=DATA_FORMAT)(x)
    return concatenate([pathway1,pathway2,pathway3],axis=concat_axis)


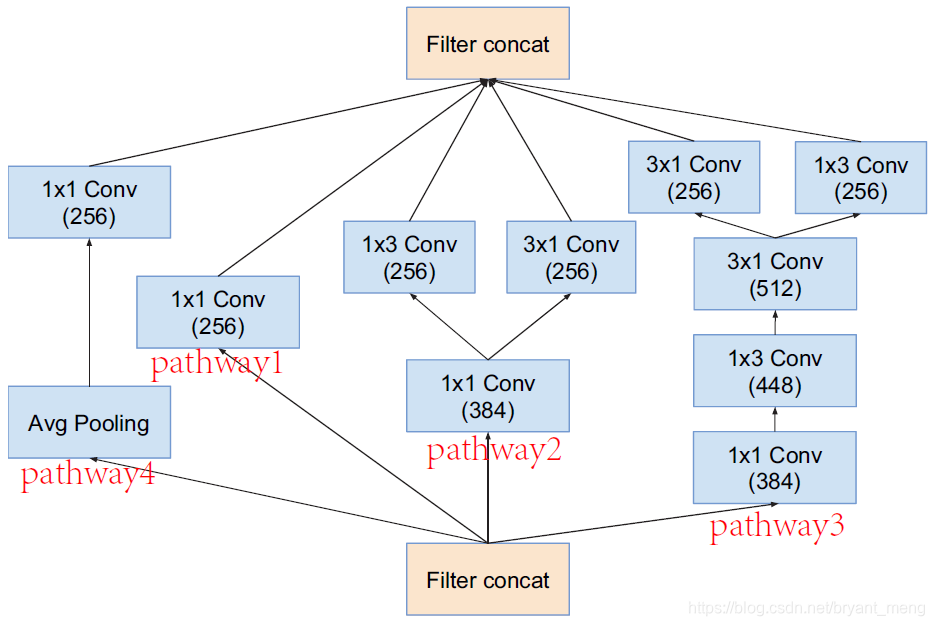

- inception module B (middle of Figure 3 in the paper)

def inception_B(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,weight_decay=weight_decay):
    (branch1,branch2,branch3,branch4)=params
    if weight_decay:
        kernel_regularizer=regularizers.l2(weight_decay)
        bias_regularizer=regularizers.l2(weight_decay)
    else:
        kernel_regularizer=None
        bias_regularizer=None
    # keyword arguments shared by every Conv2D in this module
    conv_args=dict(padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)
    def conv_bn_relu(t,filters,kernel_size,strides=1):
        # Conv2D -> BatchNormalization -> ReLU
        t=Conv2D(filters=filters,kernel_size=kernel_size,strides=strides,**conv_args)(t)
        return Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(t))
    #1x1
    pathway1=conv_bn_relu(x,branch1[0],(1,1))
    #1x1->1x7->7x1
    pathway2=conv_bn_relu(x,branch2[0],(1,1))
    pathway2 = conv_block(pathway2,branch2[1],1,7)
    pathway2 = conv_block(pathway2,branch2[2],7,1)
    #1x1->7x1->1x7->7x1->1x7
    pathway3=conv_bn_relu(x,branch3[0],(1,1))
    pathway3 = conv_block(pathway3,branch3[1],7,1)
    pathway3 = conv_block(pathway3,branch3[2],1,7)
    pathway3 = conv_block(pathway3,branch3[3],7,1)
    pathway3 = conv_block(pathway3,branch3[4],1,7)
    #3x3 average pooling->1x1
    pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)
    pathway4=conv_bn_relu(pathway4,branch4[0],(1,1))
    return concatenate([pathway1,pathway2,pathway3,pathway4],axis=concat_axis)

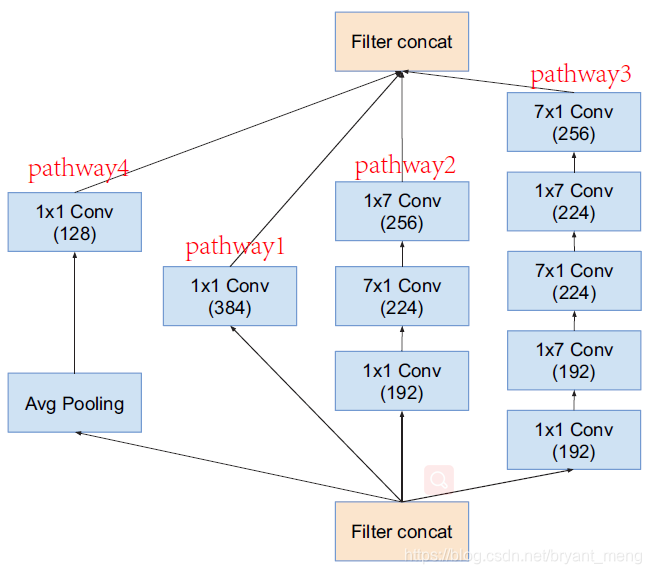

- reduction B

Image source: https://www.zhihu.com/question/50370954

def reduce_B(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,weight_decay=weight_decay):
    (branch1,branch2)=params
    if weight_decay:
        kernel_regularizer=regularizers.l2(weight_decay)
        bias_regularizer=regularizers.l2(weight_decay)
    else:
        kernel_regularizer=None
        bias_regularizer=None
    # keyword arguments shared by every Conv2D in this module
    conv_args=dict(padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)
    def conv_bn_relu(t,filters,kernel_size,strides=1):
        # Conv2D -> BatchNormalization -> ReLU
        t=Conv2D(filters=filters,kernel_size=kernel_size,strides=strides,**conv_args)(t)
        return Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(t))
    #1x1->3x3 (stride 2)
    pathway1=conv_bn_relu(x,branch1[0],(1,1))
    pathway1=conv_bn_relu(pathway1,branch1[1],(3,3),strides=2)
    #1x1->1x7->7x1->3x3 (stride 2)
    pathway2=conv_bn_relu(x,branch2[0],(1,1))
    pathway2 = conv_block(pathway2,branch2[1],1,7)
    pathway2 = conv_block(pathway2,branch2[2],7,1)
    pathway2=conv_bn_relu(pathway2,branch2[3],(3,3),strides=2)
    #3x3 max pooling (stride 2)
    pathway3=MaxPooling2D(pool_size=(3,3),strides=2,padding=padding,data_format=DATA_FORMAT)(x)
    return concatenate([pathway1,pathway2,pathway3],axis=concat_axis)

- inception module C (right side of Figure 3 in the paper)

def inception_C(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,weight_decay=weight_decay):
    (branch1,branch2,branch3,branch4)=params
    if weight_decay:
        kernel_regularizer=regularizers.l2(weight_decay)
        bias_regularizer=regularizers.l2(weight_decay)
    else:
        kernel_regularizer=None
        bias_regularizer=None
    # keyword arguments shared by every Conv2D in this module
    conv_args=dict(padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)
    def conv_bn_relu(t,filters,kernel_size,strides=1):
        # Conv2D -> BatchNormalization -> ReLU
        t=Conv2D(filters=filters,kernel_size=kernel_size,strides=strides,**conv_args)(t)
        return Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(t))
    #1x1
    pathway1=conv_bn_relu(x,branch1[0],(1,1))
    #1x1->1x3+3x1 (two parallel outputs)
    pathway2=conv_bn_relu(x,branch2[0],(1,1))
    pathway2_1 = conv_block(pathway2,branch2[1],1,3)
    pathway2_2 = conv_block(pathway2,branch2[2],3,1)
    #1x1->3x1->1x3->1x3+3x1 (two parallel outputs)
    pathway3=conv_bn_relu(x,branch3[0],(1,1))
    pathway3 = conv_block(pathway3,branch3[1],3,1)
    pathway3 = conv_block(pathway3,branch3[2],1,3)
    pathway3_1 = conv_block(pathway3,branch3[3],1,3)
    pathway3_2 = conv_block(pathway3,branch3[4],3,1)
    #3x3 average pooling->1x1
    pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)
    pathway4=conv_bn_relu(pathway4,branch4[0],(1,1))
    return concatenate([pathway1,pathway2_1,pathway2_2,pathway3_1,pathway3_2,pathway4],axis=concat_axis)

4) Build the network

Assemble the network from the modules designed in 3)

Overall architecture (left side of Figure 2 in the paper)

def create_model(img_input):
    # stem
    x = create_stem(img_input,concat_axis=CONCAT_AXIS)
    # 4 x inception_A 3a-3d 384
    for _ in range(4):
        x=inception_A(x,params=[(96,),(64,96),(64,96),(96,)],concat_axis=CONCAT_AXIS)
    # inception_reduce1 1024
    x=reduce_A(x,params=[(384,),(192,224,256)],concat_axis=CONCAT_AXIS) # 768
    # 7 x inception_B 4a-4g 1024
    for _ in range(7):
        x=inception_B(x,params=[(384,),(192,224,256),(192,192,224,224,256),(128,)],concat_axis=CONCAT_AXIS)
    # inception_reduce2 1536
    x=reduce_B(x,params=[(192,192),(256,256,320,320)],concat_axis=CONCAT_AXIS) # 1280
    # 3 x inception_C 5a-5c 1536
    for _ in range(3):
        x=inception_C(x,params=[(256,),(384,256,256),(384,448,512,256,256),(256,)],concat_axis=CONCAT_AXIS)
    x=GlobalAveragePooling2D()(x)
    x=Dropout(DROPOUT)(x)
    x = Dense(num_classes,activation='softmax',kernel_initializer="he_normal",
              kernel_regularizer=regularizers.l2(weight_decay))(x)
    return x
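
The channel counts in the comments of `create_model` (384, 1024, 1536) can be checked from the `params` tuples alone, since each module concatenates the last convolution of every branch. The sketch below does that arithmetic in plain Python. The three helper functions are hypothetical names introduced here and only mirror how the modules above concatenate their branches.

```python
def inception_channels(params):
    """Inception A/B: concatenate the last conv of each branch."""
    return sum(branch[-1] for branch in params)

def reduction_channels(params, c_in):
    """Reduction modules also concatenate the max-pooled input (c_in channels)."""
    return sum(branch[-1] for branch in params) + c_in

def inception_c_channels(params):
    """Inception C: branches 2 and 3 each end in two parallel outputs."""
    b1, b2, b3, b4 = params
    return b1[-1] + b2[1] + b2[2] + b3[3] + b3[4] + b4[-1]

a = inception_channels([(96,), (64, 96), (64, 96), (96,)])
ra = reduction_channels([(384,), (192, 224, 256)], a)
b = inception_channels([(384,), (192, 224, 256), (192, 192, 224, 224, 256), (128,)])
rb = reduction_channels([(192, 192), (256, 256, 320, 320)], b)
c = inception_c_channels([(256,), (384, 256, 256), (384, 448, 512, 256, 256), (256,)])
print(a, ra, b, rb, c)  # 384 1024 1024 1536 1536
```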

5) Instantiate the model

img_input=Input(shape=(32,32,3))

output = create_model(img_input)

model=Model(img_input,output)

model.summary()

Total params: 41,256,266

Trainable params: 41,193,034

Non-trainable params: 63,232

6) Start training

# set optimizer

sgd = optimizers.SGD(lr=.1, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# set callback

tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)

change_lr = LearningRateScheduler(scheduler)

cbks = [change_lr,tb_cb]

# dump checkpoint if you need.(add it to cbks)

# ModelCheckpoint('./checkpoint-{epoch}.h5', save_best_only=False, mode='auto', period=10)

# set data augmentation

datagen = ImageDataGenerator(horizontal_flip=True,

width_shift_range=0.125,

height_shift_range=0.125,

fill_mode='constant',cval=0.)

datagen.fit(x_train)

# start training

model.fit_generator(datagen.flow(x_train, y_train,batch_size=batch_size),

steps_per_epoch=iterations,

epochs=epochs,

callbacks=cbks,

validation_data=(x_test, y_test))

model.save('inception_v4.h5')

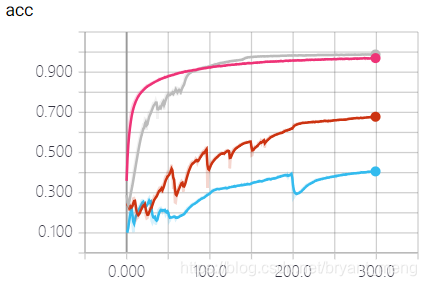

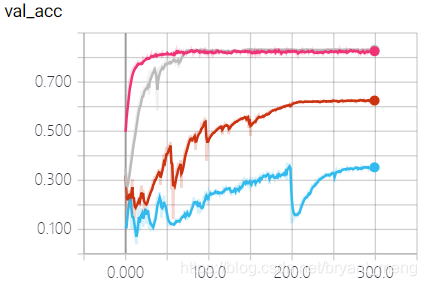

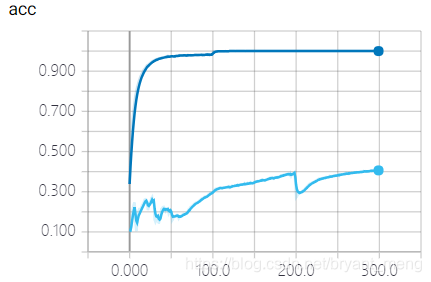

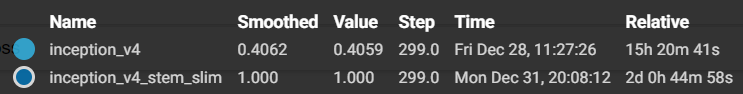

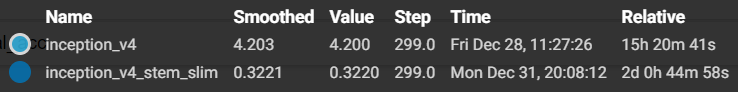

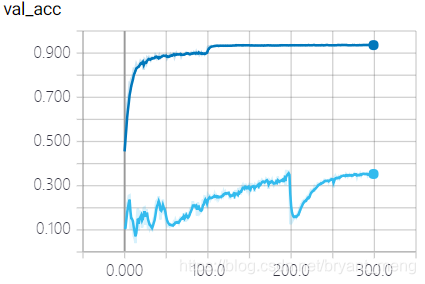

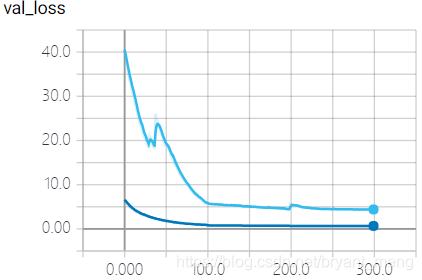

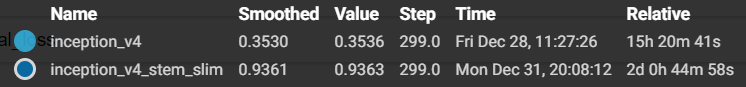

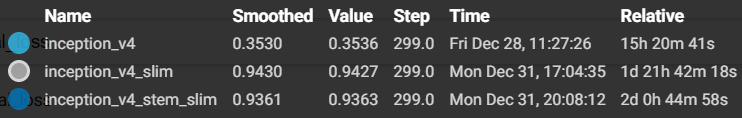

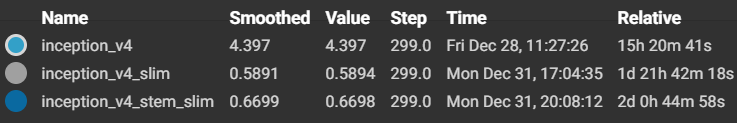

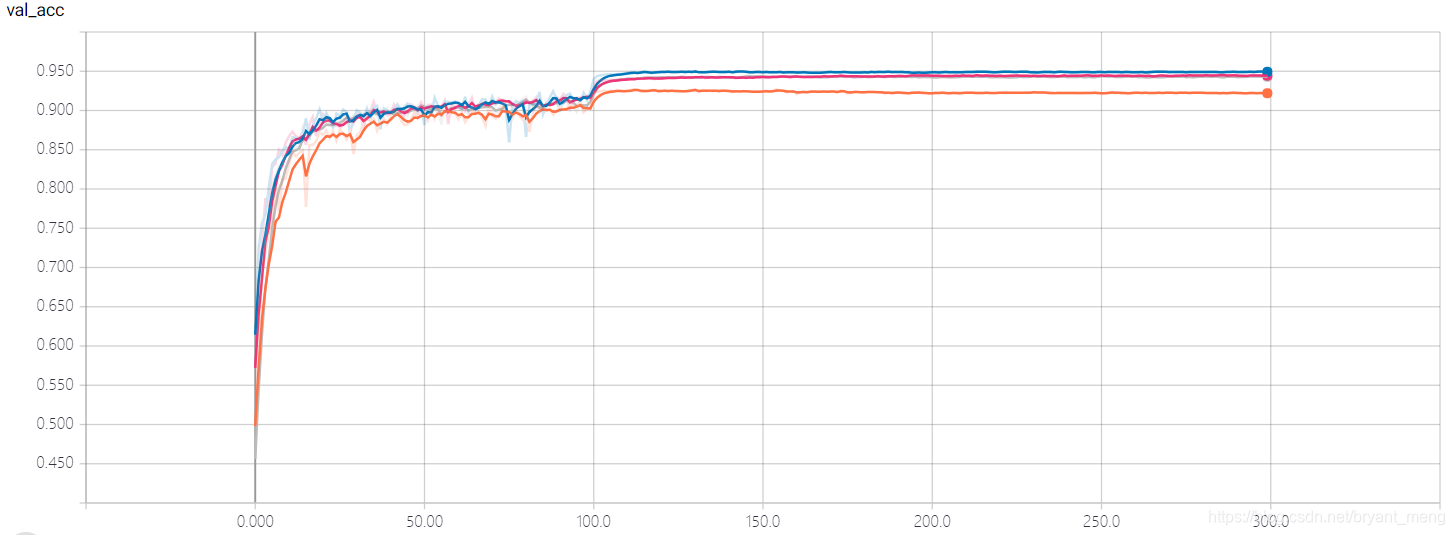

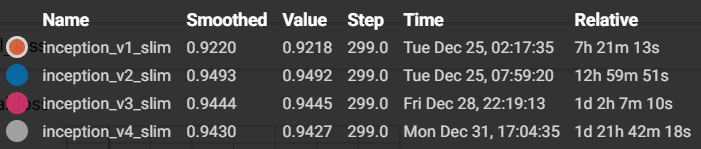

7) Results

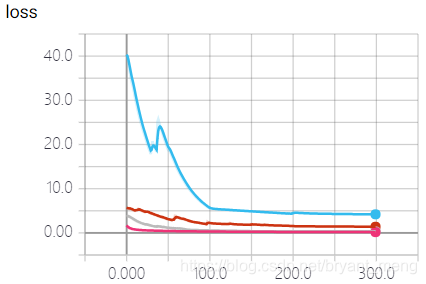

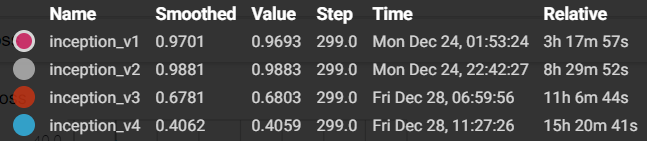

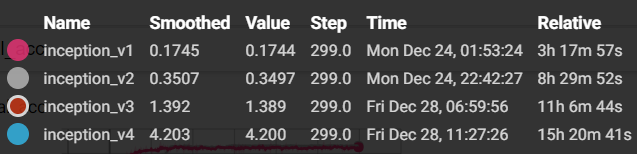

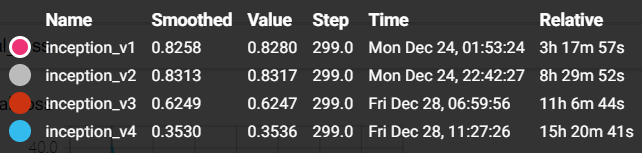

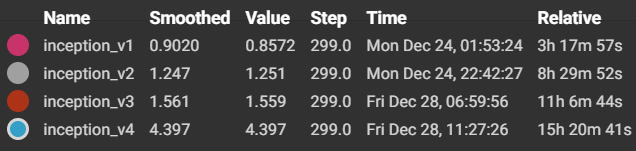

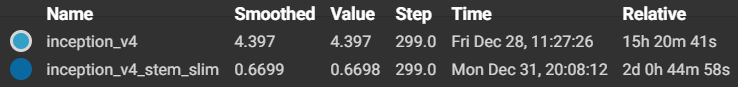

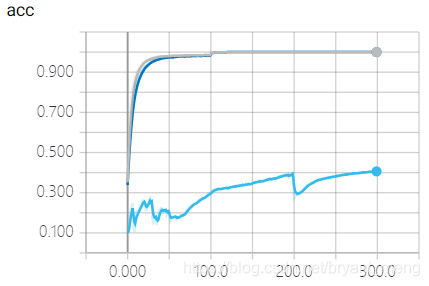

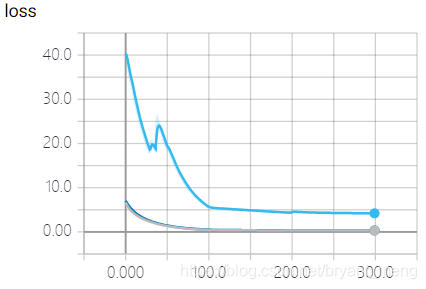

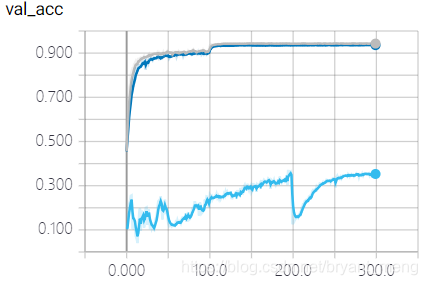

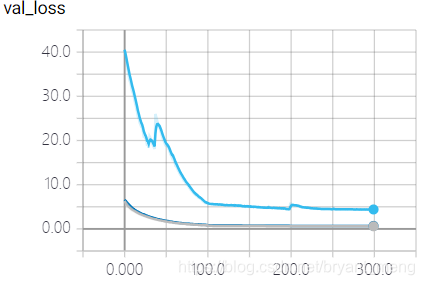

training accuracy and training loss

- accuracy

- loss

Because the stem reduces the feature-map resolution to 4, the 1x7 and 7x1 convolutions in the later inception modules are mostly processing padding. The result is poor, very poor.
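
The resolution argument can be traced by hand. With 'same' padding, a stride-s layer maps a size n to ceil(n/s), so the three stride-2 stages of the stem in section 2.1 take the 32×32 input down to 4×4, and the two reduction modules halve it again. A minimal sketch (the stage labels are informal names for the stride-2 layers in the code above):

```python
import math

def same_out(size, stride):
    """Output size of a 'same'-padded conv or pooling layer."""
    return math.ceil(size / stride)

size = 32  # CIFAR-10 input resolution
trace = [("input", size)]
# Stride-2 stages: first stem conv, stem1, stem3, then the two reduction modules.
for name in ["stem conv1", "stem1", "stem3", "reduce_A", "reduce_B"]:
    size = same_out(size, 2)
    trace.append((name, size))

for name, s in trace:
    print(name, s)
```

Under this trace, Inception-A runs at 4×4, Inception-B at 2×2, and Inception-C at 1×1, so a 7-wide kernel is far larger than the feature map it slides over.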

test accuracy and test loss

- accuracy

- loss

…………

The result is as expected.

2.2 Inception_v4_stem_slim

Modify the function def create_stem(……) from section 2.1 as follows:

Set every stride-2 convolution and pooling layer to stride 1, so that the feature-map resolution is preserved and the 1×7 and 7×1 convolutions have real content to work on.

def create_stem(img_input,concat_axis):
    x = Conv2D(32,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    x = Conv2D(32,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    x = Conv2D(64,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))
    # stem1
    x_1 = Conv2D(96,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = MaxPooling2D(pool_size=(3,3),strides=1,padding='same',data_format=DATA_FORMAT)(x)
    x = concatenate([x_1,x_2],axis=concat_axis)
    # stem2
    x_1 = Conv2D(64,kernel_size=(1,1),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_1 = Conv2D(96,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x_1)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = Conv2D(96,kernel_size=(1,1),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_2))
    x_2 = conv_block(x_2,64,1,7)
    x_2 = conv_block(x_2,64,7,1)
    x_2 = Conv2D(96,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x_2)
    x_2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_2))
    x = concatenate([x_1,x_2],axis=concat_axis)
    # stem3
    x_1 = Conv2D(192,kernel_size=(3,3),strides=(1,1),padding='same',
                 kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)
    x_1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x_1))
    x_2 = MaxPooling2D(pool_size=(3,3),strides=1,padding='same',data_format=DATA_FORMAT)(x)
    x = concatenate([x_1,x_2],axis=concat_axis)
    return x
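
One way to quantify what the stride change buys is to count how many taps of a 1×7 kernel fall on zero padding. The sketch below assumes a stride-1, 'same'-padded convolution (3 padded positions on each side). It compares width 2, where Inception-B runs with the stride-2 stem of section 2.1, against width 16, where it runs with the stride-1 stem (32 through the stem and Inception-A, halved once by reduce_A). Both widths are derived from the code above rather than measured.

```python
def padding_fraction(width, kernel=7):
    """Fraction of kernel taps that land on zero padding for a 1 x kernel
    convolution with 'same' padding and stride 1 over `width` positions."""
    half = kernel // 2
    outside = 0
    for i in range(width):                   # each output position
        for k in range(-half, half + 1):     # each tap of the kernel
            if not 0 <= i + k < width:
                outside += 1
    return outside / (kernel * width)

frac_2 = padding_fraction(2)    # Inception-B width with the stride-2 stem
frac_16 = padding_fraction(16)  # Inception-B width with the stride-1 stem
print(frac_2, frac_16)
```

At width 2, five of every seven taps read padding. At width 16, only 12 of the 112 taps do.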

Parameter count (unchanged):

Total params: 41,256,266

Trainable params: 41,193,034

Non-trainable params: 63,232

- inception_v4

Total params: 41,256,266

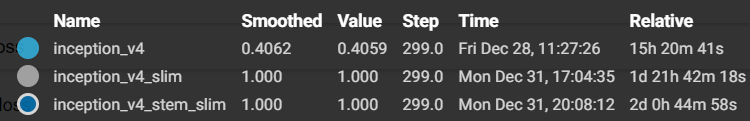

Results:

training accuracy and training loss

- accuracy

- loss

test accuracy and test loss

- accuracy

- loss

Accuracy improves to 93%+, which is acceptable.

2.3 Inception_v4_slim

Replace the stem of Inception_v4 with a single convolution and keep the inception modules unchanged. The stem reduces the input to 1/8 of its resolution, which is fine for ImageNet but too aggressive for CIFAR-10.

- Adjust the network structure

def create_model(img_input):
    # a single 3x3 convolution replaces the stem
    x = Conv2D(192,kernel_size=(3,3),strides=(1,1),padding='same',
               kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)
    # 4 x inception_A 3a-3d 384
    for _ in range(4):
        x=inception_A(x,params=[(96,),(64,96),(64,96),(96,)],concat_axis=CONCAT_AXIS)
    # inception_reduce1 1024
    x=reduce_A(x,params=[(384,),(192,224,256)],concat_axis=CONCAT_AXIS) # 768
    # 7 x inception_B 4a-4g 1024
    for _ in range(7):
        x=inception_B(x,params=[(384,),(192,224,256),(192,192,224,224,256),(128,)],concat_axis=CONCAT_AXIS)
    # inception_reduce2 1536
    x=reduce_B(x,params=[(192,192),(256,256,320,320)],concat_axis=CONCAT_AXIS) # 1280
    # 3 x inception_C 5a-5c 1536
    for _ in range(3):
        x=inception_C(x,params=[(256,),(384,256,256),(384,448,512,256,256),(256,)],concat_axis=CONCAT_AXIS)
    x=GlobalAveragePooling2D()(x)
    x=Dropout(DROPOUT)(x)
    x = Dense(num_classes,activation='softmax',kernel_initializer="he_normal",
              kernel_regularizer=regularizers.l2(weight_decay))(x)
    return x

The rest of the code is the same as Inception_v4.

Parameter count:

Total params: 40,572,394

Trainable params: 40,510,954

Non-trainable params: 61,440

- inception_v4

Total params: 41,256,266

- inception_v4_stem_slim

Total params: 41,256,266

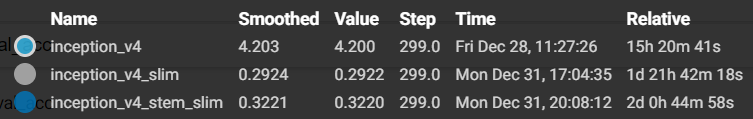

training accuracy and training loss

- accuracy

- loss

test accuracy and test loss

- accuracy

- loss

Training time drops and accuracy improves further, exceeding 94%.

2.4 Side-by-side comparison

3 Summary

Model size