一、Hive重温

1、复杂数据类型:

map,结构体,数组

2、JDBC编程:有两句官网的代码有误

3、ZK:HA集群比较多,奇数台。

二、压缩Compression

1、常用压缩格式介绍

| Tool | Algorithm | File extention | |

| gzip | gzip | DEFLATE | .gz |

| bzip2 | bizp2 | bzip2 | .bz2 |

| LZO | lzop | LZO | .lzo |

| Snappy | N/A | Snappy | .snappy |

2、压缩格式对比

压缩比

压缩速度/解压缩度

3、压缩在Hadoop中的应用

1)是否支持分割

压缩有什么用,举个例子:

1G的数据:

没压缩,Map Task = 1024/128

Gzip压缩后,由于不支持分割,所以是一个Map Task处理。

2)常用Codec

Codec:我们只需配置再看hadoop的配置文件中即可

3)压缩在MapReduce中的应用计划

1:

MapReduce jobs从HDFS读取数据

压缩可以减少磁盘开销

输入时的压缩尽量使用可分割的压缩方式

压缩使用可分割的文件结构:ORC或者...

2:

中间解压尽量使用解压速度快的技术比如Snappy,LZO

3、

最后压缩输出的时候分两种情况:

1)保存到磁盘,使用高压缩比的技术

2)给下个Map,使用能分割的压缩技术

三、配置HDFS压缩

1、core-site.xml

<property>

<name>io.compression.codecs</name>

<value>

org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

</value>

</property>

2、mapred-site.xml

是否需要压缩:

<property>

<name>mapreduce.output.fileoutputformat.compress</name> ---------------------第三步

<value>true</value>

</property>

输出使用哪种压缩:

<property>

<name>mapreduce.output.fileoutputformat.compress.codec</name>

<value>org.apache.hadoop.io.compress.BZip2Codec</value>

</property>

map阶段或者其他步骤使用哪种压缩:

官网:http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

搜索:mapreduce.output.fileoutputformat.compress

往下看输出,类推使用方式:

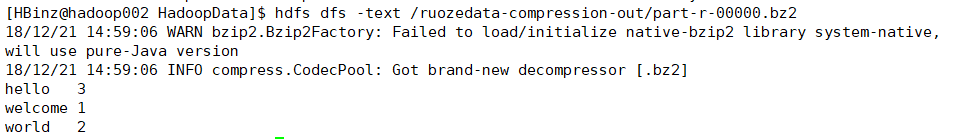

3、测试压缩效果

进入目录:

cd /opt/app/hadoop-2.6.0-cdh5.7.0/share/hadoop/mapreduce

执行:

hadoop jar hadoop-mapreduce-examples-2.6.0-cdh5.7.0.jar wordcount /ruozeinput.txt /ruozedata-compression-out/

成功:

![]()

四、Hive压缩

1、创建page_views表

create table page_views(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by "\t" ;

2、读取外部数据源到表

load data local inpath ' ' overwrite into table page_views;

3、创建另外一张表,将数据压缩后再导入Hive

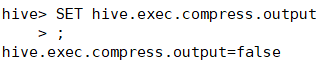

(1)修改默认的配置:

SET hive.exec.compress.output=true;

set mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.BZip2Codec;

(2)创建一个表,导入压缩后数据到此表:

create table page_views_bzip2

row format delimited fields terminated by '\t'

as select * from page_views;

(3)到HDFS查看文件

hdfs dfs -du -s -h /user/hive/warehouse/page_views

压缩的文件小了1/3

五、Hive Storage Format

1、STORED AS file_format

create table ruoze_a(id int) stored as textfile;

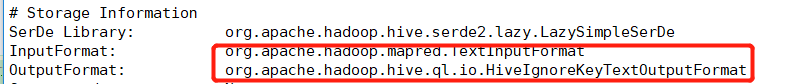

2、查看表结构

desc formatted ruoze_a;

发现是默认的输入输出格式。

3、定义输入输出格式,将textfile拆开。

create table ruoze_b(id int) stored as

INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat';

4、TEXTFILE

现在都在使用的。

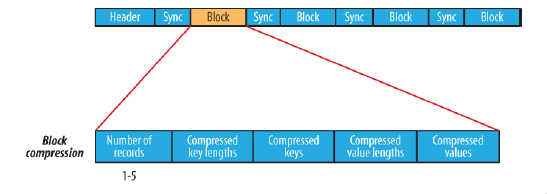

5、SEQUENCEFILE

create table ruoze_page_views_seq(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by '\t'

stored as SEQUENCEFILE;

错误:load data local inpath '/home/hadoop/data/page_views.dat' overwrite into table ruoze_page_views_seq;//创建表格已经定义了表格是SEQ格式,但是源数据的文件是文本文件,所以报错。因此需要从别的文本表insert过去。

insert into table ruoze_page_views_seq select * from ruoze_page_views;

总结:

SEQ会使数据变大。

6、rcfile

create table ruoze_page_views_rc(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by '\t'

stored as rcfile;

insert into table ruoze_page_views_rc select * from ruoze_page_views;

7、ORC(https://blog.csdn.net/dabokele/article/details/51542327)

Compared with RCFile format, for example, ORC file format has many advantages such as:

- a single file as the output of each task, which reduces the NameNode's load

- Hive type support including datetime, decimal, and the complex types (struct, list, map, and union)

- light-weight indexes stored within the file

- skip row groups that don't pass predicate filtering

- seek to a given row

- block-mode compression based on data type

- run-length encoding for integer columns

- dictionary encoding for string columns

- concurrent reads of the same file using separate RecordReaders

- ability to split files without scanning for markers

- bound the amount of memory needed for reading or writing

- metadata stored using Protocol Buffers, which allows addition and removal of fields

create table ruoze_page_views_orc(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by '\t'

stored as orc;

insert into table ruoze_page_views_orc select * from ruoze_page_views;

总结:

比bzip2压缩的数据更小。

(1)ORC+null_compress

create table ruoze_page_views_orc_null(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by '\t'

stored as orc tblproperties ("orc.compress"="NONE");

insert into table ruoze_page_views_orc_null select * from ruoze_page_views;

8、Parquet源于dremel

create table ruoze_page_views_parquet(

track_time string,

url string,

session_id string,

referer string,

ip string,

end_user_id string,

city_id string

)

row format delimited fields terminated by '\t'

stored as parquet;

insert into table ruoze_page_views_parquet select * from ruoze_page_views;

1)Parquet+compress

set parquet.compression=GZIP;

create table ruoze_page_views_parquet_gzip

row format delimited fields terminated by '\t'

stored as parquet

as select * from ruoze_page_views;

六、行式存储and列式存储

行式存储:

一行的数据,存在一个Block中

列式存储:

按列存

总结:

1、大数据中一个表非常多的字段,我们大部分场景只有其中的某些字段,可以直接取数,所以列式存储可以减少系统I/O

2、压缩的话,列式存储压缩比更高。

3、但是如果查较多列的话,列式存储需要重组所有列,因此性能耗费较高。

七、读写、性能层面

对比分析null、ORC、parquet三者在HDFS上读取的数据量

select count(1) from ruoze_page_views where session_id='B58W48U4WKZCJ5D1T3Z9ZY88RU7QA7B1';

19022752

select count(1) from ruoze_page_views_orc where session_id='B58W48U4WKZCJ5D1T3Z9ZY88RU7QA7B1';

1257523

select count(1) from ruoze_page_views_parquet where session_id='B58W48U4WKZCJ5D1T3Z9ZY88RU7QA7B1';

2687077

3496487