Python3 Scrapy爬虫框架(Scrapy/scrapy-redis)

本文由 Luzhuo 编写,转发请保留该信息.

原文: https://blog.csdn.net/Rozol/article/details/80010173

Scrapy

Scrapy 是 Python 写的, 主要用于爬取网站数据, 爬过的链接会自动过滤

使用的 Twisted 异步网络框架

官网: https://scrapy.org/

文档: https://docs.scrapy.org/en/latest/

中文文档: http://scrapy-chs.readthedocs.io/zh_CN/latest/index.html

安装: pip install Scrapy

PyDispatcher-2.0.5 Scrapy-1.5.0 asn1crypto-0.24.0 cffi-1.11.5 cryptography-2.2.2 cssselect-1.0.3 parsel-1.4.0 pyOpenSSL-17.5.0 pyasn1-0.4.2 pyasn1-modules-0.2.1 pycparser-2.18 queuelib-1.5.0 service-identity-17.0.0 w3lib-1.19.0

其他依赖库: pywin32-223

常用命令

- 创建项目:

scrapy startproject mySpider - 爬虫

- 创建爬虫:

scrapy genspider tieba tieba.baidu.comscrapy genspider -t crawl tieba tieba.baidu.com

- 启动爬虫:

scrapy crawl tieba

- 创建爬虫:

- 分布式爬虫:

- 启动爬虫:

scrapy runspider tieba.py - 发布指令:

lpush tieba:start_urls http://tieba.baidu.com/f/index/xxx

- 启动爬虫:

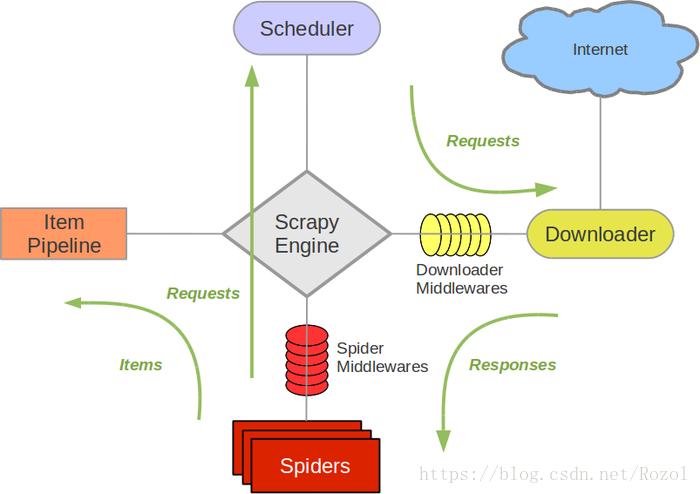

框架

- Scrapy Engine(

引擎): 负责Spider、ItemPipeline、Downloader、Scheduler中间的通讯 - Scheduler(

调度器): 负责接收引擎发来请求, 加入队列, 当引擎需要时交还给引擎 - Downloader(

下载器): 负责下载引擎发送的所有请求, 将结果交还给引擎,引擎交给Spider来处理 - Spider(

爬虫): 负责从结果里提取数据, 获取Item字段需要的数据, 将需要跟进的URL提交给引擎,引擎交给调度器 - Item Pipeline(

管道): 负责处理Spider中获取到的Item, 并进行处理与保存 - Downloader Middlewares(

下载中间件): 扩展下载功能的组件 - Spider Middlewares(Spider中间件): 扩展Spider中间通信的组件

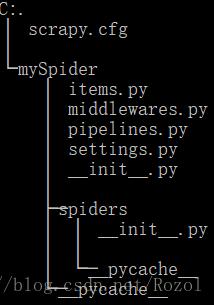

项目目录

- scrapy.cfg: 项目的配置文件

- mySpider: 项目

- mySpider/items.py: 目标文件

- mySpider/pipelines.py: 管道文件

- mySpider/settings.py: 配置文件

- mySpider/spiders: 存储爬虫代码目录

Scrapy的使用

操作步骤

创建项目

scrapy startproject mySpider

编写提取的内容(items.py)

class TiebaItem(scrapy.Item): # 编写要存储的内容 # 贴吧名 name = scrapy.Field() # 简介 summary = scrapy.Field() # 贴吧总人数 person_sum = scrapy.Field() # 贴吧帖子数 text_sum = scrapy.Field()创建爬虫

- cd 到项目目录(mySpider)下

scrapy genspider tieba tieba.baidu.com- 爬虫文件创建在

mySpider.spiders.tieba 编写爬虫

# -*- coding: utf-8 -*- import scrapy from mySpider.items import TiebaItem class TiebaSpider(scrapy.Spider): name = 'tieba' # 爬虫名 allowed_domains = ['tieba.baidu.com'] # 爬虫作用范围 page = 1 page_max = 2 #30 url = 'http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=' start_urls = [url + str(page)] # 爬虫起始地址 # 处理响应文件 def parse(self, response): # scrapy 自带的 xpath 匹配 # .css('title::text') / .re(r'Quotes.*') / .xpath('//title') tieba_list = response.xpath('//div[@class="ba_content"]') # 数据根目录 for tieba in tieba_list: # 从网页中获取需要的数据 name = tieba.xpath('./p[@class="ba_name"]/text()').extract_first() # .extract_first() 转成字符串 summary = tieba.xpath('./p[@class="ba_desc"]/text()').extract_first() person_sum = tieba.xpath('./p/span[@class="ba_m_num"]/text()').extract_first() text_sum = tieba.xpath('./p/span[@class="ba_p_num"]/text()').extract_first() item = TiebaItem() item['name'] = name item['summary'] = summary item['person_sum'] = person_sum item['text_sum'] = text_sum # 将获取的数据交给管道 yield item if self.page < self.page_max: self.page += 1 # 将新请求交给调度器下载 # yield scrapy.Request(next_page, callback=self.parse) yield response.follow(self.url + str(self.page), callback=self.parse) # 回调方法可以自己写个, 也可以用旧的parse

创建管道文件, 存储内容(pipelines.py)

编写settings.py文件

# 管道文件 (优先级同上) ITEM_PIPELINES = { # 'mySpider.pipelines.MyspiderPipeline': 300, 'mySpider.pipelines.TiebaPipeline': 100, }编写代码

import json class TiebaPipeline(object): # 初始化 def __init__(self): self.file = open('tieba.json', 'w', encoding='utf-8') # spider开启时调用 def open_spider(self, spider): pass # 必写的方法, 处理item数据 def process_item(self, item, spider): jsontext = json.dumps(dict(item), ensure_ascii=False) + "\n" self.file.write(jsontext) return item # spider结束后调用 def close_spider(self, spider): self.file.close()

运行爬虫

- 启动

scrapy crawl tieba

- 启动

request和response

# -*- coding: utf-8 -*-

import scrapy

class TiebaSpider(scrapy.Spider):

name = 'reqp'

allowed_domains = ['www.baidu.com']

# start_urls = ['http://www.baidu.com/1']

# 默认 start_urls 使用的是GET请求, 重写该方法, 注释掉 start_urls 就可以在第一次请求时自定义发送请求类型

def start_requests(self):

# 发送一个Form表单数据

return [scrapy.FormRequest(url='http://www.baidu.com/1', formdata={"key1": "value1", "key2": "value2"}, callback=self.parse),]

def parse(self, response):

'''

Response

def __init__(self, url, status=200, headers=None, body='', flags=None, request=None):

url, # 最后url

status=200, # 状态码

headers=None, # 结果头

body='', # 结果体

flags=None,

request=None

'''

'''

Request

def __init__(self, url, callback=None, method='GET', headers=None, body=None,

cookies=None, meta=None, encoding='utf-8', priority=0,

dont_filter=False, errback=None):

url = 'http://www.baidu.com/2' # url

callback = self.parse # response回调

method = "GET" # "GET"(默认), "POST" 等

headers = None # 请求头 (默认有请求头) >> headers = {"xxx":"xxx", "xxx", "xxx"}

body = None # 请求体

cookies=None # cookie >> cookies={'key1':'value1', 'key2': 'value2'}

meta=None # 传送数据(meta={'metakey':'metavalue'}), 可在回调函数取出(metavalue = response.meta['metakey'])

encoding='utf-8' # 字符集

priority=0 # 优先级

dont_filter=False # 不要过滤重复url (默认False)

errback=None # 错误回调

'''

yield scrapy.Request('http://www.baidu.com/2', callback=self.parse_meta, meta={'metakey': 'metavalue'})

# 发送From表单 (POST请求)

yield scrapy.FormRequest(url='http://www.baidu.com/3',

formdata={'key': 'value'}, # From表单数据

callback=self.parse)

# 同 scrapy.Request

yield response.follow('http://www.baidu.com/4', callback=None, method='GET', headers=None, body=None, cookies=None, meta=None, encoding='utf-8',

priority=0, dont_filter=False, errback=None)

def parse_meta(self, response):

print("====== {} ======".format(response.meta['metakey']))

settings.py 的配置

# 爬虫是否遵循robots协议

ROBOTSTXT_OBEY = False # True

# 爬虫并发量 (默认16)

#CONCURRENT_REQUESTS = 32

# 下载延迟(默认:0)

#DOWNLOAD_DELAY = 3

# 是否启动Cookie (默认True)

COOKIES_ENABLED = False

# 请求头

DEFAULT_REQUEST_HEADERS = {

'User-Agent': 'Mozilla/4.0 xxx,

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

# 'Accept-Language': 'en',

}

# 爬虫中间件

#SPIDER_MIDDLEWARES = {

# 'mySpider.middlewares.MyspiderSpiderMiddleware': 543,

#}

# 下载中间件 (优先级: 数字越小越高 [0,1000])

#DOWNLOADER_MIDDLEWARES = {

# 'mySpider.middlewares.MyspiderDownloaderMiddleware': 543,

#}

# 管道文件 (优先级同上)

#ITEM_PIPELINES = {

# 'mySpider.pipelines.MyspiderPipeline': 300,

#}

下载文件(图片)

settings.py配置

# 管道文件 (优先级同上) ITEM_PIPELINES = { # 'mySpider.pipelines.MyspiderPipeline': 300, 'mySpider.pipelines.TiebaPipeline': 100, 'mySpider.pipelines.MyFilesPipeline': 1, } FILES_STORE = "C:\Code\Python_Vir\mySpider\static"爬虫代码

# -*- coding: utf-8 -*- import scrapy from mySpider.items import FileItem class ImageSpider(scrapy.Spider): name = 'image' # 爬虫名 allowed_domains = ['tieba.baidu.com'] # 爬虫作用范围 page = 1 page_max = 30 url = 'http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=' start_urls = [url + str(page)] # 爬虫起始地址 # 处理响应文件 def parse(self, response): # scrapy 自带的 xpath 匹配 # .css('title::text') / .re(r'Quotes.*') / .xpath('//title') tieba_list = response.xpath('//a[@rel="noopener"]') # 数据根目录 for tieba in tieba_list: # 从网页中获取需要的数据 file_url = tieba.xpath('./img[@class="ba_pic"]/@src').extract_first() file_name = tieba.xpath('./div[@class="ba_content"]/p[@class="ba_name"]/text()').extract_first() if (file_url and file_name) is not None: item = FileItem() item['file_url'] = file_url item['file_name'] = file_name yield item if self.page < self.page_max: self.page += 1 yield response.follow(self.url + str(self.page), callback=self.parse)目标内容代码

class FileItem(scrapy.Item): # 图片地址 file_url = scrapy.Field() # 图片名 file_name = scrapy.Field() # 图片路径 file_path = scrapy.Field()管道代码 (TiebaPipeline管道与操作步骤里的代码是一样的, 主要是保存json数据, 这里不拷贝了)

import scrapy from scrapy.utils.project import get_project_settings # 从settings.py获取值 from scrapy.conf import settings # 从settings.py获取值 >> settings["FILES_STORE"] # from scrapy.pipelines.images import ImagesPipeline # 下载图片的管道 from scrapy.pipelines.files import FilesPipeline # 下载文件的管道 import os class MyFilesPipeline(FilesPipeline): # 从settings.py获取变量值 IMAGES_STORE = get_project_settings().get("FILES_STORE") # 重写 发送图片地址 def get_media_requests(self, item, info): file_url = item['file_url'] yield scrapy.Request(file_url) # 返回None表示没有图片可下载 # 重写 下载完成 def item_completed(self, results, item, info): ''' result [(success:成功True 失败False {'checksum': '图片MD5' 'path': '图片存储路径' 'url': '图片下载url' }, # 成功为字典, 失败为Failure )] [(True, {'checksum': '2b00042f7481c7b056c4b410d28f33cf', 'path': 'full/7d97e98f8af710c7e7fe703abc8f639e0ee507c4.jpg', 'url': 'http://www.example.com/images/product1.jpg'}),] ''' file_paths = [x['path'] for ok, x in results if ok] # 对文件进行重命名 name_new = self.IMAGES_STORE + os.sep + item["file_name"] os.rename(self.IMAGES_STORE + os.sep + file_paths[0], name_new + ".jpg") item['file_path'] = name_new return item

跟进爬虫CrawlSpiders

提取链接并跟进爬取 (注意, 部分反爬虫机制可能会提供假的url)

创建命令:

scrapy genspider -t crawl tieba tieba.baidu.com爬虫代码:

# -*- coding: utf-8 -*- import scrapy from scrapy.linkextractors import LinkExtractor from scrapy.spiders import CrawlSpider, Rule from mySpider.items import TiebaItem class TiebaCrawlSpider(CrawlSpider): name = 'tieba_crawl' allowed_domains = ['tieba.baidu.com'] start_urls = ['http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=1'] # 提取页面url规则 page_lx = LinkExtractor(allow=('pn=\d+')) # 提取内容url规则 # content_lx = LinkExtractor(allow=('xxx')) ''' 可写多个Rule匹配规则 LinkExtractor: (用于提取链接) allow = (), # 满足正则提取, 为空提取全部 deny = (), # 不满足正则提取 allow_domains = (), # 满足域提取 deny_domains = (), # 不满足域提取 deny_extensions = None, restrict_xpaths = (), # 满足xpath提取 restrict_css = () # 满足css提取 tags = ('a','area'), # 满足标签 attrs = ('href'), # 满足属性 canonicalize = True, # 是否规范链接 unique = True, # 是否过滤重复链接 process_value = None Rule: link_extractor: LinkExtractor对象(定义需要获取的链接) callback = None: 每获取到一个链接时的回调(不要使用parse函数) cb_kwargs = None, follow = None: 是否对提取的链接进行跟进 process_links = None: 过滤链接 process_request = None: 过滤request ''' rules = ( # 跟进page Rule(page_lx, callback='parse_item', follow=True), # 不跟进内容 # Rule(page_lx, callback='content_item', follow=False), ) def parse_item(self, response): tieba_list = response.xpath('//div[@class="ba_content"]') for tieba in tieba_list: name = tieba.xpath('./p[@class="ba_name"]/text()').extract_first() summary = tieba.xpath('./p[@class="ba_desc"]/text()').extract_first() person_sum = tieba.xpath('./p/span[@class="ba_m_num"]/text()').extract_first() text_sum = tieba.xpath('./p/span[@class="ba_p_num"]/text()').extract_first() item = TiebaItem() item['name'] = name item['summary'] = summary item['person_sum'] = person_sum item['text_sum'] = text_sum yield item def content_item(self, response): pass

保存数据到数据库

import pymongo

# MONGODB数据库的使用

class MongoPipeline(object):

def __init__(self):

# 获取settings.py里的参数

host = settings["MONGODB_HOST"]

port = settings["MONGODB_PORT"]

dbname = settings["MONGODB_DBNAME"]

sheetname= settings["MONGODB_SHEETNAME"]

# MongoDB数据库

client = pymongo.MongoClient(host=host, port=port) # 连接

mydb = client[dbname] # 数据库

self.sheet = mydb[sheetname] # 表

def process_item(self, item, spider):

data = dict(item)

self.sheet.insert(data) # 插入数据

return item

下载中间件

settings.py配置

DOWNLOADER_MIDDLEWARES = { # 'mySpider.middlewares.MyspiderDownloaderMiddleware': 543, 'mySpider.middlewares.RandomUserAgent': 100, 'mySpider.middlewares.RandomProxy': 200, }下载中间件代码

import random import base64 class RandomUserAgent(object): def __init__(self): self.USER_AGENTS = [ 'Mozilla/5.0(Windows;U;WindowsNT6.1;en-us)AppleWebKit/534.50(KHTML,likeGecko)Version/5.1Safari/534.50', ] # 必须写 def process_request(self, request, spider): useragent = random.choice(self.USER_AGENTS) # 修改请求头 (优先级高于settings.py里配置的) request.headers.setdefault("User-Agent", useragent) class RandomProxy(object): def __init__(self): self.PROXIES = [ # {"ip_port": "http://115.215.56.138:22502", "user_passwd": b"user:passwd"}, {"ip_port": "http://115.215.56.138:22502", "user_passwd": b""} ] def process_request(self, request, spider): proxy = random.choice(self.PROXIES) # 无须授权的代理ip if len(proxy['user_passwd']) <= 0: request.meta['proxy'] = proxy['ip_port'] # 需要授权代理ip else: request.meta['proxy'] = proxy['ip_port'] userpasswd_base64 = base64.b64encode(proxy['user_passwd']) request.headers['Proxy-Authorization'] = 'Basic ' + userpasswd_base64 # 按指定格式格式化

其他

保存log

在settings.py里配置

# 保存日志信息

LOG_FILE = "tieba.log"

LOG_LEVEL = "DEBUG"

logging的配置参数

LOG_ENABLED = True # 启动logging (默认True)

LOG_ENCODING = 'utf-8' # logging编码

LOG_FILE = "tieba.log" # logging文件名

LOG_LEVEL = "DEBUG " # log级别 (默认DEBUG)

LOG_STDOUT = False # True进程所有标准输出到写到log文件中 (默认False)

logging的错误级别 (级别最低从最高: 1->5)

1. CRITICAL(严重错误)

2. ERROR(一般错误)

3. WARNING(警告信息)

4. INFO(一般信息)

5. DEBUG(调试信息)

代码中使用logging

import logging

logging.warning("This is a warning")

logging.log(logging.WARNING, "This is a warning")

logger = logging.getLogger()

logger.warning("This is a warning")

Scrapy Shell

Scrapy终端是一个交互终端,供您在未启动spider的情况下尝试及调试您的爬取代码

- 启动

scrapy shell "http://www.baidu.com"

- 获取

- body:

response.body - 内容(解析用):

response.text - 状态码:

response.status - 请求头:

response.headers - 结果url:

response.url - 用浏览器查看response:

view(response) - 转成文字:

response.xpath('//div[@class="ba_content"]').extract() - 第一个转成文字:

response.xpath('//div[@class="ba_content"]').extract_first()

- body:

- 匹配

- xpath:

response.xpath('//div[@class="ba_content"]')

- xpath:

运行代码

from scrapy.linkextractors import LinkExtractor link_extrator = LinkExtractor(allow=("\d+")) links_list = link_extrator.extract_links(response) fetch('http://www.baidu.com')

—

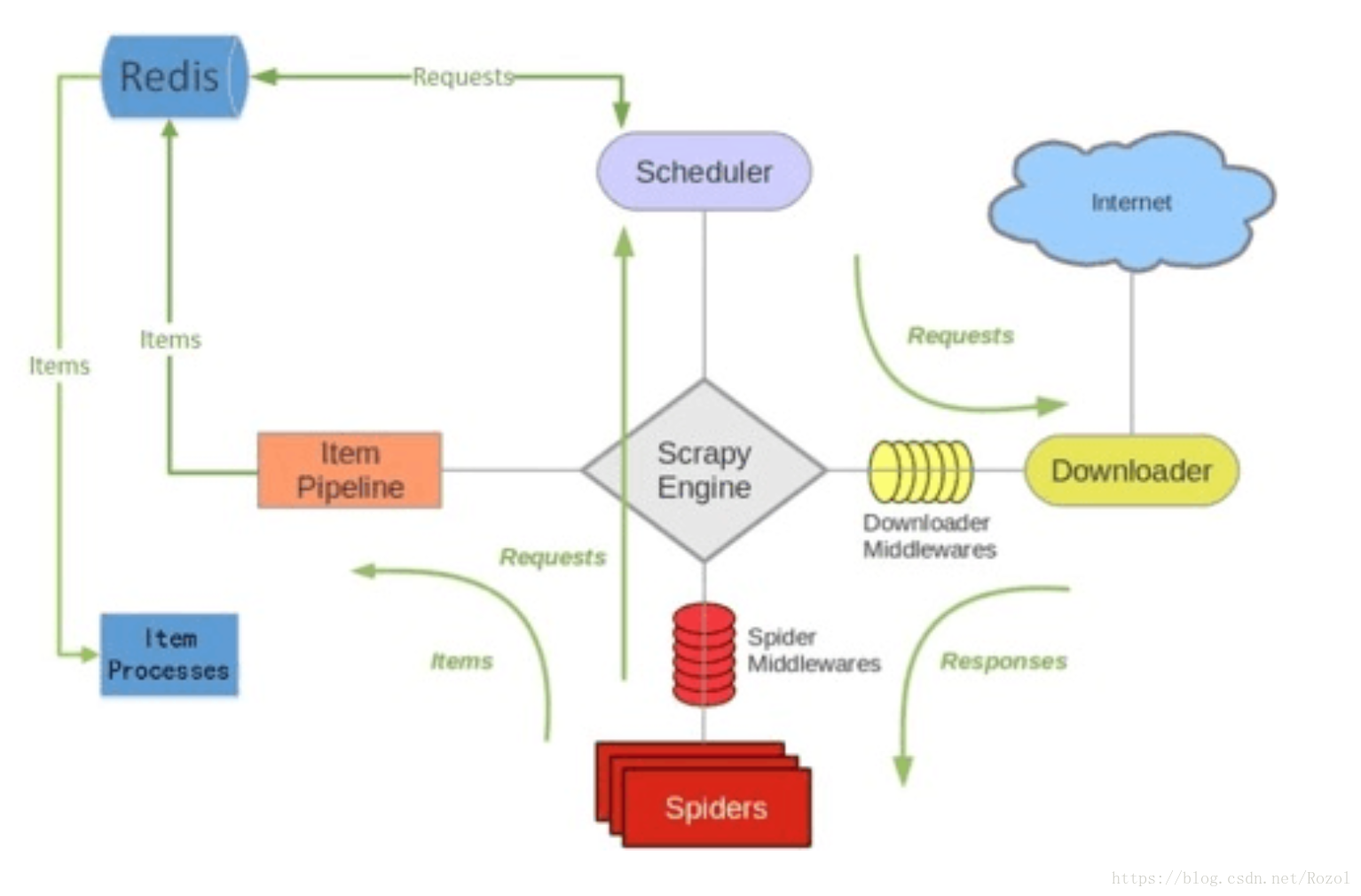

分布式爬虫 scrapy-redis

Scrapy 爬虫框架本身不支持分布式, Scrapy-redis为了实现Scrapy分布式提供了一些以redis为基础的组件

https://github.com/rolando/scrapy-redis.git

框架

Scheduler(调度器):

- Scrapy的Scrapy queue 换成 redis 数据库, 多个 spider 从同一个 redis-server 获取要爬取的request

- Scheduler 对新的request进行入队列操作, 取出下个要爬取的request给爬虫, 使用Scrapy-redis的scheduler组件

Duplication Filter(指纹去重):

- 在scrapy-redis中去重是由Duplication Filter组件来实现的, 通过redis的set不重复的特性

- scrapy-redis调度器从引擎接受request, 并指纹存⼊set检查是否重复, 不重复写⼊redis的request queue

Item Pipeline(管道):

- 引擎将爬取到的Item给Item Pipeline, scrapy-redis组件的Item Pipeline将爬取到的Item存⼊redis的items队列里

Base Spider(爬虫):

- RedisSpider继承了Spider和RedisMixin这两个类, RedisMixin用来从redis读取url的类

- 我们写个Spider类继承RedisSpider, 调用setup_redis函数去连接redis数据库, 然后设置signals(信号)

- 当spider空闲时候的signal, 会调用spider_idle函数, 保证spider是一直活着的状态

- 当有item时的signal, 会调用item_scraped函数, 获取下一个request

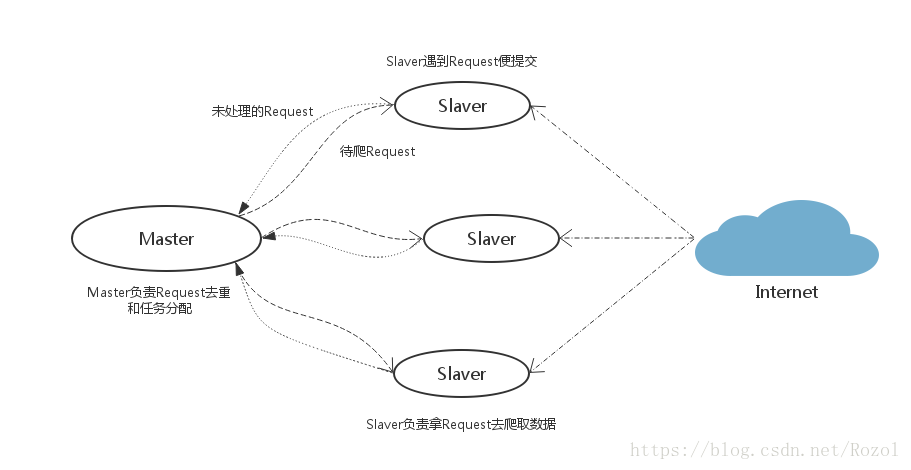

Scrapy-Redis分布式策略

- Master端(核心服务器): 搭建一个Redis数据库, 一般不负责爬取, 只负责Request去重, Request的分配, 数据的存储

- Slaver端(爬虫程序执行端): 负责执行爬虫程序, 从Master端拿任务进行数据抓取, 运行过程中提交新的Request给Master处理

- Master端的Scrapy-Redis调度的任务是Request对象(包含url, callback, headers等信息), 占用大量的Redis存储空间, 会导致爬虫速度降低, 对硬件要求比较高(内存, 网络)

准备

- Redis (仅需Master端启动)

- 官网: https://redis.io/download

- 管理软件: https://redisdesktop.com/download

- redis命令(cd到redis安装目录):

- 启动:

redis-server redis.windows.conf - 连接: 运行

redis-cli

- 连接其他主机:

redis-cli -h 192.168.1.2

- 连接其他主机:

- 切换数据库:

select 1 - 查看键:

keys * - 设置值:

set [键] [值] - 查看值:

get [键] - 删除数据:

del [键/*]

- 启动:

- 修改Master端

redis.windows.conf配置文件

- 注释掉

bind 127.0.0.1 - Ubuntu可选(作为后台线程):

daemonize yes - 关闭保护模式(

protected-mode no)或设置密码

- 注释掉

- 启动

- scrapy-redis(组件)

- 安装:

pip install scrapy-redis scrapy-redis-0.6.8- 下载案例代码:

git clone https://github.com/rolando/scrapy-redis.git

- 安装:

操作步骤

创建项目(同上)

scrapy startproject myRedisSpider

编写要存储的内容(items.py) (除了保存数据从哪来, 其他同上)

class TiebaItem(scrapy.Item): name = scrapy.Field() summary = scrapy.Field() person_sum = scrapy.Field() text_sum = scrapy.Field() # 数据来源 from_url = scrapy.Field() from_name = scrapy.Field() time = scrapy.Field()创建爬虫 (除了保存数据从哪来, 其他同上)

- cd 到项目目录(myRedisSpider)下

scrapy genspider tieba tieba.baidu.com- 爬虫文件创建在

mySpider.spiders.tieba 编写爬虫

# -*- coding: utf-8 -*- import scrapy # 1. 导入 RedisSpider库 from scrapy_redis.spiders import RedisSpider from myRedisSpider.items import TiebaItem # 普通的 Spider 爬虫改造成分布式的 RedisSpider 爬虫 [1,4] # 2. 继承 scrapy.Spider 换成 RedisSpider class TiebaSpider(RedisSpider): name = 'tieba' allowed_domains = ['tieba.baidu.com'] page = 1 page_max = 30 url = 'http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=' # 3. 注释 start_urls # start_urls = [url + str(page)] # 4. 启动所有爬虫端的指令 (爬虫名:start_urls) redis_key = 'tieba:start_urls' def parse(self, response): tieba_list = response.xpath('//div[@class="ba_content"]') # 数据根目录 for tieba in tieba_list: # 从网页中获取需要的数据 name = tieba.xpath('./p[@class="ba_name"]/text()').extract_first() summary = tieba.xpath('./p[@class="ba_desc"]/text()').extract_first() person_sum = tieba.xpath('./p/span[@class="ba_m_num"]/text()').extract_first() text_sum = tieba.xpath('./p/span[@class="ba_p_num"]/text()').extract_first() item = TiebaItem() item['name'] = name item['summary'] = summary item['person_sum'] = person_sum item['text_sum'] = text_sum # 告知数据从哪里来 item['from_url'] = response.url yield item if self.page < self.page_max: self.page += 1 yield response.follow(self.url + str(self.page), callback=self.parse)

配置settings.py文件 (添加以下设置, 其他同上)

ITEM_PIPELINES = { 'myRedisSpider.pipelines.MyredisspiderPipeline': 300, 'scrapy_redis.pipelines.RedisPipeline': 400, # 将数据存到redis数据库 (优先级要比其他管道低(数值高)) } # scrapy_redis去重组件 DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter" # scrapy_redis调度器组件 SCHEDULER = "scrapy_redis.scheduler.Scheduler" # 可中途暂停, 不清空信息 SCHEDULER_PERSIST = True # 默认Scrapy队列模式, 优先级 # SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderPriorityQueue" # 队列模式, 先进先出 #SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderQueue" # 栈模式, 先进后出 #SCHEDULER_QUEUE_CLASS = "scrapy_redis.queue.SpiderStack" # redis主机 REDIS_HOST = '192.168.1.104' REDIS_PORT = '6379' REDIS_ENCODING = "utf-8"设置值的通道

class MyredisspiderPipeline(object): def process_item(self, item, spider): item['time'] = datetime.utcnow() # 格林威治时间 item['from_name'] = spider.name return item运行

- Slaver端开启爬虫: (scrapy runspider 爬虫文件名.py)

- cd到myRedisSpider\spiders爬虫目录

scrapy runspider tieba.py

- Master端发送指令: (lpush redis_key 爬虫起始url)

- cd到redis安装目录并打开redis-cli, 输出命令

lpush tieba:start_urls http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=1

- Slaver端开启爬虫: (scrapy runspider 爬虫文件名.py)

跟进爬虫CrawlSpiders

- 创建命令:

scrapy genspider -t crawl tieba_crawl tieba.baidu.com 爬虫代码

# -*- coding: utf-8 -*- import scrapy from scrapy.linkextractors import LinkExtractor # from scrapy.spiders import CrawlSpider, Rule from scrapy.spiders import Rule # 1. 把 CrawlSpider 换成 RedisCrawlSpider from scrapy_redis.spiders import RedisCrawlSpider from myRedisSpider.items import TiebaItem # 普通的 CrawlSpider 爬虫改造成分布式的 RedisCrawlSpider 爬虫 [1,4] # class TiebaSpider(CrawlSpider): # 2. 把继承 CrawlSpider 换成 RedisCrawlSpider class TiebaSpider(RedisCrawlSpider): name = 'tieba_crawl' # 不要使用动态域, 因为会导致获取不到域而将所有请求过滤 Filtered offsite request to 'tieba.baidu.com' allowed_domains = ['tieba.baidu.com'] # 3. 注释 start_urls # start_urls = ['http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=1'] # 4. 启动所有爬虫端的指令 (爬虫名:start_urls) redis_key = 'tiebacrawl:start_urls' rules = ( Rule(LinkExtractor(allow=('pn=\d+')), callback='parse_item', follow=True), # st=new& ) def parse_item(self, response): tieba_list = response.xpath('//div[@class="ba_content"]') for tieba in tieba_list: name = tieba.xpath('./p[@class="ba_name"]/text()').extract_first() summary = tieba.xpath('./p[@class="ba_desc"]/text()').extract_first() person_sum = tieba.xpath('./p/span[@class="ba_m_num"]/text()').extract_first() text_sum = tieba.xpath('./p/span[@class="ba_p_num"]/text()').extract_first() item = TiebaItem() item['name'] = name item['summary'] = summary item['person_sum'] = person_sum item['text_sum'] = text_sum # 告知数据从哪里来 item['from_url'] = response.url item['from_name'] = self.name yield item运行爬虫 (同上)

scrapy runspider tieba_crawl.py

- 发送指令 (同上)

lpush tiebacrawl:start_urls http://tieba.baidu.com/f/index/forumpark?cn=%E5%86%85%E5%9C%B0%E6%98%8E%E6%98%9F&ci=0&pcn=%E5%A8%B1%E4%B9%90%E6%98%8E%E6%98%9F&pci=0&ct=1&st=new&pn=1

把Redis数据保存到本地数据库

MySQL

- 创建数据库创建表:

- 创建表:

create table tieba (name varchar(1000), summary varchar(1000), person_sum varchar(1000), text_sum varchar(1000), from_url varchar(1000), from_name varchar(1000), time varchar(1000)); - 插入数据:

insert into tieba(name, summary, person_sum, text_sum, from_url, from_name) values("名字", "简介", "111", "222", "http://tieba.baidu.com", "tieba", "xxx");

- 创建表:

python代码实现

#!/usr/bin/env python # coding=utf-8 # pip install redis import redis # pip install pymysql import pymysql import json import sys def process_item(): # 创建数据库连接 redisc = redis.Redis(host="192.168.1.104", port=6379, db=0, encoding='utf-8') mysql = pymysql.connect(host='127.0.0.1', port=3306, user='root', password='root', db='test', charset='utf8mb4') cursor = None while True: try: # 获取数据 source, data = redisc.blpop("tieba:items") item = json.loads(data, encoding="utf-8") # 保存数据 cursor = mysql.cursor() sql = 'insert into tieba(name, summary, person_sum, text_sum, from_url, from_name, time) values(%s, %s, %s, %s, %s, %s, %s)' cursor.execute(sql, [item["name"], item["summary"], item["person_sum"], item["text_sum"], item["from_url"], item["from_name"], item["time"]]); mysql.commit() print(end=">") except: types, value, back = sys.exc_info() # 捕获异常 sys.excepthook(types, value, back) # 打印异常 finally: if cursor is not None: cursor.close() if __name__ == "__main__": process_item()

- 创建数据库创建表: