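#include <iostream>
#include <vector>

using std::vector;

// Linear regression fitted with stochastic gradient descent (SGD)
void lir_SGD()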

{

// Add a leading column of 1s to x; it pairs with the bias weight w[0]

vector<vector<double>> x={

{1,1.1,1.5},

{1,1.3,1.9},

{1,1.5,2.3},

{1,1.7,2.7},

{1,1.9,3.1},

{1,2.1,3.5},

{1,2.3,3.9},

{1,2.5,4.3},

{1,2.7,4.7},

{1,2.9,5.1}

};

vector<double> y={

2.5,

3.2,

3.9,

4.6,

5.3,

6,

6.7,

7.4,

8.1,

8.8

};

// Learning rate (step size)
double alpha=0.01;

vector<double> w(x[0].size(),0.0);

// Start at 0 so the loop below runs exactly 10000 passes and loop-1 reports that count
int loop=0;

while(loop++<10000) {

for(int i=0;i<x.size();i++) {

// Compute the prediction w*x for sample i

double wx=0;

for(int j=0;j<w.size();j++) {

wx+=w[j]*x[i][j];

}

// Compute the error between the prediction w*x and the target y

double wx_y=wx-y[i];

// Compute the derivative for each w; each w[j] corresponds to one position x[i][j]

for(int j=0;j<w.size();j++) {

// Derivative of the squared error 0.5*(w*x-y)^2 with respect to w[j]

double deri=wx_y*x[i][j];

// Update w[j] by stepping against the gradient

w[j]-=alpha*deri;

}

}

}

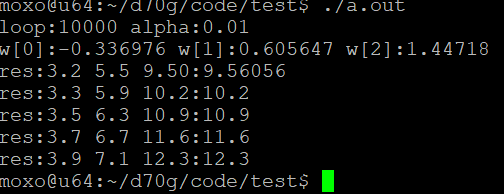

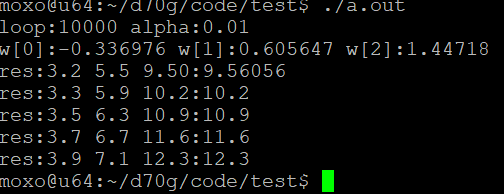

std::cout<<"loop:"<<loop-1<<" alpha:"<<alpha<<std::endl;

// Print the value of each w

for(int i=0;i<w.size();i++) {

std::cout<<"w["<<i<<"]:"<<w[i]<<" ";

if(i==w.size()-1) std::cout<<std::endl;

}

// Verify the result: each label lists x1, x2 and the expected y, followed by the prediction

std::cout<<"res:3.1 5.5 9.50:"<<w[0]+w[1]*3.1+w[2]*5.5<<std::endl;

std::cout<<"res:3.3 5.9 10.2:"<<w[0]+w[1]*3.3+w[2]*5.9<<std::endl;

std::cout<<"res:3.5 6.3 10.9:"<<w[0]+w[1]*3.5+w[2]*6.3<<std::endl;

std::cout<<"res:3.7 6.7 11.6:"<<w[0]+w[1]*3.7+w[2]*6.7<<std::endl;

std::cout<<"res:3.9 7.1 12.3:"<<w[0]+w[1]*3.9+w[2]*7.1<<std::endl;

}
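
The per-sample update inside `lir_SGD` can also be factored into small reusable functions. The following is only a sketch under stated assumptions: the names `predict`, `sgd_step` and `mse` are hypothetical helpers that do not appear in the original code, and each sample is assumed to already carry the leading 1 for the bias weight.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: prediction w*x for one sample.
// Assumes xi already contains the leading 1 that pairs with w[0].
double predict(const std::vector<double>& w, const std::vector<double>& xi) {
    assert(w.size() == xi.size());
    double wx = 0.0;
    for (std::size_t j = 0; j < w.size(); ++j) {
        wx += w[j] * xi[j];
    }
    return wx;
}

// Hypothetical helper: one SGD step on a single sample for the loss
// 0.5*(w*x - y)^2, whose derivative with respect to w[j] is (w*x - y)*x[j].
void sgd_step(std::vector<double>& w, const std::vector<double>& xi,
              double yi, double alpha) {
    // Compute the error once, before any weight changes.
    const double err = predict(w, xi) - yi;
    for (std::size_t j = 0; j < w.size(); ++j) {
        w[j] -= alpha * err * xi[j];
    }
}

// Hypothetical helper: mean squared error over a data set,
// usable as a rough check of how well the weights fit.
double mse(const std::vector<double>& w,
           const std::vector<std::vector<double>>& x,
           const std::vector<double>& y) {
    assert(x.size() == y.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double err = predict(w, x[i]) - y[i];
        sum += err * err;
    }
    return x.empty() ? 0.0 : sum / static_cast<double>(x.size());
}
```

With these helpers, the training loop reduces to calling `sgd_step(w, x[i], y[i], alpha)` for every sample in every pass, and `mse(w, x, y)` can be printed after training alongside the weights.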