# XPath

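Method 1: find the element in the browser's developer tools, right-click it and choose Copy XPath. You sometimes need to delete `tbody` from the copied path, because the browser inserts `<tbody>` into tables in the DOM it displays even when the page source does not contain that tag.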
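
A small offline check of the `tbody` problem, assuming lxml's default HTML parser, which (unlike a browser) does not add `<tbody>` to a table that lacks one. The table and its `id="t"` are made up for illustration:

```python
from lxml import etree

# A table as it appears in raw page source, without a <tbody> tag
source = '<table id="t"><tr><td>cell</td></tr></table>'
html = etree.HTML(source)

# Path as copied from devtools: contains tbody, so it matches nothing here
copied = html.xpath('//*[@id="t"]/tbody/tr/td/text()')
# Same path with tbody removed: matches the cell text
fixed = html.xpath('//*[@id="t"]/tr/td/text()')
```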

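Method 2: learn basic XPath and write the expressions yourself. The core syntax is enough, since most expressions just follow the parent/child nesting of the tags.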
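
The basic syntax can be tried offline with lxml on a short HTML string. The snippet below is made up for illustration; it shows `//` (match at any depth), `/` (direct child), `[@attr="value"]` (attribute filter), `[n]` (1-based position among same-named siblings) and `text()` (the element's text):

```python
from lxml import etree

source = '''
<div id="content">
  <ol>
    <li><span class="title">Movie A</span><span>9.7</span></li>
    <li><span class="title">Movie B</span><span>9.6</span></li>
  </ol>
</div>
'''
html = etree.HTML(source)

# Start anywhere at the element with id="content", then step down child by child
titles = html.xpath('//*[@id="content"]/ol/li/span[@class="title"]/text()')
# span[2] is the second <span> inside each <li>
ratings = html.xpath('//ol/li/span[2]/text()')
# li[2] narrows the match to the second list item only
second_rating = html.xpath('//ol/li[2]/span[2]/text()')
```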

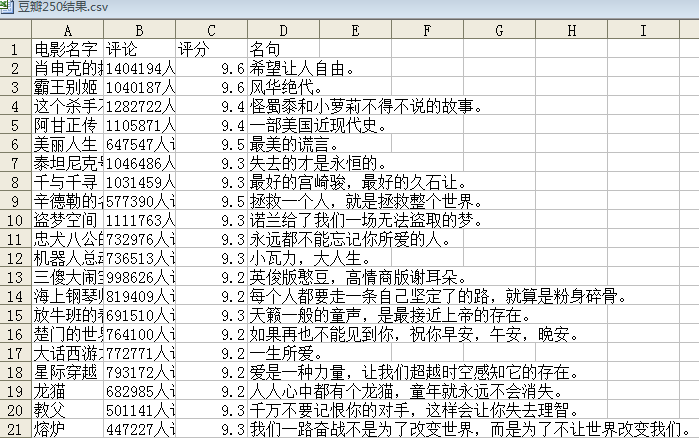

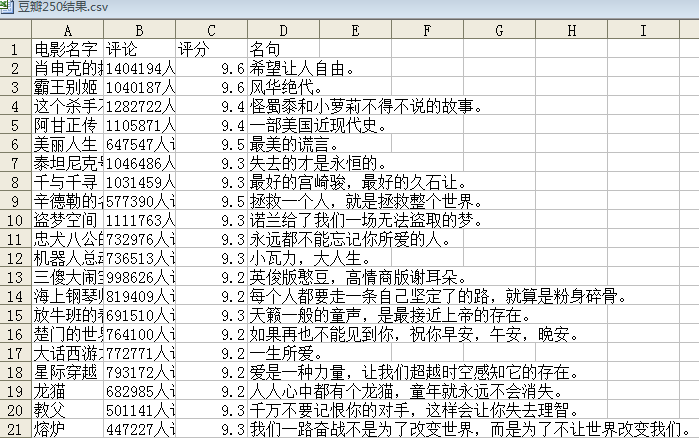

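To view the CSV in Excel: open the file in Notepad, change its encoding to ANSI, save it, and then open it in Excel. Otherwise Excel may show the UTF-8 Chinese text as garbled characters.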
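
An alternative to re-saving in Notepad is to write the file with Python's `utf-8-sig` codec, which prepends a UTF-8 byte-order mark (bytes `EF BB BF`); Excel generally uses that mark to recognize the encoding. A minimal sketch that writes only the header row to a temporary file (the temporary path is an assumption for the demo):

```python
import csv
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), 'demo.csv')

# 'utf-8-sig' writes the byte-order mark before the first row
with open(path, 'w', newline='', encoding='utf-8-sig') as f:
    writer = csv.writer(f)
    writer.writerow(['电影名字', '评论', '评分', '名句'])

with open(path, 'rb') as f:
    raw = f.read()
```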

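```python
import csv
import urllib.request

from lxml import etree  # third-party: install it first with `pip install lxml` in cmd

CSV_PATH = 'C:\\Users\\lenovo\\Desktop\\mmm.csv'
headers = ['Title', 'Number of ratings', 'Rating', 'Quote']
# Douban typically rejects urllib's default User-Agent (HTTP 418),
# so send a browser-like one; the exact string here is an assumption
request_headers = {'User-Agent': 'Mozilla/5.0'}

with open(CSV_PATH, 'a+', newline='', encoding='utf-8') as f:
    writer = csv.writer(f)
    writer.writerow(headers)  # write the header row first

for page in range(10):
    # Each page lists 25 movies and start= grows by 25, so format() builds the URL
    url = 'https://movie.douban.com/top250?start={}&filter='.format(page * 25)
    request = urllib.request.Request(url, headers=request_headers)
    response = urllib.request.urlopen(request).read().decode()  # page source
    html = etree.HTML(response)  # XPath is worth learning; web automation uses it too

    # One <li> per movie. Querying each field relative to its own <li> keeps the
    # columns aligned when a movie has no quote (a separate quote list would be
    # shorter than the others, so indexing it by position raises IndexError)
    movies = html.xpath('//*[@id="content"]/div/div[1]/ol/li')

    # 'a+' appends to the existing file instead of overwriting it
    with open(CSV_PATH, 'a+', newline='', encoding='utf-8') as f:
        writer = csv.writer(f)  # wrap the file object in a csv writer
        for movie in movies:
            name = movie.xpath('./div/div[2]/div[1]/a/span[1]/text()')  # title
            comments = movie.xpath('./div/div[2]/div[2]/div/span[4]/text()')  # number of ratings
            star = movie.xpath('./div/div[2]/div[2]/div/span[2]/text()')  # rating
            quote = movie.xpath('./div/div[2]/div[2]/p[2]/span/text()')  # quote
            # xpath() returns a list: take the first match, or '' if there is none
            row = [field[0] if field else '' for field in (name, comments, star, quote)]
            writer.writerow(row)  # csv writes one list per row
```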
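
To check the extraction logic without requesting Douban, the per-movie parsing can be pulled into a function and run on a hand-written snippet. `parse_page` and `SAMPLE` are hypothetical: the relative paths are the original absolute XPaths split at `li`, and the snippet only imitates the nesting those paths expect, not Douban's real markup. The second movie deliberately has no quote:

```python
from lxml import etree


def parse_page(page_source):
    """Return one [title, ratings, rating, quote] row per movie <li>."""
    html = etree.HTML(page_source)
    rows = []
    for movie in html.xpath('//*[@id="content"]/div/div[1]/ol/li'):
        fields = [
            movie.xpath('./div/div[2]/div[1]/a/span[1]/text()'),
            movie.xpath('./div/div[2]/div[2]/div/span[4]/text()'),
            movie.xpath('./div/div[2]/div[2]/div/span[2]/text()'),
            movie.xpath('./div/div[2]/div[2]/p[2]/span/text()'),
        ]
        # A missing field becomes '' instead of shifting the other columns
        rows.append([f[0] if f else '' for f in fields])
    return rows


SAMPLE = '''
<div id="content"><div><div><ol>
  <li><div><div></div><div>
    <div><a><span>Movie A</span></a></div>
    <div><p>info</p><div><span></span><span>9.0</span><span></span><span>100 ratings</span></div>
      <p><span>Quote A</span></p></div>
  </div></div></li>
  <li><div><div></div><div>
    <div><a><span>Movie B</span></a></div>
    <div><p>info</p><div><span></span><span>8.5</span><span></span><span>50 ratings</span></div></div>
  </div></div></li>
</ol></div></div></div>
'''

rows = parse_page(SAMPLE)
```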