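Continuing from part two, where the three master components were deployed, this part deploys the node machines.

Components deployed here: kubelet and kube-proxy

I Environment preparation (everything below is run on the master)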

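1 Create the directories and copy the two components (the same directories must also exist on each node, since the scp commands below copy into them)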

mkdir /home/yx/kubernetes/{bin,cfg,ssl} -p

# Copy to both node machines

scp -r /home/yx/src/kubernetes/server/bin/kubelet [email protected]:/home/yx/kubernetes/bin

scp -r /home/yx/src/kubernetes/server/bin/kube-proxy [email protected]:/home/yx/kubernetes/bin
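With more than one node, the directory creation and the two copies can be wrapped in a loop. The following is only a sketch: the function name, the DRY_RUN switch and the host arguments are illustrative assumptions, and it presumes passwordless SSH from the master to each node.

```shell
#!/usr/bin/env bash
# Sketch: prepare the directories on each node and copy kubelet and kube-proxy.
# SRC_BIN and DEST_ROOT mirror the paths used in this article.
SRC_BIN=/home/yx/src/kubernetes/server/bin
DEST_ROOT=/home/yx/kubernetes

copy_node_binaries() {
    # Usage: copy_node_binaries user@host [user@host ...]
    # With DRY_RUN=1 the commands are printed instead of executed.
    local node bin
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh $node mkdir -p $DEST_ROOT/bin $DEST_ROOT/cfg $DEST_ROOT/ssl"
        else
            ssh "$node" "mkdir -p $DEST_ROOT/bin $DEST_ROOT/cfg $DEST_ROOT/ssl" || return 1
        fi
        for bin in kubelet kube-proxy; do
            if [ "${DRY_RUN:-0}" = "1" ]; then
                echo "scp $SRC_BIN/$bin $node:$DEST_ROOT/bin/"
            else
                scp "$SRC_BIN/$bin" "$node:$DEST_ROOT/bin/" || return 1
            fi
        done
    done
}
```

Running `DRY_RUN=1 copy_node_binaries yx@node1 yx@node2` (hypothetical host names) prints the commands first, so they can be reviewed before the real run.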

2 Bind the kubelet-bootstrap user to the system cluster role

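kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap

3 Generate the two files bootstrap.kubeconfig and kube-proxy.kubeconfig with the kubeconfig.sh script, whose contents are shown below.

Run bash kubeconfig.sh 192.168.18.104 <ssl-dir>, where the first argument is the master node IP and the second is the path to the SSL certificates (the script expects ca.pem, kube-proxy.pem and kube-proxy-key.pem there). It produces the two files above, which are then copied to both node machines.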
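The script that follows pins BOOTSTRAP_TOKEN to a fixed value and keeps the random generator commented out. If a fresh token is wanted, something along these lines could produce and validate one. This is a sketch, and the function names are made up for illustration. The apiserver only accepts the token it was started with, so a new value would also have to go into the token file the master uses (assumed to be passed via --token-auth-file in the previous part), followed by an apiserver restart.

```shell
#!/usr/bin/env bash
# Sketch: generate a 128-bit bootstrap token and write it in token.csv format.

new_bootstrap_token() {
    # 16 random bytes rendered as 32 lowercase hex characters.
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

write_token_csv() {
    # Usage: write_token_csv TOKEN [FILE]
    # Columns: token, user name, uid, group (same layout as in kubeconfig.sh).
    local token="$1"
    local file="${2:-token.csv}"
    case "$token" in
        ''|*[!0-9a-f]*) echo "token must be lowercase hex" >&2; return 1 ;;
    esac
    if [ "${#token}" -ne 32 ]; then
        echo "token must be 32 characters long" >&2
        return 1
    fi
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$token" > "$file"
}
```

For example, `write_token_csv "$(new_bootstrap_token)"` writes token.csv in the current directory; the same value then has to be used for BOOTSTRAP_TOKEN.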

# Create the TLS bootstrapping token
#BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')
BOOTSTRAP_TOKEN=71b6d986c47254bb0e63b2a20cfaf560

cat > token.csv <<EOF
${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

#----------------------

APISERVER=$1
SSL_DIR=$2

# Create the kubelet bootstrapping kubeconfig
export KUBE_APISERVER="https://$APISERVER:6443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=bootstrap.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials kubelet-bootstrap \
  --token=${BOOTSTRAP_TOKEN} \
  --kubeconfig=bootstrap.kubeconfig

# Set the context parameters
kubectl config set-context default \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=bootstrap.kubeconfig

# Select the default context
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

#----------------------

# Create the kube-proxy kubeconfig file
kubectl config set-cluster kubernetes \
  --certificate-authority=$SSL_DIR/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy \
  --client-certificate=$SSL_DIR/kube-proxy.pem \
  --client-key=$SSL_DIR/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

4 Copy the generated bootstrap.kubeconfig and kube-proxy.kubeconfig to the nodes

scp bootstrap.kubeconfig kube-proxy.kubeconfig [email protected]:/home/yx/kubernetes/cfg

scp bootstrap.kubeconfig kube-proxy.kubeconfig [email protected]:/home/yx/kubernetes/cfg

II Node installation

1 Deploy the kubelet component

Create the kubelet configuration file:

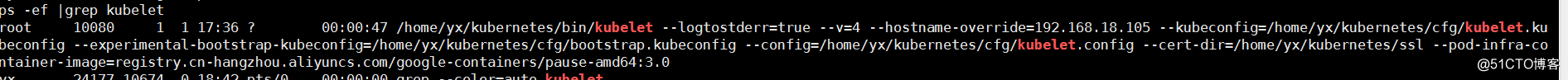

cat /home/yx/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.105 \
--kubeconfig=/home/yx/kubernetes/cfg/kubelet.kubeconfig \
--experimental-bootstrap-kubeconfig=/home/yx/kubernetes/cfg/bootstrap.kubeconfig \
--config=/home/yx/kubernetes/cfg/kubelet.config \
--cert-dir=/home/yx/kubernetes/ssl \
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

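Parameter notes:

--hostname-override: the host name shown for this node in the cluster

--kubeconfig: location of the kubelet kubeconfig file; the file does not need to be created by hand, it is generated automatically

--bootstrap-kubeconfig: the bootstrap.kubeconfig file generated earlier (the configuration above uses the older spelling --experimental-bootstrap-kubeconfig, which is deprecated in favor of this flag)

--cert-dir: directory where the issued certificates are stored

--pod-infra-container-image: the pause image that manages the Pod network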

Create kubelet.config

cat /home/yx/kubernetes/cfg/kubelet.config

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 192.168.18.105
port: 10250
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local.
failSwapOn: false

Startup script (systemd unit)

cat /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
After=docker.service
Requires=docker.service

[Service]
EnvironmentFile=/home/yx/kubernetes/cfg/kubelet
ExecStart=/home/yx/kubernetes/bin/kubelet $KUBELET_OPTS
Restart=on-failure
KillMode=process

[Install]
WantedBy=multi-user.target

Start

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

Check that it started
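One way to do that check is a small helper that asks systemd for the unit state and prints the latest log lines when the unit is not active. This is a sketch only; the function name check_unit is an assumption, while `systemctl is-active` and `journalctl -u` are the standard systemd commands.

```shell
#!/usr/bin/env bash
# Sketch: report whether a systemd unit is running; show recent logs if not.

check_unit() {
    # Usage: check_unit UNIT
    local unit="$1"
    local state
    # is-active prints the state and exits non-zero unless it is "active".
    state=$(systemctl is-active "$unit" 2>/dev/null) || true
    if [ "$state" = "active" ]; then
        echo "$unit is running"
    else
        echo "$unit is not running (state: ${state:-unknown})" >&2
        # The last log lines usually point at a bad flag or a missing file.
        journalctl -u "$unit" -n 20 --no-pager >&2
        return 1
    fi
}
```

On the node this would be called as `check_unit kubelet`. The same helper works for kube-proxy once that service is installed.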

2 Deploy the kube-proxy component

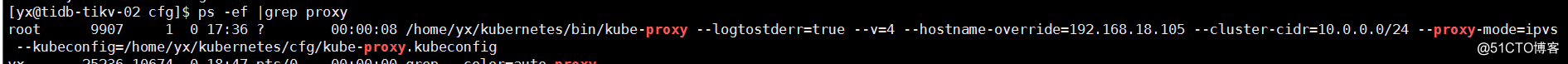

Create the kube-proxy configuration file:

cat /home/yx/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true \
--v=4 \
--hostname-override=192.168.18.105 \
--cluster-cidr=10.0.0.0/24 \
--proxy-mode=ipvs \
--kubeconfig=/home/yx/kubernetes/cfg/kube-proxy.kubeconfig"

Startup script (systemd unit)

[yx@tidb-tikv-02 cfg]$ cat /usr/lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=-/home/yx/kubernetes/cfg/kube-proxy
ExecStart=/home/yx/kubernetes/bin/kube-proxy $KUBE_PROXY_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target

Start

systemctl daemon-reload

systemctl enable kube-proxy

systemctl restart kube-proxy

Verify
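Because the configuration sets --proxy-mode=ipvs, one thing worth verifying besides the service state is that the IPVS kernel modules are loaded; without them kube-proxy is expected to fall back to iptables mode. The helper below is a sketch under assumptions: the function name is invented, the module list covers only the core ip_vs modules (the conntrack module is left out because its name differs between kernel versions), and modules compiled into the kernel do not appear in /proc/modules.

```shell
#!/usr/bin/env bash
# Sketch: list the IPVS kernel modules that are not currently loaded.

missing_ipvs_modules() {
    # Usage: missing_ipvs_modules [MODULES_FILE]
    # MODULES_FILE defaults to /proc/modules, where the first column is the
    # module name followed by a space.
    local file="${1:-/proc/modules}"
    local mod
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
        grep -q "^$mod " "$file" || echo "$mod"
    done
}
```

An empty result means all four modules are present. Anything it prints can be loaded with `modprobe <module>`, and if the ipvsadm tool is installed, `ipvsadm -Ln` shows the virtual servers kube-proxy has created.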

Repeat the same steps on the other node; just remember to change the IP.
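If the configuration files are copied from the first node, the IP change can be scripted instead of edited by hand. A minimal sketch, assuming GNU sed (for `-i`) and a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: replace the node IP in config files copied from another node.

retarget_node_ip() {
    # Usage: retarget_node_ip OLD_IP NEW_IP FILE [FILE ...]
    local old="$1"
    local new="$2"
    local pattern file
    shift 2
    # Escape the dots so they match literally instead of "any character".
    pattern=$(printf '%s' "$old" | sed 's/\./\\./g')
    for file in "$@"; do
        sed -i "s/$pattern/$new/g" "$file" || return 1
    done
}
```

In this setup the IP appears in three files under /home/yx/kubernetes/cfg: kubelet and kube-proxy (--hostname-override) and kubelet.config (address). A call such as `retarget_node_ip 192.168.18.105 192.168.18.104 kubelet kubelet.config kube-proxy` from that directory would cover them.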

III Approve the nodes joining the cluster (on the master):

List the requests

[yx@tidb-tidb-03 cfg]$ kubectl get csr

NAME                                                   AGE     REQUESTOR           CONDITION
node-csr-jn-F4xSn1LAwJhom9l7hlW0XuhDQzo-RQrnkz1j4q6Y   16m     kubelet-bootstrap   Pending
node-csr-kB2CFmTqkCA2Ix5qYGSXoAP3-ctes-cHcjs7D84Wb38   5h55m   kubelet-bootstrap   Approved,Issued
node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0   22s     kubelet-bootstrap   Pending

Approve the request

kubectl certificate approve node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0

certificatesigningrequest.certificates.k8s.io/node-csr-wWa0cKQ6Ap9Bcqap3m9d9ZBqBclwkLB84W8bpB3g_m0 approved
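When several requests are pending, copying each name by hand gets tedious. The filter below is a sketch (the function name is an assumption) that extracts the names of pending requests from the `kubectl get csr` table so they can be passed on to the approve command.

```shell
#!/usr/bin/env bash
# Sketch: pick out the CSR names whose CONDITION column is exactly "Pending".

pending_csrs() {
    # Reads `kubectl get csr` output on stdin and prints one name per line.
    # The header row and "Approved,Issued" rows do not match and are skipped.
    awk '$NF == "Pending" { print $1 }'
}
```

A possible use is `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve` (`-r` is the GNU xargs flag that skips the run when there is no input). Approving everything that is pending is only reasonable in a lab where every requesting node is known.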

# After approval the condition changes from Pending to Approved,Issued

IV Check the cluster status (on the master)

[yx@tidb-tidb-03 cfg]$ kubectl get node

NAME             STATUS   ROLES    AGE   VERSION
192.168.18.104   Ready    <none>   41s   v1.12.1
192.168.18.105   Ready    <none>   52s   v1.12.1
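A node may not show Ready immediately after its request is approved. As a sketch, the filter below (the name is illustrative) lists any node whose STATUS column is something other than Ready, which is handy when polling the command above.

```shell
#!/usr/bin/env bash
# Sketch: list nodes whose STATUS column is not exactly "Ready".

not_ready_nodes() {
    # Reads `kubectl get node` output on stdin; the first row is the header.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

`kubectl get node | not_ready_nodes` prints nothing once every node is Ready.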

[yx@tidb-tidb-03 cfg]$ kubectl get cs

NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health": "true"}
etcd-2               Healthy   {"health": "true"}
etcd-0               Healthy   {"health": "true"}

That completes the binary installation of k8s; the next step is to start actually using the cluster.