1 Example description

Build a simulated XOR dataset, classify it with a multilayer neural network, and use dropout to reduce overfitting.
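To make the XOR structure concrete, here is a minimal sketch of how the labels arise. It assumes the four cluster offsets passed to `generate` in the listing and the `Y % 2` step applied afterwards; the names `OFFSETS`, `parity_label`, and `xor_label` are illustrative and do not appear in the original code.

```python
# Cluster-centre offsets in the order generate() builds the classes:
# class 0 sits at the base mean, classes 1..3 use the entries of `diff`.
OFFSETS = [(0.0, 0.0), (3.0, 0.0), (3.0, 3.0), (0.0, 3.0)]


def parity_label(class_id):
    """Label used by the listing: class id modulo 2 (the Y % 2 step)."""
    return class_id % 2


def xor_label(dx, dy):
    """XOR of 'shifted along x' and 'shifted along y'."""
    return int((dx > 0) != (dy > 0))


labels = [parity_label(c) for c in range(len(OFFSETS))]
xor_labels = [xor_label(dx, dy) for dx, dy in OFFSETS]

# Print class id, offset, parity label, and XOR label side by side
for class_id, offset in enumerate(OFFSETS):
    print(class_id, offset, labels[class_id], xor_labels[class_id])
```

Diagonally opposite clusters share a label, so no single straight line separates the two colours, which is why a hidden layer is needed.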

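In the code in section 2, a dropout layer is added after layer_1, and the dropout keep_prob is defined as a placeholder so that it can be set at run time. Passing keep_prob as 0.6 in the session's run call means that each hidden node is kept with probability 0.6, so on average only about 60% of the nodes take part in each training step. Because this slows learning down, the learning rate is raised to 0.01 to speed up training.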

Also, when testing, remember to set keep_prob to 1.
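The effect of keep_prob can be sketched without TensorFlow. The snippet below is a plain-Python approximation of what `tf.nn.dropout` does to a layer's output: each value is kept with probability keep_prob and scaled by 1/keep_prob, otherwise it is set to 0. The `dropout` helper, the all-ones activations, and the seed are assumptions made for illustration only.

```python
import random


def dropout(activations, keep_prob, rng):
    """Keep each value with probability keep_prob and rescale it by 1/keep_prob."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)   # fixed seed, chosen only for repeatability
hidden = [1.0] * 10000   # hypothetical hidden-layer activations

train_out = dropout(hidden, 0.6, rng)  # training: about 60% of units survive
test_out = dropout(hidden, 1.0, rng)   # testing: nothing is dropped

kept_fraction = sum(1 for v in train_out if v != 0.0) / len(train_out)
print("kept fraction:", round(kept_fraction, 2))
print("mean after dropout:", round(sum(train_out) / len(train_out), 2))
print("unchanged at keep_prob=1:", test_out == hidden)
```

The rescaling keeps the expected activation the same in training and testing, which is why setting keep_prob to 1 at test time needs no further adjustment.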

2 Code

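# Note: this listing uses the TensorFlow 1.x graph API (tf.placeholder, sessions)
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from sklearn.utils import shuffle
from sklearn.preprocessing import OneHotEncoder
from matplotlib.colors import colorConverter, ListedColormap

# One-hot helper: fitting the encoder on the integer range [start, end) makes the set of categories dynamic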

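def onehot(y, start, end):
    # Fit the encoder on every integer class id in [start, end)
    ohe = OneHotEncoder()
    a = np.linspace(start, end - 1, end - start)
    b = np.reshape(a, [-1, 1]).astype(np.int32)
    ohe.fit(b)
    # y must be a column of integer class ids; the result is a dense one-hot array
    c = ohe.transform(y).toarray()
    return c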

def generate(sample_size, num_classes, diff, regression=False):
    # Reseeding here means every call with the same arguments returns the same samples
    np.random.seed(10)
    mean = np.random.randn(2)
    cov = np.eye(2)

    # diff holds one offset per extra class, so len(diff) should equal num_classes - 1
    samples_per_class = int(sample_size / num_classes)

    # Class 0 is drawn around the base mean
    X0 = np.random.multivariate_normal(mean, cov, samples_per_class)
    Y0 = np.zeros(samples_per_class)

    # Each entry of diff shifts the mean to create one more class
    for ci, d in enumerate(diff):
        X1 = np.random.multivariate_normal(mean + d, cov, samples_per_class)
        Y1 = (ci + 1) * np.ones(samples_per_class)
        X0 = np.concatenate((X0, X1))
        Y0 = np.concatenate((Y0, Y1))

    if not regression:  # one-hot encode the labels, e.g. class 0 -> [1, 0, ...]
        Y0 = np.reshape(Y0, [-1, 1])
        # print(Y0.astype(np.int32))
        Y0 = onehot(Y0.astype(np.int32), 0, num_classes)
        # print(Y0)

    X, Y = shuffle(X0, Y0)
    # print(X, Y)
    return X, Y

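'''
Build the input data
'''
# Fix the seed so the random data is reproducible
np.random.seed(10)

input_dim = 2
num_classes = 4

# Four Gaussian clusters; regression=True returns integer class ids instead of one-hot vectors
X, Y = generate(120, num_classes, [[3.0, 0], [3.0, 3.0], [0, 3.0]], True)
Y = Y % 2  # classes 0 and 2 -> label 0, classes 1 and 3 -> label 1 (XOR layout)

# colors = ['r' if l == 0.0 else 'b' for l in Y[:]]
# plt.scatter(X[:,0], X[:,1], c=colors)

# Split the samples by label so each group gets its own marker
xr = []
xb = []
for (l, k) in zip(Y[:], X[:]):
    if l == 0.0:
        xr.append([k[0], k[1]])
    else:
        xb.append([k[0], k[1]])
xr = np.array(xr)
xb = np.array(xb)

plt.scatter(xr[:, 0], xr[:, 1], c='r', marker='+')
plt.scatter(xb[:, 0], xb[:, 1], c='b', marker='o')
plt.show()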

'''
Build the model
'''
Y = np.reshape(Y, [-1, 1])

learning_rate = 0.01  # 1e-4
n_input = 2
n_label = 1
n_hidden = 200

x = tf.placeholder(tf.float32, [None, n_input])
y = tf.placeholder(tf.float32, [None, n_label])

weights = {
    'h1': tf.Variable(tf.truncated_normal([n_input, n_hidden], stddev=0.1)),
    'h2': tf.Variable(tf.random_normal([n_hidden, n_label], stddev=0.1))
}
biases = {
    'h1': tf.Variable(tf.zeros([n_hidden])),
    'h2': tf.Variable(tf.zeros([n_label]))
}

layer_1 = tf.nn.relu(tf.add(tf.matmul(x, weights['h1']), biases['h1']))

# keep_prob is a placeholder so it can be 0.6 during training and 1.0 during testing
keep_prob = tf.placeholder(tf.float32)

'''
A dropout layer is added after layer_1
'''
layer_1_drop = tf.nn.dropout(layer_1, keep_prob)

# Output layer with a Leaky ReLU activation (slope 0.01 for negative inputs)
layer2 = tf.add(tf.matmul(layer_1_drop, weights['h2']), biases['h2'])
y_pred = tf.maximum(layer2, 0.01 * layer2)

reg = 0.01  # only used by the commented-out L2-regularized loss below
# loss = tf.reduce_mean((y_pred-y)**2) + tf.nn.l2_loss(weights['h1'])*reg + tf.nn.l2_loss(weights['h2'])*reg
loss = tf.reduce_mean((y_pred - y) ** 2)

# The decayed rate is only used by the commented-out optimizer below
global_step = tf.Variable(0, trainable=False)
decaylearning_rate = tf.train.exponential_decay(learning_rate, global_step, 1000, 0.9)

train_step = tf.train.AdamOptimizer(learning_rate).minimize(loss)
# train_step = tf.train.AdamOptimizer(decaylearning_rate).minimize(loss, global_step=global_step)

'''
Train the model
'''
sess = tf.InteractiveSession()
sess.run(tf.global_variables_initializer())

for i in range(20000):
    X, Y = generate(1000, num_classes, [[3.0, 0], [3.0, 3.0], [0, 3.0]], True)
    Y = Y % 2
    Y = np.reshape(Y, [-1, 1])

    # Dropout is active during training: keep_prob = 0.6
    _, loss_val = sess.run([train_step, loss], feed_dict={x: X, y: Y, keep_prob: 0.6})

    if i % 1000 == 0:
        print("Step:", i, "Current loss:", loss_val)

# Plot the last training batch together with the learned decision regions
# colors = ['r' if l == 0.0 else 'b' for l in Y[:]]
# plt.scatter(X[:,0], X[:,1], c=colors)
xr = []
xb = []
for (l, k) in zip(Y[:], X[:]):
    if l == 0.0:
        xr.append([k[0], k[1]])
    else:
        xb.append([k[0], k[1]])
xr = np.array(xr)
xb = np.array(xb)

plt.scatter(xr[:, 0], xr[:, 1], c='r', marker='+')
plt.scatter(xb[:, 0], xb[:, 1], c='b', marker='o')

nb_of_xs = 200
xs1 = np.linspace(-1, 8, num=nb_of_xs)
xs2 = np.linspace(-1, 8, num=nb_of_xs)
xx, yy = np.meshgrid(xs1, xs2)  # create the grid

# Initialize and fill the classification plane
classification_plane = np.zeros((nb_of_xs, nb_of_xs))
for i in range(nb_of_xs):
    for j in range(nb_of_xs):
        # classification_plane[i,j] = nn_predict(xx[i,j], yy[i,j])
        # Dropout is disabled for prediction: keep_prob = 1.0
        pred = sess.run(y_pred, feed_dict={x: [[xx[i, j], yy[i, j]]], keep_prob: 1.0})
        # pred has shape (1, 1); take the scalar and truncate it to an integer class
        classification_plane[i, j] = int(pred[0, 0])

# Create a color map to show the classification colors of each grid point
cmap = ListedColormap([
    colorConverter.to_rgba('r', alpha=0.30),
    colorConverter.to_rgba('b', alpha=0.30)])

# Plot the classification plane with decision boundary and input samples
plt.contourf(xx, yy, classification_plane, cmap=cmap)
plt.show()

'''
Test with 12 samples
'''
xTrain, yTrain = generate(12, num_classes, [[3.0, 0], [3.0, 3.0], [0, 3.0]], True)
yTrain = yTrain % 2

# colors = ['r' if l == 0.0 else 'b' for l in yTrain[:]]
# plt.scatter(xTrain[:,0], xTrain[:,1], c=colors)
xr = []
xb = []
for (l, k) in zip(yTrain[:], xTrain[:]):
    if l == 0.0:
        xr.append([k[0], k[1]])
    else:
        xb.append([k[0], k[1]])
xr = np.array(xr)
xb = np.array(xb)

plt.scatter(xr[:, 0], xr[:, 1], c='r', marker='+')
plt.scatter(xb[:, 0], xb[:, 1], c='b', marker='o')
# plt.show()

yTrain = np.reshape(yTrain, [-1, 1])
# Test loss is evaluated with dropout disabled: keep_prob = 1.0
print("loss:\n", sess.run(loss, feed_dict={x: xTrain, y: yTrain, keep_prob: 1.0}))

nb_of_xs = 200
xs1 = np.linspace(-1, 8, num=nb_of_xs)
xs2 = np.linspace(-1, 8, num=nb_of_xs)
xx, yy = np.meshgrid(xs1, xs2)  # create the grid

# Initialize and fill the classification plane
classification_plane = np.zeros((nb_of_xs, nb_of_xs))
for i in range(nb_of_xs):
    for j in range(nb_of_xs):
        # classification_plane[i,j] = nn_predict(xx[i,j], yy[i,j])
        pred = sess.run(y_pred, feed_dict={x: [[xx[i, j], yy[i, j]]], keep_prob: 1.0})
        # pred has shape (1, 1); take the scalar and truncate it to an integer class
        classification_plane[i, j] = int(pred[0, 0])

# Create a color map to show the classification colors of each grid point
cmap = ListedColormap([
    colorConverter.to_rgba('r', alpha=0.30),
    colorConverter.to_rgba('b', alpha=0.30)])

# Plot the classification plane with decision boundary and input samples
plt.contourf(xx, yy, classification_plane, cmap=cmap)
plt.show()

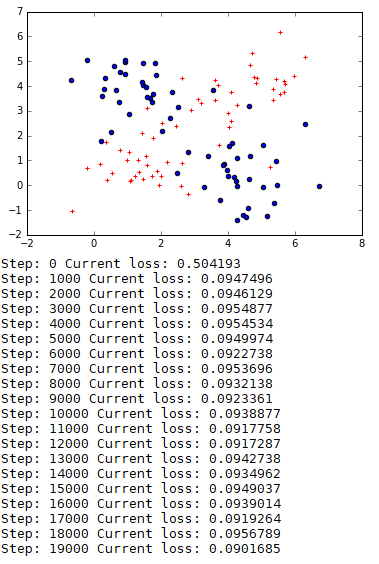

三 运行结果

四 说明

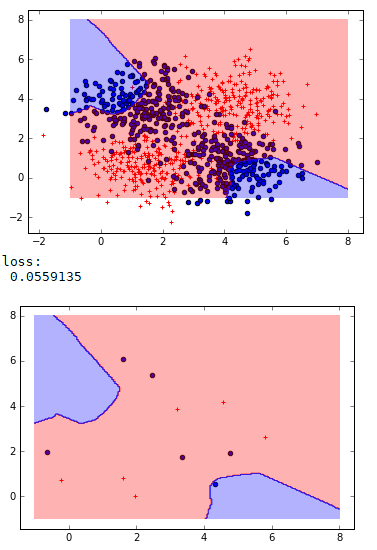

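The test results are good: this time the model's test loss is even lower than the training loss, reaching 0.05. This is the effect of dropout. Note that the training loss is measured with dropout active (keep_prob 0.6), while the test loss is measured with keep_prob 1.0.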

The loss fluctuates late in training, which suggests that the learning rate is somewhat too large at that stage.
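One way to shrink the late-stage learning rate is the exponential decay schedule that the listing already defines but leaves unused, `tf.train.exponential_decay(learning_rate, global_step, 1000, 0.9)`. With its default non-staircase setting, that schedule computes `learning_rate * decay_rate ** (global_step / decay_steps)`. The sketch below reproduces only this formula in plain Python; the function name `decayed_learning_rate` is illustrative, and whether the schedule actually removes the fluctuation in this example is not something the original run shows.

```python
def decayed_learning_rate(base_lr, step, decay_steps, decay_rate):
    """Continuous exponential decay: base_lr * decay_rate ** (step / decay_steps)."""
    return base_lr * decay_rate ** (step / decay_steps)


# Same settings as the unused schedule in the listing: 0.01, 1000 steps, 0.9
schedule = {step: decayed_learning_rate(0.01, step, 1000, 0.9)
            for step in (0, 1000, 5000, 10000, 20000)}

for step, lr in schedule.items():
    print("step", step, "learning rate", round(lr, 6))
```

To use it in the listing, the commented-out optimizer line that passes `decaylearning_rate` and `global_step=global_step` would replace the active one, so that `global_step` is incremented on every training step.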