Environment

kubernetes v1.10.11

kubeflow v0.6.0.rc.1

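About this version:

1) Compared with v0.5.1, v0.6.0 uses Istio.

2) The package manager has changed from ksonnet to kustomize.

3) You also need to provide a load balancer similar to the ones available on public clouds.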

Dependencies:

1) kubernetes

2) kfctl

3) A load balancer

This post uses MetalLB, an open-source load balancer. Deploy it as follows.

Run:

kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.1/manifests/metallb.yaml

root@debian:/home/urmsone# kubectl get pods -n metallb-system

NAME READY STATUS RESTARTS AGE

controller-5f69bbf78c-6598x 1/1 Running 0 2h

speaker-85v8c 1/1 Running 0 5d

speaker-sd5b9 1/1 Running 0 5d

Configure the ConfigMap:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: my-ip-space
      protocol: layer2
      addresses:
      - 192.168.221.128/28

PS: the IP address range here must match your cluster's actual network.
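One way to sanity-check the range before applying the ConfigMap is to test whether a given address falls inside the pool's CIDR block. The following is a minimal sketch in POSIX shell; the helper names `ip_to_int` and `ip_in_cidr` are made up for this illustration and are not part of MetalLB or kubectl.

```shell
# Convert a dotted-quad IPv4 address (e.g. 192.168.221.128) to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed (exit 0) if address $1 lies inside CIDR block $2.
ip_in_cidr() {
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Example: the /28 pool above spans 192.168.221.128 through 192.168.221.143.
if ip_in_cidr 192.168.221.130 192.168.221.128/28; then
  echo "192.168.221.130 is inside the pool"
fi
```

The pool should sit in the same subnet as the cluster nodes and must not overlap addresses already handed out on that network.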

1. Build the kfctl binary

Run git clone https://github.com/kubeflow/kubeflow.git

root@node:/home/urmsone# git clone https://github.com/kubeflow/kubeflow.git

Cloning into 'kubeflow'...

remote: Enumerating objects: 10, done.

remote: Counting objects: 100% (10/10), done.

remote: Compressing objects: 100% (10/10), done.

remote: Total 27026 (delta 0), reused 1 (delta 0), pack-reused 27016

Receiving objects: 100% (27026/27026), 203.84 MiB | 2.25 MiB/s, done.

Resolving deltas: 100% (12566/12566), done.

Run:

cd kubeflow/bootstrap

make build-kfctl-container

ln -s /home/urmsone/kubeflow/bootstrap/bin/kfctl /usr/bin/kfctl

root@node:/home/urmsone# cd kubeflow/bootstrap

root@node:/home/urmsone/kubeflow/bootstrap# make build-kfctl-container

DOCKER_BUILDKIT=1 docker build \

--build-arg GOLANG_VERSION=1.12 \

--build-arg VERSION=v0.6.0-rc.0-23-g8da1e470 \

--target=kfctl \

--tag gcr.io/kubeflow-images-public/kfctl/builder:v0.6.0-rc.0-23-g8da1e470 .

......

root@debian:/home/urmsone# kfctl

A client CLI to create kubeflow applications for specific platforms or 'on-prem'

to an existing k8s cluster.

Usage:

kfctl [command]

Available Commands:

apply Deploy a generated kubeflow application.

completion Generate shell completions

delete Delete a kubeflow application.

generate Generate a kubeflow application where resources is one of 'platform|k8s|all'.

help Help about any command

init Create a kubeflow application under <[path/]name>

show Show a generated kubeflow application.

version Print the version of kfctl.

Flags:

-h, --help help for kfctl

Use "kfctl [command] --help" for more information about a command.

PS:

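First pick the matching version on the Kubeflow website and get the Git repository address from there.

Problems you may run into while executing the commands above: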

1) The Docker version is too old and does not support the syntax used in the Dockerfile, so the build fails:

root@node:/home/urmsone/kubeflow/bootstrap# make build-kfctl-container

DOCKER_BUILDKIT=1 docker build \

--build-arg GOLANG_VERSION=1.12 \

--build-arg VERSION=v0.6.0-rc.0-23-g8da1e470 \

--target=kfctl \

--tag gcr.io/kubeflow-images-public/kfctl/builder:v0.6.0-rc.0-23-g8da1e470 .

unknown flag: --target

See 'docker build --help'.

Makefile:116: recipe for target 'build-kfctl-container' failed

make: *** [build-kfctl-container] Error 125

Run vim Makefile and look at the target:

...

build-kfctl-container:
	DOCKER_BUILDKIT=1 docker build \
		--build-arg GOLANG_VERSION=$(GOLANG_VERSION) \
		--build-arg VERSION=$(TAG) \
		--target=$(KFCTL_TARGET) \
		--tag $(KFCTL_IMG)/builder:$(TAG) .
	@echo Built $(KFCTL_IMG)/builder:$(TAG)
	mkdir -p bin
	docker create \
		--name=temp_kfctl_container \
		$(KFCTL_IMG)/builder:$(TAG)
	docker cp temp_kfctl_container:/usr/local/bin/kfctl ./bin/kfctl
	docker rm temp_kfctl_container
	@echo Exported kfctl binary to bin/kfctl

...

Then open the Dockerfile in vim; it contains:

FROM barebones_base as kfctl

COPY --from=kfctl_base /go/src/github.com/kubeflow/kubeflow/bootstrap/bin/kfctl /usr/local/bin

CMD ["/bin/bash", "-c", "trap : TERM INT; sleep infinity & wait"]

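Syntax like this (a multi-stage build, which the --target flag also relies on) is not supported by old Docker releases: multi-stage builds were added in Docker 17.05, and this post upgrades to an 18.06 release. Check your own Docker version first.

Run docker info: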

...

root@debian:/home/urmsone# docker info

Containers: 17

Running: 16

Paused: 0

Stopped: 1

Images: 52

Server Version: 17.03.3-ce

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

...

So upgrading Docker to 18.06.2-ce resolves this error.

Run apt-cache madison docker-ce | grep 18.06:
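The version check can be scripted. Below is a minimal sketch, assuming `sort -V` (GNU coreutils or a compatible implementation) is available; `version_ge` and `strip_suffix` are hypothetical helper names, and the 17.05 threshold is the release in which multi-stage builds and `--target` first shipped. Pass a stricter threshold if your Dockerfile needs newer features.

```shell
# Succeed (exit 0) if dotted version $1 is greater than or equal to $2.
version_ge() {
  lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
  [ "$lowest" = "$2" ]
}

# Strip a suffix such as "-ce" so that only the numeric part is compared.
strip_suffix() {
  echo "${1%%-*}"
}

# Compare the two server versions that appear in this post against 17.05.
for v in 17.03.3-ce 18.06.2-ce; do
  if version_ge "$(strip_suffix "$v")" 17.05; then
    echo "$v: multi-stage builds and --target are available"
  else
    echo "$v: too old for multi-stage builds and --target"
  fi
done
```

On a machine with a running daemon, the server version can be read with `docker version --format '{{.Server.Version}}'` and passed to `version_ge` in the same way.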

root@node:/home/urmsone/kubeflow/bootstrap# apt-cache madison docker-ce |grep 18.06

docker-ce | 18.06.3~ce~3-0~debian | https://download.docker.com/linux/debian stretch/stable amd64 Packages

docker-ce | 18.06.2~ce~3-0~debian | https://download.docker.com/linux/debian stretch/stable amd64 Packages

docker-ce | 18.06.1~ce~3-0~debian | https://download.docker.com/linux/debian stretch/stable amd64 Packages

docker-ce | 18.06.0~ce~3-0~debian | https://download.docker.com/linux/debian stretch/stable amd64 Packages

Run apt-get install docker-ce=18.06.3~ce~3-0~debian:

bash: https://download.docker.com/linux/debian: No such file or directory

root@debian:/home/urmsone# apt-get install docker-ce=18.06.3~ce~3-0~debian

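Reading package lists... Done

Building dependency tree

Reading state information... Done

The following packages were automatically installed and are no longer required:

golang-1.7 golang-1.7-doc golang-1.7-go golang-1.7-src golang-src

Use 'apt autoremove' to remove them.

The following packages will be upgraded:

docker-ce

1 upgraded, 0 newly installed, 0 to remove and 6 not upgraded.

Need to get 40.2 MB of archives.

After this operation, 0 B of additional disk space will be used.

0% [Working]^C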

...

After the installation finishes, run systemctl restart docker.

Run docker info again:

...

root@debian:/home/urmsone# docker info

Containers: 17

Running: 16

Paused: 0

Stopped: 1

Images: 52

Server Version: 18.06.2-ce

Storage Driver: overlay2

Backing Filesystem: extfs

...

Finally, remove DOCKER_BUILDKIT=1 from the Makefile and rerun make build-kfctl-container.

root@debian:/home/urmsone# ls kubeflow/bootstrap/bin/

total 86268

-rwxr-xr-x 1 root root 88336256 Jul 18 13:14 kfctl
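If you prefer not to edit the Makefile by hand, the DOCKER_BUILDKIT=1 prefix can be stripped with sed. This is only a sketch: `strip_buildkit` is a hypothetical helper, and it writes the result to standard output rather than editing the file in place so that the original stays untouched until you decide to replace it.

```shell
# Print the contents of Makefile $1 with every "DOCKER_BUILDKIT=1 " prefix removed.
strip_buildkit() {
  sed 's/DOCKER_BUILDKIT=1[ ]*//' "$1"
}

# Demonstrate on a throwaway copy of the relevant recipe line.
demo=$(mktemp)
printf 'build-kfctl-container:\n\tDOCKER_BUILDKIT=1 docker build \\\n' > "$demo"
strip_buildkit "$demo"
rm -f "$demo"
```

Against the real file this could be used as `strip_buildkit Makefile > Makefile.new && mv Makefile.new Makefile`, followed by `make build-kfctl-container`.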

2. Set environment variables

Run:

export PATH=$PATH:"<path to kfctl>"

export KFAPP=kfapp

export CONFIG="https://raw.githubusercontent.com/kubeflow/kubeflow/master/bootstrap/config/kfctl_existing_arrikto.0.6.yaml"

3. Set the username and password

export KUBEFLOW_USER_EMAIL="<your email>"

export KUBEFLOW_PASSWORD="12341234"

PS:

These are the username and password used to log in to the Kubeflow Dashboard.
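Since kfctl reads these values from the environment, it can help to confirm they are all set before moving on. A minimal sketch follows; `require_vars` is a hypothetical helper written for this post, not something kfctl provides.

```shell
# Succeed only if every named variable is set to a non-empty value;
# report each missing name on stderr.
require_vars() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ]
}

# Check the variables exported in steps 2 and 3 before invoking kfctl.
if require_vars KFAPP CONFIG KUBEFLOW_USER_EMAIL KUBEFLOW_PASSWORD; then
  echo "environment looks complete"
fi
```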

4. Initialize Kubeflow

Run:

kfctl init ${KFAPP} --config=${CONFIG} -V

cd ${KFAPP}

kfctl generate all -V

kfctl apply all -V

PS:

kfctl apply all -V may fail with the errors below:

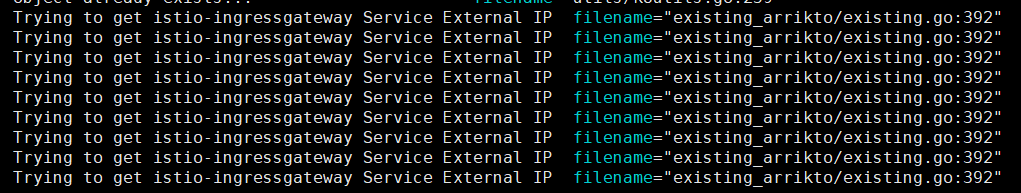

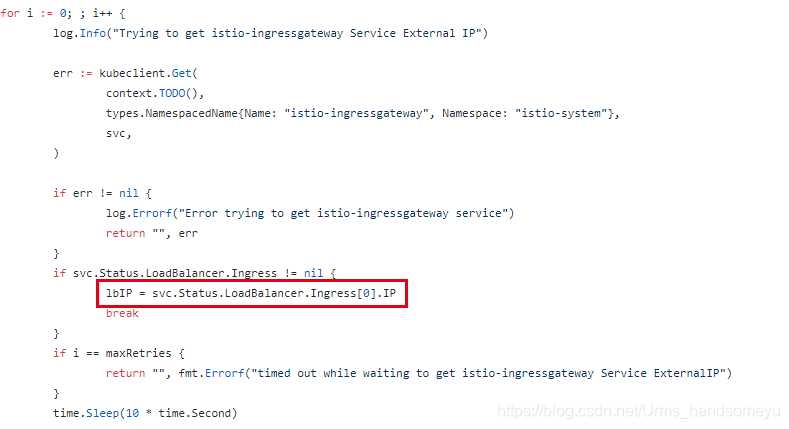

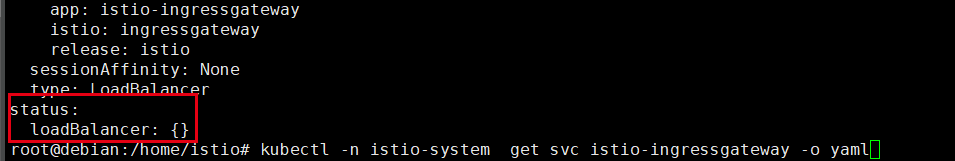

1) kfctl keeps trying to get the istio-ingressgateway Service external IP and then times out.

Cause: in v0.6.0 the istio-ingressgateway Service is of type LoadBalancer, and my local environment had no external load balancer configured for the Istio ingress gateway.

2) no matches for kind "Viewer" in version "kubeflow.org/v1beta1"

Cause: the manifest in the new source tree is missing the spec.version field. Add version: v1beta1 under spec in ${KFAPP}/kustomize/pipelines-viewer/base/crd.yaml.
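Once a load balancer such as MetalLB is in place, you can wait for the Service to receive its external IP instead of rerunning kfctl blindly. The sketch below is a generic polling helper; `wait_for_output` is a hypothetical name, and the kubectl invocation in the comment assumes the Service lives in the istio-system namespace, as shown later in this post.

```shell
# Run a command repeatedly until it prints non-empty output.
# $1 = maximum attempts, $2 = seconds between attempts, rest = command.
wait_for_output() {
  attempts=$1
  delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    out=$("$@" 2>/dev/null)
    if [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Intended use against a cluster (not executed here):
#   wait_for_output 30 10 kubectl get svc istio-ingressgateway -n istio-system \
#     -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The jsonpath expression prints nothing while the address is still pending, which is what lets the loop tell "not yet assigned" apart from "assigned".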

root@debian:/home# cat kfapp/kustomize/pipelines-viewer/base/crd.yaml

apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: viewers.kubeflow.org
spec:
  version: v1beta1
  group: kubeflow.org
  names:
    kind: Viewer
    listKind: ViewerList
    plural: viewers
    shortNames:
    - vi
    singular: viewer
  scope: Namespaced
  versions:
  - name: v1beta1
    served: true
    storage: true

scheduledworkflow has the same problem; fix it the same way.

5. Check the status of the Kubeflow components

Run:
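Because the same edit is needed in more than one manifest, it can be scripted. The following is a rough sketch, assuming the manifest uses two-space indentation and a top-level `spec:` line as in the file shown above; `add_spec_version` is a hypothetical helper, and it prints the patched manifest instead of modifying the file.

```shell
# Print CRD manifest $1, adding "  version: $2" right after the top-level
# "spec:" line unless a spec-level version field is already present.
add_spec_version() {
  if grep -q '^  version:' "$1"; then
    cat "$1"
  else
    awk -v ver="$2" '
      { print }
      /^spec:/ && !added { print "  version: " ver; added = 1 }
    ' "$1"
  fi
}
```

For example, `add_spec_version crd.yaml v1beta1 > crd.yaml.new && mv crd.yaml.new crd.yaml` patches a file in two steps, and running it a second time leaves the file unchanged.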

root@debian:/home/urmsone# kubectl get po -n kubeflow

NAME READY STATUS RESTARTS AGE

admission-webhook-bootstrap-stateful-set-0 1/1 Running 0 1h

admission-webhook-deployment-8475c5c945-dqtnm 1/1 Running 0 1h

argo-ui-84789c589d-5bjl9 1/1 Running 0 4d

centraldashboard-5dd95fc764-mcttq 1/1 Running 0 4d

dex-d8f84bc64-5xg6k 1/1 Running 0 4d

jupyter-web-app-deployment-fc8469bd6-5b74q 1/1 Running 0 1h

katib-controller-58c9d55cfb-qv8jk 1/1 Running 0 4d

katib-db-5b5689997f-fngpm 1/1 Running 0 4d

katib-manager-6cc55fc8d-bf275 1/1 Running 0 1h

katib-manager-rest-7578bb4b9d-s4bc7 1/1 Running 0 4d

katib-suggestion-bayesianoptimization-769646bcd6-dj6hp 1/1 Running 0 4d

katib-suggestion-grid-6b88f49b96-t72sm 1/1 Running 0 1h

katib-suggestion-hyperband-ccc9bcbfb-9zspb 1/1 Running 0 4d

katib-suggestion-nasrl-55b7d7c446-2sjrv 1/1 Running 0 1h

katib-suggestion-random-c5bfcd878-t2kmn 1/1 Running 0 4d

katib-ui-85cd99df8-lk5q9 1/1 Running 0 1h

metacontroller-0 1/1 Running 0 1h

minio-658b4fbf98-vpvhm 1/1 Running 0 1h

ml-pipeline-8687854bfb-clqgf 1/1 Running 0 4d

ml-pipeline-persistenceagent-666667f565-p2pcs 1/1 Running 0 4d

ml-pipeline-scheduledworkflow-654499c9ff-g5r8r 1/1 Running 0 1h

ml-pipeline-ui-6db695b769-xd5km 1/1 Running 0 1h

ml-pipeline-viewer-controller-deployment-55f75475b9-22ldn 1/1 Running 0 1h

mysql-c4c4c8f69-h9qqh 1/1 Running 0 1h

notebook-controller-deployment-7f7d7bb6fc-974px 1/1 Running 0 1h

profiles-deployment-7747ccb4f5-48ggl 2/2 Running 0 1h

pytorch-operator-74d98f49cd-4nvmh 1/1 Running 0 1h

spartakus-volunteer-86cdd46c74-9bfww 1/1 Running 0 1h

tensorboard-74f44b67f-rlhsw 1/1 Running 0 1h

tf-job-dashboard-768f7c9959-8dptz 1/1 Running 0 1h

tf-job-operator-7b9489bf94-4bkhc 1/1 Running 0 1h

workflow-controller-8655c8644c-4lxsv 1/1 Running 0 1h

PS:

Once every pod is in the Running state, congratulations: Kubeflow has been deployed successfully!

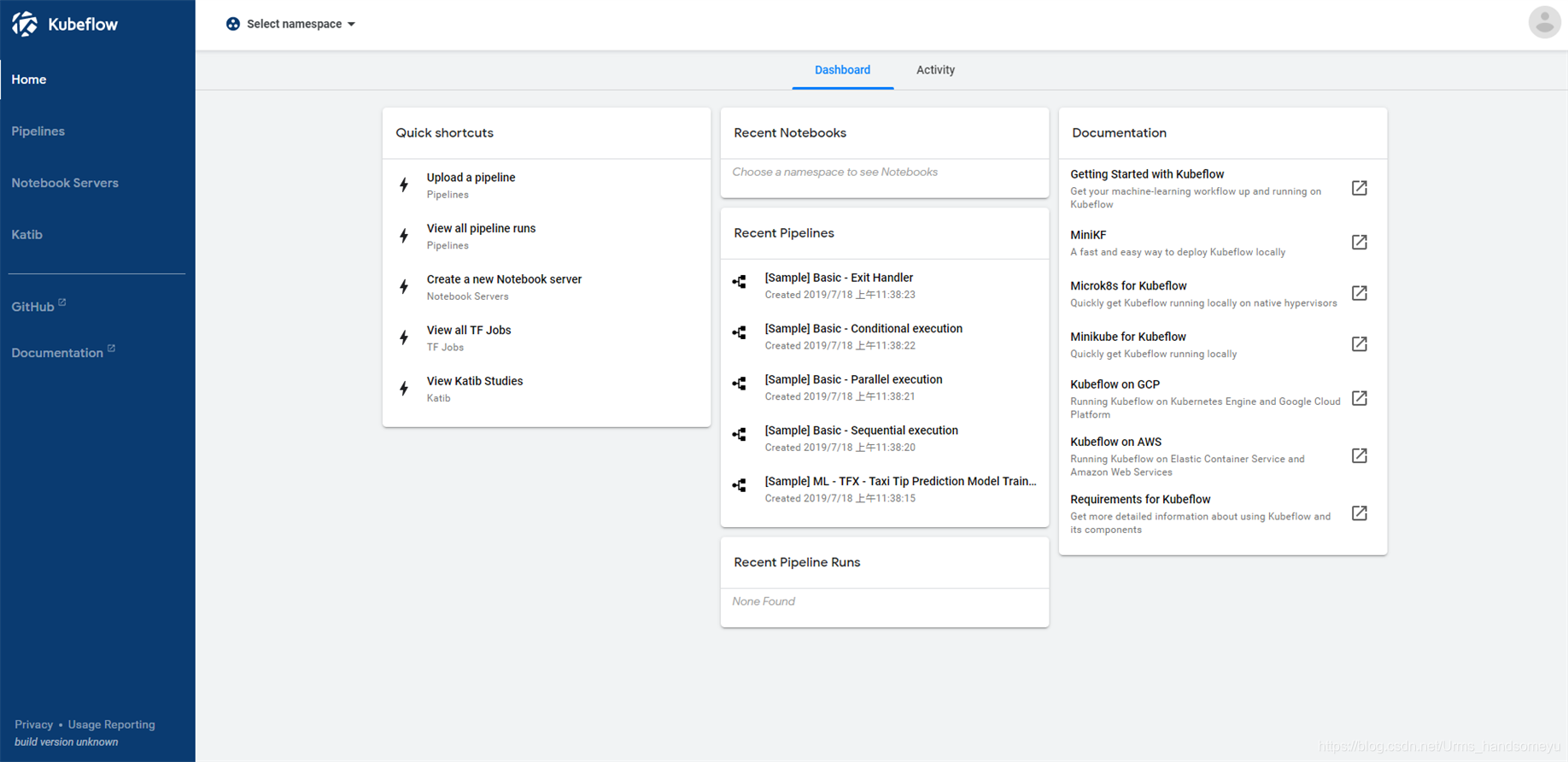
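With this many pods it is easy to miss one that is not Running. A small filter over the `kubectl get po` output can list only the problem pods; this is a sketch that assumes the default column layout shown above (NAME, READY, STATUS, ...), and `not_running` is a hypothetical helper name.

```shell
# Read `kubectl get po` output on stdin and print the names of pods
# whose STATUS column is anything other than Running.
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Intended use against a cluster (not executed here):
#   kubectl get po -n kubeflow | not_running
```

An empty result means every pod in the namespace reports Running.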

6. Log in to the Kubeflow Dashboard

Run:

root@debian:/home/urmsone# kubectl get svc -n istio-system istio-ingressgateway -o yaml |grep ip

- ip: 192.168.221.128

root@debian:/home/urmsone# kubectl get svc -n kubeflow |grep dex

dex ClusterIP 10.110.115.76 <none> 5556/TCP 4d

PS:

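Access the Kubeflow Dashboard at the istio-ingressgateway IP plus the Service port.

7. pytorch-operator is not in the Running state

The container logs show the error "msg":"CRD doesn't exist. Exiting".

Cause: the version used by the pytorch-operator container's startup command must match the version the CRD was created with; otherwise the operator exits with "msg":"CRD doesn't exist. Exiting". The root problem is that the version in the pytorch-operator manifest in the source tree is wrong and has to be corrected by hand. That is all for today; I will add the fix when I have time.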

PS:

kustomize build ~/someApp | kubectl apply -f -

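Once a CRD has been created, changing the version field in its manifest and then running apply/replace does not take effect. You must delete the CRD and create it again.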
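The delete-then-create sequence can be wrapped in a small function. This is a sketch under stated assumptions: `recreate_crd` and the `KUBECTL` override variable are hypothetical names introduced here (the override only exists so the commands can be previewed with `KUBECTL=echo`), and the manifest path is whatever file you edited. Keep in mind that deleting a CRD also deletes every custom resource of that kind.

```shell
# Command used to talk to the cluster; set KUBECTL=echo to preview the calls.
KUBECTL=${KUBECTL:-kubectl}

# Delete CRD $1 if it exists, then create it again from manifest file $2.
recreate_crd() {
  crd_name=$1
  manifest=$2
  "$KUBECTL" delete crd "$crd_name" --ignore-not-found &&
    "$KUBECTL" create -f "$manifest"
}

# Intended use against a cluster (not executed here):
#   recreate_crd viewers.kubeflow.org kfapp/kustomize/pipelines-viewer/base/crd.yaml
```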