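This article implements several global search algorithms in MATLAB as worked examples: the Nelder-Mead simplex method, naive random search and simulated annealing, particle swarm optimization, and a genetic algorithm. The goal is to understand each algorithm better by implementing it.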

Case 1: Nelder-Mead simplex method

1. Problem statement:

2. MATLAB implementation:

2.1 Choosing the initial point

function nm_simplex()

%Nelder-Mead simplex method

%Based on the program by the Spring 2007 ECE580 student, Hengzhou Ding

disp ('We minimize a function using the Nelder-Mead method.')

disp ('There are two initial conditions.')

disp ('You can enter your own starting point.')

disp ('---------------------------------------------')

% disp('Select one of the starting points')

% disp ('[0.55;0.7] or [-0.9;-0.5]')

% x0=input('')

disp (' ')

clear

close all;

disp('Select one of the starting points, or enter your own point')

disp('[0.55;0.7] or [-0.9;-0.5]')

disp('(Copy one of the above points and paste it at the prompt)')

x0=input(' ');

hold on

axis square

2.2 Plotting the contours of the objective function

% Plot the contours of the objective function

[X1,X2]=meshgrid(-1:0.01:1);

Y=(X2-X1).^4+12.*X1.*X2-X1+X2-3;

[C,h] = contour(X1,X2,Y,20);

clabel(C,h);

2.3 Parameters and the initial simplex

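% Initialize all parameters

%{

lambda: step used to build the n+1 vertices of the initial simplex

rho: reflection coefficient (used to generate the reflected point pr)

chi: expansion coefficient (used to generate the expanded point pe)

gamma: contraction coefficient (used to generate the contracted point pc)

sigma: shrink coefficient (used to generate the shrunken vertices)

e1, e2: standard basis vectors of R^2

%}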

lambda=0.1;

rho=1;

chi=2;

gamma=1/2;

sigma=1/2;

e1=[1 0]';

e2=[0 1]';

%x0=[0.55 0.7]';

%x0=[-0.9 -0.5]';

% Plot the initial point and initialize the simplex

% x(:,3)=x0 sets the third column of matrix x to the vector x0

plot(x0(1),x0(2),'--*');

x(:,3)=x0;

x(:,1)=x0+lambda*e1;

x(:,2)=x0+lambda*e2;

2.4 Iteration

%{

Main iteration loop

%}

while 1

% Check the size of the simplex (sum of its edge lengths) for the stopping criterion

simpsize=norm(x(:,1)-x(:,2))+norm(x(:,2)-x(:,3))+norm(x(:,3)-x(:,1));

if(simpsize<1e-6)

break;

end

% Point produced by the previous iteration (used for plotting)

lastpt=x(:,3);

% Sort the simplex so that f(x(:,1)) <= f(x(:,2)) <= f(x(:,3)); x(:,3) is then the worst vertex

x=sort_points(x,3);

% Reflection: reflect the worst vertex through the centroid of the other two

centro=1/2*(x(:,1)+x(:,2));

xr=centro+rho*(centro-x(:,3));

% Accept condition (fs = f(x(:,1)) best, fnl = f(x(:,2)) second worst, fl = f(x(:,3)) worst)

% Case 1: fs <= f(pr) < fnl, accept the reflected point pr

if(obj_fun(xr)>=obj_fun(x(:,1)) && obj_fun(xr)<obj_fun(x(:,2)))

x(:,3)=xr;

% Expand condition

% Case 2: f(pr) < fs, try the expanded point pe

elseif(obj_fun(xr)<obj_fun(x(:,1)))

xe=centro+rho*chi*(centro-x(:,3));

if(obj_fun(xe)<obj_fun(xr))

x(:,3)=xe;

else

x(:,3)=xr;

end

% Outside contraction or shrink

% Case 3: fnl <= f(pr) < fl, outside contraction (shrink if it fails)

elseif(obj_fun(xr)>=obj_fun(x(:,2)) && obj_fun(xr)<obj_fun(x(:,3)))

xc=centro+gamma*rho*(centro-x(:,3));

if(obj_fun(xc)<obj_fun(x(:,3)))

x(:,3)=xc;

else

x=shrink(x,sigma);

end

% Inside contraction or shrink

% Case 4: f(pr) >= fl, inside contraction (shrink if it fails)

else

xcc=centro-gamma*(centro-x(:,3));

if(obj_fun(xcc)<obj_fun(x(:,3)))

x(:,3)=xcc;

else

x=shrink(x,sigma);

end

end

% Plot the new point and connect it to the previous one

plot([lastpt(1),x(1,3)],[lastpt(2),x(2,3)],'--*');

end

% Output the best vertex of the final simplex (the approximate minimizer)

x(:,1)

2.5 Helper functions: objective, sorting, and shrinking

% obj_fun: objective function

function y = obj_fun(x)

y=(x(1)-x(2))^4+12*x(1)*x(2)-x(1)+x(2)-3;

% sort_points: bubble-sort the N vertices so that f(x(:,1)) <= f(x(:,2)) <= ... <= f(x(:,N))

function y = sort_points(x,N)

for i=1:(N-1)

for j=1:(N-i)

if(obj_fun(x(:,j))>obj_fun(x(:,j+1)))

tmp=x(:,j);

x(:,j)=x(:,j+1);

x(:,j+1)=tmp;

end

end

end

y=x;

% shrink: move x(:,2) and x(:,3) toward the best vertex x(:,1) by the factor sigma

function y = shrink(x,sigma)

x(:,2)=x(:,1)+sigma*(x(:,2)-x(:,1));

x(:,3)=x(:,1)+sigma*(x(:,3)-x(:,1));

y=x;
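The loop above can be reproduced outside MATLAB for experimentation. The following minimal Python sketch is meant to mirror nm_simplex step by step. It uses the same objective, the same initial simplex built from lambda, the same coefficients rho, chi, gamma and sigma, the same four cases, and the same perimeter-based stopping rule. The names nelder_mead_2d and along and the max_iter safeguard are illustrative additions, not part of the original code.

```python
import math


def obj_fun(x):
    """Objective from obj_fun: (x2 - x1)^4 + 12*x1*x2 - x1 + x2 - 3."""
    x1, x2 = x
    return (x2 - x1) ** 4 + 12 * x1 * x2 - x1 + x2 - 3


def along(centro, worst, t):
    """Point centro + t*(centro - worst)."""
    return (centro[0] + t * (centro[0] - worst[0]),
            centro[1] + t * (centro[1] - worst[1]))


def nelder_mead_2d(f, x0, lam=0.1, rho=1.0, chi=2.0, gamma=0.5, sigma=0.5,
                   tol=1e-6, max_iter=10_000):
    """Illustrative port of nm_simplex; returns the vertex stored first."""
    # Initial simplex in the MATLAB column order: x0+lam*e1, x0+lam*e2, x0
    simplex = [(x0[0] + lam, x0[1]), (x0[0], x0[1] + lam), (x0[0], x0[1])]
    for _ in range(max_iter):  # safeguard; the MATLAB loop has no limit
        a, b, c = simplex
        if math.dist(a, b) + math.dist(b, c) + math.dist(c, a) < tol:
            break  # simplex perimeter is small enough
        simplex.sort(key=f)  # f(best) <= f(mid) <= f(worst)
        best, mid, worst = simplex
        f_best, f_mid, f_worst = f(best), f(mid), f(worst)
        centro = ((best[0] + mid[0]) / 2, (best[1] + mid[1]) / 2)
        xr = along(centro, worst, rho)  # reflection
        fr = f(xr)
        if f_best <= fr < f_mid:  # case 1: accept the reflected point
            simplex[2] = xr
        elif fr < f_best:  # case 2: try expansion
            xe = along(centro, worst, rho * chi)
            simplex[2] = xe if f(xe) < fr else xr
        else:
            # case 3 (fr < f_worst): outside contraction
            # case 4 (fr >= f_worst): inside contraction
            t = gamma * rho if fr < f_worst else -gamma
            xt = along(centro, worst, t)
            if f(xt) < f_worst:
                simplex[2] = xt
            else:
                # Shrink: p -> best + sigma*(p - best) for the other two vertices
                simplex = [best] + [along(best, p, -sigma) for p in (mid, worst)]
    return simplex[0]


for start in [(0.55, 0.7), (-0.9, -0.5)]:
    xmin = nelder_mead_2d(obj_fun, start)
    print(start, "->", xmin, obj_fun(xmin))
```

The sketch does not fix in advance which local minimizer each starting point reaches. Compare the printed points with the MATLAB plots, and try different values of lam.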

3. Results

With [0.55;0.7] as the initial point, the final result is:

With [-0.9;-0.5] as the initial point, the final result is:

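Starting from these two initial points, the method finds two different local minimizers of this function. Which one is reached also depends on lambda, because lambda determines the initial simplex. With different values of lambda, either minimizer can be reached from the same starting point. In the runs above the initial simplex is small because lambda = 0.1.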

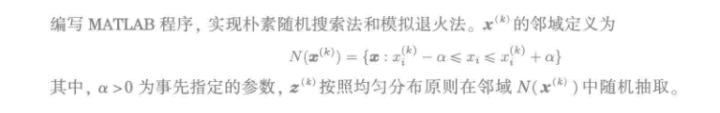
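The claim that this function has two local minimizers can be checked analytically. Write d = x2 - x1. The two gradient components are -4d^3 + 12x2 - 1 and 4d^3 + 12x1 + 1. Adding them gives 12(x1 + x2) = 0, so every stationary point has the form (t, -t) with 32t^3 - 12t - 1 = 0. The Hessian has diagonal entries 12d^2 and determinant 144(2d^2 - 1), so a stationary point is a local minimizer exactly when d^2 > 1/2. The small Python sketch below finds the three roots by bisection and classifies them. The helper names are illustrative.

```python
def f(x1, x2):
    """Objective from obj_fun."""
    return (x2 - x1) ** 4 + 12 * x1 * x2 - x1 + x2 - 3


def cubic(t):
    """Stationarity condition along x2 = -x1 = -t: 32 t^3 - 12 t - 1 = 0."""
    return 32 * t ** 3 - 12 * t - 1


def bisect(g, lo, hi, tol=1e-12):
    """Root of g in [lo, hi]; assumes g(lo) and g(hi) have opposite signs."""
    g_lo = g(lo)
    while hi - lo > tol:
        mid = (lo + hi) / 2
        g_mid = g(mid)
        if (g_mid > 0) == (g_lo > 0):
            lo, g_lo = mid, g_mid  # sign change lies in the upper half
        else:
            hi = mid               # sign change lies in the lower half
    return (lo + hi) / 2


def classify(t):
    """Second-derivative test at (t, -t) using det(H) = 144 (2 d^2 - 1)."""
    d = -2 * t  # d = x2 - x1 at the point (t, -t)
    # det(H) > 0 forces the diagonal entry 12 d^2 > 0, hence a local minimum
    return "local min" if 144 * (2 * d ** 2 - 1) > 0 else "saddle"


# cubic changes sign on each bracket: cubic(-1) < 0 < cubic(-0.5), cubic(0) < 0 < cubic(1)
for lo, hi in [(-1.0, -0.5), (-0.5, 0.0), (0.0, 1.0)]:
    t = bisect(cubic, lo, hi)
    print(f"x = ({t:.4f}, {-t:.4f}), f = {f(t, -t):.4f}, {classify(t)}")
```

By hand calculation, the two local minimizers are approximately (0.650, -0.650), with f ≈ -6.514, and (-0.565, 0.565), with f ≈ -4.070. The remaining stationary point, near (-0.085, 0.085), is a saddle.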

Case 2: Naive random search and simulated annealing

1. Problem description:

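The objective is MATLAB's peaks function. f_p.m below negates it, so minimizing f_p means searching for the highest peak of peaks.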

2. MATLAB implementation:

2.1 Objective function (f_p.m)

% Objective function: the negated peaks function

function y=f_p(x);

y=3*(1-x(1)).^2.*exp(-(x(1).^2)-(x(2)+1).^2) -10.*(x(1)/5-x(1).^3-x(2).^5).*exp(-x(1).^2-x(2).^2) - exp(-(x(1)+1).^2-x(2).^2)/3;

y=-y;
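A quick way to sanity-check the formula is to evaluate it where the terms simplify. At the origin the three peaks terms are 3/e, 0 and 1/(3e), so f_p(0, 0) = -8/(3e) ≈ -0.981. The Python port below is illustrative, not the repository's code. It checks that value and also prints f_p at the starting point [0; -2] used later.

```python
import math


def f_p(x):
    """Negated MATLAB peaks function, ported from f_p.m."""
    x1, x2 = x
    y = (3 * (1 - x1) ** 2 * math.exp(-(x1 ** 2) - (x2 + 1) ** 2)
         - 10 * (x1 / 5 - x1 ** 3 - x2 ** 5) * math.exp(-x1 ** 2 - x2 ** 2)
         - math.exp(-(x1 + 1) ** 2 - x2 ** 2) / 3)
    return -y


# At the origin: 3/e - 0 - 1/(3e) = 8/(3e), negated by f_p
print(f_p((0.0, 0.0)), -8 / (3 * math.e))
# Value at the starting point [0; -2] used by rs_demo and sa_demo
print(f_p((0.0, -2.0)))
```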

2.2 Plotting helper (pltpts.m)

% Plotting helper: draw a red line from xcurr to xnew and mark xnew

function out=pltpts(xnew,xcurr)

plot([xcurr(1),xnew(1)],[xcurr(2),xnew(2)],'r-',xnew(1),xnew(2),'o');

drawnow; % Draws current graph now

%pause(1)

out = [];

2.3 Contour plot setup (pks_cnt.m)

% Evaluate the negated peaks function on a grid and plot its contours

echo off

X = [-3:0.2:3]';

Y = [-3:0.2:3]';

[x,y]=meshgrid(X',Y') ;

func = 3*(1-x).^2.*exp(-x.^2-(y+1).^2) -10.*(x/5-x.^3-y.^5).*exp(-x.^2-y.^2) -exp(-(x+1).^2-y.^2)/3;

func = -func;

clf

levels = [-5:0.9:10];

contour(X,Y,func,levels,'k--')

xlabel('x_1')

ylabel('x_2')

title('Minimization of Peaks function')

drawnow;

hold on

plot(-0.0303,1.5455,'o')

text(-0.0303,1.5455,'Solution')

2.4 Naive random search (rs_demo.m)

Only the key part of the code is shown below; the full code is in the GitHub repository linked at the end of the article.

% Main iteration loop

for k = 1:max_iter,

% Start from the best point found so far and evaluate the objective there

xcurr=xbestcurr;

f_curr=feval(funcname,xcurr);

alpha = alpha0;

% Generate a candidate point uniformly in a box of half-width alpha around xcurr

xnew = xcurr + alpha*(2*rand(length(xcurr),1)-1);

% Clip the candidate to the bounds [xlower, xupper]

for i=1:length(xnew),

xnew(i) = max(xnew(i),xlower(i));

xnew(i) = min(xnew(i),xupper(i));

end %for

% Update the best point and best function value if the candidate is better

f_new=feval(funcname,xnew);

if f_new < f_best,

xbestold = xbestcurr;

xbestcurr = xnew;

f_best = f_new;

end

% Print the iteration details if requested

if print,

disp('Iteration number k =')

disp(k); %print iteration index k

disp('alpha =');

disp(alpha); %print alpha

disp('New point =');

disp(xnew'); %print new point

disp('Function value =');

disp(f_new); %print func value at new point

end %if

% Stopping criterion

if norm(xnew-xbestold) <= epsilon_x*norm(xbestold)

disp('Terminating: Norm of difference between iterates less than');

disp(epsilon_x);

break;

end %if

% Plot the step from the previous best point to the current best point

pltpts(xbestcurr,xbestold);

if k == max_iter

disp('Terminating with maximum number of iterations');

end %if

end %for

To run this file, first set alpha through options(18): options(18)=0.5 sets alpha = 0.5. Then run rs_demo('f_p',[0;-2],options).

With naive random search, alpha = 1.5 reaches the global minimizer more easily than alpha = 0.5.
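To experiment with how the step size alpha drives naive random search, here is a minimal Python sketch of the loop above. Several assumptions are made. The bounds are [-3, 3] in both coordinates. The epsilon-based stopping rule is omitted, so the loop runs for max_iter iterations. The names random_search and f_p are illustrative ports, not the repository's code.

```python
import math
import random


def f_p(x):
    """Negated peaks function (port of f_p.m)."""
    x1, x2 = x
    y = (3 * (1 - x1) ** 2 * math.exp(-(x1 ** 2) - (x2 + 1) ** 2)
         - 10 * (x1 / 5 - x1 ** 3 - x2 ** 5) * math.exp(-x1 ** 2 - x2 ** 2)
         - math.exp(-(x1 + 1) ** 2 - x2 ** 2) / 3)
    return -y


def random_search(f, x0, alpha, max_iter=500, lower=-3.0, upper=3.0, seed=None):
    """Naive random search: always sample around the best point so far."""
    rng = random.Random(seed)
    x_best = list(x0)
    f_best = f(x_best)
    history = [f_best]
    for _ in range(max_iter):
        # Uniform candidate in a box of half-width alpha, clipped to the bounds
        x_new = [min(max(xi + alpha * (2 * rng.random() - 1), lower), upper)
                 for xi in x_best]
        f_new = f(x_new)
        if f_new < f_best:  # keep the candidate only if it improves
            x_best, f_best = x_new, f_new
        history.append(f_best)
    return x_best, f_best, history


for alpha in (0.5, 1.5):
    x, fx, _ = random_search(f_p, [0.0, -2.0], alpha, seed=1)
    print(f"alpha={alpha}: x={x}, f={fx:.4f}")
```

The outcome depends on the random seed. Running several seeds for each alpha is the Python analogue of re-running rs_demo a few times.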

2.5 Simulated annealing (sa_demo.m)

Only the key part of the code is shown below; the full code is in the GitHub repository linked at the end of the article.

% Main iteration loop

for k = 1:max_iter,

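% Evaluate the current point

f_curr=feval(funcname,xcurr);

% Generate a candidate point in a box of half-width alpha and clip it to the bounds

xnew = xcurr + alpha*(2*rand(length(xcurr),1)-1);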

for i=1:length(xnew),

xnew(i) = max(xnew(i),xlower(i));

xnew(i) = min(xnew(i),xupper(i));

end %for

f_new=feval(funcname,xnew);

if f_new < f_curr,

xcurr = xnew;

f_curr = f_new;

else

% Otherwise (the candidate is worse), accept it with probability exp(-(f_new-f_curr)/Temp)

cointoss = rand(1);

Temp = gamma/log(k+k0); % logarithmic cooling schedule

Prob = exp(-(f_new-f_curr)/Temp);

if cointoss < Prob,

xcurr = xnew;

f_curr = f_new;

end

end

if f_new < f_best,

xbestold = xbestcurr;

xbestcurr = xnew;

f_best = f_new;

end

if print,

disp('Iteration number k =')

disp(k); %print iteration index k

disp('alpha =');

disp(alpha); %print alpha

disp('New point =');

disp(xnew'); %print new point

disp('Function value =');

disp(f_new); %print func value at new point

end %if

if norm(xnew-xbestold) <= epsilon_x*norm(xbestold)

disp('Terminating: Norm of difference between iterates less than');

disp(epsilon_x);

break;

end %if

pltpts(xbestcurr,xbestold);

if k == max_iter

disp('Terminating with maximum number of iterations');

end %if

end %for

To run this file, first set alpha through options(18): options(18)=0.5 sets alpha = 0.5. Then run sa_demo('f_p',[0;-2],options).

With simulated annealing, alpha = 0.5 is still enough to reach the global minimizer.
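The following Python sketch mirrors the simulated annealing loop above. A candidate is generated exactly as in random search. A worse candidate can still be accepted, with probability exp(-(f_new - f_curr)/Temp), where Temp = gamma/log(k + k0). The values gamma = 1 and k0 = 1, the bounds [-3, 3] and max_iter = 500 are assumptions made for illustration; the excerpt does not show the values set in the full sa_demo.m. The name simulated_annealing is also hypothetical.

```python
import math
import random


def f_p(x):
    """Negated peaks function (port of f_p.m)."""
    x1, x2 = x
    y = (3 * (1 - x1) ** 2 * math.exp(-(x1 ** 2) - (x2 + 1) ** 2)
         - 10 * (x1 / 5 - x1 ** 3 - x2 ** 5) * math.exp(-x1 ** 2 - x2 ** 2)
         - math.exp(-(x1 + 1) ** 2 - x2 ** 2) / 3)
    return -y


def simulated_annealing(f, x0, alpha, gamma=1.0, k0=1, max_iter=500,
                        lower=-3.0, upper=3.0, seed=None):
    """SA with Temp = gamma / log(k + k0); gamma and k0 are assumed values."""
    rng = random.Random(seed)
    x_curr = list(x0)
    f_curr = f(x_curr)
    x_best, f_best = list(x_curr), f_curr
    n_worse_accepted = 0
    for k in range(1, max_iter + 1):
        # Candidate in a box of half-width alpha around x_curr, clipped
        x_new = [min(max(xi + alpha * (2 * rng.random() - 1), lower), upper)
                 for xi in x_curr]
        f_new = f(x_new)
        if f_new < f_curr:
            x_curr, f_curr = x_new, f_new
        else:
            temp = gamma / math.log(k + k0)  # needs k + k0 > 1
            if rng.random() < math.exp(-(f_new - f_curr) / temp):
                x_curr, f_curr = x_new, f_new  # accept a non-improving move
                n_worse_accepted += 1
        if f_new < f_best:  # remember the best point ever sampled
            x_best, f_best = x_new, f_new
    return x_best, f_best, n_worse_accepted


x, fx, n = simulated_annealing(f_p, [0.0, -2.0], alpha=0.5, seed=1)
print(x, fx, n)
```

Unlike random search, the current point x_curr can move uphill. The best point is therefore tracked separately, as f_best is in sa_demo.m.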

3. Results

Naive random search

With alpha = 0.5:

With alpha = 1.5:

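With alpha = 1.5 the search approaches the true minimizer more easily. It still often stops at a local minimizer, but a few repeated runs are usually enough to reach the global one.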

Simulated annealing

With alpha = 0.5:

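Simulated annealing escapes from local minimizers more easily and reaches the global minimizer. It can do this because it sometimes accepts a worse point, with a probability that decreases as the temperature drops.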
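The acceptance rule explains this behavior. With the cooling schedule Temp = gamma/log(k + k0) used in sa_demo.m, the probability of accepting a move that is worse by delta simplifies as follows: exp(-delta·log(k + k0)/gamma) = (k + k0)^(-delta/gamma). It therefore decays only polynomially in k. The short Python sketch below tabulates this probability. It assumes gamma = 1 and k0 = 1 purely for illustration; the excerpt does not show the values sa_demo.m actually uses.

```python
import math


def temperature(k, gamma=1.0, k0=1):
    """Logarithmic cooling schedule Temp = gamma / log(k + k0)."""
    return gamma / math.log(k + k0)


def accept_prob(delta, temp):
    """Probability of moving to a point whose value is worse by delta >= 0."""
    return math.exp(-delta / temp)


for k in (1, 10, 100, 1000):
    print(k, round(accept_prob(1.0, temperature(k)), 4))
```

With these assumed values and delta = 1, the probability is exactly 1/(k + 1). Uphill moves remain possible late in the run, which is what lets the search leave a local basin.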

Case 3: Particle swarm optimization

1. Problem description

Implement particle swarm optimization (PSO) and use it to find the maximum of MATLAB's peaks function.

2. MATLAB implementation

2.1 Parameter setup and surface plot

% A particle swarm optimizer

% to find the minimum/maximum of MATLAB's peaks function

% D---# of inputs to the function (dimension of problem)

clear

% Parameters

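ps=10; % number of particles

D=2; % dimension

ps_lb=-3; % lower bound of particle positions

ps_ub=3; % upper bound of particle positions

vel_lb=-1; % lower bound of velocities

vel_ub=1; % upper bound of velocities

iteration_n = 50; % number of iterations

range = [-3, 3; -3, 3]; % range of the inputs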

% Plot the peaks function as a mesh surface

[x, y, z] = peaks;

%pcolor(x,y,z); shading interp; hold on;

%contour(x, y, z, 20, 'r');

mesh(x,y,z)

% hold off;

%colormap(gray);

set(gca,'Fontsize',14)

axis([-3 3 -3 3 -9 9])

%axis square;

xlabel('x_1','Fontsize',14);

ylabel('x_2','Fontsize',14);

zlabel('f(x_1,x_2)','Fontsize',14);

hold on

upper = zeros(iteration_n, 1);

average = zeros(iteration_n, 1);

lower = zeros(iteration_n, 1);

2.2 Initializing positions and velocities

% Initialize positions and velocities

ps_pos=ps_lb + (ps_ub-ps_lb).*rand(ps,D);

% need to bound velocities between -mv,mv

ps_vel=vel_lb + (vel_ub-vel_lb).*rand(ps,D);

% initial pbest positions

p_best = ps_pos;

% returns column of cost values (1 for each particle)

% f1='3*(1-ps_pos(i,1))^2*exp(-ps_pos(i,1)^2-(ps_pos(i,2)+1)^2)';

% f2='-10*(ps_pos(i,1)/5-ps_pos(i,1)^3-ps_pos(i,2)^5)*exp(-ps_pos(i,1)^2-ps_pos(i,2)^2)';

% f3='-(1/3)*exp(-(ps_pos(i,1)+1)^2-ps_pos(i,2)^2)';

% f1+f2+f3 is the complete peaks function

% Initialize each particle's best fitness, then the global best

p_best_fit=zeros(ps,1);

for i=1:ps

g1(i)=3*(1-ps_pos(i,1))^2*exp(-ps_pos(i,1)^2-(ps_pos(i,2)+1)^2);

g2(i)=-10*(ps_pos(i,1)/5-ps_pos(i,1)^3-ps_pos(i,2)^5)*exp(-ps_pos(i,1)^2-ps_pos(i,2)^2);

g3(i)=-(1/3)*exp(-(ps_pos(i,1)+1)^2-ps_pos(i,2)^2);

p_best_fit(i)=g1(i)+g2(i)+g3(i);

end

p_best_fit;

hand_p3=plot3(ps_pos(:,1),ps_pos(:,2),p_best_fit','*k','markersize',15);

% initial g_best

[g_best_val,g_best_idx] = max(p_best_fit);

%[g_best_val,g_best_idx] = min(p_best_fit); this is to minimize

g_best=ps_pos(g_best_idx,:);

2.3 Iteration

% Main iteration loop

for k=1:iteration_n

% Velocity update: inertia weight 0.729, acceleration coefficients 1.494

for count=1:ps

ps_vel(count,:) = 0.729*ps_vel(count,:)... % prev vel

+1.494*rand*(p_best(count,:)-ps_pos(count,:))... % independent

+1.494*rand*(g_best-ps_pos(count,:)); % social

end

ps_vel;

% update new position

ps_pos = ps_pos + ps_vel;

%update p_best

for i=1:ps

g1(i)=3*(1-ps_pos(i,1))^2*exp(-ps_pos(i,1)^2-(ps_pos(i,2)+1)^2);

g2(i)=-10*(ps_pos(i,1)/5-ps_pos(i,1)^3-ps_pos(i,2)^5)*exp(-ps_pos(i,1)^2-ps_pos(i,2)^2);

g3(i)=-(1/3)*exp(-(ps_pos(i,1)+1)^2-ps_pos(i,2)^2);

ps_current_fit(i)=g1(i)+g2(i)+g3(i);

if ps_current_fit(i)>p_best_fit(i)

p_best_fit(i)=ps_current_fit(i);

p_best(i,:)=ps_pos(i,:);

end

end

p_best_fit;

%update g_best

[g_best_val,g_best_idx] = max(p_best_fit);

g_best=p_best(g_best_idx,:); % position of the best personal best, matching g_best_val

% Fill objective function vectors

upper(k) = max(p_best_fit);

average(k) = mean(p_best_fit);

lower(k) = min(p_best_fit);

set(hand_p3,'xdata',ps_pos(:,1),'ydata',ps_pos(:,2),'zdata',ps_current_fit');

drawnow

pause % press any key to run the next iteration

end

g_best

g_best_val
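A compact Python sketch of the same PSO loop is given below for experimentation. It keeps the constants used above: inertia 0.729, acceleration coefficients 1.494, and one scalar random number per term. Like the MATLAB code, it maximizes peaks and does not clamp positions or velocities. g_best is taken from p_best, the position where the best personal-best value was found, so g_best and g_best_val always refer to the same point. The function name pso and its defaults are illustrative.

```python
import math
import random


def peaks(x1, x2):
    """MATLAB's peaks function (maximized in this example)."""
    return (3 * (1 - x1) ** 2 * math.exp(-x1 ** 2 - (x2 + 1) ** 2)
            - 10 * (x1 / 5 - x1 ** 3 - x2 ** 5) * math.exp(-x1 ** 2 - x2 ** 2)
            - math.exp(-(x1 + 1) ** 2 - x2 ** 2) / 3)


def pso(n_particles=10, n_iter=50, lb=-3.0, ub=3.0, v_lb=-1.0, v_ub=1.0,
        w=0.729, c=1.494, seed=None):
    """Illustrative PSO port; returns (g_best, g_best_val, history)."""
    rng = random.Random(seed)
    pos = [[rng.uniform(lb, ub), rng.uniform(lb, ub)] for _ in range(n_particles)]
    vel = [[rng.uniform(v_lb, v_ub), rng.uniform(v_lb, v_ub)]
           for _ in range(n_particles)]
    p_best = [p[:] for p in pos]
    p_best_fit = [peaks(*p) for p in pos]

    def global_best():
        # Position comes from p_best so it matches the best p_best_fit value
        i = max(range(n_particles), key=lambda j: p_best_fit[j])
        return p_best[i][:], p_best_fit[i]

    g_best, g_best_val = global_best()
    history = [g_best_val]
    for _ in range(n_iter):
        for i in range(n_particles):
            r1, r2 = rng.random(), rng.random()  # one scalar per term, like rand
            vel[i] = [w * vel[i][d]
                      + c * r1 * (p_best[i][d] - pos[i][d])  # independent
                      + c * r2 * (g_best[d] - pos[i][d])     # social
                      for d in range(2)]
        for i in range(n_particles):
            pos[i] = [pos[i][0] + vel[i][0], pos[i][1] + vel[i][1]]
            fit = peaks(*pos[i])
            if fit > p_best_fit[i]:  # maximization
                p_best_fit[i], p_best[i] = fit, pos[i][:]
        g_best, g_best_val = global_best()
        history.append(g_best_val)
    return g_best, g_best_val, history


g_best, g_best_val, _ = pso(seed=0)
print(g_best, g_best_val)
```

The run is stochastic. When the swarm converges, g_best_val should approach the global maximum of peaks, which is a little above 8. Try a few seeds.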

2.4 Plotting the results

% Plot the best, average, and worst personal-best fitness at each iteration

figure;

x = 1:iteration_n;

plot(x, upper, 'o', x, average, 'x', x, lower, '*');

hold on;

plot(x, [upper average lower]);

hold off;

legend('Best', 'Average', 'Poorest');

xlabel('Iterations'); ylabel('Objective function value');

3. Results

After enough iterations, all particles climb toward the highest peak, and eventually one of them reaches the global maximum.

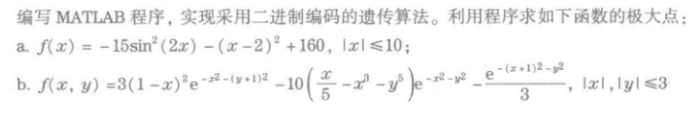

Case 4: Genetic algorithm

1. Problem description

2. MATLAB implementation

2.1 Binary decoding (bin2dec.m)

function dec = bin2dec(bin,range);

%function dec = bin2dec(bin,range);

%Function to convert from binary (bin) to decimal (dec) in a given range

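index = polyval(bin,2); % integer value of the bit vector, most significant bit first

dec = index*((range(2)-range(1))/(2^length(bin)-1)) + range(1); % map [0, 2^L-1] linearly onto [range(1), range(2)]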
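bin2dec works in two steps. First it reads the bit vector as an unsigned integer: polyval with 2 evaluates the bits as base-2 digits, most significant bit first. Then it maps the integer range [0, 2^L - 1] linearly onto [range(1), range(2)]. For example, with 8 bits and range [-10, 10], the chromosome 10000000 decodes to 128·20/255 - 10 = 10/255 ≈ 0.0392. A Python equivalent, with the illustrative name decode, makes the arithmetic explicit.

```python
def decode(bits, lo, hi):
    """Map a bit list (MSB first) to a real number in [lo, hi], like bin2dec.m."""
    index = 0
    for b in bits:  # Horner's rule, which is what polyval(bin, 2) computes
        index = 2 * index + b
    return index * (hi - lo) / (2 ** len(bits) - 1) + lo


print(decode([0] * 8, -10, 10))  # -10.0
print(decode([1] * 8, -10, 10))  # 10.0
print(decode([1, 0, 0, 0, 0, 0, 0, 0], -10, 10))
```

Boolean bits also work, which matches the logical population that the xor mutation step produces in ga_be.m.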

2.2 Main GA loop (ga_be.m)

Only the key part of the code is shown below; see the GitHub repository linked at the end of the article for the full code.

for k = 1:max_iter,

%Selection

fitness = fitness - min(fitness); % to keep the fitness positive

if sum(fitness) == 0,

disp('Population has identical chromosomes -- STOP');

disp('Number of iterations:');

disp(k);

for i = k:max_iter,

myupper(i)=myupper(i-1);

average(i)=average(i-1);

mylower(i)=mylower(i-1);

end

break;

else

fitness = fitness/sum(fitness);

end

if selection == 0,

%roulette-wheel

cum_fitness = cumsum(fitness);

for i = 1:N,

tmp = find(cum_fitness-rand>0);

m(i) = tmp(1);

end

else

%tournament

for i = 1:N,

fighter1=ceil(rand*N);

fighter2=ceil(rand*N);

if fitness(fighter1)>fitness(fighter2),

m(i) = fighter1;

else

m(i) = fighter2;

end

end

end

M = zeros(N,L);

for i = 1:N,

M(i,:) = P(m(i),:);

end

%Crossover

Mnew = M;

for i = 1:N/2

ind1 = ceil(rand*N);

ind2 = ceil(rand*N);

parent1 = M(ind1,:);

parent2 = M(ind2,:);

if rand < p_c

crossover_pt = ceil(rand*(L-1));

offspring1 = [parent1(1:crossover_pt) parent2(crossover_pt+1:L)];

offspring2 = [parent2(1:crossover_pt) parent1(crossover_pt+1:L)];

Mnew(ind1,:) = offspring1;

Mnew(ind2,:) = offspring2;

end

end

%Mutation

mutation_points = rand(N,L) < p_m;

P = xor(Mnew,mutation_points);

%Evaluation

for i = 1:N,

fitness(i) = feval(fit_func,P(i,:));

end

[bestvalue,best] = max(fitness);

if bestvalue > bestvaluesofar,

bestsofar = P(best,:);

bestvaluesofar = bestvalue;

end

myupper(k) = bestvalue;

average(k) = mean(fitness);

mylower(k) = min(fitness);

end %for
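The loop above combines three operators: selection (roulette wheel or tournament), single-point crossover, and bit-flip mutation. The Python sketch below isolates each as a small function. It is an illustrative port. The function names are hypothetical, indices are 0-based instead of MATLAB's 1-based, and the random generator is passed in so that runs are reproducible.

```python
import random
from itertools import accumulate


def roulette_select(fitness, n, rng):
    """Roulette wheel: index i is drawn with probability proportional to
    f_i - min(f). Assumes the fitness values are not all equal (ga_be
    stops in that case)."""
    f_min = min(fitness)
    shifted = [f - f_min for f in fitness]
    total = sum(shifted)
    cum = list(accumulate(s / total for s in shifted))
    picks = []
    for _ in range(n):
        r = rng.random()
        # First index whose cumulative share exceeds r; the last index is a
        # guard against floating-point round-off in the final sum
        picks.append(next((i for i, c in enumerate(cum) if c > r), len(cum) - 1))
    return picks


def tournament_select(fitness, n, rng):
    """Binary tournament: draw two indices at random and keep the fitter one."""
    picks = []
    for _ in range(n):
        a = rng.randrange(len(fitness))
        b = rng.randrange(len(fitness))
        picks.append(a if fitness[a] > fitness[b] else b)
    return picks


def single_point_crossover(p1, p2, rng):
    """Swap the tails after a cut point in 1..L-1, like ceil(rand*(L-1))."""
    cut = rng.randint(1, len(p1) - 1)
    return p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]


def mutate(bits, p_m, rng):
    """Flip each bit independently with probability p_m (the xor step)."""
    return [b ^ (rng.random() < p_m) for b in bits]


rng = random.Random(0)
print(roulette_select([1.0, 2.0, 5.0], 6, rng))
print(tournament_select([1.0, 2.0, 5.0], 6, rng))
print(single_point_crossover([0] * 8, [1] * 8, rng))
print(mutate([0, 1, 1, 0], 0.25, rng))
```

As in ga_be.m, selection samples indices into the current population. Crossover and mutation then act on copies of the selected chromosomes.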

3. Results

First test function

clear;

options(1)=1;

[x,y]=ga_be(8,10,'fit_func1',options);

f='f_manymax';

range=[-10,10];

disp('GA Solution:');

disp(bin2dec(x,range));

disp('Objective function value:');

disp(y);

Output:

The final result is x = 3.1765, with objective function value 1.5854e+02.

Second test function

clear;

options(1)=1;

[x,y]=ga_be(16,20,'fit_func2',options);

f='f_peaks';

xrange=[-3,3];

yrange=[-3,3];

L=length(x);

x1=bin2dec(x(1:L/2),xrange);

x2=bin2dec(x(L/2+1:L),yrange);

disp('GA Solution:');

disp([x1,x2]);

disp('Objective function value:');

disp(y);

Output:

Full code on GitHub: https://github.com/LIANGQINGYUAN/OptimizationAlgorithmNote