驱动类要改的东西不是很大

![]()

![]()

![]()

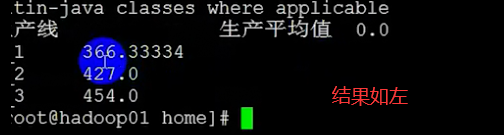

到此就算是完成了。

package qf.com.mr;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.FloatWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

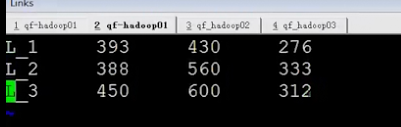

/*

*类说明:将汽车生产流水线的三个时间段的记录算一个平均值

*

*l z w y

*L_1 393 430 276

*L_2 388 560 333

*L_3 450 600 312

*

*生产线 生产平均值

*L_1 *

*L_2 *

*/

public class Avg {

public static class MyMapper extends Mapper<LongWritable, Text, Text, FloatWritable>{

public static Text k = new Text();

public static FloatWritable v = new FloatWritable();

//setup 只在map阶段执行之前执行一次(用于初始化等一次性操作)

@Override

protected void setup(Mapper<LongWritable, Text, Text, FloatWritable>.Context context)

throws IOException, InterruptedException {

}

@Override

protected void map(LongWritable key, Text value, Mapper<LongWritable, Text, Text, FloatWritable>.Context context)

throws IOException, InterruptedException {

//1.从输入数据中获取每一个文件中的每一行的值

String line = value.toString();

//2.对每一行的数据进行切分(有的不用)

String [] words = line.split("\t");

//3.按照业务循环处理

String lineName = words[0];

int z = Integer.parseInt(words[1]);

int w = Integer.parseInt(words[2]);

int y = Integer.parseInt(words[3]);

k.set(lineName);

float avg = (float)((z+w+y)*1.0/(words.length-1));

v.set(avg);

context.write(k, v);

}

//在整个map执行之后 一般作用于最后的结果

@Override

protected void cleanup(Mapper<LongWritable, Text, Text, FloatWritable>.Context context)

throws IOException, InterruptedException {

}

}

public static class MyReducer extends Reducer<Text, FloatWritable, Text, Text>{

//在reduce阶段执行之前 执行 仅一次

@Override

protected void setup(Reducer<Text, FloatWritable, Text, Text>.Context context)

throws IOException, InterruptedException {

context.write(new Text("生产线 生产平均值"), new Text(""));

}

@Override

protected void reduce(Text key, Iterable<FloatWritable> values, Context context)

throws IOException, InterruptedException {

context.write(key, new Text(values.iterator().next().get()+""));

}

//在reduce执行之后执行 仅执行一次

@Override

protected void cleanup(Reducer<Text, FloatWritable, Text, Text>.Context context)

throws IOException, InterruptedException {

}

}

//驱动

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

//1.获取配置对象信息

Configuration conf = new Configuration();

//2.对conf进行设置(没有就不用)

//3.获取job对象 (注意导入的包)

Job job = Job.getInstance(conf, "myAvg");

//4.设置job的运行主类

job.setJarByClass(Avg.class);

//System.out.println("jiazai finished");

//5.对map阶段进行设置

job.setMapperClass(MyMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(FloatWritable.class);

FileInputFormat.addInputPath(job, new Path(args[0]));//具体路径从控制台输入

//System.out.println("map finished");

//6.对reduce阶段进行设置

job.setReducerClass(MyReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

FileOutputFormat.setOutputPath(job, new Path(args[1]));

//System.out.println("reduce finished");

//7.提交job并打印信息

int isok = job.waitForCompletion(true) ? 0 : 1;

//退出job

System.exit(isok);

System.out.println("all finished");

}

}