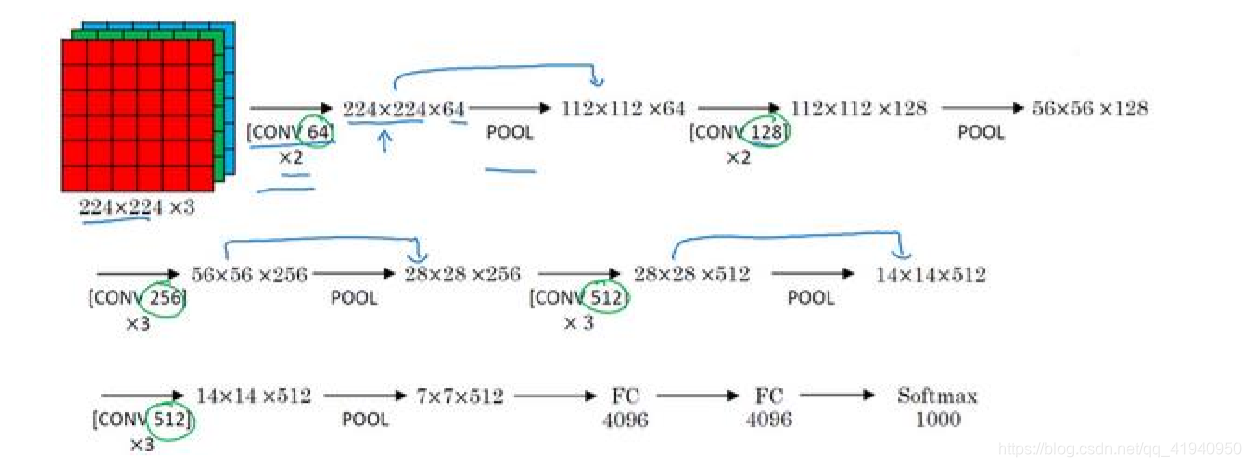

Reproducing the VGG-16 network in PyTorch:

Following Andrew Ng's explanation in his deep learning course, the basic flow of the VGG-16 network is:

The code is as follows:

    import math

    import torch
    import torch.nn as nn


    class VGG16(nn.Module):
        def __init__(self, num_classes):
            super().__init__()
            # Convolutional feature extractor: five blocks of 3x3 convolutions,
            # each block followed by a 2x2 max pool that halves height and width.
            self.feature = nn.Sequential(
                # Block 1: 3 -> 64 channels
                nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2, 2),
                # Block 2: 64 -> 128 channels
                nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2, 2),
                # Block 3: 128 -> 256 channels
                nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2, 2),
                # Block 4: 256 -> 512 channels
                nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2, 2),
                # Block 5: 512 -> 512 channels
                nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2, 2),
            )
            # Fully connected classifier. The 512 * 7 * 7 input size assumes
            # 224x224 input images (224 halved five times gives 7).
            self.classifier = nn.Sequential(
                nn.Linear(512 * 7 * 7, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(4096, 4096),
                nn.ReLU(inplace=True),
                nn.Dropout(),
                nn.Linear(4096, num_classes),
            )
            self._initialize_weights()

        def forward(self, x):
            x = self.feature(x)
            # Flatten (N, 512, 7, 7) to (N, 25088) before the linear layers.
            x = x.view(x.size(0), -1)
            x = self.classifier(x)
            return x

        def _initialize_weights(self):
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    # Normal init with std = sqrt(2 / (k_h * k_w * out_channels)).
                    n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                    nn.init.normal_(m.weight, 0, math.sqrt(2. / n))
                    if m.bias is not None:
                        nn.init.zeros_(m.bias)
                elif isinstance(m, nn.BatchNorm2d):
                    # Not triggered by this model (it has no BatchNorm layers).
                    nn.init.ones_(m.weight)
                    nn.init.zeros_(m.bias)
                elif isinstance(m, nn.Linear):
                    nn.init.normal_(m.weight, 0, 0.01)
                    nn.init.zeros_(m.bias)


    net = VGG16(1000)
    print(net)

Output:

    VGG16(
      (feature): Sequential(
        (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (1): ReLU(inplace)
        (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (3): ReLU(inplace)
        (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (6): ReLU(inplace)
        (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (8): ReLU(inplace)
        (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (11): ReLU(inplace)
        (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (13): ReLU(inplace)
        (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (15): ReLU(inplace)
        (16): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (17): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (18): ReLU(inplace)
        (19): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (20): ReLU(inplace)
        (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (22): ReLU(inplace)
        (23): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
        (24): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (25): ReLU(inplace)
        (26): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (27): ReLU(inplace)
        (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (29): ReLU(inplace)
        (30): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
      )
      (classifier): Sequential(
        (0): Linear(in_features=25088, out_features=4096, bias=True)
        (1): ReLU(inplace)
        (2): Dropout(p=0.5)
        (3): Linear(in_features=4096, out_features=4096, bias=True)
        (4): ReLU(inplace)
        (5): Dropout(p=0.5)
        (6): Linear(in_features=4096, out_features=1000, bias=True)
      )
    )
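
The `in_features=25088` of the first linear layer is 512 * 7 * 7, which only holds if the input images are 224x224. As a rough check, the sketch below traces the spatial size through the five blocks using the standard convolution and pooling output-size formulas. It is plain Python and does not touch PyTorch; the helper names (`conv_out`, `pool_out`, `feature_map_shape`) are made up for this illustration.

```python
# Trace the feature-map shape through the VGG-16 convolutional blocks.
# Helper names are hypothetical; the layer settings mirror the model above.

def conv_out(size, kernel=3, stride=1, padding=1):
    """Output height/width of a convolution (3x3, stride 1, padding 1 keeps the size)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output height/width of a 2x2, stride-2 max pool (halves the size)."""
    return (size - kernel) // stride + 1


# Number of conv layers and output channels in each of the five blocks.
CONVS_PER_BLOCK = [2, 2, 3, 3, 3]
BLOCK_CHANNELS = [64, 128, 256, 512, 512]


def feature_map_shape(input_size=224):
    """Return (channels, height, width) after the last max pool."""
    size = input_size
    channels = 3
    for n_convs, out_channels in zip(CONVS_PER_BLOCK, BLOCK_CHANNELS):
        for _ in range(n_convs):
            size = conv_out(size)
        channels = out_channels
        size = pool_out(size)
    return channels, size, size


c, h, w = feature_map_shape(224)
flattened = c * h * w
print((c, h, w), flattened)  # (512, 7, 7) 25088
```

With a different input size the flattened length changes (a 32x32 input ends at 512 x 1 x 1, for example), so the `nn.Linear(512 * 7 * 7, 4096)` layer would have to be adjusted accordingly.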

One question remains:

Where does the 4096 in the fully connected part come from, and why this particular value?
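
One starting point for this question: as far as the code is concerned, 4096 is a free choice. Only the 25088 inputs of the first linear layer (fixed by the feature map) and the `num_classes` outputs of the last one are dictated by shapes; the hidden width in between could be any number. The sketch below is a plain-Python parameter count, not part of the original code, that shows how much of the model's size this choice accounts for. The function names and the `hidden` argument are hypothetical.

```python
# Count the learnable parameters of the VGG-16 model defined above,
# treating the hidden width of the classifier as a variable.

# (in_channels, out_channels) of the 13 convolutional layers, all 3x3.
CONV_CHANNELS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]


def conv_params():
    """Weights (3 * 3 * in * out) plus biases (out) over all conv layers."""
    return sum(3 * 3 * c_in * c_out + c_out for c_in, c_out in CONV_CHANNELS)


def classifier_params(hidden=4096, num_classes=1000, flat=512 * 7 * 7):
    """Weights plus biases of the three linear layers."""
    fc1 = flat * hidden + hidden
    fc2 = hidden * hidden + hidden
    fc3 = hidden * num_classes + num_classes
    return fc1 + fc2 + fc3


total = conv_params() + classifier_params()
print(conv_params())        # 14714688
print(classifier_params())  # 123642856
print(total)                # 138357544
```

With a hidden width of 4096, the three linear layers hold roughly 124 million of the model's roughly 138 million parameters, so the value mainly trades classifier capacity against memory and compute. Calling `classifier_params(hidden=2048)` shows how quickly the count drops with a narrower layer.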