After deploying Hadoop 2.9.1, download Hive 2.2.0 and extract it into the installation directory; in this post that is E:\post\hive\apache-hive-2.2.0-bin

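PS: After downloading, I found that releases after 2.2.0 no longer include the Windows cmd scripts.

PS: Configuring Hadoop is comparatively simple, so it is not covered here.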

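- Environment variables

Four environment variables need to be configured in total, all as system variables. Hive will not run unless all four are set; this pitfall cost me a lot of time.

- HIVE_BIN_PATH: E:\post\hive\apache-hive-2.2.0-bin\bin

- HIVE_HOME: E:\post\hive\apache-hive-2.2.0-bin

- HIVE_LIB: E:\post\hive\apache-hive-2.2.0-bin\lib

- Append to PATH: %HIVE_HOME%\bin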
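To catch a missing or mistyped variable before starting Hive, the four settings above can be checked with a short script. This is only a minimal sketch: `check_hive_env` is a hypothetical helper written for this post, not part of Hive, and it assumes that HIVE_BIN_PATH and HIVE_LIB are meant to be the `bin` and `lib` subdirectories of HIVE_HOME, as in the list above.

```python
import ntpath
import os

# The three dedicated variables from the list above; PATH is checked separately.
REQUIRED_VARS = ("HIVE_HOME", "HIVE_BIN_PATH", "HIVE_LIB")


def _norm(path):
    """Normalize a Windows path so that case, slashes and trailing separators do not matter."""
    return ntpath.normcase(ntpath.normpath(path))


def check_hive_env(env):
    """Hypothetical helper: return a list of problems, empty if the four settings look consistent."""
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]
    hive_home = env.get("HIVE_HOME")
    if hive_home:
        # Assumption: HIVE_BIN_PATH and HIVE_LIB are subdirectories of HIVE_HOME.
        expected = {
            "HIVE_BIN_PATH": ntpath.join(hive_home, "bin"),
            "HIVE_LIB": ntpath.join(hive_home, "lib"),
        }
        for name, want in expected.items():
            got = env.get(name)
            if got and _norm(got) != _norm(want):
                problems.append(f"{name} is {got}, expected {want}")
        # PATH is already expanded in the process environment, so compare real paths.
        path_entries = {_norm(p) for p in env.get("PATH", "").split(";") if p}
        if _norm(ntpath.join(hive_home, "bin")) not in path_entries:
            problems.append("PATH does not contain %HIVE_HOME%\\bin")
    return problems


if __name__ == "__main__":
    for line in check_hive_env(dict(os.environ)) or ["Hive environment variables look consistent"]:
        print(line)
```

Run it from a newly opened cmd window, since windows that were already open do not see changed system variables.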

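- Configuration files

1. In Hive's conf directory, create a new file named hive-site.xml with the following content (the complete XML file is given here to spare you the trouble, since this was another pitfall):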

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://IP:3306/hive_metadata?createDatabaseIfNotExist=true</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>123456</value>
  </property>
  <property>
    <name>datanucleus.fixedDatastore</name>
    <value>false</value>
  </property>
  <property>
    <name>datanucleus.autoCreateSchema</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateTables</name>
    <value>true</value>
  </property>
  <property>
    <name>datanucleus.autoCreateColumns</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.metastore.schema.verification</name>
    <value>false</value>
    <description>
      Enforce metastore schema version consistency.
      True: Verify that the version information stored in the metastore matches the one from the Hive jars. Also disable automatic schema migration attempts. Users are required to manually migrate the schema after a Hive upgrade, which ensures proper metastore schema migration. (Default)
      False: Warn if the version information stored in the metastore doesn't match the one from the Hive jars.
    </description>
  </property>
</configuration>

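PS: Change the environment-specific values (highlighted in bold red in the original post) to match your own setup. If other errors still show up, search for them on Google and add the corresponding property.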
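Since a typo in this file is easy to miss, a quick parse can confirm that the XML is well-formed and that the four JDBC connection properties are present. The following is a rough sketch using only the Python standard library; `read_hive_site` and `missing_properties` are hypothetical helper names, and the path in the usage line is simply the conf directory under the install path used in this post.

```python
import xml.etree.ElementTree as ET

# The four metastore connection properties from the file above.
REQUIRED = (
    "javax.jdo.option.ConnectionURL",
    "javax.jdo.option.ConnectionDriverName",
    "javax.jdo.option.ConnectionUserName",
    "javax.jdo.option.ConnectionPassword",
)


def read_hive_site(source):
    """Hypothetical helper: parse hive-site.xml (a path or binary file object) into a name -> value dict."""
    root = ET.parse(source).getroot()  # raises ParseError if the XML is malformed
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def missing_properties(props):
    """Return the required connection properties that are absent or empty."""
    return [name for name in REQUIRED if not props.get(name)]


if __name__ == "__main__":
    # Assumed location: the conf directory under the install path used in this post.
    props = read_hive_site(r"E:\post\hive\apache-hive-2.2.0-bin\conf\hive-site.xml")
    print(missing_properties(props) or "all connection properties are set")
```

The check only looks at presence, not at whether the MySQL host, user name, or password are actually valid.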

2. In Hive's conf directory, add a new file named hive-env.sh with the following content:

export HADOOP_HOME=E:\post\hadoop\hadoop-2.9.1

export HIVE_CONF_DIR=E:\post\hadoop\hadoop-2.9.1\bin\conf

export HIVE_AUX_JARS_PATH=E:\post\hadoop\hadoop-2.9.1\bin\lib

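PS: These settings refer to the local Hadoop environment and must be adjusted to your own setup.

3. Add the mysql-connector-java.jar driver to Hive's lib directory

Download link on the official site: https://dev.mysql.com/downloads/file/?id=476198

After extracting the archive, rename mysql-connector-java-5.1.46.jar to mysql-connector-java.jar and copy it into Hive's lib directory. (If the link no longer works, search for the file on Google.)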

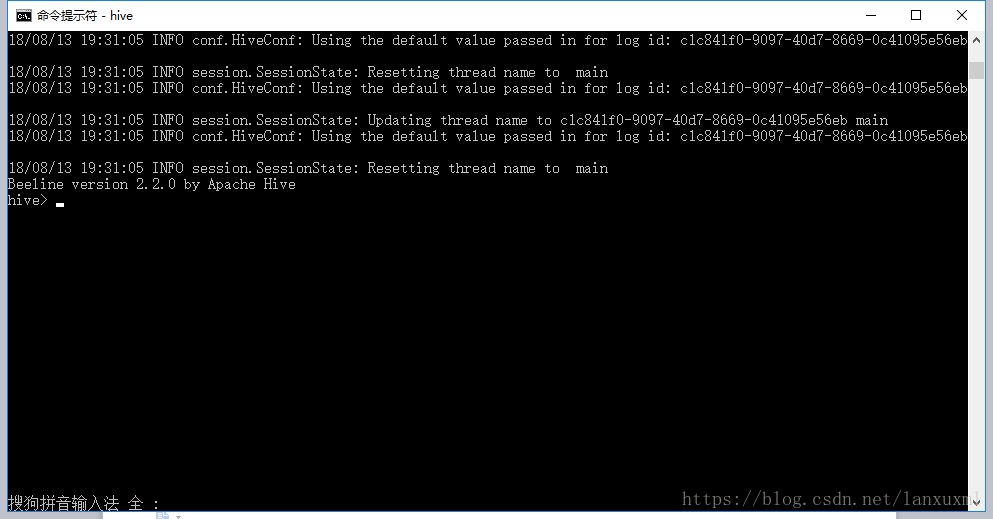
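If Hive later complains that it cannot load the JDBC driver, it helps to confirm that a jar in the lib directory really provides the class named in javax.jdo.option.ConnectionDriverName. A jar is an ordinary zip archive, so this can be sketched with the standard library alone; `jar_has_class` and `find_driver_jars` are hypothetical helpers, and the lib path in the usage line is the one used in this post.

```python
import zipfile
from pathlib import Path


def jar_has_class(jar_path, class_name):
    """Hypothetical helper: True if the jar contains the given fully qualified class."""
    entry = class_name.replace(".", "/") + ".class"
    with zipfile.ZipFile(jar_path) as jar:
        return entry in jar.namelist()


def find_driver_jars(lib_dir, class_name="com.mysql.jdbc.Driver"):
    """List the jars directly under lib_dir that provide class_name, sorted by name."""
    return [p for p in sorted(Path(lib_dir).glob("*.jar")) if jar_has_class(p, class_name)]


if __name__ == "__main__":
    # Assumed location: the lib directory under the install path used in this post.
    for jar in find_driver_jars(r"E:\post\hive\apache-hive-2.2.0-bin\lib"):
        print(jar)
```

An empty result would suggest that the connector jar was not copied into lib, or that the driver class name in hive-site.xml does not match the connector version.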

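- Testing

1. Press Win+R, open cmd, and type hive; the Hive shell starts successfully:

PS: There is no need to run the following commands to initialize the metastore; this point cost me a lot of time.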

schematool -initSchema -dbType mysql

hive --service metastore

2. Check the tables of Hive's metadata database in MySQL: