Siamese Neural Networks

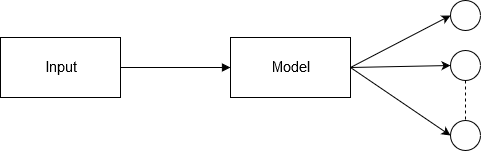

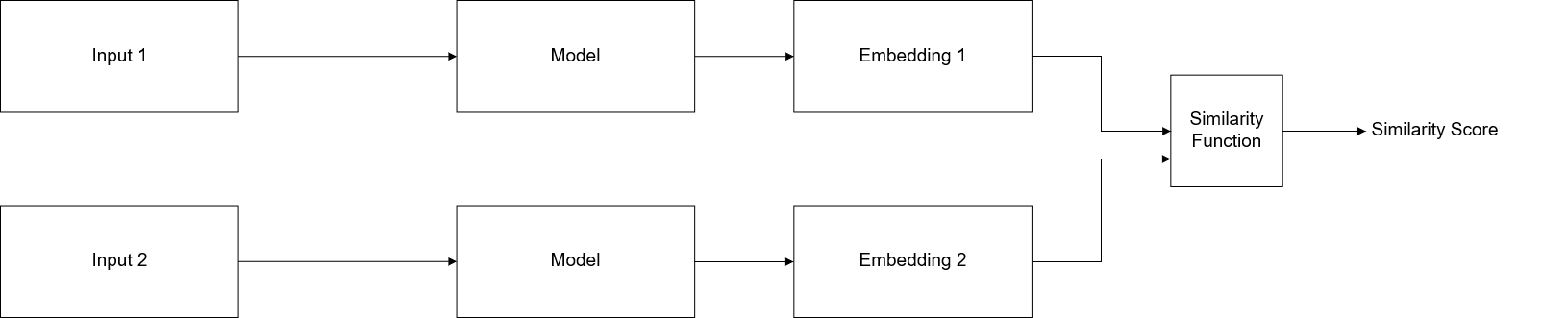

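A Siamese neural network (SNN) is a neural network that contains multiple instances of the same model, all sharing the same architecture and weights. This architecture shows its strength when we must learn from limited data and do not have a complete dataset, as in zero-shot and one-shot learning tasks.

A conventional neural network learns to predict a fixed set of classes. This becomes a problem when we need to add or remove classes: we have to update the network and retrain it on the whole dataset. Deep neural networks also need a large amount of training data. An SNN, on the other hand, learns a similarity function, so we can train it to judge whether two images belong to the same class. This lets us classify new classes of data without retraining the network.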
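To make the "no retraining" point concrete, here is a minimal sketch of classification by similarity. It is not the network from this post: the `similarity` function is a hand-written stand-in (negative Euclidean distance) for what a trained SNN would provide, and the two-dimensional "embeddings" and class names are made up for illustration.

```python
import numpy as np

def similarity(a, b):
    """Toy similarity score: higher means more alike (negative Euclidean distance)."""
    return -np.linalg.norm(a - b)

def classify_by_similarity(query, support_set):
    """Return the label whose reference example is most similar to the query.

    support_set maps each label to one reference vector (hypothetical data).
    """
    return max(support_set, key=lambda label: similarity(query, support_set[label]))

# one reference example per known class
support = {"cat": np.array([1.0, 0.0]), "dog": np.array([0.0, 1.0])}
print(classify_by_similarity(np.array([0.9, 0.1]), support))    # cat

# adding a class only means adding a reference example; nothing is retrained
support["bird"] = np.array([-1.0, -1.0])
print(classify_by_similarity(np.array([-0.8, -1.1]), support))  # bird
```

With a real SNN the comparison would be done by the trained model rather than by a fixed distance, but the way new classes are added stays the same.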

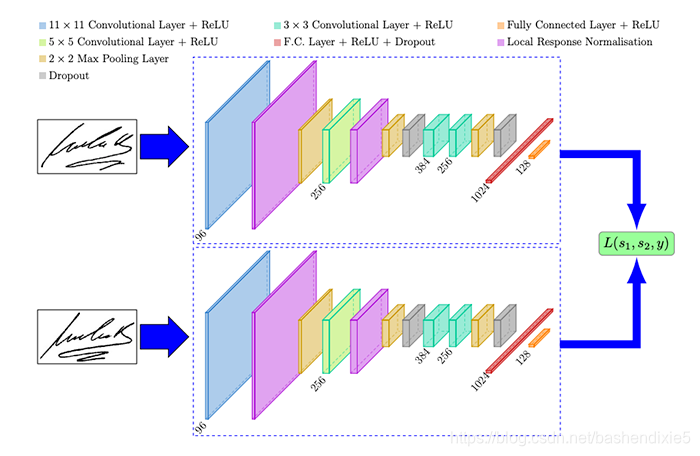

The most popular applications of Siamese neural networks are face recognition and signature verification.

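Building and Training a Siamese Neural Network

1. Building Image Pairs

To train a Siamese neural network we need both positive inputs (two images of the same class) and negative inputs (two images of different classes).

The code below is the core of the pair-building step; the complete code follows further down.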

# import the necessary packages
from tensorflow.keras.datasets import mnist
from imutils import build_montages
import numpy as np
import cv2

def make_pairs(images, labels):
    # initialize two empty lists: one for the image pairs and one for
    # the labels that mark each pair as positive (1) or negative (0)
    pairImages = []
    pairLabels = []
    # count the classes in the dataset, then build, for each class
    # label, the list of indices of all examples with that label
    numClasses = len(np.unique(labels))
    idx = [np.where(labels == i)[0] for i in range(0, numClasses)]
    # loop over all images
    for idxA in range(len(images)):
        # grab the current image and label belonging to the current
        # iteration
        currentImage = images[idxA]
        label = labels[idxA]
        # randomly pick an image that belongs to the *same* class
        # label
        idxB = np.random.choice(idx[label])
        posImage = images[idxB]
        # prepare a positive pair and update the images and labels
        # lists, respectively
        pairImages.append([currentImage, posImage])
        pairLabels.append([1])
        # grab the indices for each of the class labels *not* equal to
        # the current label and randomly pick an image corresponding
        # to a label *not* equal to the current label
        negIdx = np.where(labels != label)[0]
        negImage = images[np.random.choice(negIdx)]
        # prepare a negative pair of images and update our lists
        pairImages.append([currentImage, negImage])
        pairLabels.append([0])
    # return a 2-tuple of our image pairs and labels
    return (np.array(pairImages), np.array(pairLabels))

# load the MNIST dataset (no scaling here: the raw uint8 pixel values
# are used directly for the visualization below)
print("[INFO] loading MNIST dataset...")
(trainX, trainY), (testX, testY) = mnist.load_data('C:/Users/xiaomao/Desktop/mnist.npz')
# build the positive and negative image pairs
print("[INFO] preparing positive and negative pairs...")
(pairTrain, labelTrain) = make_pairs(trainX, trainY)
(pairTest, labelTest) = make_pairs(testX, testY)

# initialize the list of images that will be used when building our
# montage
images = []
# loop over a sample of our training pairs
for i in np.random.choice(np.arange(0, len(pairTrain)), size=(49,)):
    # grab the current image pair and label
    imageA = pairTrain[i][0]
    imageB = pairTrain[i][1]
    label = labelTrain[i]
    # to make it easier to visualize the pairs and their positive or
    # negative annotations, we're going to "pad" the pair with four
    # pixels along the top, bottom, and right borders, respectively
    output = np.zeros((36, 60), dtype="uint8")
    pair = np.hstack([imageA, imageB])
    output[4:32, 0:56] = pair
    # set the text label for the pair along with what color we are
    # going to draw the pair in (green for a "positive" pair and
    # red for a "negative" pair)
    text = "neg" if label[0] == 0 else "pos"
    color = (0, 0, 255) if label[0] == 0 else (0, 255, 0)
    # create a 3-channel image from the grayscale pair (OpenCV uses
    # BGR channel order), resize it from 60x36 to 96x51 (so we can
    # better see it), and then draw what type of pair it is on the image
    vis = cv2.merge([output] * 3)
    vis = cv2.resize(vis, (96, 51), interpolation=cv2.INTER_LINEAR)
    cv2.putText(vis, text, (2, 12), cv2.FONT_HERSHEY_SIMPLEX, 0.75,
        color, 2)
    # add the pair visualization to our list of output images
    images.append(vis)
# construct the montage for the images
montage = build_montages(images, (96, 51), (7, 7))[0]
# show the output montage
cv2.imshow("Siamese Image Pairs", montage)
cv2.waitKey(0)

This yields a montage of positive and negative image pairs that are ready for training.
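Before training on the pairs it can help to check them on a tiny dataset. The sketch below is a variant of `make_pairs` written for this check only: the name `make_pairs_seeded`, the `seed` parameter, the extra `pair_classes` return value and the toy data are all assumptions for illustration, not part of the original script.

```python
import numpy as np

def make_pairs_seeded(images, labels, seed=0):
    """Build one positive and one negative pair per image, reproducibly."""
    rng = np.random.default_rng(seed)  # seeded generator: same pairs on every run
    pair_images, pair_labels, pair_classes = [], [], []
    for i in range(len(images)):
        same = np.flatnonzero(labels == labels[i])   # indices sharing this label
        other = np.flatnonzero(labels != labels[i])  # indices with any other label
        for j, target in ((rng.choice(same), 1), (rng.choice(other), 0)):
            pair_images.append([images[i], images[j]])
            pair_labels.append([target])
            # remember both class labels so the pair can be verified later
            pair_classes.append((int(labels[i]), int(labels[j])))
    return np.array(pair_images), np.array(pair_labels), pair_classes

# toy stand-in for MNIST: six 2x2 "images" in three classes
toy_images = np.arange(24).reshape(6, 2, 2)
toy_labels = np.array([0, 0, 1, 1, 2, 2])
pairs, targets, classes = make_pairs_seeded(toy_images, toy_labels)

print(pairs.shape)    # (12, 2, 2, 2): 12 pairs, 2 images each, 2x2 pixels
print(targets.shape)  # (12, 1)
# every pair labeled 1 shares a class, every pair labeled 0 does not
print(all((a == b) == bool(t[0]) for (a, b), t in zip(classes, targets)))  # True
```

As in the original function, the positive partner is drawn from all images of the class, so an image can occasionally be paired with itself.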

2. Training the Network

# Siamese neural network (complete code)
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import GlobalAveragePooling2D
from tensorflow.keras.layers import Lambda
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.datasets import mnist
import tensorflow.keras.backend as k
import matplotlib.pyplot as plt
import numpy as np
import os

# let TensorFlow grow GPU memory on demand instead of reserving it all
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = "true"

# configuration
# specify the shape of the inputs for our network
IMG_SHAPE = (28, 28, 1)
# specify the batch size and number of epochs
BATCH_SIZE = 64
EPOCHS = 100
# define the path to the base output directory
BASE_OUTPUT = "C:/Users/xiaomao/Desktop"
# use the base output path to derive the path to the serialized
# model along with training history plot
MODEL_PATH = os.path.sep.join([BASE_OUTPUT, "siamese_model"])
PLOT_PATH = os.path.sep.join([BASE_OUTPUT, "plot.png"])

# build the shared feature extractor of the siamese network
def build_siamese_model(input_shape, embedding_dim=48):
    # specify the inputs for the feature extractor network
    inputs = Input(input_shape)
    # define the first set of CONV => RELU => POOL => DROPOUT layers
    x = Conv2D(64, (2, 2), padding="same", activation="relu")(inputs)
    x = MaxPooling2D(pool_size=(2, 2))(x)
    x = Dropout(0.3)(x)
    # second set of CONV => RELU => POOL => DROPOUT layers
    x = Conv2D(64, (2, 2), padding="same", activation="relu")(x)
    x = MaxPooling2D(pool_size=2)(x)
    x = Dropout(0.3)(x)
    # prepare the final outputs
    pooled_output = GlobalAveragePooling2D()(x)
    out_puts = Dense(embedding_dim)(pooled_output)
    # build the model
    my_model = Model(inputs, out_puts)
    # return the model to the calling function
    return my_model

# build the positive and negative image pairs
def make_pairs(images, labels):
    # initialize two empty lists: one for the image pairs and one for
    # the labels that mark each pair as positive (1) or negative (0)
    pair_images = []
    pair_labels = []
    # count the classes in the dataset, then build, for each class
    # label, the list of indices of all examples with that label
    num_classes = len(np.unique(labels))
    idx = [np.where(labels == i)[0] for i in range(0, num_classes)]
    # loop over all images
    for idxA in range(len(images)):
        # grab the current image and label belonging to the current
        # iteration
        current_image = images[idxA]
        label = labels[idxA]
        # randomly pick an image that belongs to the *same* class
        # label
        idx_b = np.random.choice(idx[label])
        pos_image = images[idx_b]
        # prepare a positive pair and update the images and labels
        # lists, respectively
        pair_images.append([current_image, pos_image])
        pair_labels.append([1])
        # grab the indices for each of the class labels *not* equal to
        # the current label and randomly pick an image corresponding
        # to a label *not* equal to the current label
        neg_idx = np.where(labels != label)[0]
        neg_image = images[np.random.choice(neg_idx)]
        # prepare a negative pair of images and update our lists
        pair_images.append([current_image, neg_image])
        pair_labels.append([0])
    # return a 2-tuple of our image pairs and labels
    return np.array(pair_images), np.array(pair_labels)

# plot and save the training history
def plot_training(h, plot_path):
    # construct a plot that plots and saves the training history
    plt.style.use("ggplot")
    plt.figure()
    plt.plot(h.history["loss"], label="train_loss")
    plt.plot(h.history["val_loss"], label="val_loss")
    plt.plot(h.history["accuracy"], label="train_acc")
    plt.plot(h.history["val_accuracy"], label="val_acc")
    plt.title("Training Loss and Accuracy")
    plt.xlabel("Epoch #")
    plt.ylabel("Loss/Accuracy")
    plt.legend(loc="lower left")
    plt.savefig(plot_path)

# compute the Euclidean distance between two batches of embeddings
def euclidean_distance(vectors):
    # unpack the vectors into separate lists
    (featsAA, featsBB) = vectors
    # compute the sum of squared distances between the vectors
    sum_squared = k.sum(k.square(featsAA - featsBB), axis=1, keepdims=True)
    # return the euclidean distance between the vectors (the epsilon
    # floor keeps the square root away from exactly zero)
    return k.sqrt(k.maximum(sum_squared, k.epsilon()))

# load the dataset and scale the pixel values to the range [0, 1]
print("[INFO] loading MNIST dataset...")
(trainX, trainY), (testX, testY) = mnist.load_data('C:/Users/xiaomao/Desktop/mnist.npz')
trainX = trainX / 255.0
testX = testX / 255.0
# add a channel dimension to the images
trainX = np.expand_dims(trainX, axis=-1)
testX = np.expand_dims(testX, axis=-1)
# prepare the positive and negative pairs
print("[INFO] preparing positive and negative pairs...")
(pairTrain, labelTrain) = make_pairs(trainX, trainY)
(pairTest, labelTest) = make_pairs(testX, testY)

# configure the siamese network: two inputs that share one feature extractor
print("[INFO] building siamese network...")
imgA = Input(IMG_SHAPE)
imgB = Input(IMG_SHAPE)
featureExtractor = build_siamese_model(IMG_SHAPE)
featsA = featureExtractor(imgA)
featsB = featureExtractor(imgB)
# build the network: wrap the distance function in a Lambda layer so it
# runs as a regular Keras layer, then map the distance to a probability
distance = Lambda(euclidean_distance)([featsA, featsB])
outputs = Dense(1, activation="sigmoid")(distance)
model = Model(inputs=[imgA, imgB], outputs=outputs)
# compile the model
print("[INFO] compiling model...")
model.compile(loss="binary_crossentropy", optimizer="adam", metrics=["accuracy"])
# train the model
print("[INFO] training model...")
history = model.fit([pairTrain[:, 0], pairTrain[:, 1]], labelTrain[:],
    validation_data=([pairTest[:, 0], pairTest[:, 1]], labelTest[:]),
    batch_size=BATCH_SIZE,
    epochs=EPOCHS)
# serialize the model to disk
print("[INFO] saving siamese model...")
model.save(MODEL_PATH)
# plot the training history
print("[INFO] plotting training history...")
plot_training(history, PLOT_PATH)
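The last two layers of the script above (Euclidean distance followed by a one-unit sigmoid) can be reproduced by hand with NumPy to see what they compute. This is only a sketch: the weight `w` and bias `b` below are hypothetical values chosen for illustration, whereas in the real model `Dense(1)` learns them during training.

```python
import numpy as np

def euclidean_distance(feats_a, feats_b, eps=1e-7):
    """Row-wise Euclidean distance, floored at sqrt(eps) like the Keras version."""
    sum_squared = np.sum(np.square(feats_a - feats_b), axis=1, keepdims=True)
    return np.sqrt(np.maximum(sum_squared, eps))

def sigmoid_head(distance, w=-4.0, b=2.0):
    """One-unit dense layer with sigmoid activation (w and b are made-up values)."""
    return 1.0 / (1.0 + np.exp(-(w * distance + b)))

# two pairs of 2-D embeddings: identical vectors, and vectors 5 units apart
feats_a = np.array([[0.0, 0.0], [0.0, 0.0]])
feats_b = np.array([[0.0, 0.0], [3.0, 4.0]])

d = euclidean_distance(feats_a, feats_b)
p = sigmoid_head(d)
print(d.shape)            # (2, 1): one distance per pair
print(p[0, 0] > p[1, 0])  # True: the closer pair gets the higher score
```

With a negative weight, a small distance maps to a score near 1 and a large distance to a score near 0, which matches the pair labels (1 for positive, 0 for negative) used with the binary cross-entropy loss.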

Training finishes and the model and training plot are saved, but a single run takes a long time.

Epoch 91/100

1875/1875 [==============================] - 37s 19ms/step - loss: 0.3480 - accuracy: 0.8484 - val_loss: 0.3275 - val_accuracy: 0.8551

Epoch 92/100

1875/1875 [==============================] - 37s 20ms/step - loss: 0.3496 - accuracy: 0.8484 - val_loss: 0.3207 - val_accuracy: 0.8573

Epoch 93/100

1875/1875 [==============================] - 37s 19ms/step - loss: 0.3483 - accuracy: 0.8487 - val_loss: 0.2985 - val_accuracy: 0.8694

Epoch 94/100

1875/1875 [==============================] - 36s 19ms/step - loss: 0.3495 - accuracy: 0.8472 - val_loss: 0.3156 - val_accuracy: 0.8608

Epoch 95/100

1875/1875 [==============================] - 37s 19ms/step - loss: 0.3488 - accuracy: 0.8491 - val_loss: 0.3241 - val_accuracy: 0.8552

Epoch 96/100

1875/1875 [==============================] - 37s 19ms/step - loss: 0.3475 - accuracy: 0.8497 - val_loss: 0.3199 - val_accuracy: 0.8581

Epoch 97/100

1875/1875 [==============================] - 42s 22ms/step - loss: 0.3476 - accuracy: 0.8488 - val_loss: 0.3132 - val_accuracy: 0.8597

Epoch 98/100

1875/1875 [==============================] - 38s 20ms/step - loss: 0.3485 - accuracy: 0.8488 - val_loss: 0.3199 - val_accuracy: 0.8579

Epoch 99/100

1875/1875 [==============================] - 36s 19ms/step - loss: 0.3486 - accuracy: 0.8482 - val_loss: 0.3125 - val_accuracy: 0.8622

Epoch 100/100

1875/1875 [==============================] - 38s 20ms/step - loss: 0.3482 - accuracy: 0.8480 - val_loss: 0.3009 - val_accuracy: 0.8680

[INFO] saving siamese model...

2020-12-19 23:09:07.451142: W tensorflow/python/util/util.cc:329] Sets are not currently considered sequences, but this may change in the future, so consider avoiding using them.

WARNING:tensorflow:From D:\deeplearn\tensorflow1\lib\site-packages\tensorflow\python\ops\resource_variable_ops.py:1817: calling BaseResourceVariable.__init__ (from tensorflow.python.ops.resource_variable_ops) with constraint is deprecated and will be removed in a future version.

Instructions for updating:

If using Keras pass *_constraint arguments to layers.

[INFO] plotting training history...

Process finished with exit code 0
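The step count and the long run time in the log follow from simple arithmetic. The sketch below assumes the standard MNIST training split of 60,000 images and takes roughly 37 seconds per epoch as a typical value from the log; the variable names are only for illustration.

```python
import math

num_train_images = 60_000   # size of the MNIST training split
pairs_per_image = 2         # make_pairs adds one positive and one negative pair
batch_size = 64             # BATCH_SIZE in the training script
epochs = 100                # EPOCHS in the training script
seconds_per_epoch = 37      # typical value read off the log

num_pairs = num_train_images * pairs_per_image
steps_per_epoch = math.ceil(num_pairs / batch_size)
total_minutes = epochs * seconds_per_epoch / 60

print(num_pairs)                # 120000
print(steps_per_epoch)          # 1875, the step count shown in the log
print(round(total_minutes, 1))  # 61.7
```

So on this setup 100 epochs take about an hour.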

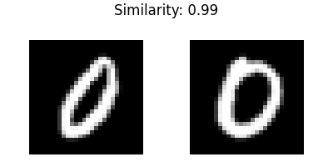

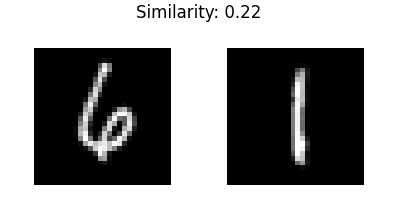

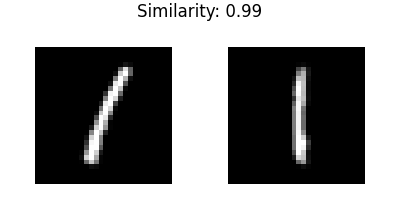

3. Testing the Network

Load the model, read the images in a folder, and compare them pair by pair in a loop.

# import the necessary packages
from tensorflow.keras.models import load_model
from imutils.paths import list_images
import matplotlib.pyplot as plt
import numpy as np
import argparse
import cv2

# construct the argument parser and parse the arguments
# ap = argparse.ArgumentParser()
# ap.add_argument("-i", "--input", required=True, help="path to input directory of testing images")
# args = vars(ap.parse_args())
# grab the test dataset image paths and then randomly generate a
# total of 10 image pairs
print("[INFO] loading test dataset...")
testImagePaths = list(list_images('C:/Users/xiaomao/Desktop/num'))
np.random.seed(42)
pairs = np.random.choice(testImagePaths, size=(10, 2))
# load the model from disk
print("[INFO] loading siamese model...")
model = load_model('C:/Users/xiaomao/Desktop/siamese_model/')

# loop over all image pairs
for (i, (pathA, pathB)) in enumerate(pairs):
    # load both the images as grayscale (flag 0); they are assumed to
    # already be 28x28, matching the network input
    imageA = cv2.imread(pathA, 0)
    imageB = cv2.imread(pathB, 0)
    # create a copy of both the images for visualization purpose
    origA = imageA.copy()
    origB = imageB.copy()
    # add a channel dimension to both the images
    imageA = np.expand_dims(imageA, axis=-1)
    imageB = np.expand_dims(imageB, axis=-1)
    # add a batch dimension to both images
    imageA = np.expand_dims(imageA, axis=0)
    imageB = np.expand_dims(imageB, axis=0)
    # scale the pixel values to the range of [0, 1]
    imageA = imageA / 255.0
    imageB = imageB / 255.0
    # use our siamese model to make predictions on the image pair,
    # indicating whether or not the images belong to the same class
    preds = model.predict([imageA, imageB])
    proba = preds[0][0]
    # initialize the figure
    fig = plt.figure("Pair #{}".format(i + 1), figsize=(4, 2))
    plt.suptitle("Similarity: {:.2f}".format(proba))
    # show first image
    ax = fig.add_subplot(1, 2, 1)
    plt.imshow(origA, cmap=plt.cm.gray)
    plt.axis("off")
    # show the second image
    ax = fig.add_subplot(1, 2, 2)
    plt.imshow(origB, cmap=plt.cm.gray)
    plt.axis("off")
    # show the plot
    plt.show()
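The preprocessing steps in the loop above, plus a yes/no decision on the similarity score, can be collected into two small helpers. This is a sketch under assumptions: the helper names `preprocess` and `same_class` and the 0.5 threshold are hypothetical choices, not part of the original script, and the check relies on the test images being 28x28 grayscale.

```python
import numpy as np

def preprocess(gray_image):
    """Turn a 28x28 uint8 grayscale image into a (1, 28, 28, 1) float batch in [0, 1]."""
    if gray_image.shape != (28, 28):
        raise ValueError("expected a 28x28 grayscale image, got {}".format(gray_image.shape))
    scaled = gray_image.astype("float32") / 255.0
    # add the batch dimension in front and the channel dimension at the end
    return scaled[np.newaxis, ..., np.newaxis]

def same_class(similarity, threshold=0.5):
    """Decide 'same class' when the sigmoid output reaches the (assumed) threshold."""
    return bool(similarity >= threshold)

# a dummy all-white image stands in for a file loaded with cv2.imread(path, 0)
dummy = np.full((28, 28), 255, dtype="uint8")
batch = preprocess(dummy)
print(batch.shape)       # (1, 28, 28, 1)
print(same_class(0.87))  # True
print(same_class(0.12))  # False
```

In the test loop, `preprocess(cv2.imread(pathA, 0))` would replace the three manual steps, and `same_class(proba)` would turn the displayed similarity into a decision.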