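Only leaf tensors keep their gradient in .grad after backward(); a non-leaf tensor's .grad stays None unless retain_grad() is called on it.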
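
The statement above can be checked directly. This is a minimal sketch, assuming default autograd settings; the names `a`, `mid`, `kept`, and `mid_grad` are illustrative and do not come from the original note.

```python
import warnings
import torch

# Leaf tensor: created directly by the user with requires_grad=True.
a = torch.ones(2, 2, requires_grad=True)

# Non-leaf tensors: results of operations on a leaf.
mid = a * 3
kept = a * 2
kept.retain_grad()  # explicitly ask autograd to keep this gradient

loss = (mid + kept).sum()
loss.backward()

print(a.is_leaf, mid.is_leaf, kept.is_leaf)  # True False False
print(a.grad)     # populated: d(loss)/da = 3 + 2 = 5 for every element
print(kept.grad)  # populated only because of retain_grad(): all ones

# Reading .grad on a non-leaf tensor without retain_grad() gives None.
# PyTorch also emits a UserWarning here, which this sketch silences.
with warnings.catch_warnings():
    warnings.simplefilter("ignore")
    mid_grad = mid.grad
print(mid_grad)   # None
```

The gradient still flows through `mid` during the backward pass; autograd simply does not store it on the tensor unless asked to.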

import torch

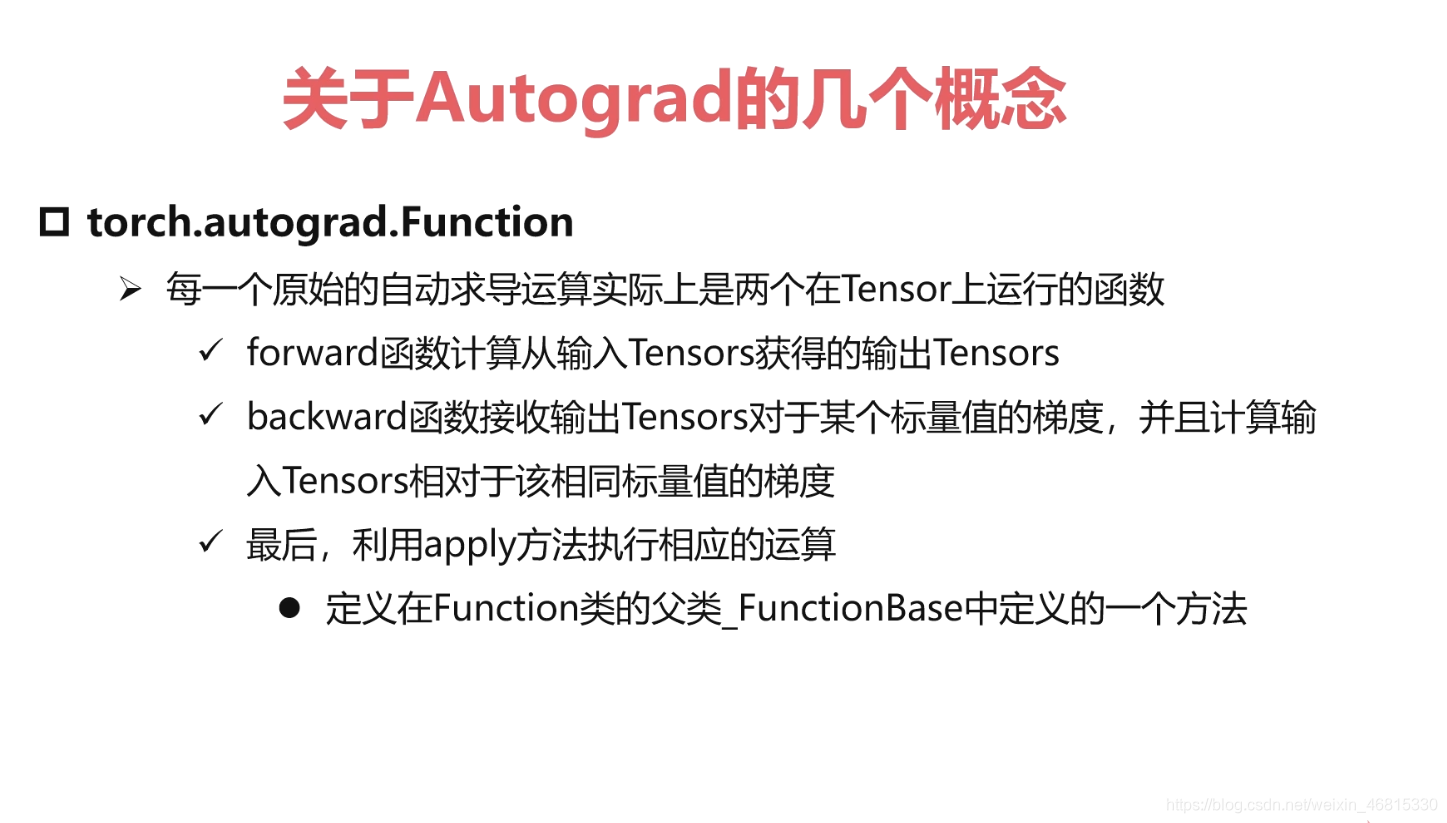

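class line(torch.autograd.Function):
    @staticmethod
    def forward(ctx, w, x, b):
        # y = w * x + b (element-wise)
        # Save the inputs: backward needs x for grad_w and w for grad_x.
        ctx.save_for_backward(w, x, b)
        return w * x + b

    @staticmethod
    def backward(ctx, grad_out):
        w, x, b = ctx.saved_tensors
        grad_w = grad_out * x  # dy/dw = x
        grad_x = grad_out * w  # dy/dx = w
        grad_b = grad_out      # dy/db = 1
        # Return one gradient per forward input, in the same order.
        return grad_w, grad_x, grad_b

# w, x, and b are leaf tensors, so backward() fills in their .grad.
w = torch.rand(2, 2, requires_grad=True)
x = torch.rand(2, 2, requires_grad=True)
b = torch.rand(2, 2, requires_grad=True)

out = line.apply(w, x, b)
# out is not a scalar, so backward() needs an explicit upstream gradient.
out.backward(torch.ones(2, 2))

print(w, x, b)
print(w.grad, x.grad, b.grad)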

tensor([[0.9691, 0.7199],
        [0.3945, 0.3675]], requires_grad=True) tensor([[0.3341, 0.2925],
        [0.3486, 0.7383]], requires_grad=True) tensor([[0.5318, 0.2300],
        [0.7061, 0.9636]], requires_grad=True)
tensor([[0.3341, 0.2925],
        [0.3486, 0.7383]]) tensor([[0.9691, 0.7199],
        [0.3945, 0.3675]]) tensor([[1., 1.],
        [1., 1.]])
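
In the output, w.grad equals x, x.grad equals w, and b.grad is all ones, which matches the hand-written backward for y = w * x + b with an upstream gradient of ones. One way to check such a backward more systematically is `torch.autograd.gradcheck`, which compares the analytic gradients against finite differences. The sketch below is an addition, not part of the original note; it redefines `line` so that it runs on its own and uses double precision because gradcheck expects it. The names `ok`, `got`, and `ref` are illustrative.

```python
import torch

class line(torch.autograd.Function):
    @staticmethod
    def forward(ctx, w, x, b):
        ctx.save_for_backward(w, x, b)
        return w * x + b

    @staticmethod
    def backward(ctx, grad_out):
        w, x, b = ctx.saved_tensors
        return grad_out * x, grad_out * w, grad_out

# gradcheck compares against finite differences, so use float64 inputs.
w = torch.rand(2, 2, dtype=torch.double, requires_grad=True)
x = torch.rand(2, 2, dtype=torch.double, requires_grad=True)
b = torch.rand(2, 2, dtype=torch.double, requires_grad=True)

# Returns True on success and raises an error on a mismatch.
ok = torch.autograd.gradcheck(line.apply, (w, x, b), eps=1e-6, atol=1e-4)
print(ok)

# Cross-check against PyTorch's built-in autograd for the same expression.
got = torch.autograd.grad(line.apply(w, x, b).sum(), (w, x, b))
ref = torch.autograd.grad((w * x + b).sum(), (w, x, b))
print(all(torch.allclose(g, r) for g, r in zip(got, ref)))
```

Both checks exercise the same three gradients that the original example prints, just without relying on reading the numbers by eye.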