I. Overview

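This article writes real-time data to Redis using a Redis sink configured with a custom `RedisMapper`. For convenience, the job reads its input directly from a socket, processes it with a couple of operators, and writes the result straight into Redis. Since the job is fairly simple, see the example code below for the details.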

Software versions:

Flink 1.10

Redis 5.0.5

II. Hands-on Code

1. Add the Redis connector dependency to the pom

```xml
<dependency>
    <groupId>org.apache.bahir</groupId>
    <artifactId>flink-connector-redis_2.11</artifactId>
    <version>1.0</version>
</dependency>
```

2. Main class:

```java
package com.hadoop.ljs.flink110.redis;

import org.apache.flink.api.common.functions.FilterFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.connectors.redis.RedisSink;
import org.apache.flink.streaming.connectors.redis.common.config.FlinkJedisPoolConfig;
import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommand;
import org.apache.flink.streaming.connectors.redis.common.mapper.RedisCommandDescription;
import org.apache.flink.streaming.connectors.redis.common.mapper.RedisMapper;

/**
 * @author: Created By lujisen
 * @company ChinaUnicom Software JiNan
 * @date: 2020-05-02 10:30
 * @version: v1.0
 * @description: com.hadoop.ljs.flink110.redis
 */
public class RedisSinkMain {
    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment senv = StreamExecutionEnvironment.getExecutionEnvironment();
        // Read raw text lines from a socket; a sender must already be listening on localhost:9000
        DataStream<String> source = senv.socketTextStream("localhost", 9000);

        // Keep only lines of the form "key,value"
        DataStream<String> filter = source.filter(new FilterFunction<String>() {
            @Override
            public boolean filter(String value) throws Exception {
                return value != null && value.split(",").length == 2;
            }
        });

        // Split each line into (key, value); Flink's Java Tuple2 exposes its fields as f0/f1
        DataStream<Tuple2<String, String>> keyValue = filter.map(new MapFunction<String, Tuple2<String, String>>() {
            @Override
            public Tuple2<String, String> map(String value) throws Exception {
                String[] split = value.split(",");
                return new Tuple2<>(split[0], split[1]);
            }
        });

        // Redis config: use FlinkJedisPoolConfig for a single Redis instance,
        // FlinkJedisClusterConfig for a Redis cluster
        FlinkJedisPoolConfig redisConf = new FlinkJedisPoolConfig.Builder()
                .setHost("worker2.hadoop.ljs")
                .setPort(6379)
                .setPassword("123456a?")
                .build();

        keyValue.addSink(new RedisSink<Tuple2<String, String>>(redisConf, new RedisMapper<Tuple2<String, String>>() {
            // HSET into the hash "table1": f0 becomes the field, f1 the value
            @Override
            public RedisCommandDescription getCommandDescription() {
                return new RedisCommandDescription(RedisCommand.HSET, "table1");
            }

            @Override
            public String getKeyFromData(Tuple2<String, String> data) {
                return data.f0;
            }

            @Override
            public String getValueFromData(Tuple2<String, String> data) {
                return data.f1;
            }
        }));

        // Start the job
        senv.execute();
    }
}
```
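To make the mapper's effect concrete: with `RedisCommand.HSET` and the additional key `"table1"`, each record ends up as `HSET table1 <getKeyFromData> <getValueFromData>`, so every key becomes a field of one hash and a repeated key overwrites the previous value. The sketch below replays the filter, map and HSET steps in plain Java on an in-memory map that stands in for Redis. It uses no Flink or Redis classes, and the class name and sample lines are hypothetical, chosen only for illustration.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical plain-Java replay of the job: filter -> map -> HSET table1. */
public class RedisSinkSimulation {

    // Same rule as the FilterFunction + MapFunction: accept only "key,value"
    public static Optional<String[]> parse(String line) {
        if (line == null) {
            return Optional.empty();
        }
        String[] split = line.split(",");
        return split.length == 2 ? Optional.of(split) : Optional.empty();
    }

    // In-memory stand-in for HSET <hashKey> <field> <value>
    public static void hset(Map<String, Map<String, String>> redis,
                            String hashKey, String field, String value) {
        redis.computeIfAbsent(hashKey, k -> new LinkedHashMap<>()).put(field, value);
    }

    public static Map<String, Map<String, String>> run(List<String> lines) {
        Map<String, Map<String, String>> redis = new HashMap<>();
        for (String line : lines) {
            // "table1" plays the role of the additional key in RedisCommandDescription
            parse(line).ifPresent(kv -> hset(redis, "table1", kv[0], kv[1]));
        }
        return redis;
    }

    public static void main(String[] args) {
        List<String> input = Arrays.asList(
                "1001,zhangsan", "bad line", "1002,lisi", "1001,wangwu", "a,b,c");
        // prints {table1={1001=wangwu, 1002=lisi}}
        System.out.println(run(input));
    }
}
```

Malformed lines ("bad line", "a,b,c") are dropped by the filter, and the second `1001` record overwrites the first one, as HSET would in Redis.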

3. Testing

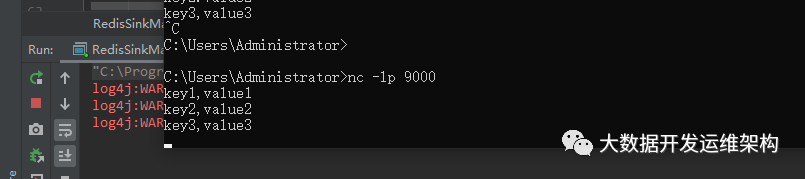

1) Send data over a socket from the Windows side

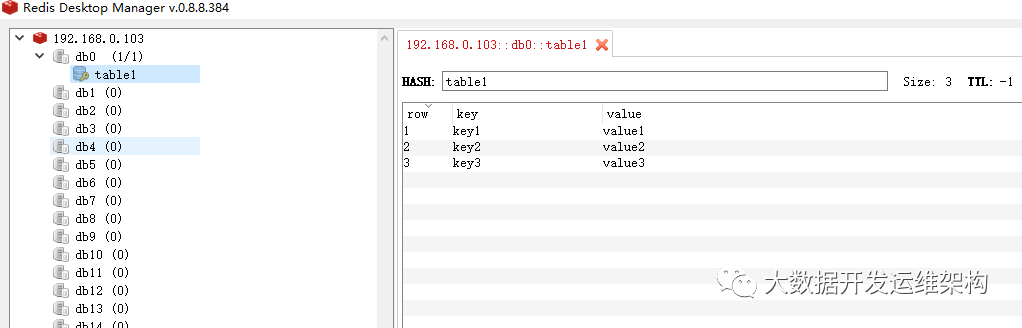
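Because `socketTextStream("localhost", 9000)` makes the Flink source connect as a client, some process must already be listening on port 9000 on the machine where the source runs before the job starts; this is usually done with netcat. If netcat is not at hand, the following is a minimal Java sketch of such a sender, under the assumption that one client (the Flink source) connects and that lines typed on stdin should be forwarded as-is. The class `LineSender` is hypothetical and not part of the original project.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/** Hypothetical netcat stand-in: waits for the Flink source, then forwards lines. */
public class LineSender {

    // Accepts one client and writes every input line terminated by '\n'
    // (the default delimiter of socketTextStream), flushing after each line
    // so that each record reaches Flink immediately.
    public static void serve(ServerSocket server, BufferedReader input) throws IOException {
        try (Socket client = server.accept();
             Writer out = new OutputStreamWriter(client.getOutputStream(), StandardCharsets.UTF_8)) {
            String line;
            while ((line = input.readLine()) != null) {
                out.write(line);
                out.write('\n');
                out.flush();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Port 9000 matches senv.socketTextStream("localhost", 9000) in the job
        try (ServerSocket server = new ServerSocket(9000);
             BufferedReader stdin = new BufferedReader(
                     new InputStreamReader(System.in, StandardCharsets.UTF_8))) {
            System.out.println("Listening on port 9000: start the Flink job, then type key,value lines");
            serve(server, stdin);
        }
    }
}
```

Start the sender first, then the Flink job; when stdin is closed, the sender closes the connection and the socket source reaches end of stream.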

2) Verify the result in Redis
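Since the sink writes with HSET into the hash `table1`, every `key,value` line sent over the socket should appear as a field of that hash, which `HGETALL table1` (for example in `redis-cli`) lists. As a dependency-free alternative, here is a rough Java sketch that speaks the Redis RESP protocol directly: it sends `AUTH` and `HGETALL table1` using the host, port and password from the job above, and prints the field/value pairs. The class name `RedisCheck` is hypothetical, and the reply reader assumes values contain no line breaks; a real client such as Jedis is the more robust choice.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical RESP-level check of the hash written by the Flink job. */
public class RedisCheck {

    // Encodes a command as a RESP array of bulk strings,
    // e.g. HGETALL table1 -> "*2\r\n$7\r\nHGETALL\r\n$6\r\ntable1\r\n"
    public static String encode(String... parts) {
        StringBuilder sb = new StringBuilder();
        sb.append('*').append(parts.length).append("\r\n");
        for (String p : parts) {
            int len = p.getBytes(StandardCharsets.UTF_8).length; // RESP lengths are in bytes
            sb.append('$').append(len).append("\r\n").append(p).append("\r\n");
        }
        return sb.toString();
    }

    // Reads an array reply of bulk strings (the shape HGETALL returns).
    // Assumes no value contains a line break.
    public static List<String> readArray(BufferedReader in) throws IOException {
        String header = in.readLine();
        if (header == null || header.isEmpty() || header.charAt(0) != '*') {
            throw new IOException("unexpected reply: " + header);
        }
        int n = Integer.parseInt(header.substring(1));
        List<String> items = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            in.readLine();            // "$<len>" line, skipped
            items.add(in.readLine()); // the bulk string itself
        }
        return items;
    }

    public static void main(String[] args) throws IOException {
        try (Socket s = new Socket("worker2.hadoop.ljs", 6379);
             BufferedReader in = new BufferedReader(
                     new InputStreamReader(s.getInputStream(), StandardCharsets.UTF_8))) {
            OutputStream out = s.getOutputStream();
            out.write(encode("AUTH", "123456a?").getBytes(StandardCharsets.UTF_8));
            out.write(encode("HGETALL", "table1").getBytes(StandardCharsets.UTF_8));
            out.flush();
            System.out.println("AUTH reply: " + in.readLine()); // "+OK" when the password is accepted
            List<String> kv = readArray(in);
            for (int i = 0; i + 1 < kv.size(); i += 2) {
                System.out.println(kv.get(i) + " = " + kv.get(i + 1));
            }
        }
    }
}
```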