Fetching Kubernetes resources with client-go

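Some concepts:

1. SDK is short for Software Development Kit.

2. client-go is the official Kubernetes client library for Go. A Go application uses it to talk to the Kubernetes API Server, so Kubernetes resources can be created, read, updated, and deleted programmatically.

3. API (application programming interface): a clearly defined set of methods by which software components communicate.

(Your IDE, GoLand for example, needs GOROOT and GOPATH configured.)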

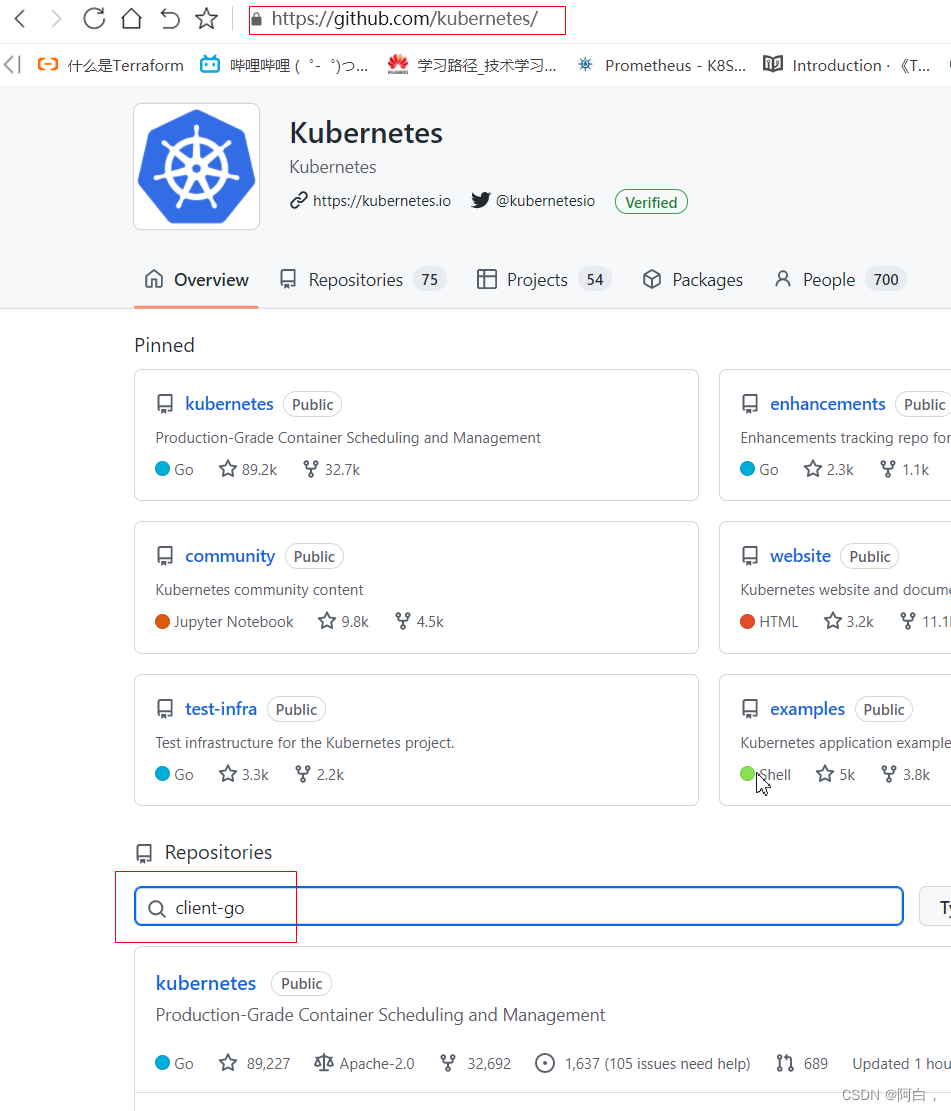

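Download the matching version

Downloading client-go

Method 1

(Recommended: the steps are easier to follow and you control exactly what is installed.)

Open the releases page, download the archive for the version you need, and extract it under GOPATH/src. My project directory also lives under src, so the downloaded package and my project directory sit side by side there.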

[root@node2 src]# ls

cl-go client-go hello

[root@node2 src]# pwd

/root/go/src

cl-go is my own project directory. The main.go inside it imports packages from client-go.

Run go install on the package you need; after that it can be imported.

[root@node2 client-go]# ls

CHANGELOG.md discovery go.sum kubernetes_test OWNERS rest testing util

code-of-conduct.md dynamic informers LICENSE pkg restmapper third_party

CONTRIBUTING.md examples INSTALL.md listers plugin scale tools

deprecated go.mod kubernetes metadata README.md SECURITY_CONTACTS transport

[root@node2 client-go]# cd tools/

[root@node2 tools]# ls

auth clientcmd leaderelection pager record remotecommand

cache events metrics portforward reference watch

[root@node2 tools]# pwd

/root/go/src/client-go/tools

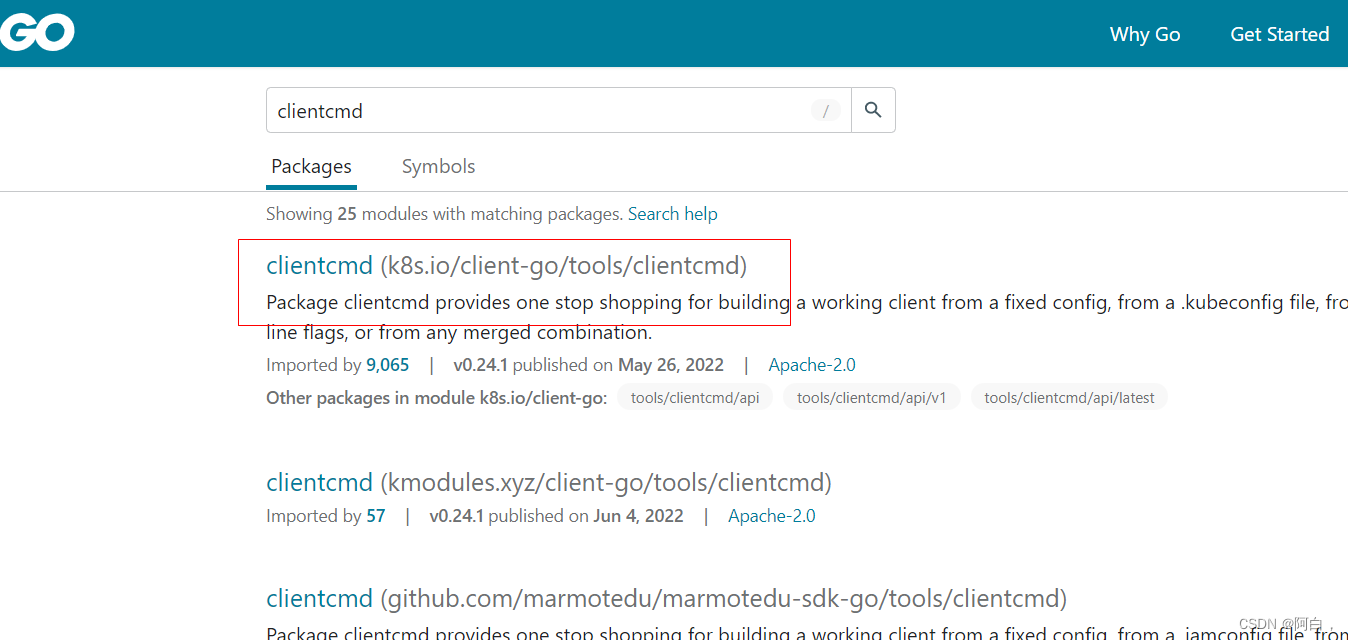

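The package needed here is clientcmd; its directory mostly contains plain .go files.

Run go install ./clientcmd from this tools directory (a bare go install clientcmd is treated as an import path, not as the local directory).

If the dependency download fails, run go env -w GOPROXY="https://goproxy.cn" and install again.

The package is now usable, for example in main.go: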

import (

"client-go/tools/clientcmd"

)

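An import path can be relative, starting with ./ (GOPATH mode only; module mode rejects relative imports), or a path relative to GOPATH/src or GOROOT/src. The toolchain searches those roots for the package.

If the build reports that a package cannot be found, copy the package contents to the GOROOT path named in the error message (here I copied every file under clientcmd to GOROOT/src/client-go/tools/clientcmd).

In the project directory, run go mod init xxx (any module name, for example the project name),

then run go mod tidy.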

Method 2

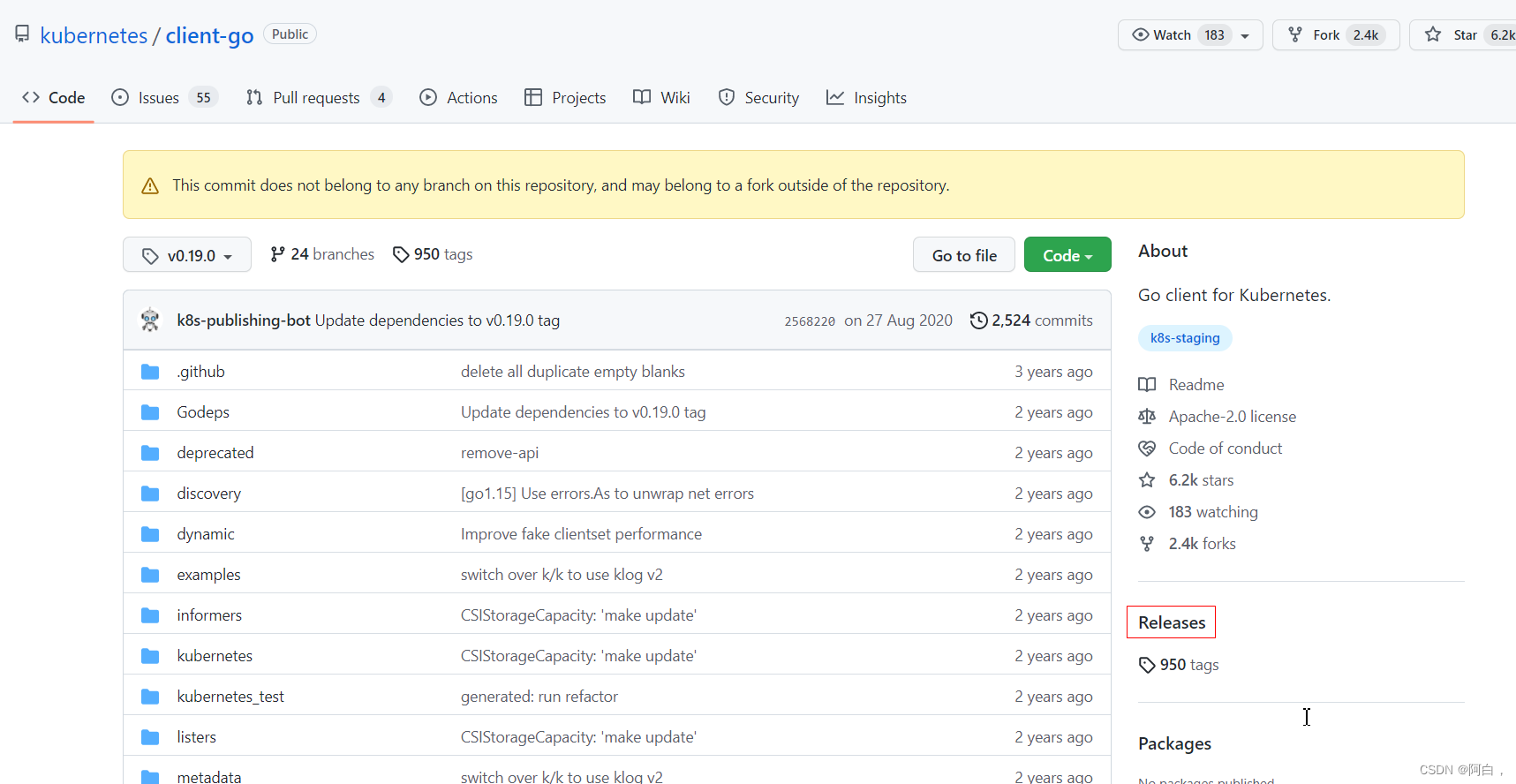

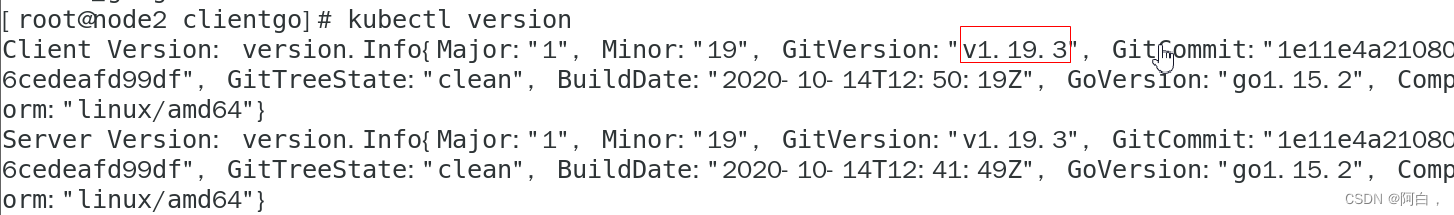

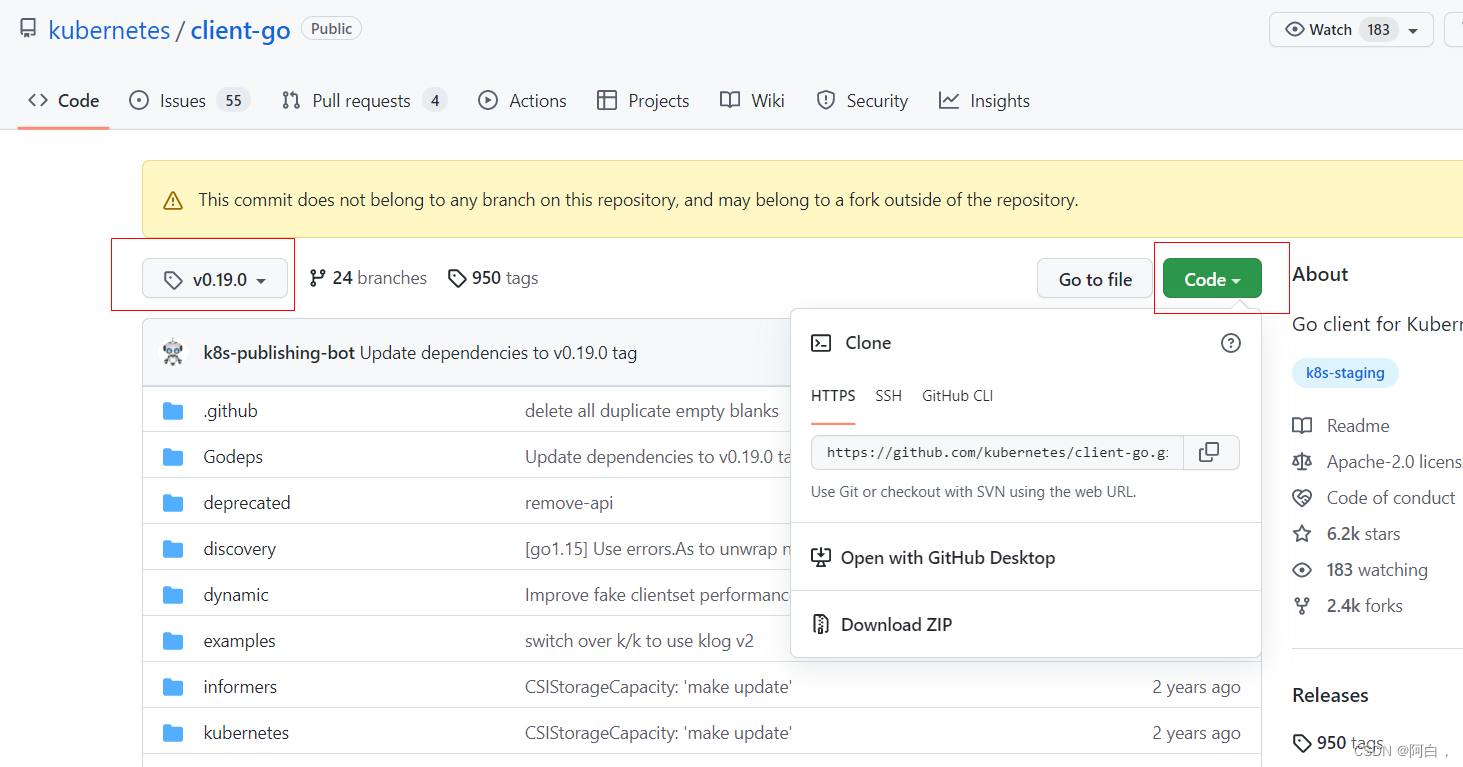

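I picked v0.19.13 here.

client-go v0.x.y tracks Kubernetes 1.x.y, so v0.19.13 lines up with the 1.19 cluster used below; neighbouring versions are generally compatible as well.

(The examples folder is worth reading: the sample code makes the API usage clear quickly and is a good way to learn client-go.)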

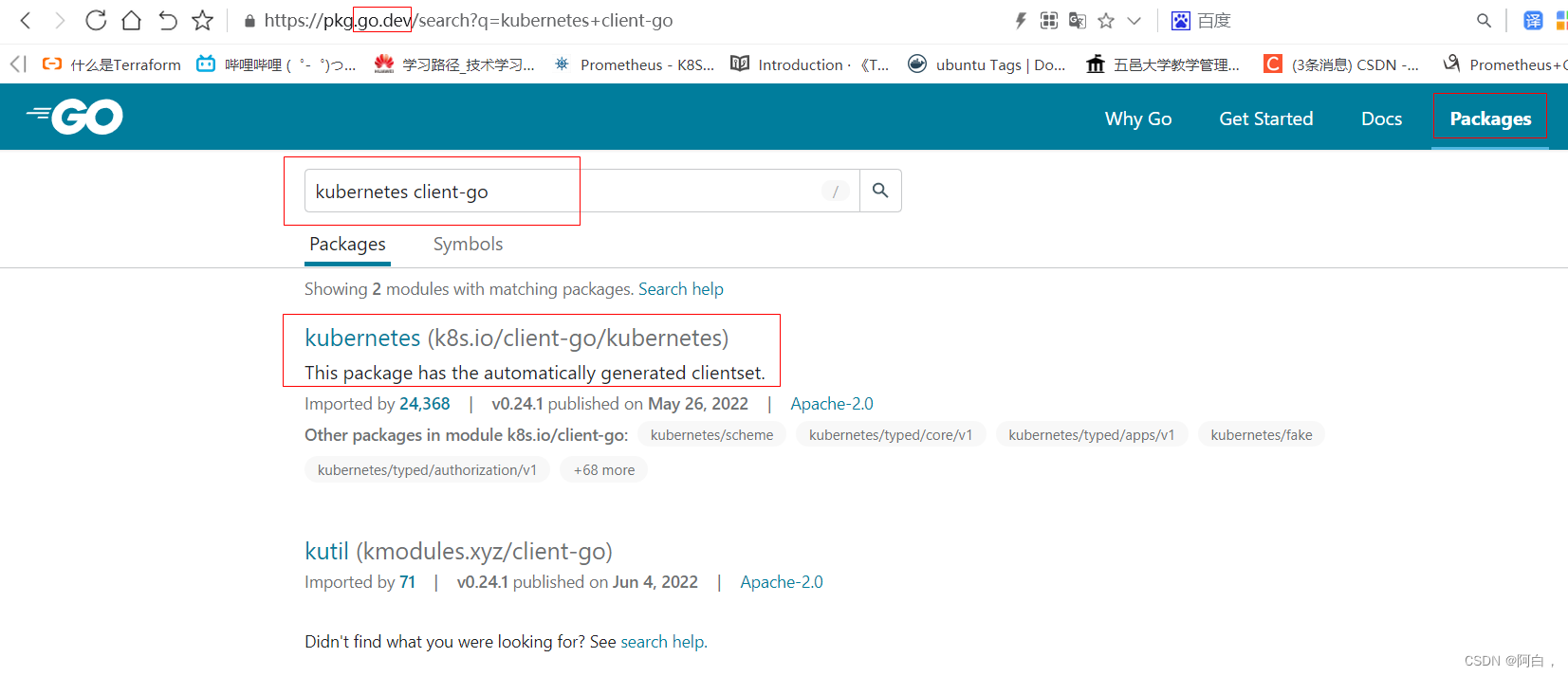

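Importing the client packages

The import block lists the client-go packages that are needed.

Pin the version explicitly instead of letting the tooling pick one.

How you fetch the package depends on your Go version. Since Go 1.17, using go get to build and install executables is deprecated, so I switched to go install:

You can also fetch the whole of client-go by using k8s.io/client-go@<version> instead (with the version chosen above).

go install k8s.io/client-go/<package>@<version>

Note that go install with a version suffix only accepts main packages. For a library such as client-go, running go get k8s.io/client-go@<version> inside a module is the dependable route.

If the download fails, you can try resolving the domain named in the error message yourself, but setting a proxy is the recommended fix:

go env -w GOPROXY=https://goproxy.cn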

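Notes on go mod and packages

(There are many ways to make a package importable: as long as it can be downloaded, compiled, and placed where the toolchain looks for it, it can be imported.)

(go get has not gone away. Use go install or go get as you prefer; newer Go versions just expect go mod init and go mod tidy to have been run in the project directory first. After that go get works fine, with the version written after an @.)

(One detail about go install: its target must be a package directory, that is, a directory that directly contains .go files. The same holds when installing third-party packages.)

Method 3

Simply git clone the repository.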

Getting kubectl-like output: choosing which resources to fetch

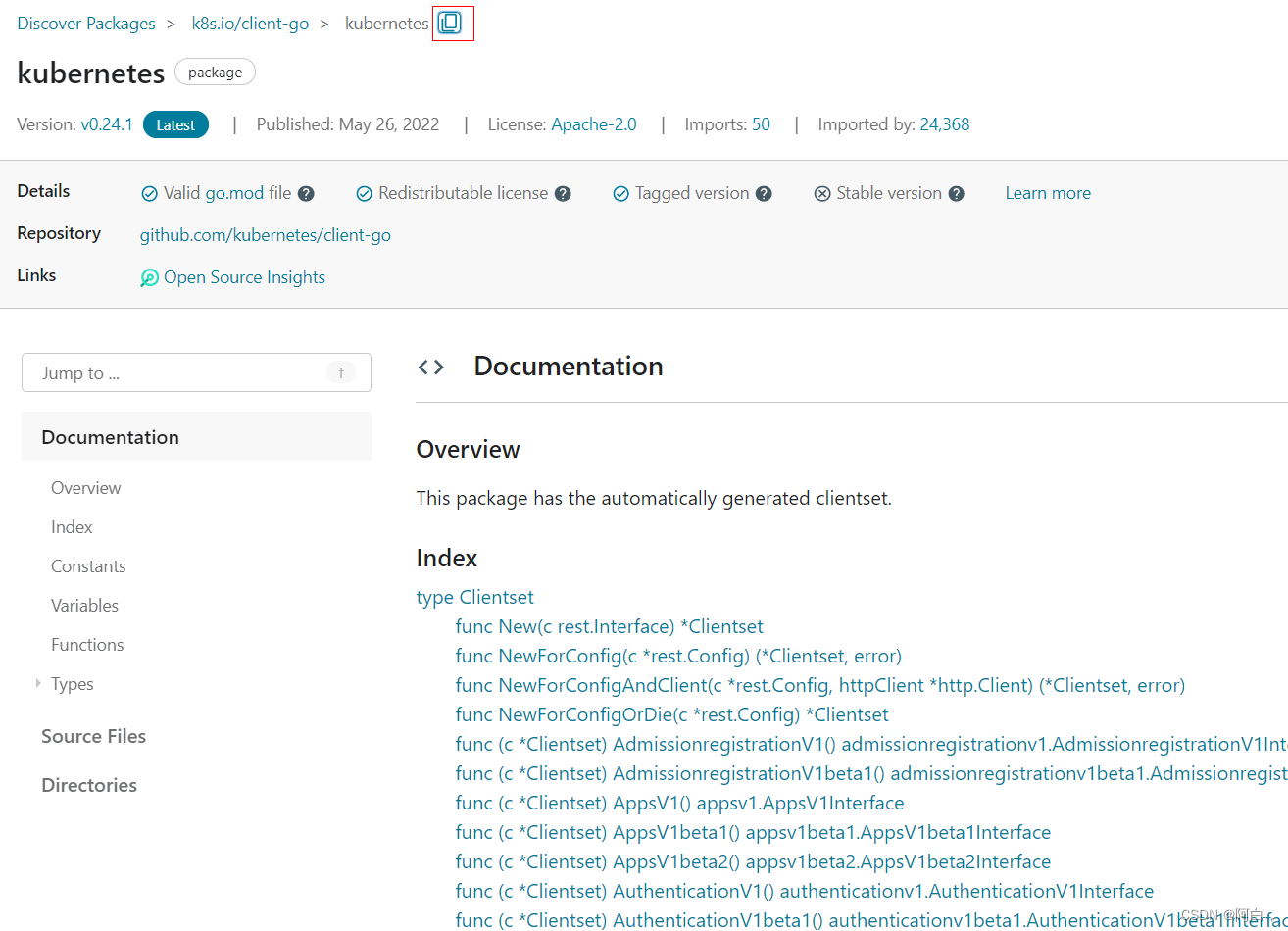

(The packages used are mainly kubernetes and clientcmd from client-go.)

Here is the complete source first:

package main

import (
    "context"
    "fmt"
    "log"

    metaV1 "k8s.io/apimachinery/pkg/apis/meta/v1" // aliased import
    "k8s.io/client-go/kubernetes"
    "k8s.io/client-go/tools/clientcmd" // with the Method 1 GOPATH layout this was "client-go/tools/clientcmd"
)

func main() {
    // Talking to the cluster through the API requires a kubeconfig file for authentication.
    // The file sits under the cg project directory, next to main.go.
    configPath := "etc/kube.conf"

    // BuildConfigFromFlags loads the kubeconfig and returns a Config object holding the
    // API server address and the credentials (certificates, token, or username/password).
    // The first argument is the master URL; "" means "use the server from the kubeconfig".
    config, err := clientcmd.BuildConfigFromFlags("", configPath)
    if err != nil {
        log.Fatal(err) // prints the error, then exits with status 1
    }

    // NewForConfig initialises every client inside the clientset from the config.
    // The clientset is a collection of typed clients, one per API group and version.
    clientset, err := kubernetes.NewForConfig(config)
    if err != nil {
        log.Fatal(err)
    }

    nodeList, err := clientset.CoreV1().Nodes().List(context.TODO(), metaV1.ListOptions{})
    if err != nil {
        log.Fatal(err)
    }
    fmt.Println("node:")
    for _, node := range nodeList.Items {
        fmt.Printf("%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\n",
            node.Name,
            node.Status.Addresses,
            node.Status.Phase,
            node.Status.NodeInfo.OSImage,
            node.Status.NodeInfo.KubeletVersion,
            node.Status.NodeInfo.OperatingSystem,
            node.Status.NodeInfo.Architecture,
            node.CreationTimestamp,
        )
    }

    fmt.Println("namespace")
    namespaceList, err := clientset.CoreV1().Namespaces().List(context.TODO(), metaV1.ListOptions{})
    if err != nil {
        log.Fatal(err)
    }
    for _, namespace := range namespaceList.Items {
        fmt.Println(namespace.Name, namespace.CreationTimestamp, namespace.Status.Phase)
    }

    // An empty namespace lists services across all namespaces.
    serviceList, err := clientset.CoreV1().Services("").List(context.TODO(), metaV1.ListOptions{})
    if err != nil {
        log.Fatal(err) // ignoring this error would cause a nil dereference below
    }
    fmt.Println("services")
    for _, service := range serviceList.Items {
        fmt.Println(service.Name, service.Spec.Type, service.CreationTimestamp, service.Spec.Ports, service.Spec.ClusterIP)
    }

    // Deployments live in the apps group, so they are reached through AppsV1().
    deploymentList, err := clientset.AppsV1().Deployments("default").List(context.TODO(), metaV1.ListOptions{})
    if err != nil {
        log.Fatal(err)
    }
    fmt.Println("deployment")
    for _, deployment := range deploymentList.Items {
        fmt.Println(deployment.Name, deployment.Namespace, deployment.CreationTimestamp, deployment.Labels, deployment.Spec.Selector.MatchLabels, deployment.Status.Replicas, deployment.Status.AvailableReplicas)
    }
}

Create etc/kube.conf inside the current project directory and copy the contents of this node's ~/.kube/config file into it.

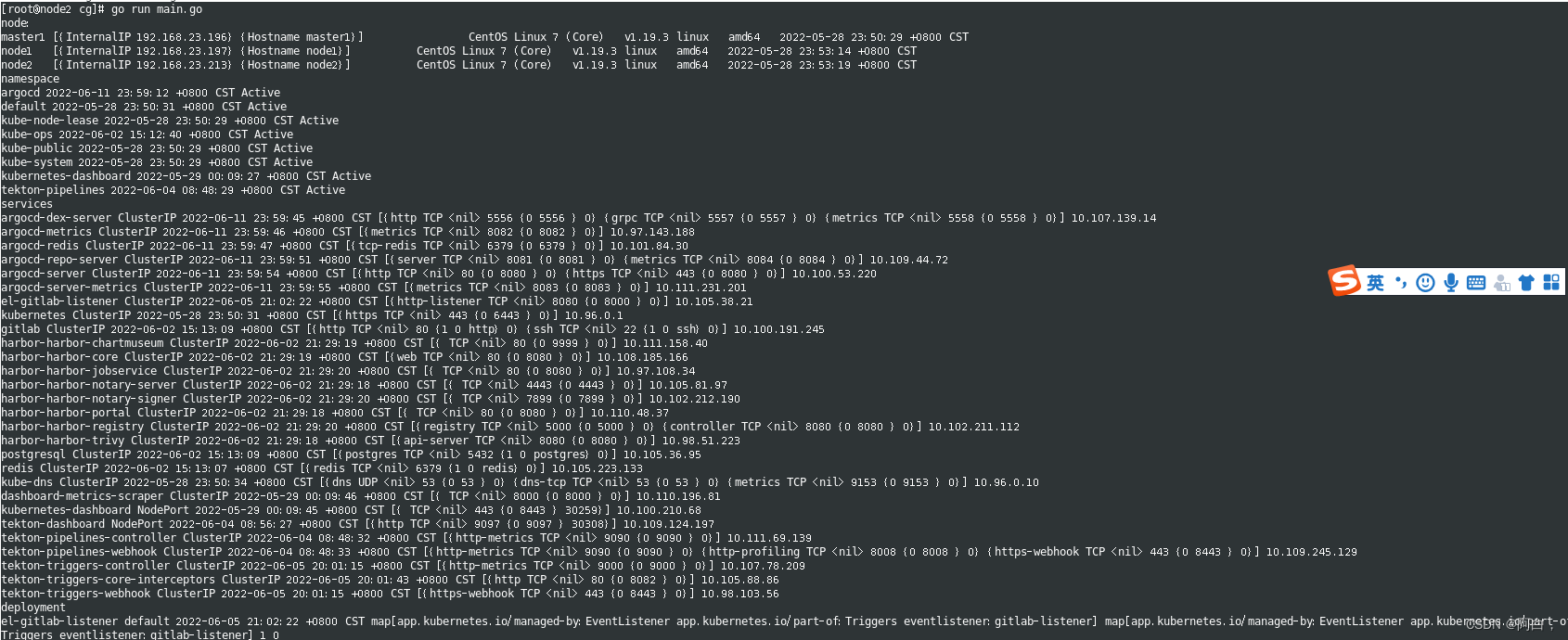

Output:

[root@node2 cg]# go run main.go

node:

master1 [{InternalIP 192.168.23.196} {Hostname master1}] CentOS Linux 7 (Core) v1.19.3 linux amd64 2022-05-28 23:50:29 +0800 CST

node1 [{InternalIP 192.168.23.197} {Hostname node1}] CentOS Linux 7 (Core) v1.19.3 linux amd64 2022-05-28 23:53:14 +0800 CST

node2 [{InternalIP 192.168.23.213} {Hostname node2}] CentOS Linux 7 (Core) v1.19.3 linux amd64 2022-05-28 23:53:19 +0800 CST

namespace

argocd 2022-06-11 23:59:12 +0800 CST Active

default 2022-05-28 23:50:31 +0800 CST Active

kube-node-lease 2022-05-28 23:50:29 +0800 CST Active

kube-ops 2022-06-02 15:12:40 +0800 CST Active

kube-public 2022-05-28 23:50:29 +0800 CST Active

kube-system 2022-05-28 23:50:29 +0800 CST Active

kubernetes-dashboard 2022-05-29 00:09:27 +0800 CST Active

tekton-pipelines 2022-06-04 08:48:29 +0800 CST Active

services

argocd-dex-server ClusterIP 2022-06-11 23:59:45 +0800 CST [{http TCP <nil> 5556 {0 5556 } 0} {grpc TCP <nil> 5557 {0 5557 } 0} {metrics TCP <nil> 5558 {0 5558 } 0}] 10.107.139.14

argocd-metrics ClusterIP 2022-06-11 23:59:46 +0800 CST [{metrics TCP <nil> 8082 {0 8082 } 0}] 10.97.143.188

argocd-redis ClusterIP 2022-06-11 23:59:47 +0800 CST [{tcp-redis TCP <nil> 6379 {0 6379 } 0}] 10.101.84.30

argocd-repo-server ClusterIP 2022-06-11 23:59:51 +0800 CST [{server TCP <nil> 8081 {0 8081 } 0} {metrics TCP <nil> 8084 {0 8084 } 0}] 10.109.44.72

argocd-server ClusterIP 2022-06-11 23:59:54 +0800 CST [{http TCP <nil> 80 {0 8080 } 0} {https TCP <nil> 443 {0 8080 } 0}] 10.100.53.220

argocd-server-metrics ClusterIP 2022-06-11 23:59:55 +0800 CST [{metrics TCP <nil> 8083 {0 8083 } 0}] 10.111.231.201

el-gitlab-listener ClusterIP 2022-06-05 21:02:22 +0800 CST [{http-listener TCP <nil> 8080 {0 8000 } 0}] 10.105.38.21

kubernetes ClusterIP 2022-05-28 23:50:31 +0800 CST [{https TCP <nil> 443 {0 6443 } 0}] 10.96.0.1

gitlab ClusterIP 2022-06-02 15:13:09 +0800 CST [{http TCP <nil> 80 {1 0 http} 0} {ssh TCP <nil> 22 {1 0 ssh} 0}] 10.100.191.245

harbor-harbor-chartmuseum ClusterIP 2022-06-02 21:29:19 +0800 CST [{ TCP <nil> 80 {0 9999 } 0}] 10.111.158.40

harbor-harbor-core ClusterIP 2022-06-02 21:29:19 +0800 CST [{web TCP <nil> 80 {0 8080 } 0}] 10.108.185.166

harbor-harbor-jobservice ClusterIP 2022-06-02 21:29:20 +0800 CST [{ TCP <nil> 80 {0 8080 } 0}] 10.97.108.34

harbor-harbor-notary-server ClusterIP 2022-06-02 21:29:18 +0800 CST [{ TCP <nil> 4443 {0 4443 } 0}] 10.105.81.97

harbor-harbor-notary-signer ClusterIP 2022-06-02 21:29:20 +0800 CST [{ TCP <nil> 7899 {0 7899 } 0}] 10.102.212.190

harbor-harbor-portal ClusterIP 2022-06-02 21:29:18 +0800 CST [{ TCP <nil> 80 {0 8080 } 0}] 10.110.48.37

harbor-harbor-registry ClusterIP 2022-06-02 21:29:20 +0800 CST [{registry TCP <nil> 5000 {0 5000 } 0} {controller TCP <nil> 8080 {0 8080 } 0}] 10.102.211.112

harbor-harbor-trivy ClusterIP 2022-06-02 21:29:18 +0800 CST [{api-server TCP <nil> 8080 {0 8080 } 0}] 10.98.51.223

postgresql ClusterIP 2022-06-02 15:13:09 +0800 CST [{postgres TCP <nil> 5432 {1 0 postgres} 0}] 10.105.36.95

redis ClusterIP 2022-06-02 15:13:07 +0800 CST [{redis TCP <nil> 6379 {1 0 redis} 0}] 10.105.223.133

kube-dns ClusterIP 2022-05-28 23:50:34 +0800 CST [{dns UDP <nil> 53 {0 53 } 0} {dns-tcp TCP <nil> 53 {0 53 } 0} {metrics TCP <nil> 9153 {0 9153 } 0}] 10.96.0.10

dashboard-metrics-scraper ClusterIP 2022-05-29 00:09:46 +0800 CST [{ TCP <nil> 8000 {0 8000 } 0}] 10.110.196.81

kubernetes-dashboard NodePort 2022-05-29 00:09:45 +0800 CST [{ TCP <nil> 443 {0 8443 } 30259}] 10.100.210.68

tekton-dashboard NodePort 2022-06-04 08:56:27 +0800 CST [{http TCP <nil> 9097 {0 9097 } 30308}] 10.109.124.197

tekton-pipelines-controller ClusterIP 2022-06-04 08:48:32 +0800 CST [{http-metrics TCP <nil> 9090 {0 9090 } 0}] 10.111.69.139

tekton-pipelines-webhook ClusterIP 2022-06-04 08:48:33 +0800 CST [{http-metrics TCP <nil> 9090 {0 9090 } 0} {http-profiling TCP <nil> 8008 {0 8008 } 0} {https-webhook TCP <nil> 443 {0 8443 } 0}] 10.109.245.129

tekton-triggers-controller ClusterIP 2022-06-05 20:01:15 +0800 CST [{http-metrics TCP <nil> 9000 {0 9000 } 0}] 10.107.78.209

tekton-triggers-core-interceptors ClusterIP 2022-06-05 20:01:43 +0800 CST [{http TCP <nil> 80 {0 8082 } 0}] 10.105.88.86

tekton-triggers-webhook ClusterIP 2022-06-05 20:01:15 +0800 CST [{https-webhook TCP <nil> 443 {0 8443 } 0}] 10.98.103.56

deployment

el-gitlab-listener default 2022-06-05 21:02:22 +0800 CST map[app.kubernetes.io/managed-by:EventListener app.kubernetes.io/part-of:Triggers eventlistener:gitlab-listener] map[app.kubernetes.io/managed-by:EventListener app.kubernetes.io/part-of:Triggers eventlistener:gitlab-listener] 1 0

node

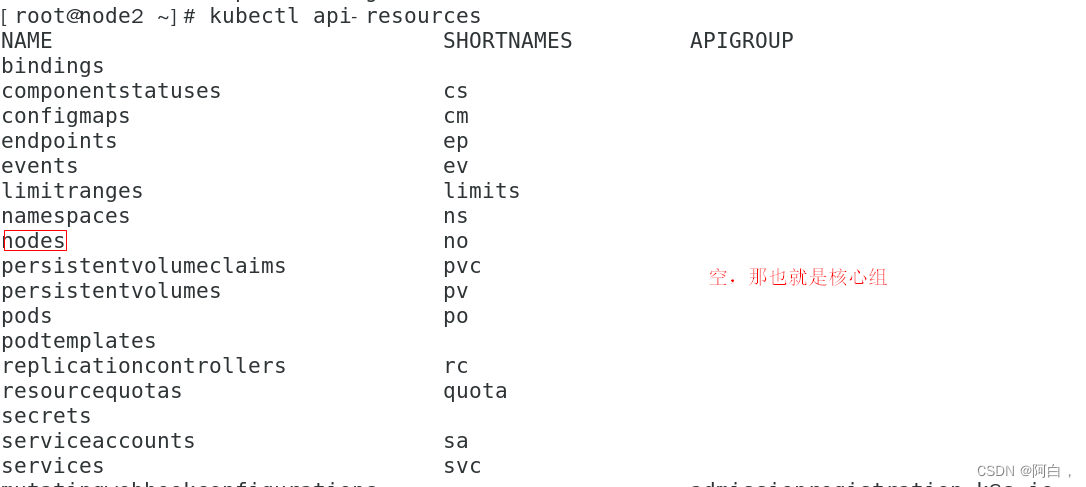

List the API group of every Kubernetes API resource:

[root@node2 cg]# kubectl api-resources

| NAME | SHORTNAMES | APIGROUP | NAMESPACED | KIND |
| --- | --- | --- | --- | --- |
| bindings | | | true | Binding |
| componentstatuses | cs | | false | ComponentStatus |
| configmaps | cm | | true | ConfigMap |
| endpoints | ep | | true | Endpoints |
| events | ev | | true | Event |
| limitranges | limits | | true | LimitRange |
| namespaces | ns | | false | Namespace |
| nodes | no | | false | Node |
| persistentvolumeclaims | pvc | | true | PersistentVolumeClaim |
| persistentvolumes | pv | | false | PersistentVolume |
| pods | po | | true | Pod |
| podtemplates | | | true | PodTemplate |
| replicationcontrollers | rc | | true | ReplicationController |
| resourcequotas | quota | | true | ResourceQuota |
| secrets | | | true | Secret |
| serviceaccounts | sa | | true | ServiceAccount |
| services | svc | | true | Service |
| mutatingwebhookconfigurations | | admissionregistration.k8s.io | false | MutatingWebhookConfiguration |
| validatingwebhookconfigurations | | admissionregistration.k8s.io | false | ValidatingWebhookConfiguration |
| customresourcedefinitions | crd,crds | apiextensions.k8s.io | false | CustomResourceDefinition |
| apiservices | | apiregistration.k8s.io | false | APIService |
| controllerrevisions | | apps | true | ControllerRevision |
| daemonsets | ds | apps | true | DaemonSet |
| deployments | deploy | apps | true | Deployment |
| replicasets | rs | apps | true | ReplicaSet |
| statefulsets | sts | apps | true | StatefulSet |
| applications | app,apps | argoproj.io | true | Application |
| appprojects | appproj,appprojs | argoproj.io | true | AppProject |
| tokenreviews | | authentication.k8s.io | false | TokenReview |
| localsubjectaccessreviews | | authorization.k8s.io | true | LocalSubjectAccessReview |
| selfsubjectaccessreviews | | authorization.k8s.io | false | SelfSubjectAccessReview |
| selfsubjectrulesreviews | | authorization.k8s.io | false | SelfSubjectRulesReview |
| subjectaccessreviews | | authorization.k8s.io | false | SubjectAccessReview |
| horizontalpodautoscalers | hpa | autoscaling | true | HorizontalPodAutoscaler |
| cronjobs | cj | batch | true | CronJob |
| jobs | | batch | true | Job |
| images | img | caching.internal.knative.dev | true | Image |
| certificatesigningrequests | csr | certificates.k8s.io | false | CertificateSigningRequest |
| leases | | coordination.k8s.io | true | Lease |
| extensions | | dashboard.tekton.dev | true | Extension |
| endpointslices | | discovery.k8s.io | true | EndpointSlice |
| events | ev | events.k8s.io | true | Event |
| ingresses | ing | extensions | true | Ingress |
| ingressclasses | | networking.k8s.io | false | IngressClass |
| ingresses | ing | networking.k8s.io | true | Ingress |
| networkpolicies | netpol | networking.k8s.io | true | NetworkPolicy |
| runtimeclasses | | node.k8s.io | false | RuntimeClass |
| poddisruptionbudgets | pdb | policy | true | PodDisruptionBudget |
| podsecuritypolicies | psp | policy | false | PodSecurityPolicy |
| clusterrolebindings | | rbac.authorization.k8s.io | false | ClusterRoleBinding |
| clusterroles | | rbac.authorization.k8s.io | false | ClusterRole |
| rolebindings | | rbac.authorization.k8s.io | true | RoleBinding |
| roles | | rbac.authorization.k8s.io | true | Role |
| priorityclasses | pc | scheduling.k8s.io | false | PriorityClass |
| csidrivers | | storage.k8s.io | false | CSIDriver |
| csinodes | | storage.k8s.io | false | CSINode |
| storageclasses | sc | storage.k8s.io | false | StorageClass |
| volumeattachments | | storage.k8s.io | false | VolumeAttachment |
| clustertasks | | tekton.dev | false | ClusterTask |
| conditions | | tekton.dev | true | Condition |
| pipelineresources | | tekton.dev | true | PipelineResource |
| pipelineruns | pr,prs | tekton.dev | true | PipelineRun |
| pipelines | | tekton.dev | true | Pipeline |
| taskruns | tr,trs | tekton.dev | true | TaskRun |
| tasks | | tekton.dev | true | Task |
| ingressroutes | | traefik.containo.us | true | IngressRoute |
| ingressroutetcps | | traefik.containo.us | true | IngressRouteTCP |
| ingressrouteudps | | traefik.containo.us | true | IngressRouteUDP |
| middlewares | | traefik.containo.us | true | Middleware |
| middlewaretcps | | traefik.containo.us | true | MiddlewareTCP |
| serverstransports | | traefik.containo.us | true | ServersTransport |
| tlsoptions | | traefik.containo.us | true | TLSOption |
| tlsstores | | traefik.containo.us | true | TLSStore |
| traefikservices | | traefik.containo.us | true | TraefikService |
| clusterinterceptors | ci | triggers.tekton.dev | false | ClusterInterceptor |
| clustertriggerbindings | ctb | triggers.tekton.dev | false | ClusterTriggerBinding |
| eventlisteners | el | triggers.tekton.dev | true | EventListener |
| triggerbindings | tb | triggers.tekton.dev | true | TriggerBinding |
| triggers | tri | triggers.tekton.dev | true | Trigger |
| triggertemplates | tt | triggers.tekton.dev | true | TriggerTemplate |

An empty APIGROUP means the resource belongs to the core group, which the clientset exposes through CoreV1().
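The group also decides which REST path the API server serves a resource on: the core group lives under /api/<version>, every named group under /apis/<group>/<version>. Here is a minimal sketch of that rule using only the standard library; listPath is a hypothetical helper written for illustration, not a client-go function.

```go
package main

import "fmt"

// listPath returns the REST path used to list a resource (hypothetical helper).
// An empty group means the core group, served under /api; named groups are
// served under /apis/<group>. An empty namespace means cluster-wide.
func listPath(group, version, namespace, resource string) string {
	prefix := "/apis/" + group + "/" + version
	if group == "" {
		prefix = "/api/" + version
	}
	if namespace != "" {
		prefix += "/namespaces/" + namespace
	}
	return prefix + "/" + resource
}

func main() {
	fmt.Println(listPath("", "v1", "", "nodes"))                  // /api/v1/nodes
	fmt.Println(listPath("apps", "v1", "default", "deployments")) // /apis/apps/v1/namespaces/default/deployments
}
```

These two paths correspond to the CoreV1().Nodes() and AppsV1().Deployments("default") calls used in this article.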

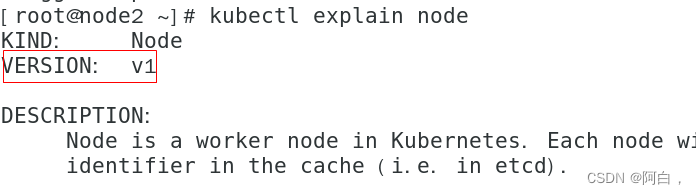

To check the version of a resource, pod for example:

kubectl explain pod

Many of the fields under status and spec are commonly used.

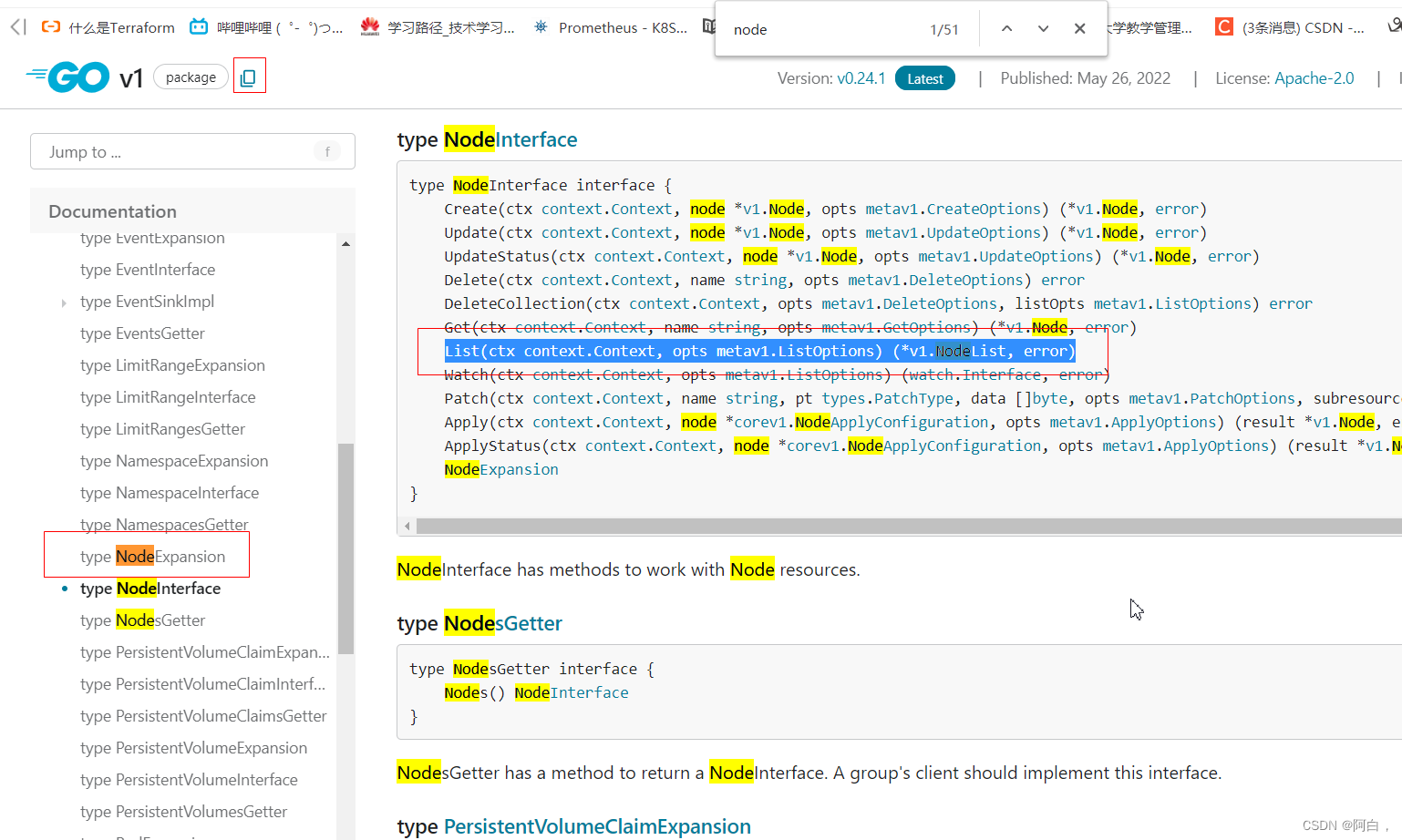

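nodeList, err := clientset.CoreV1().Nodes().List(context.TODO(), metaV1.ListOptions{})

// The call chain on clientset selects, in order, the API group and version (CoreV1), the resource (Nodes), and the operation (List, which fetches every node). ListOptions carries the query options of a standard REST list call. The call returns a NodeList.

// context.TODO() returns an empty, non-nil context; it is only a placeholder here and can be ignored for now.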

if err != nil {

log.Fatal(err)

}

fmt.Println("node:")

for _, node := range nodeList.Items {

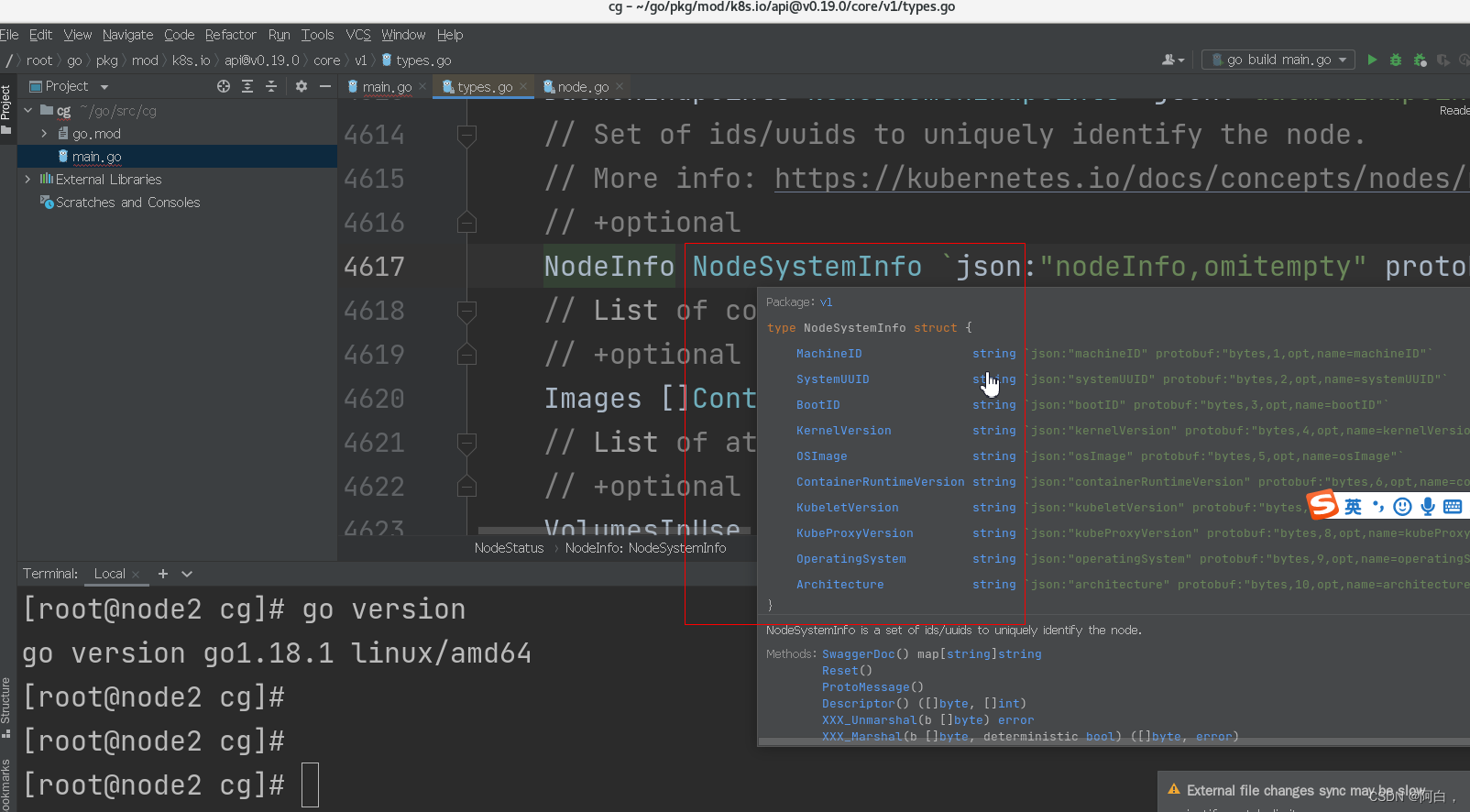

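// NodeList is a struct whose Items field is a slice of Node. range walks through sequences such as arrays, slices, and strings one element at a time, so each iteration yields one Node. A Node is itself a struct whose fields hold values of various types, each with its own methods.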

fmt.Printf("%s\t%s\t%s\t%s\t%s\t%s\t%s\t%s\n",

node.Name,

node.Status.Addresses,

node.Status.Phase,

node.Status.NodeInfo.OSImage,

node.Status.NodeInfo.KubeletVersion,

node.Status.NodeInfo.OperatingSystem,

node.Status.NodeInfo.Architecture,

node.CreationTimestamp,

)

}

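Besides jumping to the source with Ctrl+B, you can also read the package documentation.

Copy the package path k8s.io/apimachinery/pkg/apis/meta/v1 (the package that defines ListOptions).

Ctrl+B followed by a Ctrl+F search gets you to the relevant source quickly; everything in the package is shown there.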

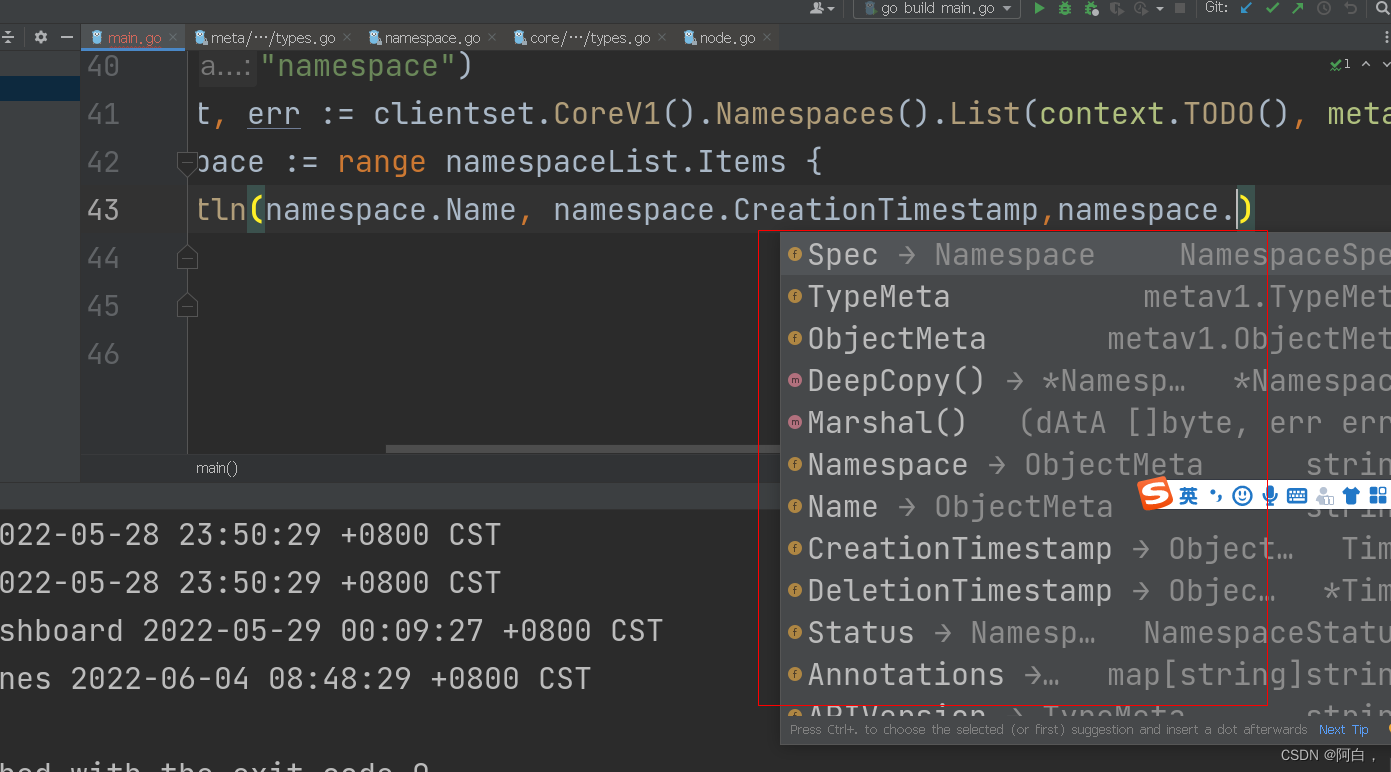

namespace

fmt.Println("namespace")

namespaceList, err := clientset.CoreV1().Namespaces().List(context.TODO(), metaV1.ListOptions{})

if err != nil {

log.Fatal(err)

}

for _, namespace := range namespaceList.Items {

fmt.Println(namespace.Name, namespace.CreationTimestamp, namespace.Status.Phase)

}

Many more fields can be printed in the same way.

service

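serviceList, err := clientset.CoreV1().Services("").List(context.TODO(), metaV1.ListOptions{}) // "" lists services in every namespace

if err != nil {

log.Fatal(err) // ignoring the error would cause a nil dereference in the loop below

}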

fmt.Println("services")

for _, service := range serviceList.Items {

fmt.Println(service.Name, service.Spec.Type, service.CreationTimestamp, service.Spec.Ports, service.Spec.ClusterIP)

}

deployment

deploymentList, err := clientset.AppsV1().Deployments("default").List(context.TODO(), metaV1.ListOptions{}) // deployments belong to the apps group, hence AppsV1()

if err != nil {

log.Fatal(err)

}

fmt.Println("deployment")

for _, deployment := range deploymentList.Items {

fmt.Println(deployment.Name, deployment.Namespace, deployment.CreationTimestamp, deployment.Labels, deployment.Spec.Selector.MatchLabels, deployment.Status.Replicas, deployment.Status.AvailableReplicas)

}
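The MatchLabels map printed for each deployment can be reused to find the pods it manages: the LabelSelector field of ListOptions accepts a string of the form k1=v1,k2=v2. Here is a minimal sketch of building that string with only the standard library; selectorString is a hypothetical helper, and the labels are the ones from the el-gitlab-listener deployment in the output above.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// selectorString turns a MatchLabels-style map into "k1=v1,k2=v2"
// (hypothetical helper). Keys are sorted so the result is deterministic,
// since Go map iteration order is random.
func selectorString(labels map[string]string) string {
	keys := make([]string, 0, len(labels))
	for k := range labels {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	pairs := make([]string, 0, len(keys))
	for _, k := range keys {
		pairs = append(pairs, k+"="+labels[k])
	}
	return strings.Join(pairs, ",")
}

func main() {
	matchLabels := map[string]string{
		"eventlistener":                "gitlab-listener",
		"app.kubernetes.io/part-of":    "Triggers",
		"app.kubernetes.io/managed-by": "EventListener",
	}
	fmt.Println(selectorString(matchLabels))
	// app.kubernetes.io/managed-by=EventListener,app.kubernetes.io/part-of=Triggers,eventlistener=gitlab-listener
}
```

The result could then be passed as metaV1.ListOptions{LabelSelector: ...} to clientset.CoreV1().Pods("default").List to list only the pods that belong to that deployment.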