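After a long break, I've decided to keep this blog going. I've already covered unsupervised learning and classification networks, so what's left is detection-type work. Honestly, I doubt I'll get through all 50 posts, so consider this fair warning. As I see it, these tasks fall into two families. The first is detectors like YOLO and SSD, which regress bounding-box coordinates to localize objects. The second is segmentation networks like FCN and DeepLab, which label every pixel. Personally, I find YOLO more complicated and fiddly to understand, so I'll start with segmentation and use DeepLab V3+ as the model for experiments and testing.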

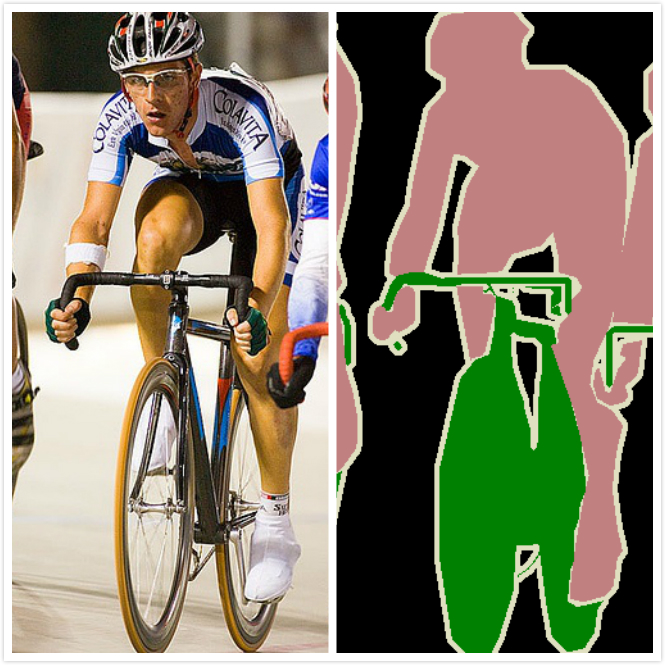

1. What segmentation does

First, let's look at what segmentation actually does. See the figure:

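It turns the image on the left into the one on the right: every pixel is assigned a class label, so the output is a mask rather than a set of boxes. How does it do that? Let's get into it.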

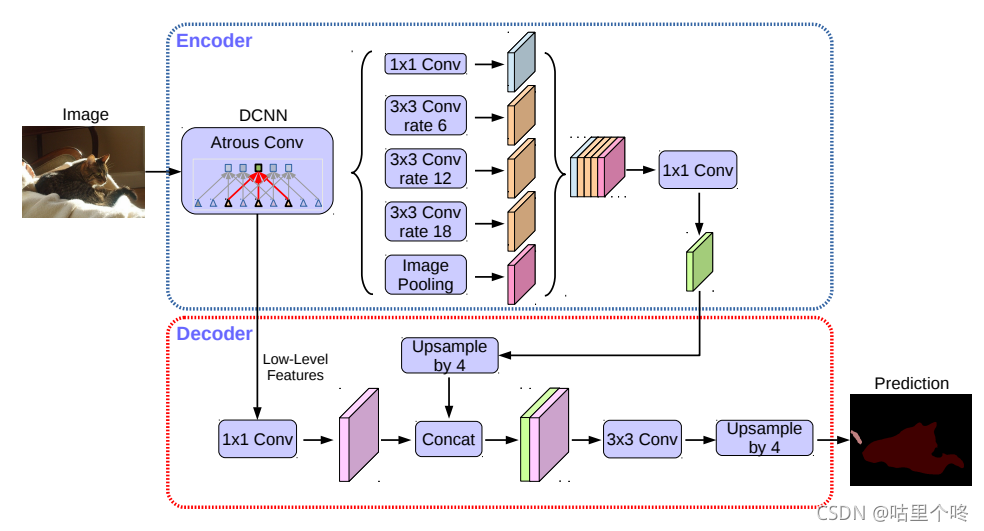

2. DeepLab v3+

Let's start with the DeepLab v3+ architecture diagram, then walk through the techniques it uses one at a time.

As you can see, the network splits into two parts: an Encoder and a Decoder.

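The Encoder uses Atrous Conv, i.e., dilated convolution. If you're not sure what dilated convolution is, look it up; in my view its job is to enlarge the receptive field without further downsampling the feature map. If you don't know what a receptive field is, same advice: look it up. It's a key concept for segmentation. After the atrous convolutions comes ASPP (Atrous Spatial Pyramid Pooling), which sounds very impressive. It runs several atrous convolutions with different rates, plus a global image-pooling branch, in parallel on the same feature map. I think its purpose is to make the model better at handling objects of different scales.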
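To make the receptive-field point concrete, here is a small pure-Python sketch (no PyTorch, so every number can be checked by hand). The helper names are hypothetical, not from any library. It uses the same effective-kernel-size formula as `fixed_padding` in the code below, computes the span of the 3×3 ASPP branches at the rates the code uses for output stride 16, and shows that a dilated 1D convolution with "same" padding keeps the input length while its taps skip over neighbors. The stacked receptive-field helper assumes every layer has stride 1.

```python
# Sketch: how dilation enlarges the receptive field without downsampling.
# Pure Python; helper names are illustrative only.


def effective_kernel_size(k: int, dilation: int) -> int:
    """Span covered by a k-tap kernel with the given dilation: k + (k - 1)(d - 1)."""
    return k + (k - 1) * (dilation - 1)


def stacked_receptive_field(layers):
    """Receptive field of stacked convs given (kernel, dilation) pairs.
    Assumes stride 1 everywhere: each layer adds (effective span - 1) pixels."""
    rf = 1
    for k, d in layers:
        rf += effective_kernel_size(k, d) - 1
    return rf


def dilated_conv1d(signal, kernel, dilation):
    """'Same'-padded 1D dilated convolution (cross-correlation, as in CNNs).
    Kernel taps are `dilation` samples apart, leaving holes in between."""
    k = len(kernel)
    pad = (effective_kernel_size(k, dilation) - 1) // 2  # odd k -> symmetric pad
    padded = [0] * pad + list(signal) + [0] * pad
    return [
        sum(kernel[t] * padded[i + t * dilation] for t in range(k))
        for i in range(len(signal))
    ]


if __name__ == "__main__":
    # Spans of a 3x3 kernel at the ASPP rates used for output stride 16
    print({d: effective_kernel_size(3, d) for d in (1, 6, 12, 18)})
    # -> {1: 3, 6: 13, 12: 25, 18: 37}

    # Three 3x3 convs, same parameter count: plain vs. dilation 1, 2, 4
    print(stacked_receptive_field([(3, 1)] * 3),
          stacked_receptive_field([(3, 1), (3, 2), (3, 4)]))
    # -> 7 15

    # An impulse spreads to positions 2 apart: the "holes" in atrous conv
    print(dilated_conv1d([0, 0, 0, 0, 1, 0, 0, 0, 0], [1, 1, 1], dilation=2))
    # -> [0, 0, 1, 0, 1, 0, 1, 0, 0]
```

Doubling the dilation per layer grows the receptive field exponentially with the same number of weights. ASPP takes the other route: it runs several rates side by side instead of stacking them.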

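The diagram also shows that a low-level feature map from the Encoder goes straight to the Decoder. Meanwhile, the ASPP outputs are concatenated (and, in the code below, projected by a 1×1 conv) before they are sent to the Decoder as well. My take is that feeding both into the Decoder fuses shallow and deep features, which gives the segmented objects sharper, smoother boundaries.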
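The fusion is easiest to follow as shape bookkeeping. The sketch below is a hypothetical helper, `fusion_shapes`, that only does arithmetic. The channel counts (2048 from Xception, 5 × 256 from ASPP, 128 → 48 for the low-level branch) are copied from the code below. Spatial sizes use ceiling division because every stride-2 layer in that code maps n pixels to ceil(n / 2).

```python
# Sketch: (channels, height, width) along the encoder-decoder fusion path
# of the posted DeepLab v3+ code. Pure arithmetic, no tensors involved.


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def fusion_shapes(h: int, w: int, os: int = 16):
    """Shapes at each stage for an h x w input and output stride `os`."""
    enc_h, enc_w = ceil_div(h, os), ceil_div(w, os)  # backbone output
    q_h, q_w = ceil_div(h, 4), ceil_div(w, 4)        # block1 (low-level) resolution
    return {
        "backbone": (2048, enc_h, enc_w),
        "aspp_concat": (5 * 256, enc_h, enc_w),  # 4 atrous branches + image pooling
        "aspp_projected": (256, enc_h, enc_w),   # 1x1 conv, 1280 -> 256
        "upsampled": (256, q_h, q_w),            # bilinear, to 1/4 of the input
        "low_level": (48, q_h, q_w),             # 1x1 conv, 128 -> 48
        "concat": (256 + 48, q_h, q_w),          # 304 channels into last_conv
    }


if __name__ == "__main__":
    for name, shape in fusion_shapes(256, 256).items():
        print(f"{name:15s} {shape}")
    print(fusion_shapes(513, 513)["backbone"], fusion_shapes(513, 513)["concat"])
```

Because both paths round up the same way, the upsampled ASPP features and the low-level features have matching sizes even for odd inputs such as 513 × 513, so the `torch.cat` along the channel dimension works.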

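The Decoder upsamples the feature map until its height and width match the input image. In the code below this happens in two steps. First, the encoder output (1/16 of the input size at output stride 16) is bilinearly upsampled 4× to 1/4 resolution, fused with the low-level features, and passed through two 3×3 convs and a 1×1 classifier. Then the result is upsampled 4× again to the full image size.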
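Here is a pure-Python sketch of the decoder's last steps on toy logits. `bilinear_resize` and `logits_to_mask` are hypothetical helpers written for illustration. The first follows the `align_corners=True` sampling rule that the code's `F.interpolate` calls use, so output corners map exactly onto input corners. The second turns per-class logits into a label mask with a per-pixel argmax. With a single output channel (`n_classes=1`, as in the test at the end of the code), you would typically threshold a sigmoid instead of taking an argmax.

```python
# Sketch: upsample per-class logit maps, then take a per-pixel argmax.
# Pure Python on 2D lists; mirrors align_corners=True bilinear sampling.


def bilinear_resize(img, out_h, out_w):
    """Bilinear resize of a 2D list; output corners land on input corners."""
    in_h, in_w = len(img), len(img[0])

    def source_coord(i, out_n, in_n):
        return 0.0 if out_n == 1 else i * (in_n - 1) / (out_n - 1)

    out = []
    for i in range(out_h):
        y = source_coord(i, out_h, in_h)
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = source_coord(j, out_w, in_w)
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            top = img[y0][x0] * (1 - dx) + img[y0][x1] * dx
            bottom = img[y1][x0] * (1 - dx) + img[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


def logits_to_mask(logits):
    """logits: one H x W map per class. Returns an H x W map of class indices."""
    num_classes = len(logits)
    h, w = len(logits[0]), len(logits[0][0])
    return [
        [max(range(num_classes), key=lambda c: logits[c][i][j]) for j in range(w)]
        for i in range(h)
    ]


if __name__ == "__main__":
    background = [[2, 2], [0, 0]]
    foreground = [[0, 0], [3, 3]]
    # Upsample each class map (2x2 -> 3x3), then pick the best class per pixel
    up = [bilinear_resize(m, 3, 3) for m in (background, foreground)]
    print(logits_to_mask(up))  # [[0, 0, 0], [1, 1, 1], [1, 1, 1]]
```

On the real model output, a tensor of shape `[N, n_classes, H, W]`, the same per-pixel argmax is `output.argmax(dim=1)`.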

Below is a PyTorch implementation of the DeepLab V3+ network.

You can use it to get hands-on with the model first. The backbone is Xception.

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F


def fixed_padding(inputs, kernel_size, dilation):
    # Pad so that a (possibly dilated) conv behaves like "same" padding.
    # Effective size of a dilated kernel: k + (k - 1) * (d - 1)
    kernel_size_effective = kernel_size + (kernel_size - 1) * (dilation - 1)
    pad_total = kernel_size_effective - 1
    pad_beg = pad_total // 2
    pad_end = pad_total - pad_beg
    padded_inputs = F.pad(inputs, (pad_beg, pad_end, pad_beg, pad_end))
    return padded_inputs


class SeparableConv2d_same(nn.Module):
    """Depthwise conv followed by a 1x1 pointwise conv, with 'same' padding."""

    def __init__(self, inplanes, planes, kernel_size=3, stride=1, dilation=1, bias=False):
        super(SeparableConv2d_same, self).__init__()
        # Depthwise: one filter per input channel (groups=inplanes)
        self.conv1 = nn.Conv2d(inplanes, inplanes, kernel_size, stride, 0, dilation,
                               groups=inplanes, bias=bias)
        # Pointwise: 1x1 conv that mixes channels
        self.pointwise = nn.Conv2d(inplanes, planes, 1, 1, 0, 1, 1, bias=bias)

    def forward(self, x):
        x = fixed_padding(x, self.conv1.kernel_size[0], dilation=self.conv1.dilation[0])
        x = self.conv1(x)
        x = self.pointwise(x)
        return x


class Block(nn.Module):
    """Xception residual block: `reps` separable convs plus a shortcut."""

    def __init__(self, inplanes, planes, reps, stride=1, dilation=1,
                 start_with_relu=True, grow_first=True, is_last=False):
        super(Block, self).__init__()

        # Project the shortcut when the channel count or resolution changes
        if planes != inplanes or stride != 1:
            self.skip = nn.Conv2d(inplanes, planes, 1, stride=stride, bias=False)
            self.skipbn = nn.BatchNorm2d(planes)
        else:
            self.skip = None

        # Not in-place: self.rep starts with this ReLU, and an in-place version would
        # overwrite `inp`, so the shortcut would receive ReLU(inp) instead of inp.
        self.relu = nn.ReLU(inplace=False)
        rep = []

        filters = inplanes
        if grow_first:
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(inplanes, planes, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(planes))
            filters = planes

        for i in range(reps - 1):
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(filters, filters, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(filters))

        if not grow_first:
            rep.append(self.relu)
            rep.append(SeparableConv2d_same(inplanes, planes, 3, stride=1, dilation=dilation))
            rep.append(nn.BatchNorm2d(planes))

        if not start_with_relu:
            rep = rep[1:]

        if stride != 1:
            rep.append(SeparableConv2d_same(planes, planes, 3, stride=2))

        if stride == 1 and is_last:
            rep.append(SeparableConv2d_same(planes, planes, 3, stride=1))

        self.rep = nn.Sequential(*rep)

    def forward(self, inp):
        x = self.rep(inp)

        if self.skip is not None:
            skip = self.skip(inp)
            skip = self.skipbn(skip)
        else:
            skip = inp

        x += skip
        return x


class Xception(nn.Module):
    """
    Modified Aligned Xception
    """

    def __init__(self, inplanes=3, os=16):
        super(Xception, self).__init__()

        # os = output stride (input size / backbone output size).
        # os=8 keeps more resolution and uses larger dilations instead of striding.
        if os == 16:
            entry_block3_stride = 2
            middle_block_dilation = 1
            exit_block_dilations = (1, 2)
        elif os == 8:
            entry_block3_stride = 1
            middle_block_dilation = 2
            exit_block_dilations = (2, 4)
        else:
            raise NotImplementedError

        # Entry flow
        self.conv1 = nn.Conv2d(inplanes, 32, 3, stride=2, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(32)
        self.relu = nn.ReLU(inplace=True)

        self.conv2 = nn.Conv2d(32, 64, 3, stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(64)

        self.block1 = Block(64, 128, reps=2, stride=2, start_with_relu=False)
        self.block2 = Block(128, 256, reps=2, stride=2, start_with_relu=True, grow_first=True)
        self.block3 = Block(256, 728, reps=2, stride=entry_block3_stride, start_with_relu=True,
                            grow_first=True, is_last=True)

        # Middle flow: 16 identical blocks
        self.block4 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block5 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block6 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block7 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block8 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block9 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block10 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block11 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block12 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block13 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block14 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block15 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block16 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block17 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block18 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)
        self.block19 = Block(728, 728, reps=3, stride=1, dilation=middle_block_dilation, start_with_relu=True, grow_first=True)

        # Exit flow
        self.block20 = Block(728, 1024, reps=2, stride=1, dilation=exit_block_dilations[0],
                             start_with_relu=True, grow_first=False, is_last=True)

        self.conv3 = SeparableConv2d_same(1024, 1536, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn3 = nn.BatchNorm2d(1536)

        self.conv4 = SeparableConv2d_same(1536, 1536, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn4 = nn.BatchNorm2d(1536)

        self.conv5 = SeparableConv2d_same(1536, 2048, 3, stride=1, dilation=exit_block_dilations[1])
        self.bn5 = nn.BatchNorm2d(2048)

        # Init weights
        self._init_weight()

    def forward(self, x):
        # Entry flow
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)

        x = self.conv2(x)
        x = self.bn2(x)
        x = self.relu(x)

        x = self.block1(x)
        low_level_feat = x  # 1/4 of the input size, 128 channels
        x = self.block2(x)
        x = self.block3(x)

        # Middle flow
        x = self.block4(x)
        x = self.block5(x)
        x = self.block6(x)
        x = self.block7(x)
        x = self.block8(x)
        x = self.block9(x)
        x = self.block10(x)
        x = self.block11(x)
        x = self.block12(x)
        x = self.block13(x)
        x = self.block14(x)
        x = self.block15(x)
        x = self.block16(x)
        x = self.block17(x)
        x = self.block18(x)
        x = self.block19(x)

        # Exit flow
        x = self.block20(x)
        x = self.conv3(x)
        x = self.bn3(x)
        x = self.relu(x)

        x = self.conv4(x)
        x = self.bn4(x)
        x = self.relu(x)

        x = self.conv5(x)
        x = self.bn5(x)
        x = self.relu(x)

        return x, low_level_feat

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()


class ASPP_module(nn.Module):
    def __init__(self, inplanes, planes, os):
        super(ASPP_module, self).__init__()

        # ASPP: atrous rates depend on the output stride
        if os == 16:
            dilations = [1, 6, 12, 18]
        elif os == 8:
            dilations = [1, 12, 24, 36]
        else:
            raise NotImplementedError

        self.aspp1 = nn.Sequential(nn.Conv2d(inplanes, planes, kernel_size=1, stride=1,
                                             padding=0, dilation=dilations[0], bias=False),
                                   nn.BatchNorm2d(planes),
                                   nn.ReLU())
        self.aspp2 = nn.Sequential(nn.Conv2d(inplanes, planes, kernel_size=3, stride=1,
                                             padding=dilations[1], dilation=dilations[1], bias=False),
                                   nn.BatchNorm2d(planes),
                                   nn.ReLU())
        self.aspp3 = nn.Sequential(nn.Conv2d(inplanes, planes, kernel_size=3, stride=1,
                                             padding=dilations[2], dilation=dilations[2], bias=False),
                                   nn.BatchNorm2d(planes),
                                   nn.ReLU())
        self.aspp4 = nn.Sequential(nn.Conv2d(inplanes, planes, kernel_size=3, stride=1,
                                             padding=dilations[3], dilation=dilations[3], bias=False),
                                   nn.BatchNorm2d(planes),
                                   nn.ReLU())

        # Image-level features: global average pooling + 1x1 conv
        self.global_avg_pool = nn.Sequential(nn.AdaptiveAvgPool2d((1, 1)),
                                             nn.Conv2d(inplanes, planes, 1, stride=1, bias=False),
                                             nn.BatchNorm2d(planes),
                                             nn.ReLU())

        # The 1x1 projection of the 5 * planes concatenated channels is applied in
        # DeepLabv3_plus (conv1/bn1), so no projection layer is defined here.
        self._init_weight()

    def forward(self, x):
        x1 = self.aspp1(x)
        x2 = self.aspp2(x)
        x3 = self.aspp3(x)
        x4 = self.aspp4(x)
        x5 = self.global_avg_pool(x)
        x5 = F.interpolate(x5, size=x4.size()[2:], mode='bilinear', align_corners=True)

        x = torch.cat((x1, x2, x3, x4, x5), dim=1)
        return x

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()


class DeepLabv3_plus(nn.Module):
    def __init__(self, nInputChannels=3, n_classes=21, os=16, _print=True):
        if _print:
            print("Constructing DeepLabv3+ model...")
            print("Backbone: Xception")
            print("Number of classes: {}".format(n_classes))
            print("Output stride: {}".format(os))
            print("Number of Input Channels: {}".format(nInputChannels))
        super(DeepLabv3_plus, self).__init__()

        # Encoder: Xception backbone with atrous convolutions
        self.xception_features = Xception(nInputChannels, os)

        # ASPP; pass the actual output stride so the atrous rates match the backbone
        self.ASPP = ASPP_module(2048, 256, os)

        # 1x1 projection of the concatenated ASPP branches (5 x 256 = 1280 channels)
        self.conv1 = nn.Conv2d(1280, 256, 1, bias=False)
        self.bn1 = nn.BatchNorm2d(256)
        self.relu = nn.ReLU()

        # adopt [1x1, 48] for channel reduction of the 128-channel low-level features
        self.conv2 = nn.Conv2d(128, 48, 1, bias=False)
        self.bn2 = nn.BatchNorm2d(48)

        # Decoder refinement on 256 + 48 = 304 concatenated channels
        self.last_conv = nn.Sequential(nn.Conv2d(304, 256, kernel_size=3, stride=1, padding=1, bias=False),
                                       nn.BatchNorm2d(256),
                                       nn.ReLU(),
                                       nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1, bias=False),
                                       nn.BatchNorm2d(256),
                                       nn.ReLU(),
                                       nn.Conv2d(256, n_classes, kernel_size=1, stride=1))

        # Same init scheme for every conv/BN layer, including the decoder
        self._init_weight()

    def forward(self, input):
        # Encoder
        x, low_level_features = self.xception_features(input)
        x = self.ASPP(x)
        x = self.conv1(x)
        x = self.bn1(x)
        x = self.relu(x)

        # Decoder: upsample the encoder output to 1/4 of the input size
        x = F.interpolate(x, size=(int(math.ceil(input.size()[-2] / 4)),
                                   int(math.ceil(input.size()[-1] / 4))),
                          mode='bilinear', align_corners=True)

        low_level_features = self.conv2(low_level_features)
        low_level_features = self.bn2(low_level_features)
        low_level_features = self.relu(low_level_features)

        # Fuse deep (ASPP) and shallow (low-level) features
        x = torch.cat((x, low_level_features), dim=1)
        x = self.last_conv(x)

        # Upsample the logits to the full input resolution
        x = F.interpolate(x, size=input.size()[2:], mode='bilinear', align_corners=True)
        return x

    def _init_weight(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.data.normal_(0, math.sqrt(2. / n))
            elif isinstance(m, nn.BatchNorm2d):
                m.weight.data.fill_(1)
                m.bias.data.zero_()


if __name__ == "__main__":
    model = DeepLabv3_plus(nInputChannels=3, n_classes=1, os=16, _print=True)
    # eval() also matters for a batch of 1: in training mode, the BatchNorm in the
    # ASPP image-pooling branch would see one value per channel and raise an error.
    model.eval()
    image = torch.randn(1, 3, 256, 256)
    with torch.no_grad():
        output = model(image)
    print(output.size())  # torch.Size([1, 1, 256, 256])
```

The code is a bit long, so take your time with it. That's it for this first post.

That's all for now. Salute!!!