SLAM Summary (1): Overview and Introduction to SLAM Principles

-

SLAM stands for Simultaneous Localization and Mapping. Localization means estimating the pose of the body in the world coordinate system. For a single sensor, the body generally refers to the camera optical center, the lidar scan center, the IMU center, or the center of the axle between the two encoder wheels. Multi-sensor systems generally use the IMU center to avoid the influence of centrifugal force. The pose consists of a position (translation) with 3 degrees of freedom and an orientation (rotation) with 3 degrees of freedom. The origin and orientation of the world coordinate system can be taken from the first key frame, from an existing global map or landmark points, or from GPS (real-world coordinates are the same everywhere in the world).

Mapping means building a map of the surrounding environment perceived by the robot. The basic geometric element of a map is the point, which has no orientation and only 3 degrees of freedom. A map can be a sparse point map, a dense point map, a grid map, an octree map, a topological map, and so on. The main uses of a map are localization and navigation. Navigation can be divided into planning and control: planning includes global planning and local planning, and once a path is planned, control moves the robot along it. Sparse points are generally used only for localization. Grid maps and octree maps can be used for both localization and navigation. Dense maps can be used for localization and, after processing, can be converted into grid maps or octree maps for navigation.
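To make the 6-degree-of-freedom pose concrete, here is a minimal Python sketch that moves a point from the body frame into the world frame. The roll-pitch-yaw (Z-Y-X) parameterization, the function names `rotation_from_rpy` and `body_to_world`, and the sample numbers are my own assumptions for illustration; real systems usually store rotations as quaternions or rotation matrices.

```python
import math

def rotation_from_rpy(roll, pitch, yaw):
    """Build a 3x3 rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def body_to_world(pose, point_body):
    """pose = (x, y, z, roll, pitch, yaw); returns R * p_body + t."""
    x, y, z, roll, pitch, yaw = pose
    R = rotation_from_rpy(roll, pitch, yaw)
    t = (x, y, z)
    return tuple(
        sum(R[i][j] * point_body[j] for j in range(3)) + t[i]
        for i in range(3)
    )

# Body at (1, 2, 0), rotated 90 degrees about the vertical axis.
pose = (1.0, 2.0, 0.0, 0.0, 0.0, math.pi / 2)
# A point 1 m in front of the body (3 degrees of freedom, no orientation).
point_world = body_to_world(pose, (1.0, 0.0, 0.0))
print(point_world)
```

The pose carries six numbers while the map point carries only three, which is exactly the 6-versus-3 split between localization and mapping described above.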

In short, a common SLAM problem is to estimate an n-dimensional variable made up of a large number of discrete body poses with 6 degrees of freedom each (localization) and points with 3 degrees of freedom each (the map). As running time and the range of motion grow, n grows, and so do CPU and memory costs. If we relax "simultaneous localization and mapping" to "localization or mapping", the problem can be divided into the following cases:

1) The poses of the body and the points are both completely unknown: this is the SLAM problem.

2) The poses of the body are completely known and the points are completely unknown: this is a pure mapping problem. For example, save the image of each key frame while ORB-SLAM2 runs and perform a global optimization at the end. The poses are then fully known, and the map can be built from the known poses and images.

3) The poses of the body are completely unknown and the points are completely known: this is a pure localization problem.

4) The poses of the body are completely unknown and the points are partially known: the known points can generally serve as landmarks and are assumed to have no accumulated error. They can be used to keep every startup in the same world coordinate system and to reduce accumulated error.

5) In theory there are 3*3=9 cases, since poses and points can each be completely unknown, partially known, or completely known. The other cases are less common, so I won't list them one by one.
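As a rough illustration of how the size n of the estimated variable changes between these cases, the sketch below counts 6 unknowns per unknown pose and 3 per unknown point. The function name `unknown_dimension` and the counts of 100 key frames and 10,000 points are made-up values for illustration only.

```python
def unknown_dimension(num_poses, num_points, poses_known=False, points_known=False):
    """Number of scalar unknowns: 6 per unknown pose, 3 per unknown point."""
    pose_dims = 0 if poses_known else 6 * num_poses
    point_dims = 0 if points_known else 3 * num_points
    return pose_dims + point_dims

# Hypothetical map size: 100 key frames and 10,000 points.
cases = {
    "SLAM": unknown_dimension(100, 10_000),
    "pure mapping": unknown_dimension(100, 10_000, poses_known=True),
    "pure localization": unknown_dimension(100, 10_000, points_known=True),
}
for name, n in cases.items():
    print(f"{name}: n = {n}")
```

With these numbers the points dominate the state, which is one reason pure localization against a known map is so much cheaper than full SLAM.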

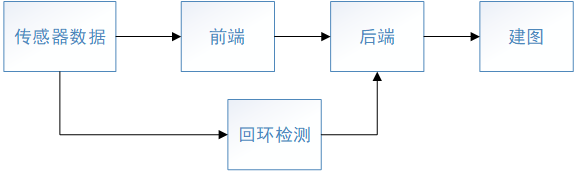

The SLAM framework is shown in the figure. The whole system consists of a front end, a back end, and loop closure detection.

1) Front end: runs at the sensor frame rate. It performs data association (feature point matching, optical flow, etc.) and initialization, and uses geometric methods or small-scale optimization to quickly obtain a reasonably accurate initial value of the body pose for the current frame (tracking). The current frame generally refers only to the previous frame or the previous few frames, so the accumulated error grows as the number of key frames increases.

2) Back end: runs at the key frame rate. Key frames should be as few as possible while still guaranteeing tracking quality. The time interval between key frames depends mainly on the robot's linear and angular velocity (the faster the motion, the more easily tracking is lost), on the distance to the scene in the field of view (the closer the scene, the more easily tracking is lost), and on the feature structure of the environment (tracking is lost more easily where feature points are sparse or at corners).

The back end triangulates new points to obtain reasonably accurate initial positions in the world coordinate system, removes or merges some old points, adds constraints, and optimizes key frame poses and point positions over a larger local window. A global optimization can be run once every long interval.

3) Loop closure detection: the frequency is not fixed and is generally much lower than that of the first two; it depends on how many loops the trajectory contains. An upper limit on the frequency is usually set so that loops are not detected again within a short distance. Previously visited places are found through data association. Once a loop is found, the poses of the key frames associated with the loop frame are first adjusted with a similarity transformation (3D-3D), then all key frames and points in the loop are optimized, and finally a global optimization is performed. Compared with ordinary global optimization, global optimization after loop closure converges more easily.

4) Map building: the steps above generally yield a sparse point map. Maps in other formats can be built as needed.
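The similarity-transformation step in loop closure detection can be illustrated in a simplified 2D setting. The sketch below is a toy under stated assumptions, not the 3D-3D method of any particular system: points are written as complex numbers so that a single complex factor `a` carries both scale and rotation, and `fit_similarity_2d` is a hypothetical helper name. It solves the least-squares problem of mapping the drifted points onto the reference points in closed form.

```python
import cmath
import math

def fit_similarity_2d(src, dst):
    """Least-squares (a, b) such that dst ~= a * src + b.

    Points are complex numbers x + yj; abs(a) is the scale and
    cmath.phase(a) is the rotation angle.
    """
    n = len(src)
    src_mean = sum(src) / n
    dst_mean = sum(dst) / n
    num = sum((p - src_mean).conjugate() * (q - dst_mean) for p, q in zip(src, dst))
    den = sum(abs(p - src_mean) ** 2 for p in src)
    a = num / den
    b = dst_mean - a * src_mean
    return a, b

# Made-up example: the drifted map must be scaled by 0.8, rotated by
# 10 degrees, and shifted to line up with the previously mapped points.
true_a = cmath.rect(0.8, math.radians(10))
true_b = complex(0.5, -0.2)
drifted = [complex(0, 0), complex(1, 0), complex(1, 1), complex(0, 2)]
reference = [true_a * p + true_b for p in drifted]

a, b = fit_similarity_2d(drifted, reference)
scale, angle = abs(a), cmath.phase(a)
print(scale, math.degrees(angle), b)
```

Estimating scale along with rotation and translation matters mostly for monocular systems, where scale itself drifts; with stereo, RGB-D, or lidar data the scale can be fixed to 1 and only a rigid transformation is needed.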

SLAM methods can be classified by approach, as shown in the figure below. My main research is the key-frame-based geometric feature method. I am most interested in the fusion of multiple sensors and multiple geometric features, and in incremental nonlinear optimization. The following content mainly discusses the geometric feature method based on nonlinear optimization.

-

Front end: The most commonly used visual geometric feature is the point, but in recent years there has been more and more research on lines, planes, and objects. Some researchers work on a unified mathematical representation so that these features can be processed in the same way. Some add semantics to objects and give them real-world properties, for example that people often move and must not be collided with. Others estimate the pose and velocity of dynamic objects. These are all common geometric features in daily life, and using them should improve the robustness and accuracy of the algorithm. Giving semantics to objects will also make robots more intelligent.

-

Back end: For nonlinear optimization, the key elements are the objective function (constraints, residual terms), the Jacobian matrix of the objective function with respect to the variables, and the method for solving the increment. The increment is generally solved with the LM (Levenberg-Marquardt) method, which ultimately reduces to solving the linear system Ax=b. Solvers include CSparse, Cholesky, Preconditioned Conjugate Gradient (PCG, an improvement on CG), and QR. Before solving, the coefficient matrix A can be processed with methods such as Schur, plain, and reordering to speed up the computation.
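The loop of residual, Jacobian, and damped linear solve can be seen in a very small example. The sketch below is a toy assumption, not how any of the systems discussed here implement their back end: it estimates a 2D position from range measurements to three known landmarks, builds A = JᵀJ + λI and b = -Jᵀr, and solves the 2x2 system Ax = b with Cramer's rule instead of a sparse solver. The function name, the landmark layout, and the damping schedule are all made up for illustration.

```python
import math

def levenberg_marquardt(landmarks, ranges, x0, iterations=50, lam=1e-3):
    """Estimate a 2D position from ranges to known landmarks with a basic LM loop."""
    x, y = x0

    def residuals(px, py):
        return [math.hypot(px - lx, py - ly) - r
                for (lx, ly), r in zip(landmarks, ranges)]

    def cost(res):
        return sum(e * e for e in res)

    res = residuals(x, y)
    for _ in range(iterations):
        # Accumulate J^T J and -J^T r; each Jacobian row is the unit
        # vector pointing from the landmark to the current estimate.
        a11 = a12 = a22 = b1 = b2 = 0.0
        for (lx, ly), e in zip(landmarks, res):
            d = max(math.hypot(x - lx, y - ly), 1e-12)
            jx, jy = (x - lx) / d, (y - ly) / d
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            b1 -= jx * e
            b2 -= jy * e
        # Damped normal equations (J^T J + lam * I) dx = -J^T r.
        m11, m22 = a11 + lam, a22 + lam
        det = m11 * m22 - a12 * a12
        dx = (b1 * m22 - a12 * b2) / det
        dy = (m11 * b2 - a12 * b1) / det
        new_res = residuals(x + dx, y + dy)
        if cost(new_res) < cost(res):
            # Step accepted: move and trust the linearization more.
            x, y, res = x + dx, y + dy, new_res
            lam *= 0.1
        else:
            # Step rejected: increase damping and try again.
            lam *= 10.0
    return x, y

landmarks = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)]
truth = (1.0, 1.0)
ranges = [math.hypot(truth[0] - lx, truth[1] - ly) for lx, ly in landmarks]
estimate = levenberg_marquardt(landmarks, ranges, x0=(2.0, 2.0))
print(estimate)
```

In a real SLAM back end the unknown vector holds thousands of poses and points, so A is large and sparse, which is why the choice of linear solver and matrix preprocessing matters so much for speed.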

-

Extension

1) Dynamic SLAM: SLAM generally assumes that the environment is static, so dynamic objects in the scene break this assumption. When dynamic objects take up a small part of the sensor data, data association, RANSAC, and robust kernels in the optimization filter them out, because points on dynamic objects are outliers relative to the static points. When dynamic objects take up most of the sensor data, however, the dynamic points look more like inliers, and the methods above can no longer filter them out. Dynamic SLAM arose to address this: as the name suggests, it is a SLAM algorithm that runs in dynamic environments. Besides estimating the 3D positions of static points, it can also estimate the 3D positions and velocities of dynamic points. SLAM_MOT (multi-object tracking) is a representative example with many applications in autonomous vehicles. In addition to the SLAM task itself, it tracks the dynamic objects in the environment.

2) Semantic SLAM: Semantic SLAM is generally combined with computer vision tasks such as semantic segmentation and object recognition. It makes full use of the semantic information in visual features to attach semantics to objects in the environment, so that the robot can interact with the environment better and behave more intelligently.

3) Active SLAM: In ordinary SLAM, the map is built while a person moves the robot or while a navigation algorithm controls it. In that case the robot's motion is not necessarily good for localization and mapping. Active SLAM uses an algorithm to steer the robot along a trajectory that benefits localization and mapping. For example, the robot actively uses the existing map to explore unknown areas, and closes loops to reduce accumulated error and improve robustness.
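The outlier argument in the Dynamic SLAM item above can be checked with a toy experiment. The sketch below is only an illustration in the spirit of RANSAC, with the assumption that each feature gives a 1D displacement and that every measurement is tried as a hypothesis instead of sampling randomly. The function name `consensus_shift`, the tolerance, and the data are invented for the example.

```python
def consensus_shift(displacements, tol=0.1):
    """Pick the displacement hypothesis supported by the most measurements.

    Returns the mean of the largest inlier set and the inlier set itself.
    Every measurement is tried as a hypothesis (exhaustive, not random).
    """
    best_inliers = []
    for hypothesis in displacements:
        inliers = [d for d in displacements if abs(d - hypothesis) <= tol]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    return sum(best_inliers) / len(best_inliers), best_inliers

# Static points appear to shift by about 1.0 (caused by the robot's own
# motion); points on a moving object appear to shift by about 3.0.
mostly_static = [1.0, 1.02, 0.98, 1.01, 0.99, 1.0, 1.03, 0.97, 3.0, 3.05]
mostly_dynamic = [1.0, 1.02, 0.98, 3.0, 3.05, 2.95, 3.02, 2.98, 3.01, 2.99]

shift_a, _ = consensus_shift(mostly_static)
shift_b, _ = consensus_shift(mostly_dynamic)
print(shift_a, shift_b)
```

In the first data set the moving object is voted out and the estimate follows the static scene. In the second the moving object wins the vote, so the estimated ego-motion is wrong, which is the failure that motivates dynamic SLAM.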

To open this series of technical articles, I will briefly describe my experience of learning SLAM and list some major references. In the blink of an eye, graduate school is already half over. From my time working until now, I have tried switching power supplies, embedded development, machine learning, image processing, CV, and NLP, and finally chose SLAM. I started with "Fourteen Lectures on Visual SLAM", then went through the ORB-SLAM2 paper and source code. After getting through ORB-SLAM2, I could get a first grasp of an open-source algorithm in about a week or two, from the multi-sensor fusion systems VINS-Mono and PL-VIO to the lidar algorithm Cartographer. Sigh, the Cartographer source code is the most convoluted code I have ever seen; reading it is like peeling an onion layer by layer. I finally know what "C++ Primer" is for! Thanks to my advisor's hands-off style, I was able to intern at a company and deepen my understanding of these algorithms through projects. I feel I have met the best advisor in the world, one who is devoted to students. After some internship interviews, I found that my knowledge lacked depth, so I decided to stop and summarize before exploring new algorithms. I therefore decided to restart my CSDN blog and summarize and compare the four algorithms in one series. In addition, I will analyze each algorithm separately in more detail together with its source code. I hope this deepens my own understanding and also helps other SLAM enthusiasts. Interested readers are welcome to take a look, and corrections are welcome! The following are the main references for this series (SLAM Summary):

1) ORB-SLAM2

[1] ORB-SLAM: Tracking and Mapping Recognizable Features (2014)

[2] ORB-SLAM: A Versatile and Accurate Monocular SLAM System (2015)

[3] ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras (2017)

[4] FAST corner: Faster and Better: A Machine Learning Approach to Corner Detection

[5] BRIEF descriptor: Binary Robust Independent Elementary Features

[6] ORB feature: ORB: An Efficient Alternative to SIFT or SURF

[7] Bag of words: Bags of Binary Words for Fast Place Recognition in Image Sequences

Bag-of-words similarity calculation (requires collecting features from a large data set; see "Fourteen Lectures on Visual SLAM", p. 309):

[8] Video Google: A Text Retrieval Approach to Object Matching in Videos

[9] Understanding Inverse Document Frequency: On Theoretical Arguments for IDF

[10] Graph optimization: g2o: A General Framework for Graph Optimization

[11] Decomposing H into R, t: Motion and Structure from Motion in a Piecewise Planar Environment

[12] SVD decomposition of E: Multiple View Geometry in Computer Vision

[13] RANSAC: Random Sample Consensus: A Paradigm for Model Fitting with Applications to Image Analysis and Automated Cartography

[14] Loop closure detection and relocalization: Fast Relocalisation and Loop Closing in Keyframe-Based SLAM

[15] 2D-2D 3D reconstruction (five-point method): An Efficient Solution to the Five-Point Relative Pose Problem

[16] Initial pose of the current frame during relocalization, 3D-2D method (EPnP): EPnP: An Accurate O(n) Solution to the PnP Problem

Similarity transformation and pose graph optimization in loop closure detection:

[17] Scale Drift-Aware Large Scale Monocular SLAM

[18] Closed-Form Solution of Absolute Orientation Using Unit Quaternions

[19] 3D-3D points (used in loop closure detection) to solve R, t: Least-Squares Fitting of Two 3-D Point Sets

2) VINS-Mono (multi-sensor): papers already listed in 1) are not repeated

[1] VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator

[2] VIO: Monocular Visual-Inertial State Estimation for Mobile Augmented Reality

[3] Relocalization, loop closure detection, and map merging: Relocalization, Global Optimization and Map Merging for Monocular Visual-Inertial SLAM

[4] EuRoC camera model and calibration method: Single View Point Omnidirectional Camera Calibration from Planar Grids

[5] Initialization: Robust Initialization of Monocular Visual-Inertial Estimation on Aerial Robots

[6] Time offset calibration: Online Temporal Calibration for Monocular Visual-Inertial Systems

[7] Shi-Tomasi corner (a variant of the Harris corner): Good Features to Track

[8] KLT sparse optical flow: An Iterative Image Registration Technique with an Application to Stereo Vision

IMU pre-integration:

[9] Tightly-Coupled Monocular Visual-Inertial Fusion for Autonomous Flight of Rotorcraft MAVs

[10] On-Manifold Preintegration for Real-Time Visual-Inertial Odometry

[11] IMU Preintegration on Manifold for Efficient Visual-Inertial Maximum-a-Posteriori Estimation

[12] Marginalization method (Schur): Sliding Window Filter with Application to Planetary Landing

3) PL-VIO (multi-sensor, point-line feature fusion): papers already listed in 1) are not repeated

[1] PL-VIO: Tightly-Coupled Monocular Visual-Inertial Odometry Using Point and Line Features

[2] LSD line feature: LSD: A Fast Line Segment Detector with a False Detection Control

[3] LBD line feature descriptor: An Efficient and Robust Line Segment Matching Approach Based on LBD Descriptor and Pairwise Geometric Consistency

4) Cartographer

[1] Real-Time Loop Closure in 2D LIDAR SLAM

[2] ICP: Linear Least-Squares Optimization for Point-to-Plane ICP Surface Registration

[3] Loop closure optimization: Sparse Pose Adjustment for 2D Mapping

5) Multiple geometric features

Multiple features:

[1] Unified Representation and Registration of Heterogeneous Sets of Geometric Primitives

[2] StructVIO: Visual-Inertial Odometry With Structural Regularity of Man-Made Environments

[3] Systematic Handling of Heterogeneous Geometric Primitives in Graph-SLAM Optimization

Line features:

[4] The 3D Line Motion Matrix and Alignment of Line Reconstructions

[5] Building a 3-D Line-Based Map Using Stereo SLAM

Plane features:

[6] GPO: Global Plane Optimization for Fast and Accurate Monocular SLAM Initialization

Objects:

[7] QuadricSLAM: Dual Quadrics From Object Detections as Landmarks in Object-Oriented SLAM

[8] CubeSLAM: Monocular 3-D Object SLAM

6) Related books

[1] Fourteen Lectures on Visual SLAM (视觉SLAM十四讲)

[2] Probabilistic Robotics

[3] Multiple View Geometry in Computer Vision

[4] State Estimation for Robotics