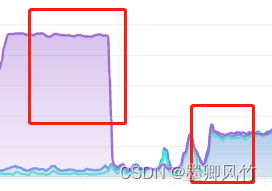

Spark has two common submission methods: Client mode and Cluster mode. They differ in where the Driver process runs, so they affect the CPU of the submitting machine differently, as described below.

Client mode:

In Client mode, the Spark Driver runs on the client node that submits the application (that is, the machine that runs the spark-submit command). The Driver schedules and monitors the application, while the Executors run the tasks on the worker nodes of the cluster.

In Client mode, the CPU load on the submitting machine comes mainly from the Driver process, because the Driver schedules and monitors the entire application.

Client mode is suitable for development, debugging, and interactive operations, and is effective for small datasets and tasks that iterate quickly.
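As a minimal sketch of a Client mode submission, the snippet below assembles a spark-submit command line. The `--master`, `--deploy-mode`, and `--driver-memory` options are standard spark-submit options; the YARN master, the `2g` value, the file name `my_app.py`, and the helper name `client_submit_command` are assumptions made for illustration.

```python
import shlex


def client_submit_command(app_path, master="yarn"):
    """Build a spark-submit argument list that keeps the Driver on the submitting machine."""
    return [
        "spark-submit",
        "--master", master,          # assumed cluster manager
        "--deploy-mode", "client",   # Driver runs on the machine that runs spark-submit
        "--driver-memory", "2g",     # assumed value, sized for the submitting machine
        app_path,
    ]


# "my_app.py" is a hypothetical application file.
cmd = client_submit_command("my_app.py")
print(shlex.join(cmd))
```

Because the Driver lives in the spark-submit process here, its CPU and memory use are charged to the machine where this command is run.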

Cluster mode:

In Cluster mode, the Spark Driver runs on a node inside the cluster, alongside the Executors. The client only submits the application and does not take part in running it.

In Cluster mode, the CPU load is spread across the machines of the cluster, because the Driver and the Executors all run on cluster nodes rather than on the submitting machine.

Cluster mode is suitable for production environments, for processing large-scale datasets and long-running tasks.
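A Cluster mode submission can be sketched the same way. The options shown (`--deploy-mode cluster`, `--driver-cores`, `--num-executors`, `--executor-cores`) are standard spark-submit options, with `--num-executors` applying to YARN and Kubernetes. The YARN master, the numeric values, the file name, and the helper name `cluster_submit_command` are assumptions for illustration.

```python
import shlex


def cluster_submit_command(app_path, driver_cores=1, num_executors=4, master="yarn"):
    """Build a spark-submit argument list that places the Driver on a cluster node."""
    return [
        "spark-submit",
        "--master", master,                      # assumed cluster manager
        "--deploy-mode", "cluster",              # Driver is launched inside the cluster
        "--driver-cores", str(driver_cores),     # CPU cores reserved for the Driver on its node
        "--num-executors", str(num_executors),   # assumed executor count
        "--executor-cores", "2",                 # assumed cores per Executor
        app_path,
    ]


# "my_app.py" is a hypothetical application file.
print(shlex.join(cluster_submit_command("my_app.py", driver_cores=2)))
```

Here the submitting machine only hands the application over, so the Driver's CPU cost is paid by whichever cluster node hosts it.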

In general, Client mode puts more CPU load on the submitting machine, because the Driver runs on the client node. In Cluster mode the load is spread more evenly, because the whole application, Driver included, runs inside the cluster. When choosing a submission mode, consider the scale of the task, the amount of data, the available computing resources, and whether real-time monitoring or interactive operation is required.
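The selection factors above can be written down as a rough rule of thumb. This is only a sketch: the `Job` fields, the function name `choose_deploy_mode`, and the priority given to interactive use are assumptions, not a rule defined by Spark.

```python
from dataclasses import dataclass


@dataclass
class Job:
    interactive: bool    # needs a live console, notebook, or debugger
    long_running: bool   # expected to outlive the submitting session
    large_dataset: bool  # too large to treat as a quick experiment


def choose_deploy_mode(job: Job) -> str:
    """Return "client" or "cluster" using the guidance in the text."""
    # Interactive work needs the Driver next to the user, so it takes priority.
    if job.interactive:
        return "client"
    # Production-style work keeps the Driver off the submitting machine.
    if job.long_running or job.large_dataset:
        return "cluster"
    # Small, quick jobs default to client mode for easier debugging.
    return "client"


print(choose_deploy_mode(Job(interactive=False, long_running=True, large_dataset=True)))
```

The returned string can be passed directly as the value of spark-submit's `--deploy-mode` option.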