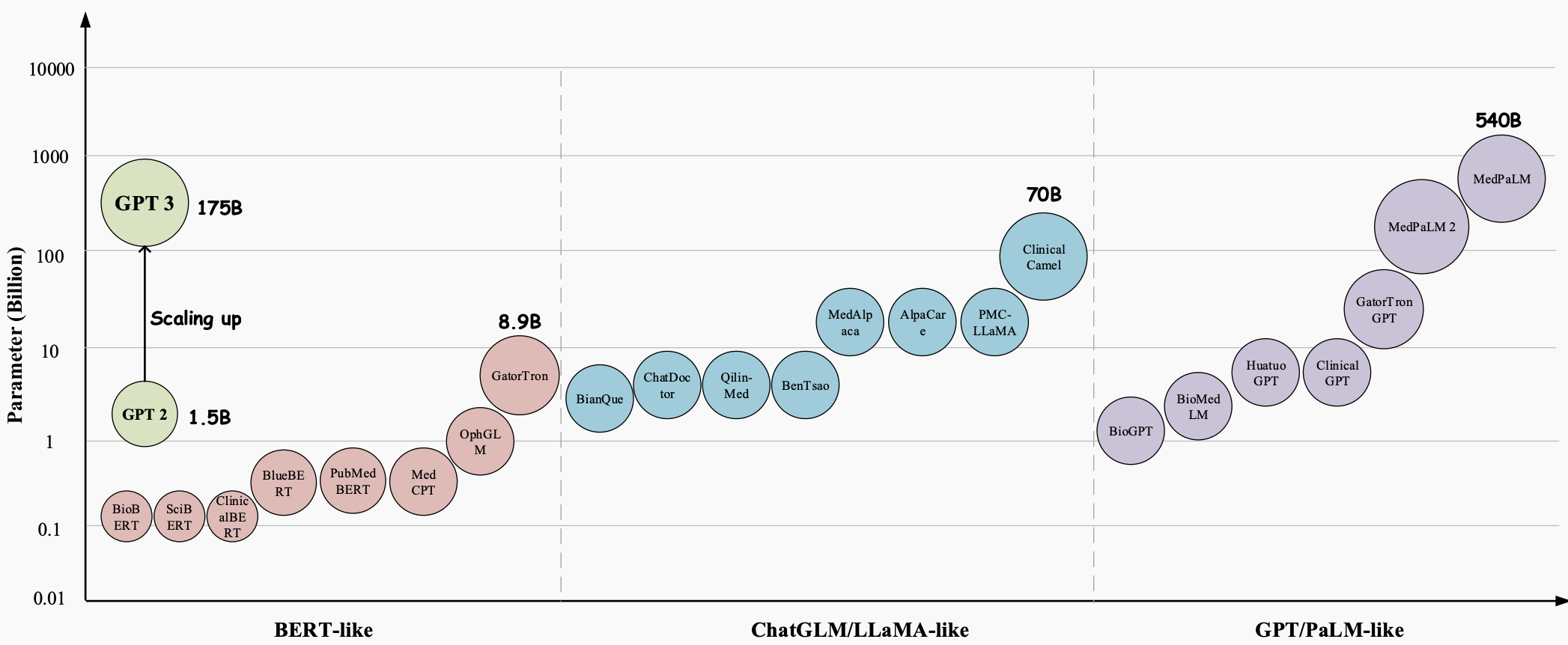

Over the years, Large Language Models (LLMs) have evolved into a groundbreaking technology with huge potential to revolutionize every aspect of the healthcare industry. These models, such as GPT-3, GPT-4, and Med-PaLM 2, have demonstrated superior capabilities in understanding and generating human-like text, making them valuable tools for handling complex medical tasks and improving patient care. They show great promise in a variety of medical applications, such as medical question answering (QA), dialogue systems, and text generation. Additionally, with the exponential growth of electronic health records (EHRs), medical literature, and patient-generated data, LLMs can help medical professionals extract valuable insights and make informed decisions.

However, despite this potential, several important and specific challenges still need to be solved before LLMs can be relied on in the medical field.

When the model is used in the context of entertainment conversations, the impact of errors is minimal; however, this is not the case when used in the medical field, where incorrect interpretations and answers can have serious consequences for patient care and outcomes. The accuracy and reliability of information provided by language models can be a matter of life and death, as it can impact medical decisions, diagnosis, and treatment plans.

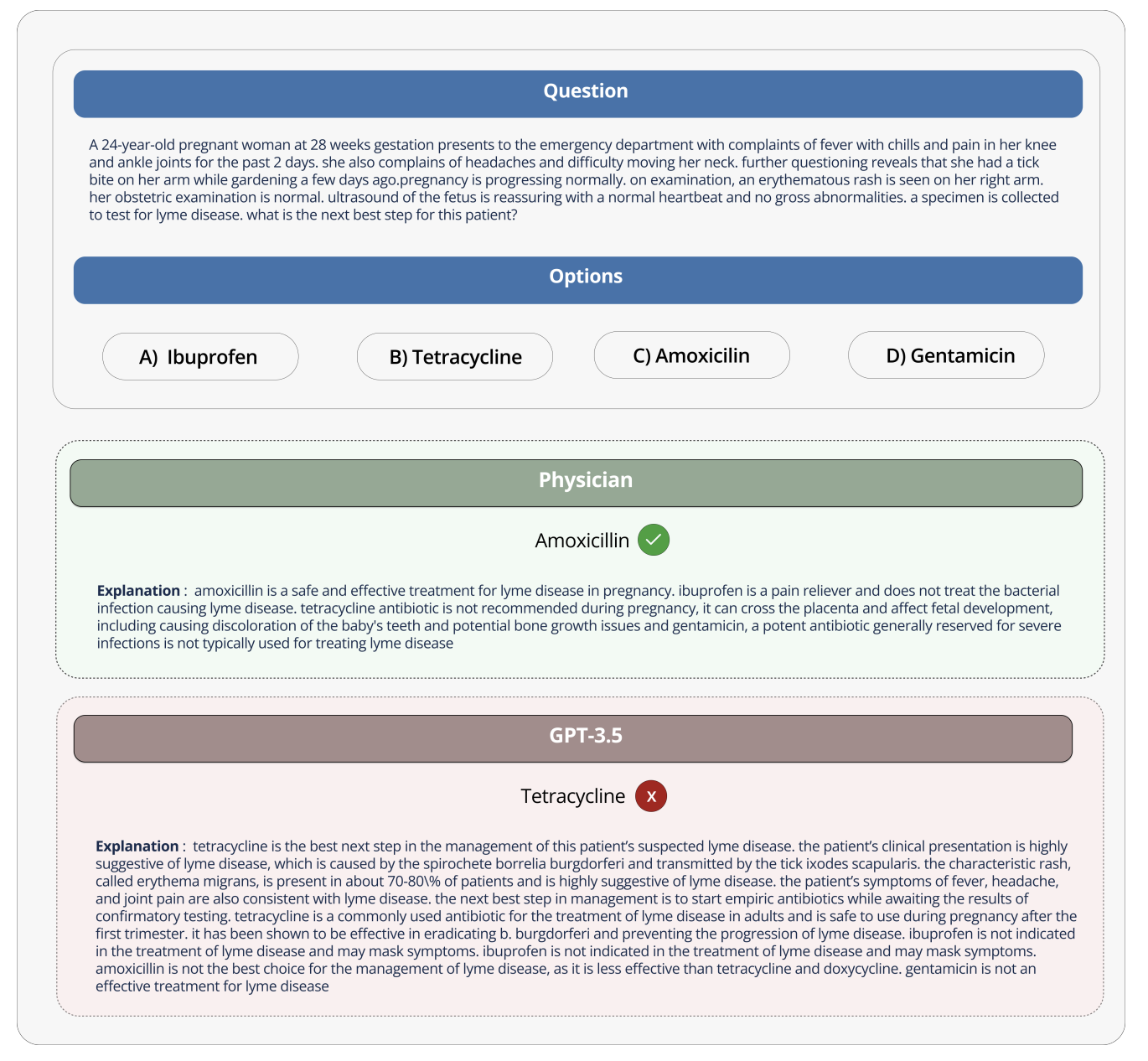

For example, when GPT-3 was asked which medications pregnant women could use, it incorrectly recommended tetracycline, even though it also correctly stated that tetracycline is harmful to the fetus and should not be used during pregnancy. If this advice were followed and the drug given to a pregnant woman, it could impair the child's bone development.

To make good use of large language models in the medical field, they must be designed and benchmarked with the characteristics of the medical domain in mind, because medical data and applications have their own special features. Developing methods to evaluate these models for medical use matters not only for research but also because the models could pose risks if used incorrectly in real-world medical work.

The Open Source Medical Large Model Ranking aims to address these challenges and limitations by providing a standardized platform to evaluate and compare the performance of various large language models on a variety of medical tasks and datasets. By providing a comprehensive assessment of each model's medical knowledge and question-answering capabilities, the ranking promotes the development of more effective and reliable medical models.

This platform enables researchers and practitioners to identify the strengths and weaknesses of different approaches, drive further development in the field, and ultimately help improve patient outcomes.

Datasets, tasks, and assessment settings

The Medical Large Model Ranking contains a variety of tasks and uses accuracy as its main evaluation metric (accuracy measures the percentage of correct answers provided by the language model in various medical question and answer datasets).
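As a rough illustration of the metric, accuracy can be computed as the share of questions where the predicted answer matches the reference answer. The sketch below is a minimal version under that assumption; the function name and the sample answers are hypothetical and are not taken from the leaderboard's evaluation code.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Return the percentage of predictions that exactly match the references."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("cannot compute accuracy on an empty dataset")
    correct = sum(p == r for p, r in zip(predictions, references))
    return 100.0 * correct / len(references)


# Hypothetical answers for four multiple-choice questions (3 of 4 match).
predicted = ["A", "C", "B", "D"]
gold = ["A", "C", "D", "D"]
print(f"{accuracy(predicted, gold):.1f}%")
```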

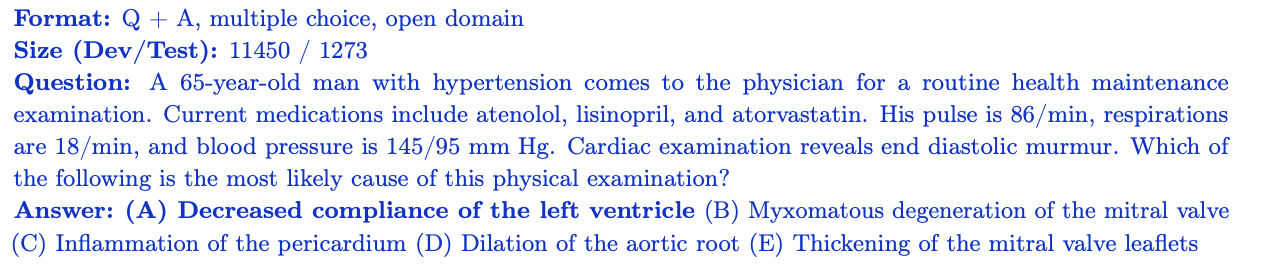

MedQA

The MedQA dataset contains multiple-choice questions from the United States Medical Licensing Examination (USMLE). It covers a wide range of medical knowledge and includes 11,450 training set questions and 1,273 test set questions. With 4 or 5 answer options per question, this dataset is designed to assess the medical knowledge and reasoning skills required to obtain a medical license in the United States.
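Multiple-choice items like these are usually turned into a text prompt before being shown to a model. The exact template the leaderboard uses is not described here, so the following is only a sketch of one plausible layout; `format_question` and the sample item are made up for illustration and do not come from MedQA.

```python
from string import ascii_uppercase
from typing import Sequence


def format_question(question: str, options: Sequence[str]) -> str:
    """Render a question and its options as a lettered multiple-choice prompt."""
    if not 2 <= len(options) <= len(ascii_uppercase):
        raise ValueError("expected between 2 and 26 answer options")
    lines = [f"Question: {question}"]
    for letter, option in zip(ascii_uppercase, options):
        lines.append(f"{letter}. {option}")
    lines.append("Answer:")
    return "\n".join(lines)


# Made-up example item with four options.
prompt = format_question(
    "Which vitamin deficiency causes scurvy?",
    ["Vitamin A", "Vitamin B12", "Vitamin C", "Vitamin D"],
)
print(prompt)
```

Because the letters are generated from the number of options, the same helper handles both the 4-option and 5-option variants mentioned above.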

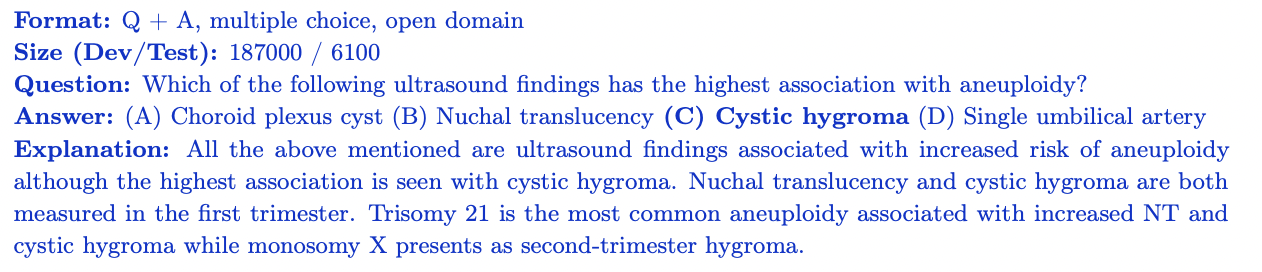

MedMCQA

MedMCQA is a large-scale multiple-choice question answering dataset derived from Indian medical entrance examinations (AIIMS/NEET). It covers 2,400 medical topics and 21 medical subjects, with more than 187,000 questions in the training set and 6,100 questions in the test set. Each question has 4 answer options with explanations. MedMCQA assesses a model's general medical knowledge and reasoning abilities.

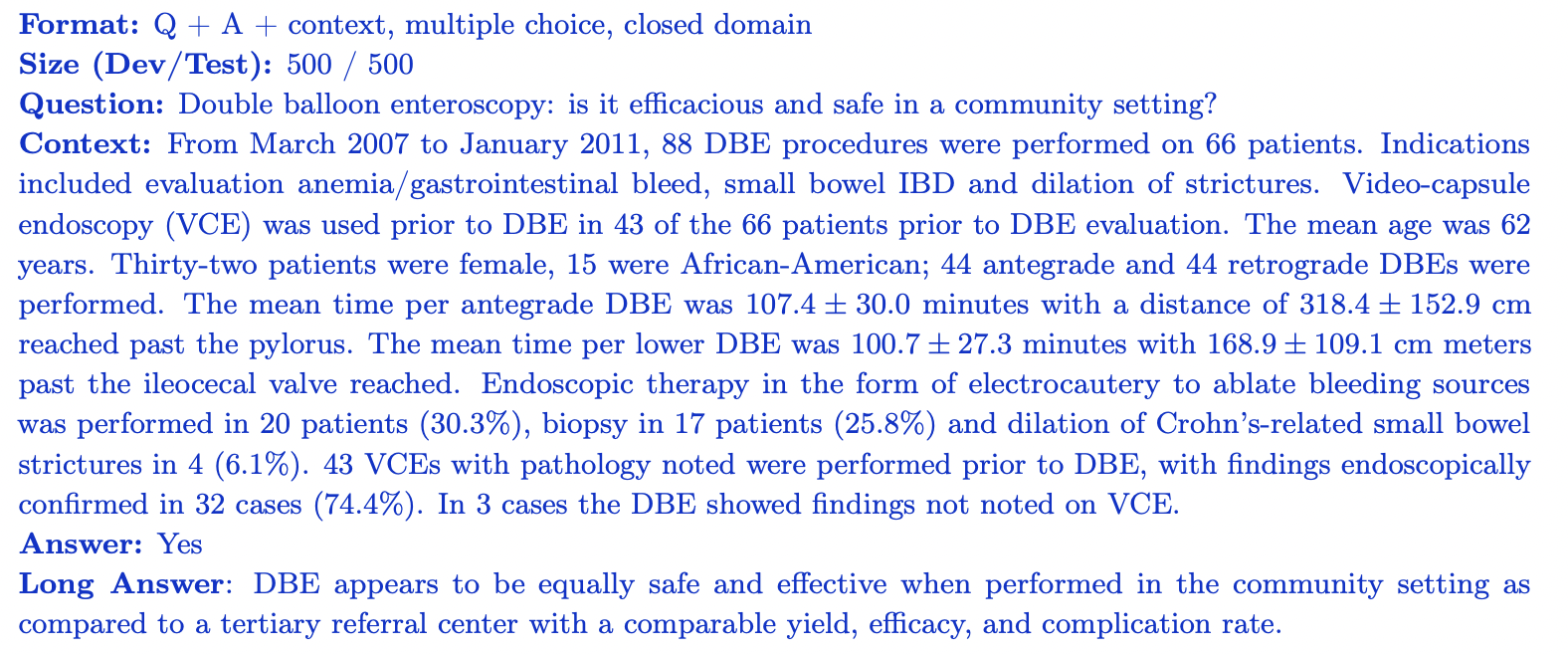

PubMedQA

PubMedQA is a closed-domain question answering dataset in which each question can be answered by looking at the associated context (a PubMed abstract). It contains 1,000 expert-labeled question-answer pairs. Each question is accompanied by a PubMed abstract for context, and the task is to provide a yes/no/maybe answer based on the information in the abstract. The dataset is divided into 500 training questions and 500 test questions. PubMedQA evaluates a model's ability to understand and reason about scientific biomedical literature.
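Since a model may answer in free text rather than with a bare label, scoring a yes/no/maybe task typically needs a step that maps the output to one of the three labels. How the leaderboard does this is not stated in this post; the sketch below simply assumes that the first standalone "yes", "no", or "maybe" in the output is the answer, and `extract_label` is a hypothetical helper.

```python
import re
from typing import Optional


def extract_label(generation: str) -> Optional[str]:
    """Return the first yes/no/maybe label found in a model's output, if any."""
    # \b keeps "no" from matching inside words such as "not" or "cannot".
    match = re.search(r"\b(yes|no|maybe)\b", generation.lower())
    return match.group(1) if match else None


print(extract_label("Yes, the abstract supports this conclusion."))
print(extract_label("Answer: Maybe"))
print(extract_label("The abstract does not address the question."))
```

An output with no recognizable label returns `None`, which an evaluator would most naturally count as incorrect.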

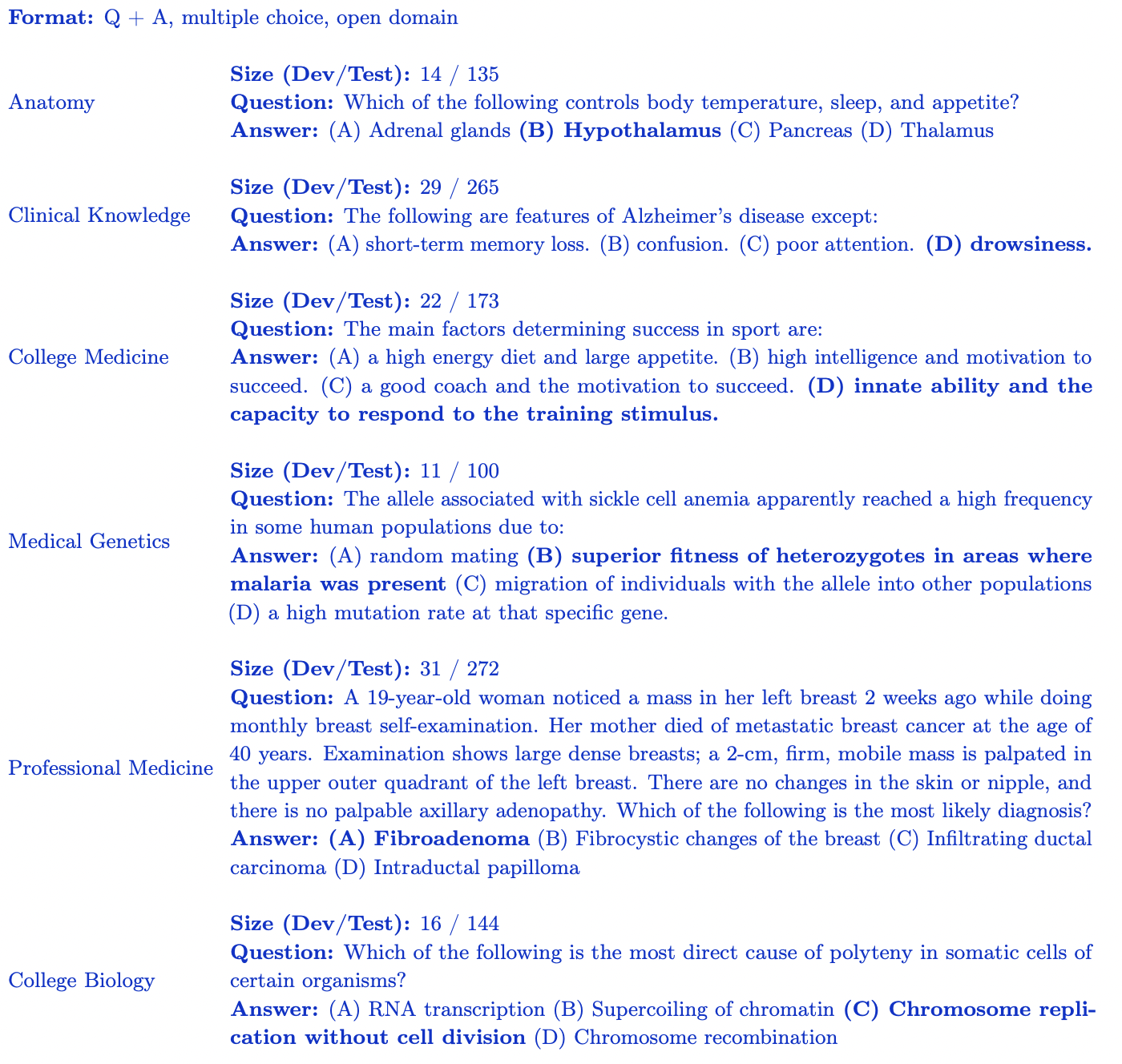

MMLU subset (medicine and biology)

The MMLU benchmark (Measuring Massive Multitask Language Understanding) contains multiple-choice questions from various domains. For the Open Source Medical Large Model Ranking, we focus on the subsets most relevant to medical knowledge:

- Clinical Knowledge: 265 questions assessing clinical knowledge and decision-making skills.

- Medical Genetics: 100 questions covering topics related to medical genetics.

- Anatomy: 135 questions assessing knowledge of human anatomy.

- Professional Medicine: 272 questions that assess the knowledge required of medical professionals.

- College Biology: 144 questions covering college-level biology concepts.

- College Medicine: 173 questions assessing college-level medical knowledge.

Each MMLU subset contains multiple-choice questions with 4 answer options designed to assess the model's understanding of a specific medical and biological domain.

Taken together, these datasets let the Open Source Medical Large Model Ranking provide a robust assessment of model performance across various aspects of medical knowledge and reasoning.
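The six MMLU subsets differ in size (1,089 questions in total), so how per-subset accuracies are combined affects the headline number. This post does not say how the leaderboard aggregates them; the sketch below only contrasts two common choices, an unweighted mean across subsets and a mean weighted by question count. The subset sizes come from the list above, while the function names and sample accuracies are hypothetical.

```python
from typing import Mapping

# Question counts for the six MMLU subsets described in this post.
SUBSET_SIZES = {
    "clinical_knowledge": 265,
    "medical_genetics": 100,
    "anatomy": 135,
    "professional_medicine": 272,
    "college_biology": 144,
    "college_medicine": 173,
}


def macro_average(acc: Mapping[str, float]) -> float:
    """Unweighted mean: every subset counts equally."""
    return sum(acc.values()) / len(acc)


def micro_average(acc: Mapping[str, float], sizes: Mapping[str, int]) -> float:
    """Weighted mean: every question counts equally."""
    total = sum(sizes[name] for name in acc)
    return sum(acc[name] * sizes[name] for name in acc) / total


# Hypothetical per-subset accuracies (percent), for illustration only.
sample_acc = {name: 70.0 for name in SUBSET_SIZES}
sample_acc["medical_genetics"] = 90.0

print(f"macro: {macro_average(sample_acc):.2f}")
print(f"micro: {micro_average(sample_acc, SUBSET_SIZES):.2f}")
```

A strong score on a small subset such as Medical Genetics moves the unweighted mean more than the weighted one, which is worth keeping in mind when comparing averages from different sources.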

Insights and Analysis

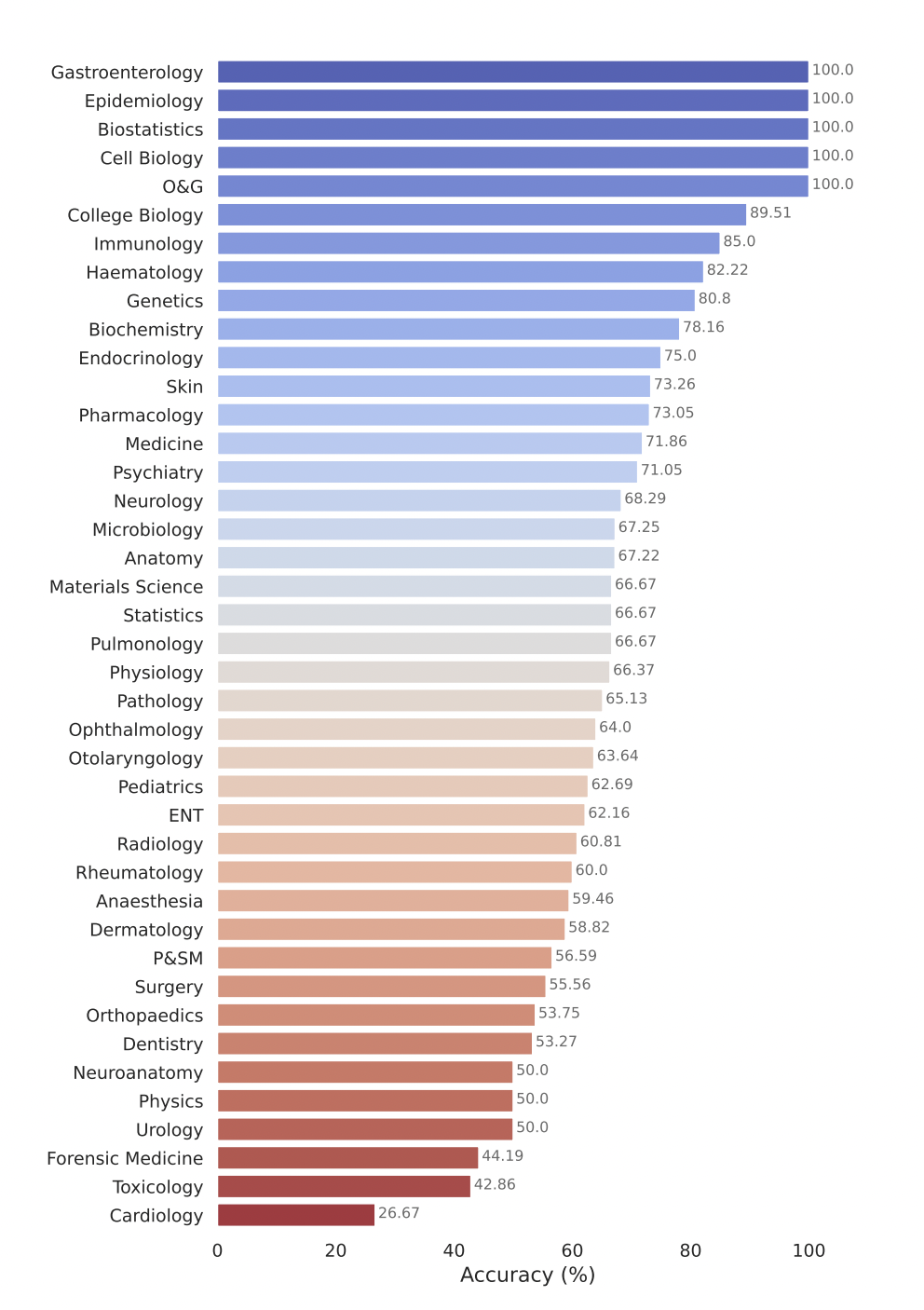

The Open Source Medical Large Model Ranking evaluates the performance of various large language models (LLMs) on a range of medical question answering tasks. Here are some of our key findings:

- Commercial models such as GPT-4-base and Med-PaLM-2 consistently achieve high accuracy scores on various medical datasets, demonstrating strong performance in different medical fields.

- Open source models such as Starling-LM-7B, gemma-7b, Mistral-7B-v0.1, and Hermes-2-Pro-Mistral-7B deliver competitive performance on certain datasets and tasks, even though they have only about 7 billion parameters.

- Commercial and open source models perform well on tasks such as understanding and reasoning about scientific biomedical literature (PubMedQA) and applying clinical knowledge and decision-making skills (MMLU clinical knowledge subset).

Google's Gemini Pro model has demonstrated strong performance in multiple medical fields, especially in data-intensive and procedural tasks such as biostatistics, cell biology, and obstetrics and gynecology. However, it showed moderate to low performance in key areas such as anatomy, cardiology, and dermatology, revealing gaps that need to be closed before it can be applied to more comprehensive medical use.

Submit your model for evaluation

To submit your model for evaluation on the Open Source Medical Large Model Ranking, please follow these steps:

1. Convert model weights to Safetensors format

First, convert your model weights to safetensors format. Safetensors is a new format for storing weights that is safer and faster to load and use. Converting your model to this format will also allow the leaderboard to display the number of parameters for your model in the main table.
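One reason the format makes parameter counts easy to display is that a safetensors file begins with a small JSON header listing every tensor's dtype and shape, so the count can be read without loading any weights. The sketch below relies on the published file layout (an 8-byte little-endian header length followed by the JSON header); it is not the leaderboard's own code, and `count_parameters` and the tiny stand-in file are hypothetical.

```python
import json
import os
import struct
import tempfile
from math import prod


def count_parameters(path: str) -> int:
    """Count tensor elements in a .safetensors file by reading only its header."""
    with open(path, "rb") as f:
        # The first 8 bytes hold the header length as a little-endian uint64.
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len).decode("utf-8"))
    # "__metadata__" is an optional free-form entry, not a tensor.
    return sum(
        prod(info["shape"])
        for name, info in header.items()
        if name != "__metadata__"
    )


# Build a tiny stand-in file (two zero-filled float32 tensors) to try it out.
header = {
    "weight": {"dtype": "F32", "shape": [2, 3], "data_offsets": [0, 24]},
    "bias": {"dtype": "F32", "shape": [3], "data_offsets": [24, 36]},
}
header_bytes = json.dumps(header).encode("utf-8")
with tempfile.NamedTemporaryFile(suffix=".safetensors", delete=False) as tmp:
    tmp.write(struct.pack("<Q", len(header_bytes)) + header_bytes + bytes(36))

n_params = count_parameters(tmp.name)  # 2*3 weights + 3 biases
os.remove(tmp.name)
print(n_params)
```

For the conversion itself, models saved with the Transformers library can typically be written in this format by calling `save_pretrained` with `safe_serialization=True`.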

2. Ensure compatibility with AutoClasses

Before submitting the model, make sure you can load the model and tokenizer using AutoClasses in the Transformers library. Use the following code snippet to test compatibility:

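```python
from transformers import AutoConfig, AutoModel, AutoTokenizer

# Replace with your model's repository ID on the Hugging Face Hub.
MODEL_HUB_ID = "your model name"

# Each call should succeed without errors if the upload is complete.
config = AutoConfig.from_pretrained(MODEL_HUB_ID)
model = AutoModel.from_pretrained(MODEL_HUB_ID)
tokenizer = AutoTokenizer.from_pretrained(MODEL_HUB_ID)
```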

If you fail at this step, follow the error message to debug your model before submitting. Most likely your model was uploaded improperly.

3. Make your model public

Make sure your model is publicly accessible. Leaderboards cannot evaluate private models or models that require special access.

4. Remote code execution (coming soon)

Currently, the Open Source Medical Large Model Ranking does not support models that require use_remote_code=True. However, the leaderboard team is actively working on adding this feature, so stay tuned for updates.

5. Submit your model through the leaderboard website

Once your model is converted to safetensors format, compatible with AutoClasses, and publicly accessible, you can evaluate it using the Submit Here! panel on the Open Source Medical Large Model Ranking website. Fill in the required information, such as model name, description, and any additional details, and click the Submit button. The leaderboard team will process your submission and evaluate your model's performance on various medical Q&A datasets. Once the evaluation is complete, your model's score will be added to the leaderboard and you can compare its performance with other models.

What's next? Expanding the Open Source Medical Large Model Ranking

The Open Source Medical Large Model Ranking is committed to expanding and adapting to meet the changing needs of the research community and healthcare industry. Key areas include:

- Incorporate broader healthcare data sets covering all aspects of care such as radiology, pathology and genomics through collaboration with researchers, healthcare organizations and industry partners.

- Enhance evaluation metrics and reporting capabilities by exploring additional performance measures beyond accuracy, such as pointwise scores and domain-specific metrics that capture the unique needs of medical applications.

- There is already some work underway in this direction. If you are interested in collaborating on the next benchmark we plan to propose, please join our Discord community to learn more and get involved. We'd love to collaborate and brainstorm!

If you are passionate about the intersection of AI and healthcare, are building models for healthcare, and care about the safety and hallucination issues of large medical models, we invite you to join our active community on Discord.

Acknowledgments

Special thanks to everyone who helped make this possible, including Clémentine Fourrier and the Hugging Face team. I would like to thank Andreas Motzfeldt, Aryo Gema, and Logesh Kumar Umapathi for discussions and feedback during the development of the leaderboard. We would like to express our sincere thanks to Professor Pasquale Minervini of the University of Edinburgh for his time, technical assistance and GPU support.

About Open Life Sciences AI

Open Life Sciences AI is a project that aims to revolutionize the application of artificial intelligence in the life sciences and medical fields. It serves as a central hub that lists medical models, datasets, benchmarks, and tracks conference deadlines, promoting collaboration, innovation, and advancement in the field of AI-assisted healthcare. We strive to establish Open Life Sciences AI as the premier destination for anyone interested in the intersection of AI and healthcare. We provide a platform for researchers, clinicians, policymakers and industry experts to engage in dialogue, share insights and explore the latest developments in the field.

Citation

If you find our assessment useful, please consider citing our work

Medical large model rankings

```bibtex
@misc{Medical-LLM Leaderboard,
  author = {Ankit Pal, Pasquale Minervini, Andreas Geert Motzfeldt, Aryo Pradipta Gema and Beatrice Alex},
  title = {openlifescienceai/open_medical_llm_leaderboard},
  year = {2024},
  publisher = {Hugging Face},
  howpublished = "\url{https://huggingface.co/spaces/openlifescienceai/open_medical_llm_leaderboard}"
}
```

> Original English text: https://hf.co/blog/leaderboard-medicalllm
>
> Original author: Aaditya Ura (looking for PhD), Pasquale Minervini, Clémentine Fourrier
>
> Translator: innovation64
