WordCount Hands-On Example

1) Requirement

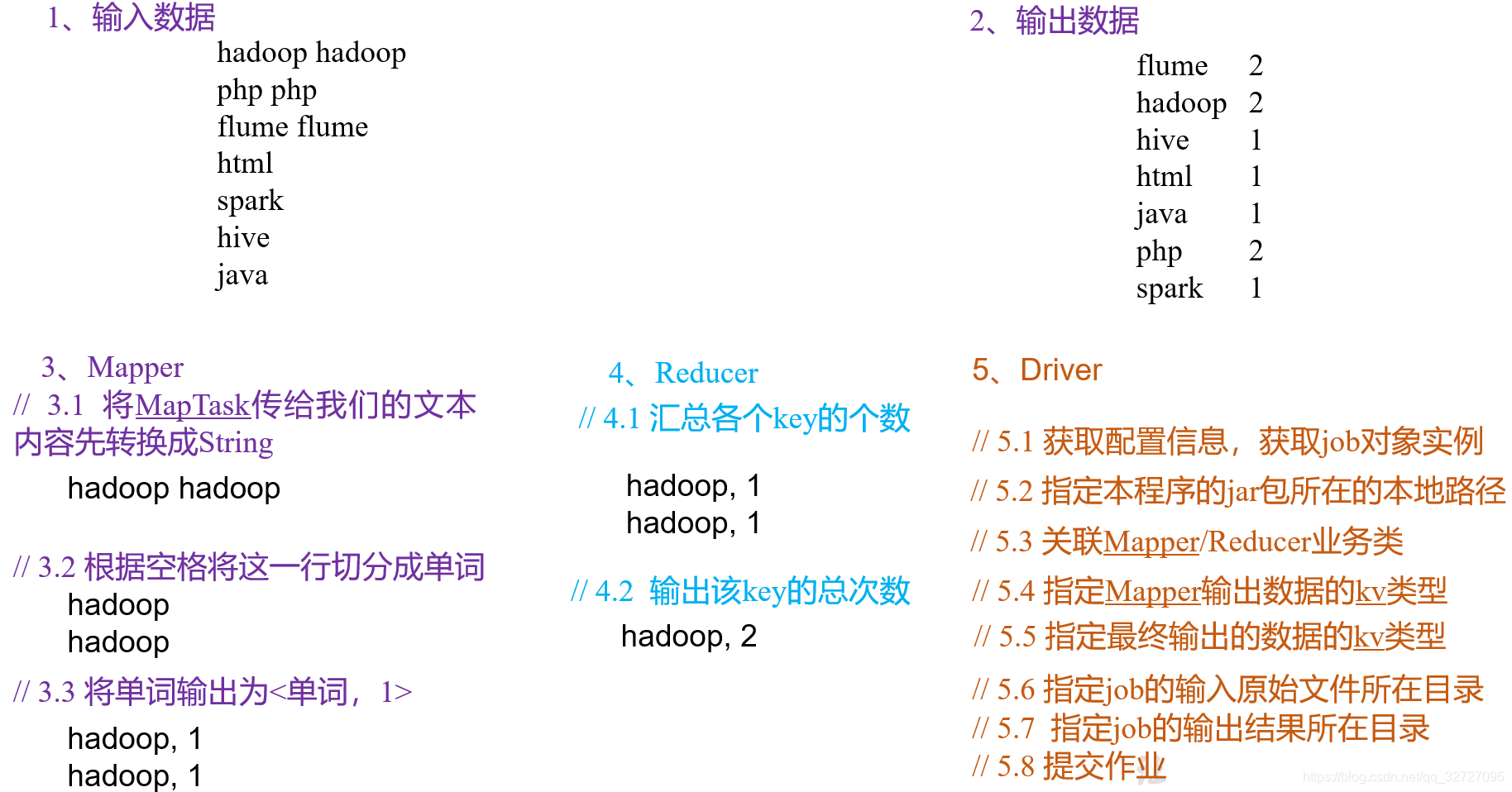

Count the total number of occurrences of each word in a given text file and output the result.

(1) Input data (test.txt)

(2) Expected output

flume 2

hadoop 2

hive 1

html 1

java 1

php 2

spark 1

2) Requirement analysis

Following the MapReduce programming conventions, write a Mapper, a Reducer, and a Driver.
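Before looking at the Hadoop classes, it may help to see the same division of labor in plain Java. The following is only a sketch with no Hadoop dependency: the class name `LocalWordCountSketch`, its methods, and the two sample lines are made up for illustration and are not the contents of test.txt. The grouping step stands in for the shuffle that the framework performs between the Mapper and the Reducer.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LocalWordCountSketch {

    // "Map" step: emit one (word, 1) pair per space-separated token of a line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
        }
        return pairs;
    }

    // "Shuffle" step: group the emitted values by key; TreeMap keeps the keys sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // "Reduce" step: sum all values that belong to one word.
    static int reduce(String word, Iterable<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // "Driver" step: wire the three steps together.
    static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical input lines, chosen only for this illustration.
        List<String> lines = Arrays.asList("hadoop hive", "hadoop spark");
        wordCount(lines).forEach((word, count) -> System.out.println(word + "\t" + count));
    }
}
```

The real job distributes these steps across tasks, but the data flow per word is the same: pairs of (word, 1) are grouped by word and then summed.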

3) Environment setup

(1) Create a Maven project

(2) Add the following dependencies to pom.xml

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>2.12.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

</dependencies>

(3) In the project's src/main/resources directory, create a file named "log4j2.xml" with the following content.

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="error" strict="true" name="XMLConfig">

<Appenders>

<!-- Appender type is Console; the name attribute is required -->

<Appender type="Console" name="STDOUT">

<!-- The layout is a PatternLayout;

output looks like [INFO] [2020-01-01 00:00:00][org.test.Console]I'm here -->

<Layout type="PatternLayout"

pattern="[%p] [%d{yyyy-MM-dd HH:mm:ss}][%c{10}]%m%n" />

</Appender>

</Appenders>

<Loggers>

<!-- additivity is false -->

<Logger name="test" level="info" additivity="false">

<AppenderRef ref="STDOUT" />

</Logger>

<!-- root LoggerConfig settings -->

<Root level="info">

<AppenderRef ref="STDOUT" />

</Root>

</Loggers>

</Configuration>
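To make the pattern string in the layout easier to read, here is a hand-written Java sketch that assembles a line in the same shape: `%p` is the level, `%d{...}` the timestamp, `%c` the logger name, `%m` the message, and `%n` a line separator. The class `LogPatternSketch` is hypothetical and does not use Log4j; it also ignores the `{10}` precision on `%c`, which only matters for logger names with more than ten components.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class LogPatternSketch {

    // Same date pattern as the one inside %d{...} in log4j2.xml.
    private static final DateTimeFormatter TIMESTAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Builds "[level] [timestamp][logger]message" followed by a line separator.
    static String format(String level, LocalDateTime time, String logger, String message) {
        return "[" + level + "] [" + TIMESTAMP.format(time) + "][" + logger + "]"
                + message + System.lineSeparator();
    }

    public static void main(String[] args) {
        // Reproduces the sample line quoted in the XML comment.
        System.out.print(format("INFO", LocalDateTime.of(2020, 1, 1, 0, 0, 0),
                "org.test.Console", "I'm here"));
    }
}
```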

4) Writing the program

(1) Write the Mapper class

package com.qinjl.mapreduce.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

/**

* KEYIN: key type of the map-phase input, LongWritable

* VALUEIN: value type of the map-phase input, Text

* KEYOUT: key type of the map-phase output, Text

* VALUEOUT: value type of the map-phase output, IntWritable

*/

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

private Text outK = new Text();

private IntWritable outV = new IntWritable(1);

// Parameters: 1. byte offset of the line 2. one line of input 3. the context object

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

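// 1 Get one line of input and convert it to a String

String line = value.toString();

// 2 Split the line on single spaces

String[] words = line.split(" ");

// 3 Iterate over the words array

for (String word : words) {

// Set outK (outV is always 1)

outK.set(word);

// Emit the (word, 1) pair

context.write(outK, outV);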

}

}

}
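One detail of the Mapper worth checking against your own input: `split(" ")` splits on every single space, so a line with two consecutive spaces yields an empty token, and the job would then count the empty string as a word. The sketch below compares that behavior with splitting on runs of whitespace. The class `TokenizeSketch` and both method names are hypothetical, and whether the stricter variant is wanted depends on how test.txt is formatted.

```java
import java.util.ArrayList;
import java.util.List;

public class TokenizeSketch {

    // Same splitting rule as the Mapper above.
    static String[] splitOnSingleSpace(String line) {
        return line.split(" ");
    }

    // Alternative: treat any run of whitespace as one separator and drop empty tokens.
    static List<String> splitOnWhitespace(String line) {
        List<String> words = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                words.add(word);
            }
        }
        return words;
    }

    public static void main(String[] args) {
        String line = "hadoop  hive"; // two spaces between the words
        System.out.println(splitOnSingleSpace(line).length); // 3, including one empty token
        System.out.println(splitOnWhitespace(line));         // [hadoop, hive]
    }
}
```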

(2) Write the Reducer class

package com.qinjl.mapreduce.wordcount;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**

* KEYIN: key type of the reduce-phase input, Text

* VALUEIN: value type of the reduce-phase input, IntWritable

* KEYOUT: key type of the reduce-phase output, Text

* VALUEOUT: value type of the reduce-phase output, IntWritable

*/

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

private IntWritable outV = new IntWritable();

// Parameters: 1. the word 2. all values emitted for that word 3. the context object

@Override

protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {

int sum = 0;

// Iterate over the values for this word; each value is a 1, e.g. (1, 1)

for (IntWritable value : values) {

int i = value.get();

sum += i;

}

// Set outV to the total (the key is passed through unchanged)

outV.set(sum);

// Write the (word, total) pair

context.write(key, outV);

}

}

(3) Write the Driver class

package com.qinjl.mapreduce.wordcount;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

public class WordCountDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

// 1 Get the Job object

Configuration conf = new Configuration();

Job job = Job.getInstance(conf);

// 2 Set the jar by locating this Driver class

job.setJarByClass(WordCountDriver.class);

// 3 Associate the Mapper and Reducer

job.setMapperClass(WordCountMapper.class);

job.setReducerClass(WordCountReducer.class);

// 4 Set the map output key/value types

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

// 5 Set the final output key/value types of the job

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// 6 Set the input and output paths

FileInputFormat.setInputPaths(job, new Path("D:\\hadoop\\inputword"));

FileOutputFormat.setOutputPath(job, new Path("D:\\hadoop\\output"));

// 7 Submit the job

boolean b = job.waitForCompletion(true);

System.exit(b ? 0 : 1);

}

}
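A practical point when rerunning the Driver: the job fails at submission if the output directory already exists, so the directory has to be removed between runs. For local tests, one option is a small cleanup helper like the sketch below. It uses only `java.nio.file` (note that its `Path` is not Hadoop's `org.apache.hadoop.fs.Path`), works on the local file system only, and the class `OutputDirCleanupSketch` and its methods are hypothetical names for this illustration.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class OutputDirCleanupSketch {

    // Deletes a local directory tree if it exists; does nothing otherwise.
    static void deleteRecursively(Path dir) {
        if (!Files.exists(dir)) {
            return;
        }
        try {
            List<Path> paths;
            try (Stream<Path> walk = Files.walk(dir)) {
                // Reverse order puts children before their parent directories.
                paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
            }
            for (Path path : paths) {
                Files.delete(path);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Creates a throwaway directory with one file, deletes it, and reports whether it is gone.
    static boolean demo() {
        try {
            Path dir = Files.createTempDirectory("wc-output");
            Files.createFile(dir.resolve("part-r-00000"));
            deleteRecursively(dir);
            return !Files.exists(dir);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("output directory removed: " + demo());
    }
}
```

In a local run, a call such as `deleteRecursively(Paths.get("D:\\hadoop\\output"))` before submitting the job would play this role. Be careful to point it only at a directory that is safe to delete.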

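5) Local test

(1) First configure the HADOOP_HOME environment variable and the Windows runtime dependencies (typically winutils.exe and hadoop.dll)

(2) Run the program in IDEA

6) Test on the cluster

(0) To build the jar with Maven, add the following packaging plugins to pom.xml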

<build>

<plugins>

<plugin>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.6.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

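- Note: if the project shows a red cross, right-click the project -> Maven -> Reimport.

(1) Package the program as a jar and copy it to the Hadoop cluster

Steps: right-click -> Run as -> Maven install. When the build finishes, the jar is generated in the project's target folder. If it does not appear, right-click the project -> Refresh. Rename the jar without dependencies to wc.jar and copy it to the Hadoop cluster. Because the cluster run passes the input and output paths on the command line, the Driver should read them from args[0] and args[1] (as in the listing in step 7) instead of the hard-coded local paths before you package it.

(2) Start the Hadoop cluster

(3) Run the WordCount program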

[qinjl@hadoop102 software]$ hadoop jar wc.jar com.qinjl.mapreduce.wordcount.WordCountDriver /user/qinjl/input /user/qinjl/output
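In the command above, everything after the main class name is handed to `main` as `args`, so `args[0]` is the input path and `args[1]` the output path. A Driver that reads them directly throws an `ArrayIndexOutOfBoundsException` when one is missing. The sketch below shows one way to check the arguments first. `DriverArgsSketch` and `parsePaths` are hypothetical names, and the usage text is only a suggestion.

```java
public class DriverArgsSketch {

    // Returns {inputPath, outputPath}, or fails with a usage message if the count is wrong.
    static String[] parsePaths(String[] args) {
        if (args.length != 2) {
            throw new IllegalArgumentException(
                    "Usage: hadoop jar wc.jar <main class> <input path> <output path>");
        }
        return new String[] {args[0], args[1]};
    }

    public static void main(String[] args) {
        // Paths taken from the command shown above.
        String[] paths = parsePaths(new String[] {"/user/qinjl/input", "/user/qinjl/output"});
        System.out.println("input  = " + paths[0]);
        System.out.println("output = " + paths[1]);
    }
}
```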

7) Submitting the job to the cluster from Windows

(1) Add the required configuration

public class WordCountDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

// 1 Get the configuration and create the job

Configuration configuration = new Configuration();

// Set the HDFS NameNode address

configuration.set("fs.defaultFS", "hdfs://hadoop102:9820");

// Run MapReduce on YARN

configuration.set("mapreduce.framework.name", "yarn");

// Allow cross-platform submission to the remote cluster

configuration.set("mapreduce.app-submission.cross-platform", "true");

// Set the YARN ResourceManager host

configuration.set("yarn.resourcemanager.hostname", "hadoop103");

Job job = Job.getInstance(configuration);

// 2 Set the jar by locating the Driver class

job.setJarByClass(WordCountDriver.class);

// 3 Set the Mapper and Reducer classes

job.setMapperClass(WordCountMapper.class);

job.setReducerClass(WordCountReducer.class);

// 4 Set the map output key/value types

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

// 5 Set the final output key/value types

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// 6 Set the input and output paths

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Submit the job

boolean result = job.waitForCompletion(true);

System.exit(result ? 0 : 1);

}

}

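(2) Edit the run configuration

1) Check that the first field, Main class, is the fully qualified name of the class you want to run; if it is not, correct it.

2) In VM options, add: -DHADOOP_USER_NAME=qinjl

3) In Program arguments, add two arguments for the input and output paths, separated by a space.

- For example:

hdfs://hadoop102:9820/input hdfs://hadoop102:9820/output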
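A `-D` entry in VM options becomes a JVM system property, which code in the same JVM can read with `System.getProperty`. The sketch below shows only that mechanism. It is an assumption here, not something this section states, that the Hadoop client consults the `HADOOP_USER_NAME` property when deciding which user to submit as. The class `UserNameOptionSketch` is hypothetical.

```java
public class UserNameOptionSketch {

    static final String KEY = "HADOOP_USER_NAME";

    // Returns the value supplied via -DHADOOP_USER_NAME=..., or null if the option is absent.
    static String configuredUser() {
        return System.getProperty(KEY);
    }

    public static void main(String[] args) {
        // Stand-in for the VM option, used only when it was not given on the command line.
        if (configuredUser() == null) {
            System.setProperty(KEY, "qinjl");
        }
        System.out.println("Submitting as: " + configuredUser());
    }
}
```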

(3) Package the project and set the jar in the Driver

public class WordCountDriver {

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

// 1 Get the configuration and create the job

Configuration configuration = new Configuration();

configuration.set("fs.defaultFS", "hdfs://hadoop102:9820");

configuration.set("mapreduce.framework.name", "yarn");

configuration.set("mapreduce.app-submission.cross-platform", "true");

configuration.set("yarn.resourcemanager.hostname", "hadoop103");

Job job = Job.getInstance(configuration);

// 2 Set the jar explicitly (backslashes must be escaped in a Java string literal)

//job.setJarByClass(WordCountDriver.class);

job.setJar("E:\\IdeaProjects\\mapreduce\\target\\mapreduce-1.0-SNAPSHOT.jar");

// 3 Set the Mapper and Reducer classes

job.setMapperClass(WordCountMapper.class);

job.setReducerClass(WordCountReducer.class);

// 4 Set the map output key/value types

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(IntWritable.class);

// 5 Set the final output key/value types

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

// 6 Set the input and output paths

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

// 7 Submit the job

boolean result = job.waitForCompletion(true);

System.exit(result ? 0 : 1);

}

}

(4) Submit the job and check the result