一 程序修改

在VINS_Course源码中对如下函数进行修改:

在run_eurco.cpp中:

//把IMU数据放进VINS---基本和之前的函数差不多

void PubImuData()

{

string sImu_data_file = "/home/nnz/data/vio/bin/imu_pose_noise.txt";//带噪声的IMU数据的路径

cout << "1 PubImuData start sImu_data_filea: " << sImu_data_file << endl;

ifstream fsImu;//文件流对象

fsImu.open(sImu_data_file.c_str());

if (!fsImu.is_open())

{

cerr << "Failed to open imu file! " << sImu_data_file << endl;

return;

}

std::string sImu_line;

double dStampNSec = 0.0;//时间戳

double tmp;

Vector3d vAcc;//加速度

Vector3d vGyr;

while (std::getline(fsImu, sImu_line) && !sImu_line.empty()) // read imu data sImu_line获得每行的文件流

{

// timestamp (1),imu quaternion(4),imu position(3),imu gyro(3),imu acc(3)

std::istringstream ssImuData(sImu_line);//ssImuData得到每行文件的内容

ssImuData >> dStampNSec;//时间戳

//利用循环跳过imu quaternion(4),imu position(3)

for(int i=0;i<7;i++)

ssImuData>>tmp;

ssImuData>>vGyr.x() >> vGyr.y() >> vGyr.z() >> vAcc.x() >> vAcc.y() >> vAcc.z();

// 时间单位为 s

pSystem->PubImuData(dStampNSec, vGyr, vAcc);//PubImuData不需要改变 用来将不同时刻下的IMU数据放进VINS系统

usleep(5000*nDelayTimes);//休眠??

}

fsImu.close();

}

//把相机的特征点放进VINS---里面用到的PubSimImageData函数要在system.cpp写入

void PubImageData()

{

string sImage_file = "/home/nnz/data/vio/bin/cam_pose.txt"; //相机相关数据的路径

//

//包含时间戳的文件

cout << "1 PubImageData start sImage_file: " << sImage_file << endl;

ifstream fsImage;//文件流对象

fsImage.open(sImage_file.c_str());

if (!fsImage.is_open())

{

cerr << "Failed to open image file! " << sImage_file << endl;

return;

}

std::string sImage_line;

double dStampNSec;

string sImgFileName;

int n=0;

// cv::namedWindow("SOURCE IMAGE", CV_WINDOW_AUTOSIZE);

//这个循环是遍历所有的相机

while (std::getline(fsImage, sImage_line) && !sImage_line.empty())//sImage_line是cam_pose每行的数据流

{

std::istringstream ssImgData(sImage_line);//是cam_pose每行的内容

ssImgData >> dStampNSec; //读入时间戳

cout<<"cam time: "<<fixed<<dStampNSec<<endl;

// all_points_ 文件存储的是house模型的线特征,每行4个数,对应该线两端点在归一化平面的坐标

//all_points_ 文件每行的内容是 x, y, z, 1, u, v 这里的u v是归一化下的x ,y 不是像素坐标

//在函数PubSimImageData中会算出具体特征点的像素坐标

string all_points_file_name = "/home/nnz/data/vio/bin/keyframe/all_points_" + to_string(n)+ ".txt"; //第n个相机对应的观测数据的文件名

cout<<"points_file: "<<all_points_file_name<<endl;

vector<cv::Point2f> FeaturePoints;//容器FeaturePoints存放一个相机的特征点(归一化坐标)

std::ifstream f;

f.open(all_points_file_name);

//这个循环是遍历每个相机的特征点信息

// file content in each line: x, y, z, 1, u, v

//经过这个循环把all_points_的特征点都放在FeaturePoints了

while(!f.eof())

{

std::string s;

std::getline(f,s);//得到all_points_的文件流s

// 一行两个点连成线,获取每行点判断一下是否之前获取过

if(!s.empty())

{

std::stringstream ss;//

ss << s;//ss得到每行的内容

double tmp;//跳过 x y z 1

for(int i=0;i<4;i++)

ss>>tmp;

float px,py;

ss >> px;

ss >> py;

cv::Point2f pt( px, py);//归一化坐标

FeaturePoints.push_back(pt);

}

}

// cout << "All points:" << endl;

// for(auto point : FeaturePoints){

// cout << point << " ";

// }

// cout << endl;

pSystem->PubSimImageData(dStampNSec, FeaturePoints);//把每一个图片的特征点 放进VINS系统里

usleep(50000*nDelayTimes);

n++;

}

fsImage.close();

}

下面的PubSimImageData函数里没有计算特征点速度,当然自己写了带有计算速度的函数,但是程序有问题,也有可能是我的原理有问题。QAQ

我认为特征点速度是(不同相机下的同一特征点像素位置差) / (相机间的时间差)

在system.cpp中:

void System::PubSimImageData(double dStampSec, const vector<cv::Point2f> &FeaturePoints)

{

if (!init_feature)//

{

cout << "1 PubImageData skip the first detected feature, which doesn't contain optical flow speed" << endl;

init_feature = 1;

return;

}

if (first_image_flag)//

{

cout << "2 PubImageData first_image_flag" << endl;

first_image_flag = false;

first_image_time = dStampSec;

last_image_time = dStampSec;

return;

}

// detect unstable camera stream 发现时间戳不连续甚至倒退,提示重新输入

if (dStampSec - last_image_time > 1.0 || dStampSec < last_image_time)

{

cerr << "3 PubImageData image discontinue! reset the feature tracker!" << endl;

first_image_flag = true;

last_image_time = 0;

pub_count = 1;

return;

}

last_image_time = dStampSec;

// frequency control 控制频率设定小于某一阈值

// if (round(1.0 * pub_count / (dStampSec - first_image_time)) <= FREQ)

// {

// PUB_THIS_FRAME = true;

// // reset the frequency control TODO question:若当前连续图像序列的频率与 FREQ=10 误差在一定范围内重置?

// if (abs(1.0 * pub_count / (dStampSec - first_image_time) - FREQ) < 0.01 * FREQ)

// {

// first_image_time = dStampSec;

// pub_count = 0;

// }

// }

// else

// {

// PUB_THIS_FRAME = false;

// }

PUB_THIS_FRAME = true;

TicToc t_r;

// cout << "3 PubImageData t : " << dStampSec << endl;

// TODO Bookmark:获取图像特征点

// trackerData[0].readImage(img, dStampSec);

// trackerData[0].readPoints(FeaturePoints, dStampSec);

// for (unsigned int i = 0;; i++)

// {

// bool completed = false;

// completed |= trackerData[0].updateID(i);

//

// if (!completed)

// break;

// }

if (PUB_THIS_FRAME)

{

pub_count++;//pub进VINS的相机的个数

shared_ptr<IMG_MSG> feature_points(new IMG_MSG());

//这里的 IMG_MSG 的数据结构如下

/* struct IMG_MSG {

double header;

vector<Vector3d> points;//相机下的3d点

vector<int> id_of_point;//点对应的id

vector<float> u_of_point;//像素u

vector<float> v_of_point;//像素v

vector<float> velocity_x_of_point;//u的速度

vector<float> velocity_y_of_point;//v的速度

};*/

feature_points->header = dStampSec;//

vector<set<int>> hash_ids(NUM_OF_CAM);

//这里其实默认是1

for (int i = 0; i < NUM_OF_CAM; i++)

{

// auto &un_pts = trackerData[i].cur_un_pts;// 去畸变的归一化图像坐标

// auto &cur_pts = trackerData[i].cur_pts;// 当前追踪到的特征点

// auto &ids = trackerData[i].ids;

// auto &pts_velocity = trackerData[i].pts_velocity;

//遍历相机的所有特征点

for (unsigned int j = 0; j < FeaturePoints.size(); j++)

{

// if (trackerData[i].track_cnt[j] > 1)

// {

// int p_id = ids[j];

int p_id = j;

hash_ids[i].insert(p_id);

double x = FeaturePoints[j].x;

double y = FeaturePoints[j].y;

double z = 1;

feature_points->points.push_back(Vector3d(x, y, z));

feature_points->id_of_point.push_back(p_id * NUM_OF_CAM + i);

// feature_points->u_of_point.push_back(cur_pts[j].x); // 像素坐标

// feature_points->v_of_point.push_back(cur_pts[j].y);

// feature_points->velocity_x_of_point.push_back(pts_velocity[j].x);

// feature_points->velocity_y_of_point.push_back(pts_velocity[j].y);

cv::Point2f pixel_point;//特征点对应的像素坐标

pixel_point.x = 460 * x + 255;

pixel_point.y = 460 * y + 255;

feature_points->u_of_point.push_back(pixel_point.x); // 像素坐标

feature_points->v_of_point.push_back(pixel_point.y);

这里默认速度为0不考虑

feature_points->velocity_x_of_point.push_back(0);

feature_points->velocity_y_of_point.push_back(0);

// }

}

// skip the first image; since no optical speed on frist image

if (!init_pub)

{

cout << "4 PubImage init_pub skip the first image!" << endl;

init_pub = 1;

}

else

{

m_buf.lock();

feature_buf.push(feature_points);

// cout << "5 PubImage t : " << fixed << feature_points->header

// << " feature_buf size: " << feature_buf.size() << endl;

m_buf.unlock();

con.notify_one();

}

}

}

}

system.h中:加入

void PubSimImageData(double dStampSec, const vector<cv::Point2f> &FeaturePoints);

这里我给出自己写的带有计算特征点速度的PubSimImageData函数,但是有问题,网友们有解决方案的可以Q我

void System::PubsimuImageData(double dStampSec, const vector<cv::Point2f> &FeaturePoints)

{

/*if (!init_feature)

{

cout << "1 PubImageData skip the first detected feature, which doesn't contain optical flow speed" << endl;

init_feature = 1;

return;

}*/

/*if (first_image_flag)

{

cout << "2 PubImageData first_image_flag" << endl;

first_image_flag = false;

first_image_time = dStampSec;

last_image_time = dStampSec;

return;

}*/

// detect unstable camera stream

if (dStampSec - last_image_time > 1.0 || dStampSec < last_image_time)

{

cerr << "3 PubImageData image discontinue! reset the feature tracker!" << endl;

first_image_flag = true;

last_image_time = 0;

pub_count = 1;

return;

}

last_image_time = dStampSec;

/*// frequency control

if (round(1.0 * pub_count / (dStampSec - first_image_time)) <= FREQ)

{

PUB_THIS_FRAME = true;

// reset the frequency control

if (abs(1.0 * pub_count / (dStampSec - first_image_time) - FREQ) < 0.01 * FREQ)

{

first_image_time = dStampSec;

pub_count = 0;

}

}

else

{

PUB_THIS_FRAME = false;

}*/

PUB_THIS_FRAME=true;

TicToc t_r;

/*// cout << "3 PubImageData t : " << dStampSec << endl;

for (unsigned int i = 0;; i++)

{

bool completed = false;

completed |= trackerData[0].updateID(i);

if (!completed)

break;

}*/

if (PUB_THIS_FRAME)

{

pub_count++;//读入的相机数据++

/*struct IMG_MSG {

double header;

vector<Vector3d> points;

vector<int> id_of_point;

vector<float> u_of_point;

vector<float> v_of_point;

vector<float> velocity_x_of_point;

vector<float> velocity_y_of_point;

};*/

shared_ptr<IMG_MSG> feature_points(new IMG_MSG());

feature_points->header = dStampSec;

vector<set<int>> hash_ids(NUM_OF_CAM);//NUM_OF_CAM是1,因为本函数是对一张图片的数据作处理

//遍历相机

for (int i = 0; i < NUM_OF_CAM; i++)

{

/* auto &un_pts = trackerData[i].cur_un_pts;//去畸变的归一化图像坐标

auto &cur_pts = trackerData[i].cur_pts;//当前追踪到的特征点

auto &ids = trackerData[i].ids;//id

auto &pts_velocity = trackerData[i].pts_velocity;//点的速度??*/

//遍历当前相机所有特征点

//获得图像之间的时间间隔

tmp_time[cam_num-1]=dStampSec;

for(int k=0;k<cam_num;k++)

{

if(k==0)

delta_time[k]=tmp_time[k];

else

delta_time[k]=dStampSec-tmp_time[k-1];

}

if(dStampSec==0)//相机第一个的时候 没有速度

{

for (unsigned int j = 0; j < FeaturePoints.size(); j++)

{

if (trackerData[i].track_cnt[j] > 1)//

{

/*int p_id = ids[j];

hash_ids[i].insert(p_id);

double x = un_pts[j].x;

double y = un_pts[j].y;*/

double x=FeaturePoints[j].x;//归一化下的x

double y=FeaturePoints[j].y;//归一化下的y

double z = 1;

feature_points->points.push_back(Vector3d(x, y, z));//把特征点的归一化坐标放进去

feature_points->id_of_point.push_back(j * NUM_OF_CAM + i);//特征点在相机中的id

/*feature_points->u_of_point.push_back(cur_pts[j].x); //像素坐标

feature_points->v_of_point.push_back(cur_pts[j].y);*/

cv::Point2f pixel_p;//存放特征点(归一化)的像素坐标

//公式: u=fx*x+cx 这里的x y是归一化下的x y

// v=fy*y+cy

pixel_p.x=460*x+255;

pixel_p.y=460*y+255;

feature_points->u_of_point.push_back(pixel_p.x); //像素坐标

feature_points->v_of_point.push_back(pixel_p.y);

feature_points->velocity_x_of_point.push_back(0);

feature_points->velocity_y_of_point.push_back(0);

}

}

}

else

{

for (unsigned int j = 0; j < FeaturePoints.size(); j++)

{

if (trackerData[i].track_cnt[j] > 1)//

{

/*int p_id = ids[j];

hash_ids[i].insert(p_id);

double x = un_pts[j].x;

double y = un_pts[j].y;*/

double x=FeaturePoints[j].x;//归一化下的x

double y=FeaturePoints[j].y;//归一化下的y

double z = 1;

feature_points->points.push_back(Vector3d(x, y, z));//把特征点的归一化坐标放进去

feature_points->id_of_point.push_back(j * NUM_OF_CAM + i);//特征点在相机中的id

/*feature_points->u_of_point.push_back(cur_pts[j].x); //像素坐标

feature_points->v_of_point.push_back(cur_pts[j].y);*/

cv::Point2f pixel_p;//存放特征点(归一化)的像素坐标

//公式: u=fx*x+cx 这里的x y是归一化下的x y

// v=fy*y+cy

pixel_p.x=460*x+255;

pixel_p.y=460*y+255;

feature_points->u_of_point.push_back(pixel_p.x); //像素坐标

feature_points->v_of_point.push_back(pixel_p.y);

vector<double> tmp_pixel_x;

tmp_pixel_x[j]=pixel_p.x;

vector<double> tmp_pixel_y;

tmp_pixel_y[j]=pixel_p.y;

double vx=0,vy=0;

vx=(tmp_features[j].x-tmp_pixel_x[j])/delta_time[i];

vy=(tmp_features[j].y-tmp_pixel_y[j])/delta_time[i];

feature_points->velocity_x_of_point.push_back(vx);

feature_points->velocity_y_of_point.push_back(vy);

}

}

}

//}

// skip the first image; since no optical speed on frist image

if (!init_pub)

{

cout << "4 PubImage init_pub skip the first image!" << endl;

init_pub = 1;

}

else

{

m_buf.lock();

feature_buf.push(feature_points);

// cout << "5 PubImage t : " << fixed << feature_points->header

// << " feature_buf size: " << feature_buf.size() << endl;

m_buf.unlock();

con.notify_one();

}

//获得每个图片的特征点(像素坐标 保存上一次的特征点,用来计算速度,不知道这样写对不对,逻辑上应该没啥问题)

for(int n=0;n<FeaturePoints.size();n++)

{

double tmp_x=0,tmp_y=0;

tmp_x= FeaturePoints[n].x;

tmp_y= FeaturePoints[n].y;

tmp_features[n].x=460*tmp_x+255;

tmp_features[n].y=460*tmp_y+255;

}

cam_num++;

}

}

}

这里用到了一些全局变量:

vector<double> delta_time;//图片之间的时间间隔

vector<double> tmp_time;//用来存放每个图片的时间戳,用来算图片间的时间间隔

vector<double> tmp_pixel_x;//用来存放上一张图片的的像素坐标 来计算速度

vector<double> tmp_pixel_y;//

vector<cv::Point2f> tmp_features;//存放上一图片特征点,方便计算速度

int cam_num=1;//相机个数

二、对不同大小噪声的IMU数据和相机数据仿真

1无噪声

下面是中值积分法的加速度与角速度的代码:

//midOmega=(imudata[i].imu_gyro-imudata[i-1].imu_gyro_bias+imudata[i-1].imu_gyro-imudata[i-1].imu_gyro_bias)/2;

midOmega=(imudata[i].imu_gyro+imudata[i-1].imu_gyro)/2;//无噪声

// Eigen::Vector3d acc_w =0.5*( Qwbk * (imudata[i-1].imu_acc-imudata[i-1].imu_acc_bias) + gw +Qwbk1 * (imupose.imu_acc-imudata[i-1].imu_acc_bias) + gw );

Eigen::Vector3d acc_w =0.5*( Qwbk * (imudata[i-1].imu_acc) + gw +Qwbk1 * (imupose.imu_acc) + gw );//无噪声

2有噪声

在无噪声的代码中把无噪声的加上注释,把有噪声的注释取消即可

在第二讲的源代码的param.h文件中修改噪声大小

1、小噪声

double gyro_bias_sigma = 1.0e-7; 陀螺仪bias随机游走噪声 bias的导数服从高斯分布

double acc_bias_sigma = 0.000001; 加速度bias随机游走噪声 bias的导数服从高斯分布

double gyro_noise_sigma = 0.00015; 陀螺仪高斯白噪声 // rad/s * 1/sqrt(hz)

double acc_noise_sigma = 0.00019; 加速度高斯白噪声 // m/(s^2) * 1/sqrt(hz)

2、加大一点噪声

double gyro_bias_sigma = 1.0e-6; 陀螺仪bias随机游走噪声 bias的导数服从高斯分布

double acc_bias_sigma = 0.00001; 加速度bias随机游走噪声 bias的导数服从高斯分布

double gyro_noise_sigma = 0.0015; 陀螺仪高斯白噪声 // rad/s * 1/sqrt(hz)

double acc_noise_sigma = 0.0019; 加速度高斯白噪声 // m/(s^2) * 1/sqrt(hz)

3、再加大点噪声

double gyro_bias_sigma = 1.0e-5; 陀螺仪bias随机游走噪声 bias的导数服从高斯分布

double acc_bias_sigma = 0.0001; 加速度bias随机游走噪声 bias的导数服从高斯分布

double gyro_noise_sigma = 0.015; 陀螺仪高斯白噪声 // rad/s * 1/sqrt(hz)

double acc_noise_sigma = 0.019; 加速度高斯白噪声 // m/(s^2) * 1/sqrt(hz)

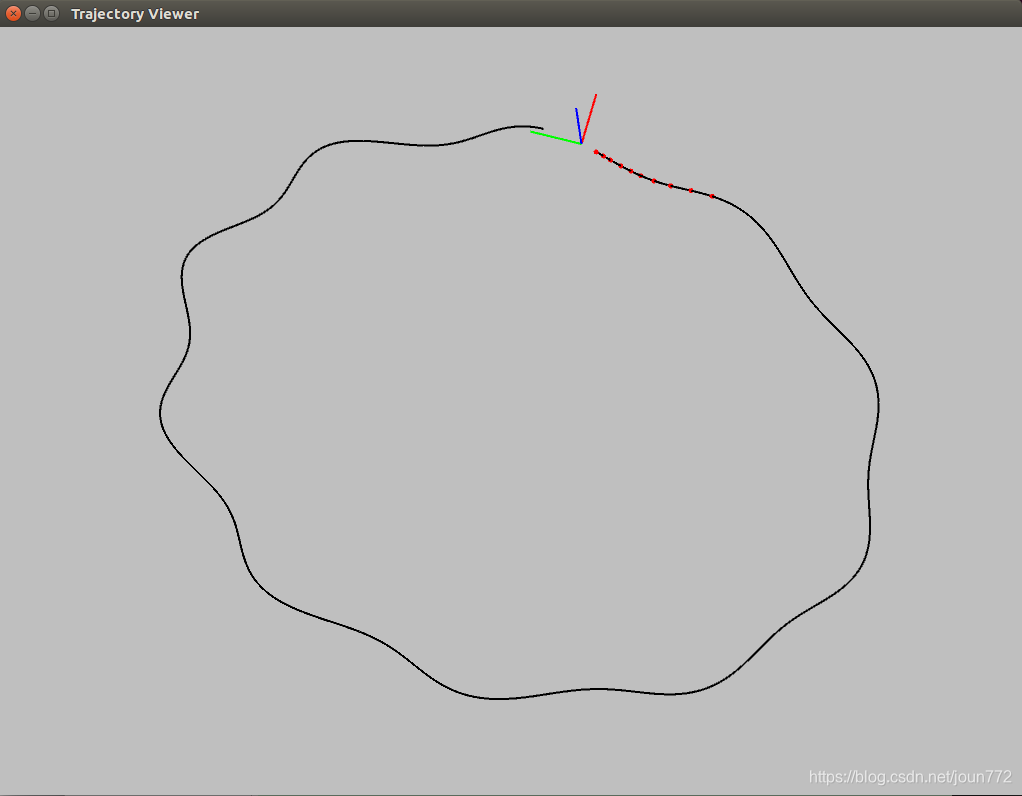

总结:从图可以看出,噪声越大,效果越差。