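For a statement-by-statement explanation of the code, see the article "MySQL-JDBC详细介绍" (a detailed introduction to MySQL JDBC); using JDBC with Hive differs very little from using it with MySQL.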

II. Add the config file and the logging configuration

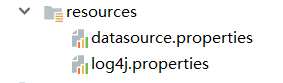

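- Create a resources directory containing the config file (datasource.properties, the name that BaseConfig loads) and the log4j configuration file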

driver=org.apache.hive.jdbc.HiveDriver

url=jdbc:hive2://192.168.221.140:10000/default

username=root

log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender

log4j.appender.stdout.layout=org.apache.log4j.PatternLayout

log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n

log4j.appender.logfile=org.apache.log4j.FileAppender

log4j.appender.logfile.File=target/hadoop.log

log4j.appender.logfile.layout=org.apache.log4j.PatternLayout

log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n
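The first three settings above (driver, url, username) are plain `java.util.Properties` entries. As a minimal sketch of how such a file is parsed and how a missing key falls back to a default, the class below loads the same text from a string instead of a file. `PropertiesDemo` and `parse` are hypothetical names used only for illustration.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical demo class, not part of the original project.
class PropertiesDemo {

    // Parses properties text the same way Properties.load parses a file.
    static Properties parse(String text) {
        Properties pro = new Properties();
        try {
            pro.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return pro;
    }

    public static void main(String[] args) {
        Properties pro = parse(
                "driver=org.apache.hive.jdbc.HiveDriver\n"
              + "url=jdbc:hive2://192.168.221.140:10000/default\n"
              + "username=root\n");
        // Only the first '=' separates key and value, so the colons in the url are kept.
        System.out.println(pro.getProperty("url"));
        // password is absent, so the supplied default (an empty string) is returned.
        System.out.println("[" + pro.getProperty("password", "") + "]");
    }
}
```

Because the password key is absent from the file, any code that reads it with a default of `""` will connect with an empty password.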

III. Resource initialization class

package cn.kgc.hive.jdbc.hdbc;

import java.io.FileNotFoundException;

import java.io.FileReader;

import java.io.IOException;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.Properties;

import java.util.regex.Matcher;

import java.util.regex.Pattern;

public class BaseConfig {

class Config{

private String driver;

private String url;

private String username;

private String password;

}

private Config config;

private boolean valid(String url){

Pattern p = Pattern.compile("jdbc:\\w+://((\\d{1,3}\\.){3}\\d{1,3}|\\w+):\\d{1,5}/\\w+");//expected shape: jdbc:<subprotocol>://<IPv4 address or host name>:<port>/<database>

Matcher m = p.matcher(url);

return m.matches();

}

private void init() throws Exception {

String path = Thread.currentThread().getContextClassLoader().getResource("datasource.properties").getPath();

Properties pro = new Properties();

try (FileReader reader = new FileReader(path)) {//close the file once the properties are loaded

pro.load(reader);

}

String url = pro.getProperty("url");//url is required

if (null==url||!valid(url)){

throw new Exception("invalid url exception");

}

config = new Config();

config.url = url;

config.driver = pro.getProperty("driver","org.apache.hive.jdbc.HiveDriver");//fall back to the Hive driver, the only JDBC driver in the pom

config.username = pro.getProperty("username","root");

config.password= pro.getProperty("password","");

}

{

try {

init();

Class.forName(config.driver);

} catch (Exception e) {

e.printStackTrace();

}

}

Connection getCon() throws SQLException {

return DriverManager.getConnection(config.url,config.username,config.password);

}

void close(AutoCloseable...closeables){

for (AutoCloseable closeable : closeables) {

if (null!=closeable){

try {

closeable.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

}

}
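To see what the `valid` check accepts, the following sketch runs the same regular expression against a few URLs. `UrlCheckDemo`, the host name `master`, and `node1.example.com` are hypothetical and only serve the illustration.

```java
import java.util.regex.Pattern;

// Hypothetical demo class; the pattern is copied from BaseConfig.valid.
class UrlCheckDemo {

    private static final Pattern URL = Pattern.compile(
            "jdbc:\\w+://((\\d{1,3}\\.){3}\\d{1,3}|\\w+):\\d{1,5}/\\w+");

    // matches() requires the whole string to fit the pattern.
    static boolean valid(String url) {
        return URL.matcher(url).matches();
    }

    public static void main(String[] args) {
        // IPv4 address, port and database: accepted
        System.out.println(valid("jdbc:hive2://192.168.221.140:10000/default"));   // true
        // single-word host name: accepted
        System.out.println(valid("jdbc:hive2://master:10000/default"));            // true
        // no database segment: rejected
        System.out.println(valid("jdbc:hive2://192.168.221.140:10000"));           // false
        // dotted host name: rejected, because \w+ does not match '.'
        System.out.println(valid("jdbc:hive2://node1.example.com:10000/default")); // false
    }
}
```

The pattern is therefore stricter than JDBC itself: fully qualified host names, or anything appended after the database name, would need a looser expression.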

IV. SQL execution class

package cn.kgc.hive.jdbc.hdbc;

import java.io.BufferedReader;

import java.io.FileReader;

import java.io.IOException;

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.List;

public class BaseDao extends BaseConfig {

private PreparedStatement getPst(Connection con,String sql,Object...params) throws SQLException {

PreparedStatement pst = con.prepareStatement(sql);

if (params.length>0){

for (int i = 0; i < params.length; i++) {

pst.setObject(i+1,params[i]);

}

}

return pst;

}

public Result exeNonQuery(String sql,Object...params){

Connection con =null;

PreparedStatement pst =null;

try {

con = getCon();

pst = getPst(con,sql,params);

pst.execute();

return Result.succeed();

} catch (SQLException e) {

return Result.fail();

}finally {

close(pst,con);

}

}

public Result exeQuery(String sql,Object...params){

Connection con =null;

PreparedStatement pst = null;

ResultSet rst = null;

try {

con=getCon();

pst = getPst(con,sql,params);

rst = pst.executeQuery();

List<List<String>> table = null;

if (null!=rst&&rst.next()){

table = new ArrayList<>();

final int COL = rst.getMetaData().getColumnCount();

do{

List<String> row = new ArrayList<>(COL);

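for (int i = 1; i <= COL; i++) {//JDBC columns are 1-based, so the last column is index COL

Object value = rst.getObject(i);

row.add(null==value ? null : value.toString());//a NULL column stays null instead of throwing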

}

table.add(row);

}while (rst.next());

}

return Result.succeed(table);

} catch (SQLException e) {

return Result.fail();

}finally {

close(rst,pst,con);

}

}

public String readSql(String...paths) throws Exception {

String path = paths.length==0 ? "sql/sql.sql" : paths[0];

StringBuilder builder = new StringBuilder();

try (BufferedReader reader = new BufferedReader(new FileReader(path))) {//the reader is closed automatically

String line;

while (null != (line=reader.readLine())){

builder.append(line.trim()).append(" ");//add a space so that adjacent lines do not run together

}

}

return builder.toString();

}

}

4.1 The readSql method above reads the SQL statement from a file, which keeps the calling code easier to read

public String readSql(String...paths) throws Exception {

String path = paths.length==0 ? "sql/sql.sql" : paths[0];

StringBuilder builder = new StringBuilder();

try (BufferedReader reader = new BufferedReader(new FileReader(path))) {//the reader is closed automatically

String line;

while (null != (line=reader.readLine())){

builder.append(line.trim()).append(" ");//add a space so that adjacent lines do not run together

}

}

return builder.toString();

}
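The line-joining step can be tried without a file. The sketch below applies the same trim-and-append logic to an in-memory string; `JoinLinesDemo`, `joinLines`, and the table name `demo_table` are hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;

// Hypothetical demo class mirroring the loop inside readSql.
class JoinLinesDemo {

    // Trims every line and joins the lines with a single space.
    static String joinLines(String text) {
        StringBuilder builder = new StringBuilder();
        try (BufferedReader reader = new BufferedReader(new StringReader(text))) {
            String line;
            while (null != (line = reader.readLine())) {
                builder.append(line.trim()).append(" ");
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return builder.toString();
    }

    public static void main(String[] args) {
        String sql = joinLines("select id, name\n  from demo_table\n where id > 10");
        // prints "[select id, name from demo_table where id > 10 ]"
        System.out.println("[" + sql + "]");
    }
}
```

Note the trailing space after the last line: every line, including the final one, gets a space appended.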

V. Result class

package cn.kgc.hive.jdbc.hdbc;

public abstract class Result<T> {

private boolean err;

private T data;

//Unifies the return format: the constructor is private, so every instance comes from the factory methods below

public static Result fail(){

return new Result(true) {

};

}

//Generic method: it declares its own type parameter

public static <T> Result succeed(T...t){

return new Result(false,t) {

};

}

//Constructor

private Result(boolean err, T...data) {

this.err = err;

this.data = data.length>0?data[0]:null;

}

public T getData() {

return data;

}

}
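The Result class above relies on raw types and never exposes its `err` flag. As one possible alternative, and not the author's version, a fully typed variant could look like the sketch below. `TypedResult` and `isErr` are hypothetical names.

```java
// Hypothetical typed variant of the Result idea; not part of the original code.
final class TypedResult<T> {

    private final boolean err;
    private final T data;

    // Private constructor: instances are created only through the factories.
    private TypedResult(boolean err, T data) {
        this.err = err;
        this.data = data;
    }

    // Failure carries no data.
    static <T> TypedResult<T> fail() {
        return new TypedResult<>(true, null);
    }

    // Success wraps the data (which may itself be null, e.g. for a statement without a result).
    static <T> TypedResult<T> succeed(T data) {
        return new TypedResult<>(false, data);
    }

    boolean isErr() {
        return err;
    }

    T getData() {
        return data;
    }
}

class TypedResultDemo {
    public static void main(String[] args) {
        TypedResult<String> ok = TypedResult.succeed("row");
        TypedResult<String> bad = TypedResult.fail();
        System.out.println(ok.isErr() + " " + ok.getData());
        System.out.println(bad.isErr() + " " + bad.getData());
    }
}
```

With `isErr()` available, a caller can tell a failed statement apart from a query that simply returned no rows, both of which yield `null` data in the original class.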

VI. Test class

package cn.kgc.hive.jdbc.hdbc;//assumed: same package as BaseDao and Result

import java.util.List;

public class Test {

public static void main(String[] args) throws Exception {

BaseDao dao = new BaseDao();

Result<List<List<String>>> tables = dao.exeQuery(dao.readSql());

tables.getData().forEach(row->{

row.forEach(item->{

System.out.print(item+"\t");

});

System.out.println();

});

}

}
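In the test class above, `getData()` returns null when the query fails or returns no rows, so the `forEach` call would throw a NullPointerException. A minimal null-safe way to format the rows, assuming the same `List<List<String>>` shape, is sketched below. `RowPrinterDemo` and `render` are hypothetical names.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper for printing query rows; not part of the original code.
class RowPrinterDemo {

    // Joins each row with tabs and ends it with a newline; a null table renders as "".
    static String render(List<List<String>> table) {
        if (table == null) {
            return "";
        }
        StringBuilder out = new StringBuilder();
        for (List<String> row : table) {
            // String.join writes the text "null" for null elements.
            out.append(String.join("\t", row)).append("\n");
        }
        return out.toString();
    }

    public static void main(String[] args) {
        List<List<String>> table = Arrays.asList(
                Arrays.asList("1", "a"),
                Arrays.asList("2", "b"));
        System.out.print(render(table));
        System.out.print(render(null)); // prints nothing
    }
}
```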

I. Add the pom dependencies

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.11</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${hive.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-auth</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-mapreduce-client-core</artifactId>

<version>${hadoop.version}</version>

</dependency>

</dependencies>