Preface

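- Sharing Velero, an open-source tool for Kubernetes cluster backup, restore, and disaster recovery. This post covers:

- A brief introduction to Velero

- Downloading and installing Velero

- A backup/restore demo and a disaster-recovery test demo

- Analysis of failed restores

- Corrections are welcome wherever my understanding falls short.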

All I ever wanted was to live out the nature that was struggling to break out of me. Why was that so very hard? ------ Hermann Hesse, Demian

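A brief introduction to Velero

Velero is an open-source tool from VMware for safely backing up and restoring Kubernetes clusters, performing disaster recovery, and migrating Kubernetes cluster resources and persistent volumes.

What Velero can do:

- Back up your cluster and restore it in case of loss.
- Migrate cluster resources to other clusters.
- Replicate your production cluster to development and testing clusters.

Velero consists of two parts:

- A server that runs on your cluster (the Velero server)
- A command-line client that runs locally (the velero CLI)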

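When is it appropriate to use Velero instead of etcd's built-in backup/restore? In other words, how do you choose between Velero and etcd snapshot backups?

My personal view:

- etcd snapshot backups suit more severe cluster disasters, for example when all nodes of the etcd cluster are down or the etcd data has been lost or corrupted. When the k8s cluster itself is down, restoring from an etcd backup is fast and has a high success rate, whereas a Velero restore depends on other components and requires the cluster to be up and running.

- Velero suits cluster migration and backing up and restoring a subset of a k8s cluster, for example per namespace. If a namespace is deleted by mistake and its YAML files were never saved, Velero can restore it quickly. Before a system upgrade that touches many API resource objects, you can take a backup and, if the upgrade fails, roll back quickly with Velero.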

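The answer from the Velero project on GitHub:

Etcd's backup/restore tooling is good for recovering from data loss in a single etcd cluster. For example, it is a good idea to take a backup of etcd prior to upgrading etcd itself. For more sophisticated management of your Kubernetes cluster backups and restores, we feel that Velero is generally a better approach. It gives you the ability to throw away an unstable cluster and restore your Kubernetes resources and data into a new cluster, which you can't do easily just by backing up and restoring etcd.

Examples of cases where Velero is useful:

- You don't have access to etcd (for example, you're running on GKE)
- Backing up both Kubernetes resources and persistent volume state
- Cluster migrations
- Backing up a subset of your Kubernetes resources
- Backing up Kubernetes resources that are stored across multiple etcd clusters (for example, if you run a custom apiserver)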

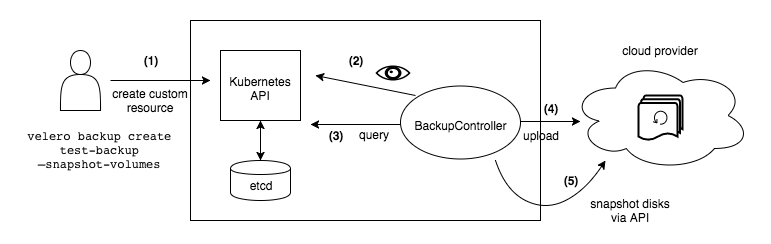

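A brief look at how backup and restore work

For details, see the official documentation; only a short overview is given here.

Every Velero operation (on-demand backup, scheduled backup, restore) is a custom resource, defined with a Kubernetes Custom Resource Definition (CRD) and stored in etcd. Velero also includes controllers that process these custom resources to perform backups, restores, and all related operations.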

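Backup workflow

When you run `velero backup create test-backup`:

- The Velero client makes a call to the Kubernetes API server to create a Backup object.
- The BackupController notices the new Backup object and performs validation.
- The BackupController begins the backup process. It collects the data to back up by querying the API server for resources.
- The BackupController makes a call to the object storage service (for example, AWS S3) to upload the backup file.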

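By default, `velero backup create` makes disk snapshots of any persistent volumes. You can adjust the snapshots by specifying additional flags; run `velero backup create --help` to see the available flags. Snapshots can be disabled with the option `--snapshot-volumes=false`.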
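As a minimal sketch, the options above can be wrapped in a small bash helper that backs up one namespace without volume snapshots. The function name `backup_namespace` is hypothetical; `--include-namespaces`, `--snapshot-volumes=false` and `--wait` are standard `velero backup create` flags.

```bash
#!/usr/bin/env bash
# Hypothetical helper: back up a single namespace without volume snapshots.
# Usage: backup_namespace <backup-name> <namespace>
backup_namespace() {
  local name="$1" ns="$2"
  if [[ -z "$name" || -z "$ns" ]]; then
    echo "usage: backup_namespace <backup-name> <namespace>" >&2
    return 1
  fi
  # --wait blocks until the backup finishes instead of returning immediately.
  velero backup create "$name" \
    --include-namespaces "$ns" \
    --snapshot-volumes=false \
    --wait
}
```

For example, `backup_namespace cadvisor-backup cadvisor` would back up only the cadvisor namespace used in the test later in this post.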

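Restore workflow

When you run `velero restore create`:

- The Velero client makes a call to the Kubernetes API server to create a Restore object.
- The RestoreController notices the new Restore object and performs validation.
- The RestoreController fetches the backup information from the object storage service. It then runs some preprocessing on the backed-up resources to make sure they will work on the new cluster, for example using the backed-up API versions to verify that the restored resources will work on the target cluster.
- The RestoreController starts the restore process, restoring each eligible resource one at a time.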

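By default, Velero performs a non-destructive restore, meaning it does not delete any data on the target cluster. If a resource in the backup already exists in the target cluster, Velero skips that resource. You can configure Velero to use an update policy instead by using the `--existing-resource-policy` restore flag. When this flag is set to `update`, Velero attempts to update the existing resource in the target cluster to match the resource from the backup.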
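A minimal sketch of a restore that uses the update policy, optionally limited to one namespace. The helper name `restore_with_update` is hypothetical; it assumes a Velero release that supports `--existing-resource-policy` (the v1.10 release used in this post does).

```bash
#!/usr/bin/env bash
# Hypothetical helper: restore from a backup and update resources that
# already exist in the cluster, instead of skipping them (the default).
# Usage: restore_with_update <backup-name> [namespace]
restore_with_update() {
  local backup="$1" ns="${2:-}"
  if [[ -z "$backup" ]]; then
    echo "usage: restore_with_update <backup-name> [namespace]" >&2
    return 1
  fi
  local args=(restore create --from-backup "$backup" --existing-resource-policy update)
  # Optionally limit the restore to a single namespace.
  if [[ -n "$ns" ]]; then
    args+=(--include-namespaces "$ns")
  fi
  velero "${args[@]}"
}
```

Building the arguments in an array keeps each flag a separate word, so namespace names are passed through without extra quoting problems.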

Download and installation

Check cluster compatibility here:

https://github.com/vmware-tanzu/velero#velero-compatibility-matrix

Current cluster environment:

```bash
┌──[root@vms100.liruilongs.github.io]-[~]
└─$kubectl version --output=json
{
  "clientVersion": {
    "major": "1",
    "minor": "25",
    "gitVersion": "v1.25.1",
    "gitCommit": "e4d4e1ab7cf1bf15273ef97303551b279f0920a9",
    "gitTreeState": "clean",
    "buildDate": "2022-09-14T19:49:27Z",
    "goVersion": "go1.19.1",
    "compiler": "gc",
    "platform": "linux/amd64"
  },
  "kustomizeVersion": "v4.5.7",
  "serverVersion": {
    "major": "1",
    "minor": "25",
    "gitVersion": "v1.25.1",
    "gitCommit": "e4d4e1ab7cf1bf15273ef97303551b279f0920a9",
    "gitTreeState": "clean",
    "buildDate": "2022-09-14T19:42:30Z",
    "goVersion": "go1.19.1",
    "compiler": "gc",
    "platform": "linux/amd64"
  }
}
```

Installation files:

https://github.com/vmware-tanzu/velero/releases/tag/v1.10.1-rc.1

https://github.com/vmware-tanzu/velero/releases/download/v1.10.1-rc.1/velero-v1.10.1-rc.1-linux-amd64.tar.gz

Client

Installing the client:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$wget --no-check-certificate https://github.com/vmware-tanzu/velero/releases/download/v1.10.1-rc.1/velero-v1.10.1-rc.1-linux-amd64.tar.gz
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$ls
velero-v1.10.1-rc.1-linux-amd64.tar.gz
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$tar -zxvf velero-v1.10.1-rc.1-linux-amd64.tar.gz
velero-v1.10.1-rc.1-linux-amd64/LICENSE
velero-v1.10.1-rc.1-linux-amd64/examples/.DS_Store
velero-v1.10.1-rc.1-linux-amd64/examples/README.md
velero-v1.10.1-rc.1-linux-amd64/examples/minio
velero-v1.10.1-rc.1-linux-amd64/examples/minio/00-minio-deployment.yaml
velero-v1.10.1-rc.1-linux-amd64/examples/nginx-app
velero-v1.10.1-rc.1-linux-amd64/examples/nginx-app/README.md
velero-v1.10.1-rc.1-linux-amd64/examples/nginx-app/base.yaml
velero-v1.10.1-rc.1-linux-amd64/examples/nginx-app/with-pv.yaml
velero-v1.10.1-rc.1-linux-amd64/velero
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero]
└─$cd velero-v1.10.1-rc.1-linux-amd64/
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cp velero /usr/local/bin/
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero version
Client:
        Version: v1.10.1-rc.1
        Git commit: e4d2a83917cd848e5f4e6ebc445fd3d262de10fa
<error getting server version: no matches for kind "ServerStatusRequest" in version "velero.io/v1">
```

The server-version error is expected at this point: the server side has not been installed yet, so the `ServerStatusRequest` CRD does not exist in the cluster.

Configure command completion (the second command turns off colored output in the client):

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero completion bash >/etc/bash_completion.d/velero
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero client config set colorized=false
```
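The client installation steps above can be sketched as reusable bash functions. The function names are hypothetical, the default version and platform simply mirror the release used in this post, and `curl` is swapped in for the `wget` call shown above.

```bash
#!/usr/bin/env bash
# Build the GitHub release download URL for a given version and platform.
velero_release_url() {
  local version="${1:-v1.10.1-rc.1}" arch="${2:-linux-amd64}"
  echo "https://github.com/vmware-tanzu/velero/releases/download/${version}/velero-${version}-${arch}.tar.gz"
}

# Download, unpack and install the velero binary into /usr/local/bin.
install_velero_client() {
  local version="${1:-v1.10.1-rc.1}" arch="${2:-linux-amd64}" tmp rc
  tmp="$(mktemp -d)" || return 1
  curl -fsSL "$(velero_release_url "$version" "$arch")" -o "$tmp/velero.tgz" &&
    tar -xzf "$tmp/velero.tgz" -C "$tmp" &&
    install -m 0755 "$tmp/velero-${version}-${arch}/velero" /usr/local/bin/velero
  rc=$?
  # Clean up the temporary download directory whether or not install succeeded.
  rm -rf "$tmp"
  return "$rc"
}
```

Run `install_velero_client` as root, then set up completion with `velero completion bash >/etc/bash_completion.d/velero` as shown above.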

Server installation

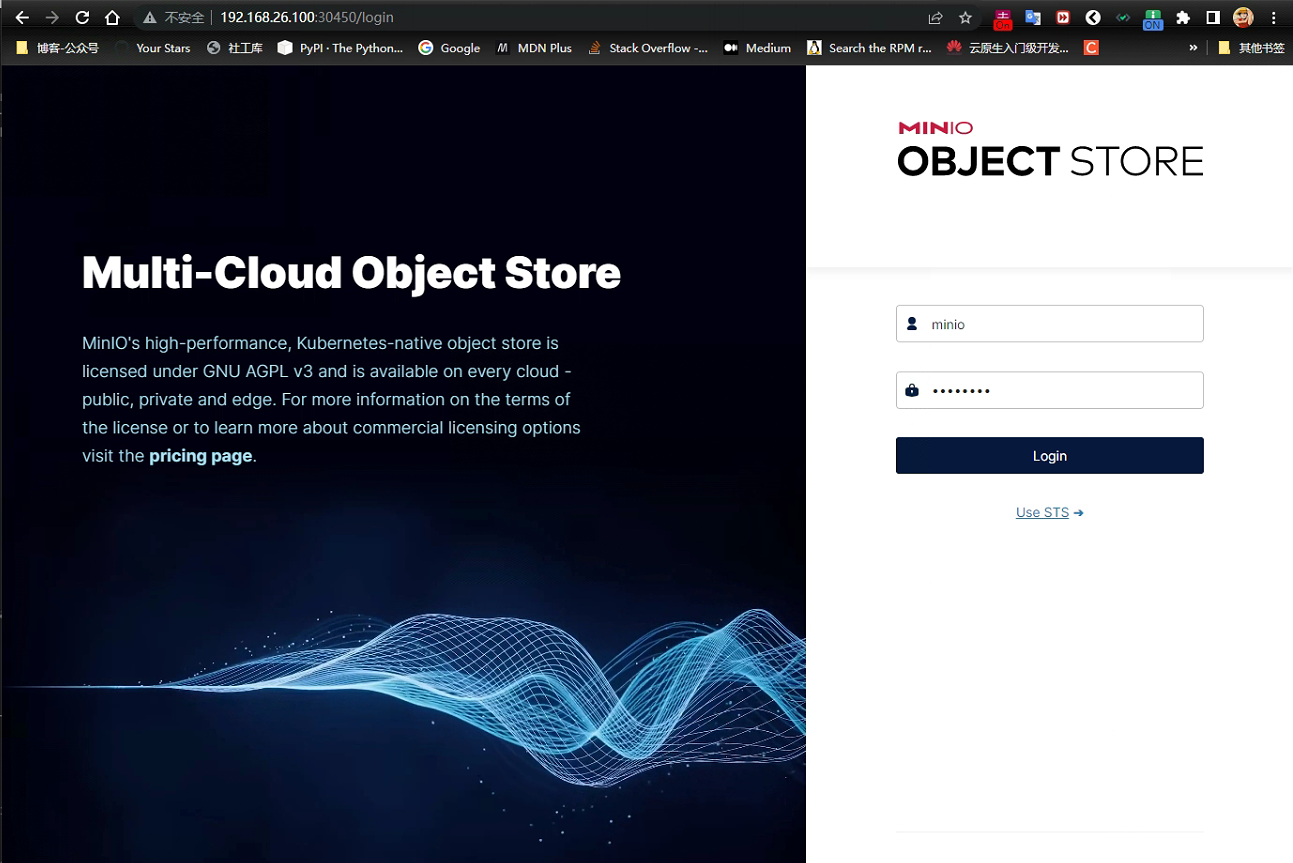

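Along with the server, we need to deploy MinIO, an object storage system that stores the backup files.

Create a Velero-specific credentials file named credentials-velero in your Velero directory; Velero uses it to connect to MinIO: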

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$vim credentials-velero
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cat credentials-velero
[default]
aws_access_key_id = minio
aws_secret_access_key = minio123
```
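A minimal sketch of writing the same credentials file from a script instead of `vim`. The function name `write_velero_credentials` is hypothetical; the key and secret must match `MINIO_ROOT_USER` and `MINIO_ROOT_PASSWORD` in the MinIO deployment.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write the AWS-style credentials file that the
# Velero AWS plugin uses to authenticate against MinIO.
# Usage: write_velero_credentials <file> <access-key> <secret-key>
write_velero_credentials() {
  local file="$1" key="$2" secret="$3"
  if [[ -z "$file" || -z "$key" || -z "$secret" ]]; then
    echo "usage: write_velero_credentials <file> <access-key> <secret-key>" >&2
    return 1
  fi
  # umask 077 makes a newly created file readable only by its owner,
  # since it holds a secret (an existing file keeps its permissions).
  (umask 077 && printf '[default]\naws_access_key_id = %s\naws_secret_access_key = %s\n' \
    "$key" "$secret" > "$file")
}
```

For the setup in this post: `write_velero_credentials ./credentials-velero minio minio123`.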

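Start the server and the local storage service.

Deploying the local storage service

The YAML file below ships with Velero: it is in the client package installed above, and you can find it after extracting the archive (examples/minio/00-minio-deployment.yaml).

This YAML deploys a MinIO instance that is reachable from inside the cluster, and starts a Job that creates the bucket needed for backups in MinIO. If you want to view logs and run `velero describe` commands, the MinIO service must also be exposed outside the cluster.

Modify the YAML: the main changes are switching the Service to NodePort and giving the MinIO console a static port (`--console-address=:9090`).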

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$cat examples/minio/00-minio-deployment.yaml
```

```yaml
# Copyright 2017 the Velero contributors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

---
apiVersion: v1
kind: Namespace
metadata:
  name: velero

---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      component: minio
  template:
    metadata:
      labels:
        component: minio
    spec:
      volumes:
      - name: storage
        emptyDir: {}
      - name: config
        emptyDir: {}
      containers:
      - name: minio
        image: quay.io/minio/minio:latest
        imagePullPolicy: IfNotPresent
        args:
        - server
        - /storage
        - --console-address=:9090
        - --config-dir=/config
        env:
        - name: MINIO_ROOT_USER
          value: "minio"
        - name: MINIO_ROOT_PASSWORD
          value: "minio123"
        ports:
        - containerPort: 9000
        - containerPort: 9090
        volumeMounts:
        - name: storage
          mountPath: "/storage"
        - name: config
          mountPath: "/config"

---
apiVersion: v1
kind: Service
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  # ClusterIP is recommended for production environments.
  # Change to NodePort if needed per documentation,
  # but only if you run Minio in a test/trial environment, for example with Minikube.
  type: NodePort
  ports:
    - port: 9000
      name: api
      targetPort: 9000
      protocol: TCP
    - port: 9099
      name: console
      targetPort: 9090
      protocol: TCP
  selector:
    component: minio

---
apiVersion: batch/v1
kind: Job
metadata:
  namespace: velero
  name: minio-setup
  labels:
    component: minio
spec:
  template:
    metadata:
      name: minio-setup
    spec:
      restartPolicy: OnFailure
      volumes:
      - name: config
        emptyDir: {}
      containers:
      - name: mc
        image: minio/mc:latest
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - "mc --config-dir=/config config host add velero http://minio:9000 minio minio123 && mc --config-dir=/config mb -p velero/velero"
        volumeMounts:
        - name: config
          mountPath: "/config"
```

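Note: the example MinIO YAML uses `emptyDir` volumes. Your node needs enough free space to hold the data being backed up, plus 1 GB. If the node does not have enough space, modify the example YAML to use a Persistent Volume instead of `emptyDir`. Also keep in mind that `emptyDir` data lives only as long as the Pod, so backups stored this way are lost if the MinIO Pod is deleted or rescheduled.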

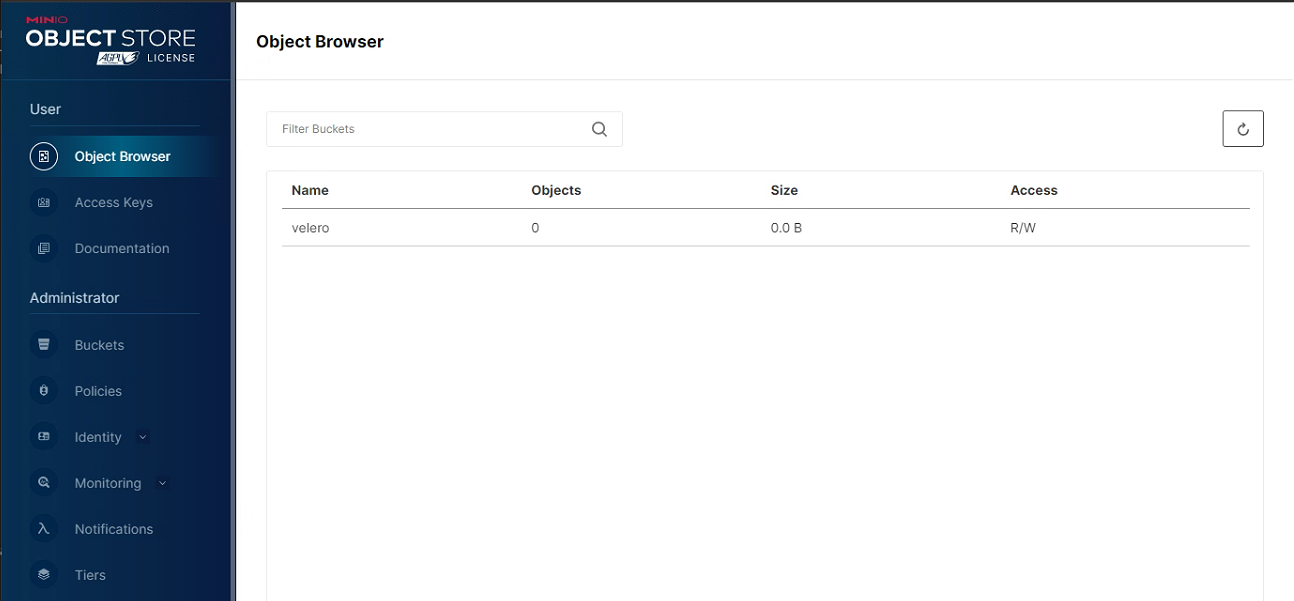

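- bucket: the name of the bucket you created in MinIO
- backup-location-config: replace xxx.xxx.xxx.xxx with the IP address of your MinIO server. Because MinIO runs inside the cluster here, the command below uses its in-cluster Service address, http://minio.velero.svc:9000.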
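A minimal sketch of building the `--backup-location-config` value in a script. The function name `bsl_config` is hypothetical. It assumes the AWS plugin's `publicUrl` key, which lets the CLI fetch logs and details through an externally reachable address (for example the MinIO NodePort) while the server keeps using `s3Url`. Note that in the install command the shell strips the quotes from `s3ForcePathStyle="true"`, so the helper emits plain `true`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the value for `velero install --backup-location-config`.
# $1: S3 endpoint the Velero server uses from inside the cluster
# $2: optional externally reachable URL, passed as publicUrl
bsl_config() {
  local s3url="$1" public="${2:-}"
  local cfg="region=minio,s3ForcePathStyle=true,s3Url=${s3url}"
  if [[ -n "$public" ]]; then
    cfg+=",publicUrl=${public}"
  fi
  echo "$cfg"
}
```

Usage, with placeholders for the node IP and the NodePort that Kubernetes assigned to the `api` port (see `kubectl -n velero get svc minio`): `--backup-location-config "$(bsl_config http://minio.velero.svc:9000 http://<node-ip>:<node-port>)"`.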

Deploying Velero in the cluster

Deployment command:

```bash
velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.2.1 \
  --bucket velero \
  --secret-file ./credentials-velero \
  --use-volume-snapshots=false \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000
```

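If you pull images from a private registry, you can export the YAML with `--dry-run -o yaml`, adjust it (for example, the image addresses), and then apply it. It is also worth checking the plugin's compatibility matrix: v1.2.1 of `velero-plugin-for-aws` used here is an older plugin release than the Velero v1.10 server.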

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.2.1 \
  --bucket velero \
  --secret-file ./credentials-velero \
  --use-volume-snapshots=false \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000 \
  --dry-run -o yaml > velero_deploy.yaml
```
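One way to adjust the exported manifest for a private registry is a `sed` rewrite of the image lines. This is a sketch with a hypothetical function name; it assumes the images appear as `image: velero/...` in `velero_deploy.yaml`. Alternatively, `velero install` accepts `--image` for the server image, and `--plugins` can take a full image reference.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point every `image: velero/...` reference in the
# manifest exported with --dry-run at a private registry.
# Usage: use_private_registry <manifest-file> <registry-prefix>
use_private_registry() {
  local file="$1" registry="${2%/}"   # drop a trailing slash from the prefix
  if [[ ! -f "$file" || -z "$registry" ]]; then
    echo "usage: use_private_registry <manifest-file> <registry-prefix>" >&2
    return 1
  fi
  # Keep a .bak copy, then rewrite only image lines that start with velero/.
  sed -i.bak -E "s#(image:[[:space:]]*)velero/#\1${registry}/velero/#" "$file"
}
```

For example, `use_private_registry velero_deploy.yaml harbor.example.com/library` (the registry address is a placeholder) before running `kubectl apply`.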

Apply the deployment:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$kubectl apply -f velero_deploy.yaml
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource client
..........
BackupStorageLocation/default: attempting to create resource
BackupStorageLocation/default: attempting to create resource client
BackupStorageLocation/default: created
Deployment/velero: attempting to create resource
Deployment/velero: attempting to create resource client
Deployment/velero: created
Velero is installed! ⛵ Use 'kubectl logs deployment/velero -n velero' to view the status.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$
```

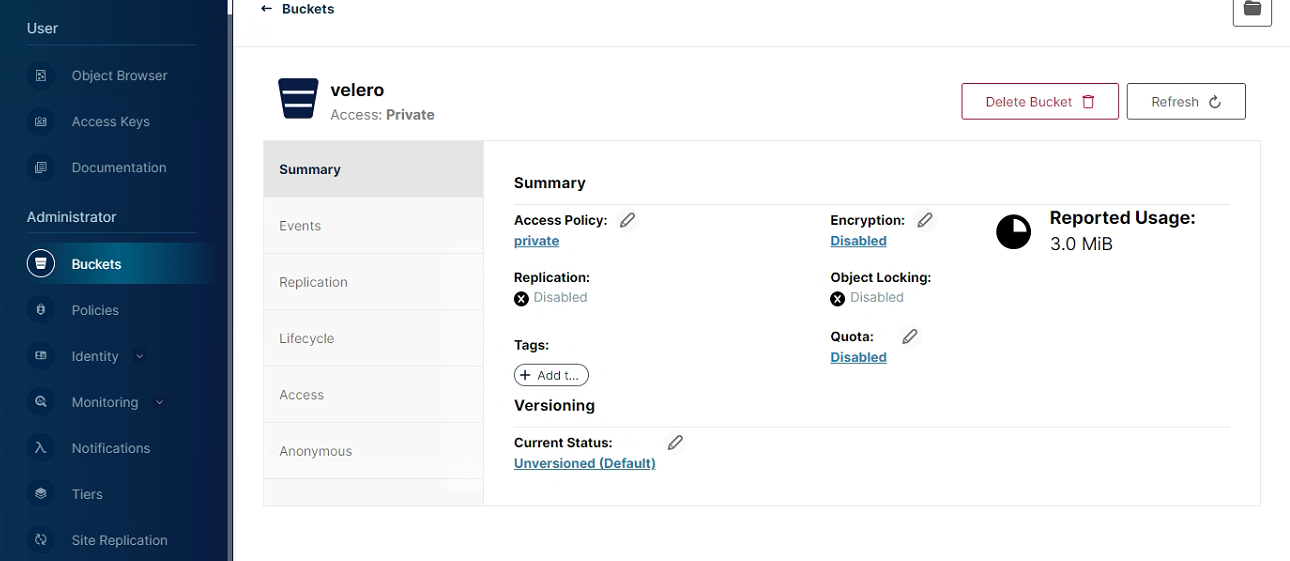

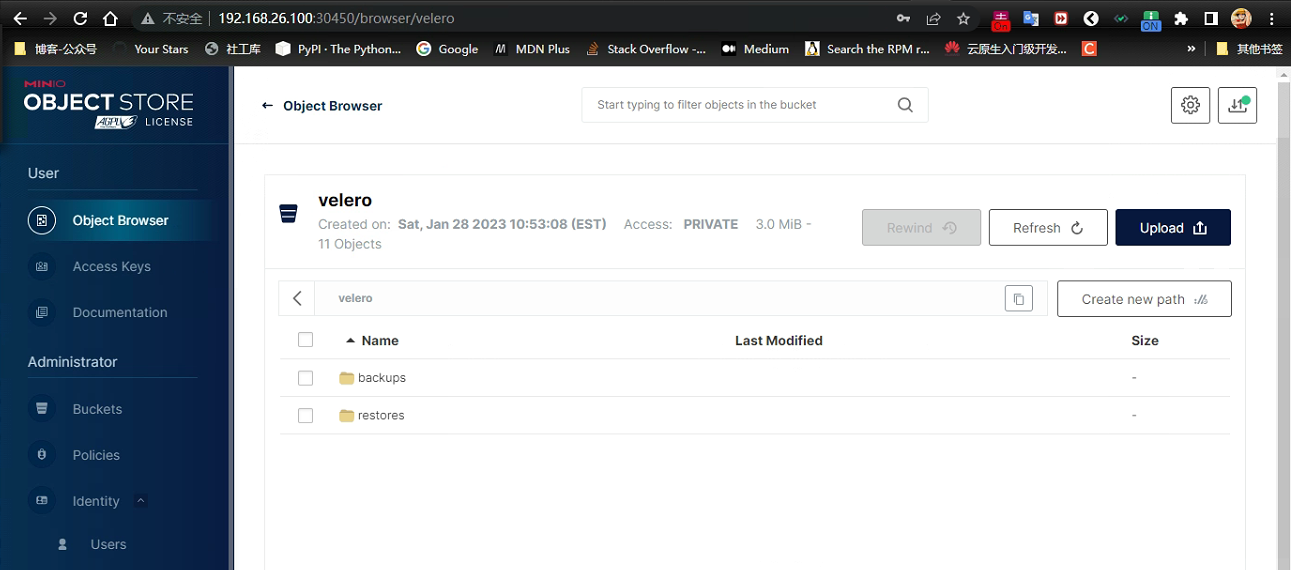

Once deployment finishes, the minio-setup Job automatically creates the bucket that backup files are uploaded to.
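Before the first backup it can help to confirm that Velero can actually reach the bucket. `velero backup-location get` shows this interactively; the sketch below polls the phase of the `default` BackupStorageLocation instead. The function name is hypothetical, and it assumes the phase value `Available` reported by recent Velero versions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a BackupStorageLocation until Velero reports it
# as Available, i.e. the server can reach the MinIO bucket.
# Usage: wait_bsl_available [name] [retries] [interval-seconds]
wait_bsl_available() {
  local name="${1:-default}" retries="${2:-30}" interval="${3:-5}" phase i
  for ((i = 0; i < retries; i++)); do
    phase="$(kubectl -n velero get backupstoragelocation "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [[ "$phase" == "Available" ]]; then
      echo "BackupStorageLocation/$name is Available"
      return 0
    fi
    sleep "$interval"
  done
  echo "BackupStorageLocation/$name not Available (last phase: ${phase:-unknown})" >&2
  return 1
}
```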

Backup

Full and partial backups

On-demand backup

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero backup create velero-demo
Backup request "velero-demo" submitted successfully.
Run `velero backup describe velero-demo` or `velero backup logs velero-demo` for more details.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get backup velero-demo
NAME STATUS ERRORS WARNINGS CREATED EXPIRES STORAGE LOCATION SELECTOR
velero-demo InProgress 0 0 2023-01-28 22:18:45 +0800 CST 29d default <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$
```

Check the backup information:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get backup velero-demo
NAME STATUS ERRORS WARNINGS CREATED EXPIRES STORAGE LOCATION SELECTOR
velero-demo Completed 0 0 2023-01-28 22:18:45 +0800 CST 29d default <none>
```
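Instead of re-running `velero get backup` until the status changes, a script can poll the Backup custom resource (or pass `--wait` to `velero backup create`). This is a sketch with a hypothetical function name; it treats `Completed` as success and `Failed`, `PartiallyFailed` and `FailedValidation` as failure.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a Backup custom resource until it reaches a
# terminal phase.
# Returns 0 on Completed, 1 on a failed phase, 2 if it never finishes.
# Usage: wait_backup <backup-name> [retries] [interval-seconds]
wait_backup() {
  local name="$1" retries="${2:-60}" interval="${3:-10}" phase i
  for ((i = 0; i < retries; i++)); do
    phase="$(kubectl -n velero get backups.velero.io "$name" \
      -o jsonpath='{.status.phase}' 2>/dev/null)"
    case "$phase" in
      Completed) echo "$name: $phase"; return 0 ;;
      Failed|PartiallyFailed|FailedValidation) echo "$name: $phase" >&2; return 1 ;;
    esac
    sleep "$interval"
  done
  echo "$name: still ${phase:-unknown} after $retries checks" >&2
  return 2
}
```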

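Scheduled backups

Create a scheduled backup that runs once a day at midnight (in the Velero server's time zone, which inside the container is usually UTC).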

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero schedule create k8s-backup --schedule="@daily"
Schedule "k8s-backup" created successfully.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get schedule
NAME STATUS CREATED SCHEDULE BACKUP TTL LAST BACKUP SELECTOR PAUSED
k8s-backup Enabled 2023-01-29 00:11:03 +0800 CST @daily 0s n/a <none> false
```
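`--schedule` also accepts a standard five-field cron expression, and `--ttl` sets how long the backups created by the schedule are kept. A minimal sketch with a hypothetical function name that sets both, plus an optional namespace scope:

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a schedule with an explicit cron expression,
# a retention period (TTL) and an optional namespace scope.
# Usage: create_schedule <name> <cron> <ttl> [namespaces]
create_schedule() {
  local name="$1" cron="$2" ttl="$3" namespaces="${4:-}"
  local args=(schedule create "$name" --schedule="$cron" --ttl "$ttl")
  if [[ -n "$namespaces" ]]; then
    # Comma-separated list, e.g. "cadvisor,default".
    args+=(--include-namespaces "$namespaces")
  fi
  velero "${args[@]}"
}
```

For example, `create_schedule k8s-backup '0 3 * * *' 168h0m0s` would back up every day at 03:00 and keep each backup for seven days. Quoting the cron expression matters; unquoted, the shell would expand the asterisks.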

Restore

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero restore create --from-backup velero-demo
Restore request "velero-demo-20230129001615" submitted successfully.
Run `velero restore describe velero-demo-20230129001615` or `velero restore logs velero-demo-20230129001615` for more details.
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get restore
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230129001615 velero-demo InProgress 2023-01-29 00:16:15 +0800 CST <nil> 0 0 2023-01-29 00:16:15 +0800 CST <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$velero get restore
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230129001615 velero-demo Completed 2023-01-29 00:16:15 +0800 CST 2023-01-29 00:17:20 +0800 CST 0 135 2023-01-29 00:16:15 +0800 CST <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/velero/velero-v1.10.1-rc.1-linux-amd64]
└─$
```

Disaster recovery test

Test: deleting a namespace

Current resources in the namespace:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl-ketall -n cadvisor
W0129 00:34:28.299699 126128 warnings.go:70] kubevirt.io/v1 VirtualMachineInstancePresets is now deprecated and will be removed in v2.
W0129 00:34:28.354853 126128 warnings.go:70] metallb.io v1beta1 AddressPool is deprecated, consider using IPAddressPool
NAME NAMESPACE AGE
configmap/kube-root-ca.crt cadvisor 2d4h
pod/cadvisor-5v7hl cadvisor 2d4h
pod/cadvisor-7dnmk cadvisor 2d4h
pod/cadvisor-7l4zf cadvisor 2d4h
pod/cadvisor-dj6dm cadvisor 2d4h
pod/cadvisor-sjpq8 cadvisor 2d4h
serviceaccount/cadvisor cadvisor 2d4h
serviceaccount/default cadvisor 2d4h
controllerrevision.apps/cadvisor-6cc5c5c9cc cadvisor 2d4h
daemonset.apps/cadvisor cadvisor 2d4h
```

Delete the namespace:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl delete ns cadvisor
namespace "cadvisor" deleted
^C
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl delete ns cadvisor --force
Warning: Immediate deletion does not wait for confirmation that the running resource has been terminated. The resource may continue to run on the cluster indefinitely.
Error from server (NotFound): namespaces "cadvisor" not found
```

Check the namespace resources:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl-ketall -n cadvisor
W0129 00:35:25.548656 127598 warnings.go:70] kubevirt.io/v1 VirtualMachineInstancePresets is now deprecated and will be removed in v2.
W0129 00:35:25.581030 127598 warnings.go:70] metallb.io v1beta1 AddressPool is deprecated, consider using IPAddressPool
No resources found.
```

Use the backup above to restore the namespace that was just deleted:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$velero restore create --from-backup velero-demo
Restore request "velero-demo-20230129003541" submitted successfully.
Run `velero restore describe velero-demo-20230129003541` or `velero restore logs velero-demo-20230129003541` for more details.
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$velero get restore
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230129001615 velero-demo Completed 2023-01-29 00:16:15 +0800 CST 2023-01-29 00:17:20 +0800 CST 0 135 2023-01-29 00:16:15 +0800 CST <none>
velero-demo-20230129003541 velero-demo InProgress 2023-01-29 00:35:41 +0800 CST <nil> 0 0 2023-01-29 00:35:41 +0800 CST <none>
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$velero get restore
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230129001615 velero-demo Completed 2023-01-29 00:16:15 +0800 CST 2023-01-29 00:17:20 +0800 CST 0 135 2023-01-29 00:16:15 +0800 CST <none>
velero-demo-20230129003541 velero-demo Completed 2023-01-29 00:35:41 +0800 CST 2023-01-29 00:36:46 +0800 CST 0 135 2023-01-29 00:35:41 +0800 CST <none>
```

Confirm that the namespace resources have been restored:

```bash
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl-ketall -n cadvisor
W0129 00:37:29.787766 130766 warnings.go:70] kubevirt.io/v1 VirtualMachineInstancePresets is now deprecated and will be removed in v2.
W0129 00:37:29.819111 130766 warnings.go:70] metallb.io v1beta1 AddressPool is deprecated, consider using IPAddressPool
NAME NAMESPACE AGE
configmap/kube-root-ca.crt cadvisor 94s
pod/cadvisor-5v7hl cadvisor 87s
pod/cadvisor-7dnmk cadvisor 87s
pod/cadvisor-7l4zf cadvisor 87s
pod/cadvisor-dj6dm cadvisor 87s
pod/cadvisor-sjpq8 cadvisor 87s
serviceaccount/cadvisor cadvisor 88s
serviceaccount/default cadvisor 94s
controllerrevision.apps/cadvisor-6cc5c5c9cc cadvisor 63s
daemonset.apps/cadvisor cadvisor 63s
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$kubectl get all -n cadvisor
Warning: kubevirt.io/v1 VirtualMachineInstancePresets is now deprecated and will be removed in v2.
NAME READY STATUS RESTARTS AGE
pod/cadvisor-5v7hl 1/1 Running 0 2m50s
pod/cadvisor-7dnmk 1/1 Running 0 2m50s
pod/cadvisor-7l4zf 1/1 Running 0 2m50s
pod/cadvisor-dj6dm 1/1 Running 0 2m50s
pod/cadvisor-sjpq8 1/1 Running 0 2m50s
NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
daemonset.apps/cadvisor 5 5 5 5 5 <none> 2m26s
┌──[root@vms100.liruilongs.github.io]-[~/back]
└─$
```
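The test above restores the whole `velero-demo` backup into a cluster where most resources still exist; since the restore is non-destructive, those resources are skipped, which is likely where most of the 135 warnings come from. A narrower option is to restore only the deleted namespace. This is a minimal sketch with a hypothetical function name, using the standard `--include-namespaces` and `--wait` restore flags.

```bash
#!/usr/bin/env bash
# Hypothetical helper: restore only one namespace from an existing backup,
# wait for the restore to finish, then list what came back.
# Usage: restore_namespace <backup-name> <namespace>
restore_namespace() {
  local backup="$1" ns="$2"
  if [[ -z "$backup" || -z "$ns" ]]; then
    echo "usage: restore_namespace <backup-name> <namespace>" >&2
    return 1
  fi
  velero restore create --from-backup "$backup" \
    --include-namespaces "$ns" --wait || return 1
  kubectl get all -n "$ns"
}
```

For the test in this post: `restore_namespace velero-demo cadvisor`.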

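Analysis of failed restores

One point to note: suppose a namespace deletion was started but interrupted, as in the example below, where kubevirt was deleted from the command line but the deletion never completed. Or suppose you performed other operations, such as repeatedly deleting and recreating API resources, that caused problems, and you now want to restore the cluster to its earlier state.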

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$kubectl get ns
NAME STATUS AGE
cadvisor Active 39h
default Active 3d20h
ingress-nginx Active 3d20h
kube-node-lease Active 3d20h
kube-public Active 3d20h
kube-system Active 3d20h
kubevirt Terminating 3d20h
local-path-storage Active 3d20h
metallb-system Active 3d20h
velero Active 40h
```

In this situation, a Velero restore may get stuck in one of two states: InProgress or New.

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$velero get restore
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230130105328 velero-demo InProgress 2023-01-30 10:53:28 +0800 CST <nil> 0 0 2023-01-30 10:53:28 +0800 CST <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$velero get restores
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
velero-demo-20230130161258 velero-demo New <nil> <nil> 0 0 2023-01-30 16:12:58 +0800 CST <none>
```
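To spot this situation from a script rather than by reading the tables, the two checks can be sketched as bash functions (names hypothetical). They read `.status.phase` from the Restore and Namespace objects via jsonpath. The assumption that a restore not yet picked up by the controller may have an empty phase, which `velero get restore` displays as New, is why empty phases are reported too.

```bash
#!/usr/bin/env bash
# Print restores whose phase is still New or InProgress.
# An empty phase is treated as New (not yet processed by the controller).
stuck_restores() {
  kubectl -n velero get restores.velero.io \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.phase}{"\n"}{end}' |
    awk -F'\t' '$2 == "New" || $2 == "InProgress" || $2 == "" {print $1}'
}

# Print namespaces that are stuck in Terminating.
terminating_namespaces() {
  kubectl get namespaces \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.status.phase}{"\n"}{end}' |
    awk -F'\t' '$2 == "Terminating" {print $1}'
}
```

If both functions print something for a long time, that matches the case described here.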

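If the status does not change for a long time, you need to remove the namespace completely with the script below; only then can the restore proceed normally.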

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$velero get restores
NAME BACKUP STATUS STARTED COMPLETED ERRORS WARNINGS CREATED SELECTOR
.............
velero-demo-20230130161258 velero-demo Completed 2023-01-30 20:53:58 +0800 CST 2023-01-30 20:55:20 +0800 CST 0 164 2023-01-30 16:12:58 +0800 CST <none>
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$date
Mon Jan 30 21:02:49 CST 2023
┌──[root@vms100.liruilongs.github.io]-[~/ansible/kubevirt]
└─$
```

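```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/k8s_shell_secript]
└─$cat delete_namespace.sh
#!/bin/bash
# Force-remove a namespace stuck in Terminating by clearing its finalizers
# through the namespace "finalize" API, reached via a local kubectl proxy.
if [ $# -eq 0 ]; then
  echo "Pass the name of the namespace to delete as the first argument."
  exit 1
fi
# Start the proxy in the background and stop it however the script exits.
kubectl proxy --port=30990 &
proxy_pid=$!
trap 'kill "$proxy_pid" 2>/dev/null' EXIT
sleep 2  # give the proxy a moment to start listening
kubectl get namespace "$1" -o json > logging.json
# Delete the line after "finalizers" (assumes a single entry, e.g. "kubernetes").
sed -i '/"finalizers"/{n;d}' logging.json
curl -k -H "Content-Type: application/json" -X PUT --data-binary @logging.json \
  "http://127.0.0.1:30990/api/v1/namespaces/${1}/finalize"
```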

```bash
┌──[root@vms100.liruilongs.github.io]-[~/ansible/k8s_shell_secript]
└─$sh delete_namespace.sh kubevirt
┌──[root@vms100.liruilongs.github.io]-[~/ansible/k8s_shell_secript]
└─$ls
delete_namespace.sh logging.json
```
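After clearing the finalizers, it is worth confirming that the namespace is really gone before checking the stuck restore again. A minimal sketch with a hypothetical function name: `kubectl get --ignore-not-found` prints nothing and exits 0 once the namespace no longer exists, while other API errors still exit non-zero and are simply retried.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until the API server no longer returns a namespace.
# Usage: wait_ns_gone <namespace> [retries] [interval-seconds]
wait_ns_gone() {
  local ns="$1" retries="${2:-30}" interval="${3:-2}" out i
  for ((i = 0; i < retries; i++)); do
    # Empty output with exit status 0 means the namespace no longer exists.
    if out="$(kubectl get namespace "$ns" --ignore-not-found -o name 2>/dev/null)" && [[ -z "$out" ]]; then
      echo "namespace $ns is gone"
      return 0
    fi
    sleep "$interval"
  done
  echo "namespace $ns still present after $retries checks" >&2
  return 1
}
```

For example, run `wait_ns_gone kubevirt` and then `velero get restores` to see whether the restore has moved on.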

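Some content in this post is based on the following references

Copyright of the referenced content belongs to the original authors; please contact me in case of any infringement.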

https://github.com/vmware-tanzu/velero/discussions/3367

https://github.com/vmware-tanzu/velero

https://velero.io/docs/v0.6.0/faq/

https://github.com/vmware-tanzu/helm-charts/blob/main/charts/velero/README.md

https://kubernetes.io/docs/concepts/storage/volumes/#mount-propagation

https://velero.io/docs/v1.10/locations/

https://github.com/replicatedhq/local-volume-provider

https://docs.microfocus.com/doc/SMAX/2021.05/

https://www.minio.org.cn/overview.shtml

https://velero.io/docs/main/contributions/minio/

© 2018-2023 [email protected], All rights reserved. Attribution-NonCommercial-ShareAlike, free to repost (Creative Commons 3.0 license).