题目描述:

代码如下:

from sklearn import datasets

from sklearn import cross_validation

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from sklearn import metrics

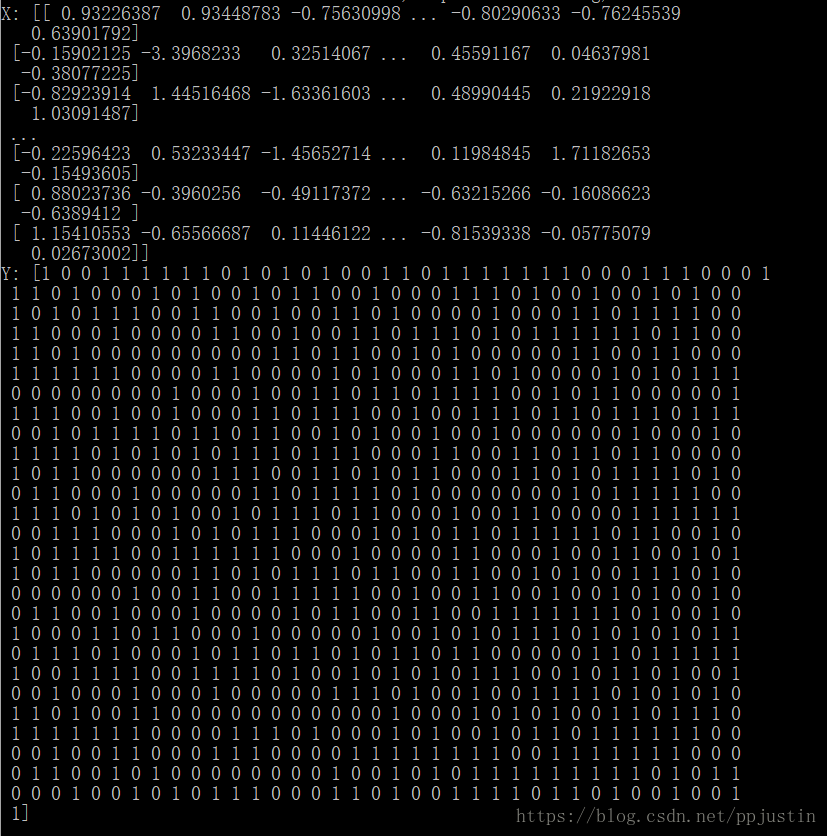

X,y = datasets.make_classification(n_samples = 1000, n_features = 10)

print("X:",X)

print("Y:",y)

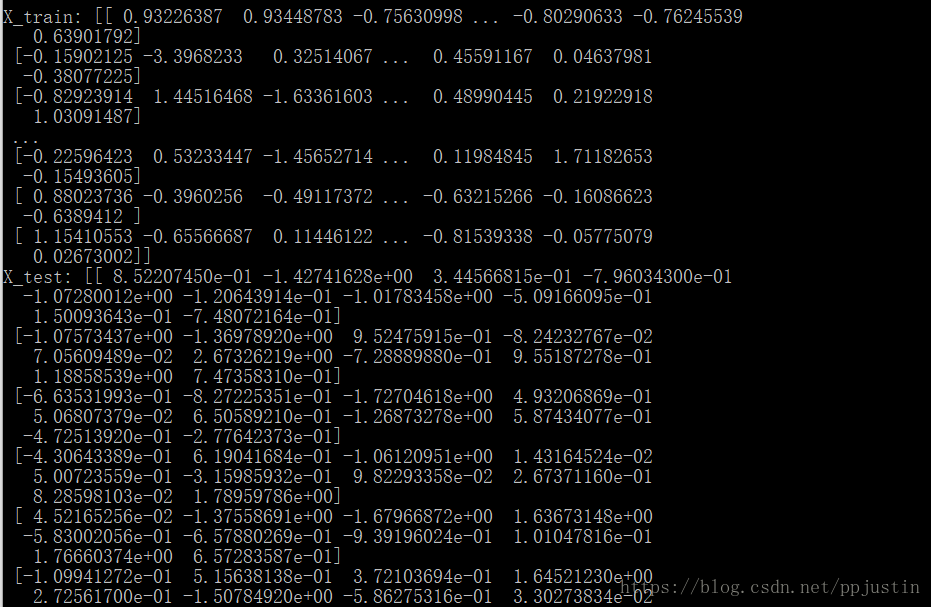

kf = cross_validation.KFold(1000, n_folds = 10, shuffle = True)

for train_index, test_index in kf:

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

print ("\nX_train:",X_train)

print ("X_test:",X_test)

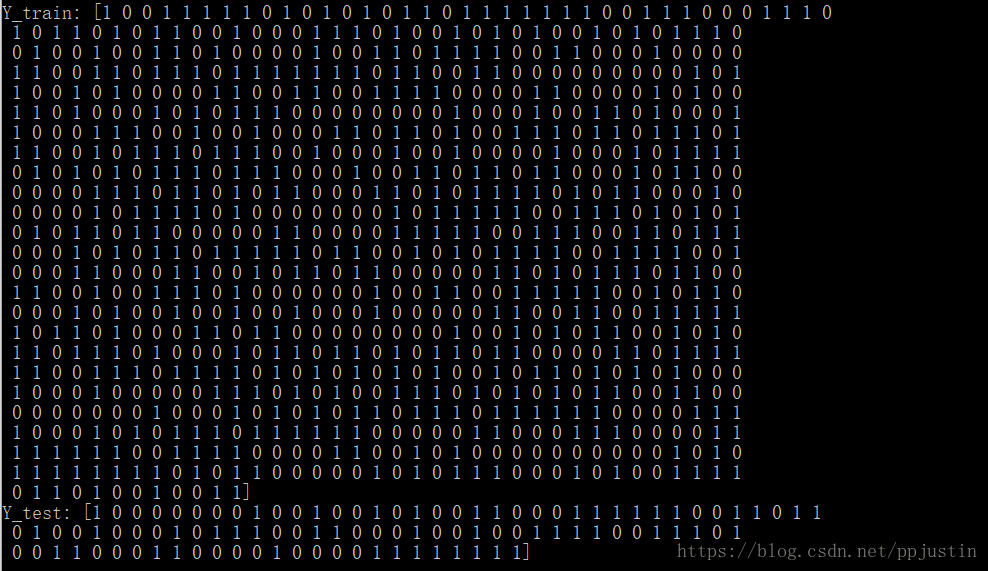

print ("Y_train:",y_train)

print ("Y_test:",y_test)

#Gaussian 朴素贝叶斯方法:

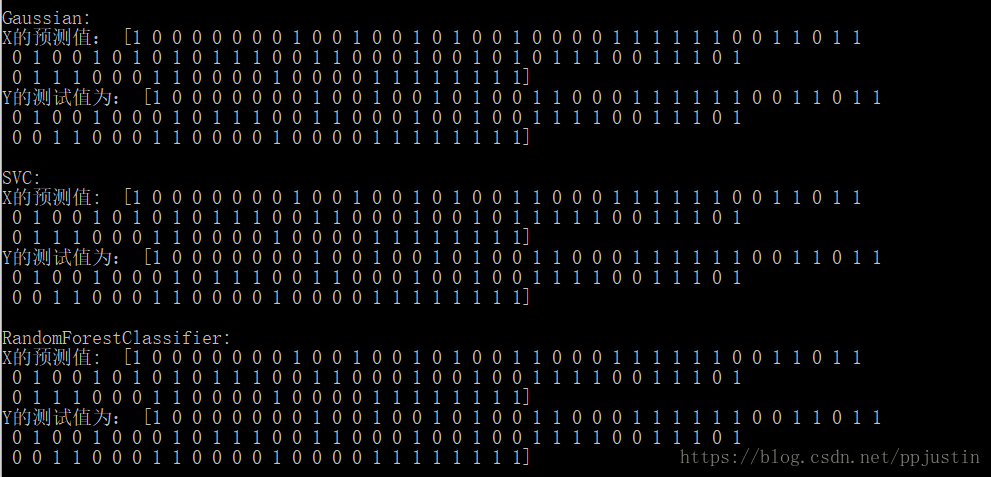

print("\nGaussian:")

clf1 = GaussianNB()

clf1.fit(X_train,y_train)

pred1 = clf1.predict(X_test)

print ("X的预测值:",pred1)

print ("Y的测试值为:",y_test)

#SVC支持向量机方法

print("\nSVC:")

clf2 = SVC(C = 1e-01,kernel = 'rbf',gamma=0.1)

clf2.fit(X_train,y_train)

pred2 = clf2.predict(X_test)

print ("X的预测值: ",pred2)

print ("Y的测试值为:",y_test)

#RandomForestClassifier

print("\nRandomForestClassifier:")

clf3 = RandomForestClassifier(n_estimators=6)

clf3.fit(X_train, y_train)

pred3 = clf3.predict(X_test)

print ("X的预测值: ",pred3)

print ("Y的测试值为:",y_test)

def evaluate(y_test, pred, method):

acc = metrics.accuracy_score(y_test, pred)

f1 = metrics.f1_score(y_test, pred)

auc = metrics.roc_auc_score(y_test, pred)

print(method + ":")

print ("Accuracy: ",acc)

print ("F1-score: ",f1)

print ("AUC ROC: ",auc)

print("\n")

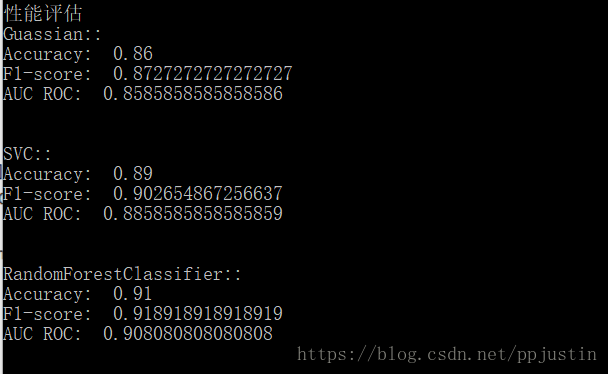

print("\n性能评估")

evaluate(y_test, pred1, "Guassian:")

evaluate(y_test, pred2, "Guassian:")

evaluate(y_test, pred3, "Guassian:")

运行结果:

Step 1:

Step 2:

Step 3:

Step 4:

Step 5:

所以从Step 4中可以看出,RandomForestClassifier方法更好