Video: 尚硅谷大数据项目《在线教育之离线数仓》 (Atguigu big data project "Offline Data Warehouse for Online Education"), on bilibili

P025

Deploy Spark on the node where Hive is installed

P026

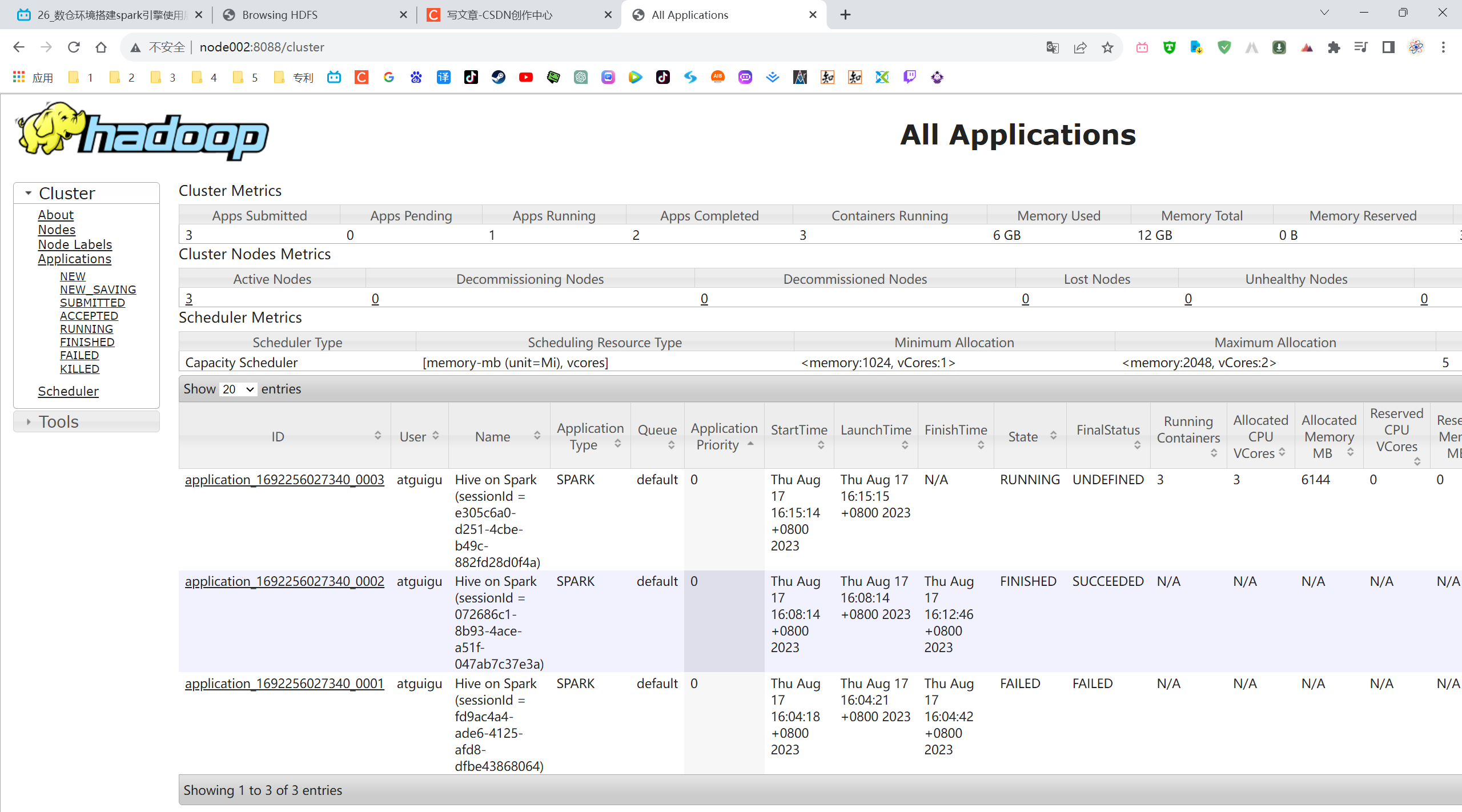

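3) Testing Hive on Spark

(1) Start the Hive client

[atguigu@hadoop102 hive]$ hive

(2) Create a test table

hive (default)> create table student(id int, name string);

(3) Test with an insert

hive (default)> insert into table student values(1,'abc');

After switching Hive's execution engine to Spark and running the same statement, Spark's performance advantage becomes apparent.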

P027

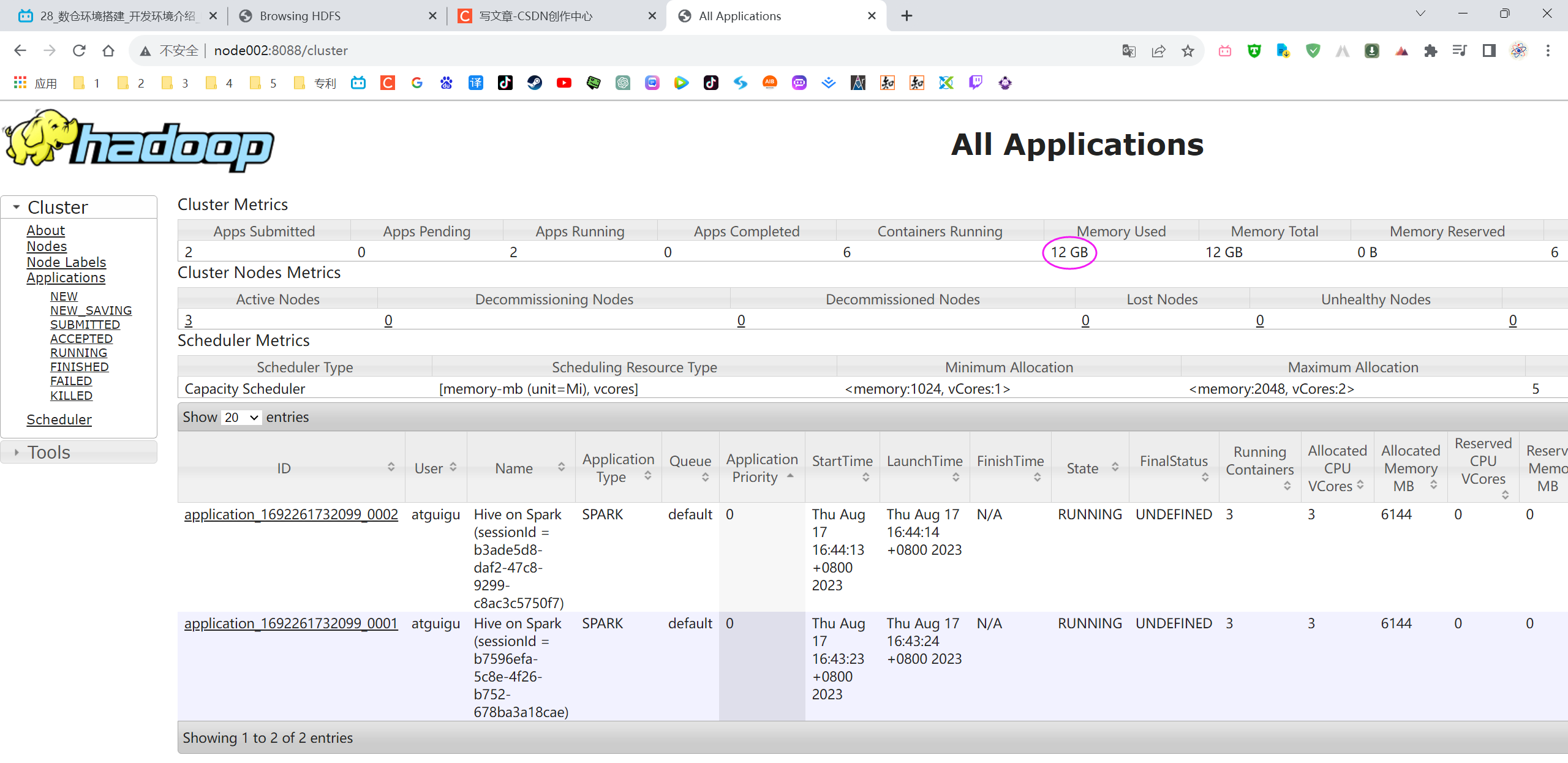

6.1.2 Yarn environment configuration

Start a second Hive client without exiting the first one.

P028

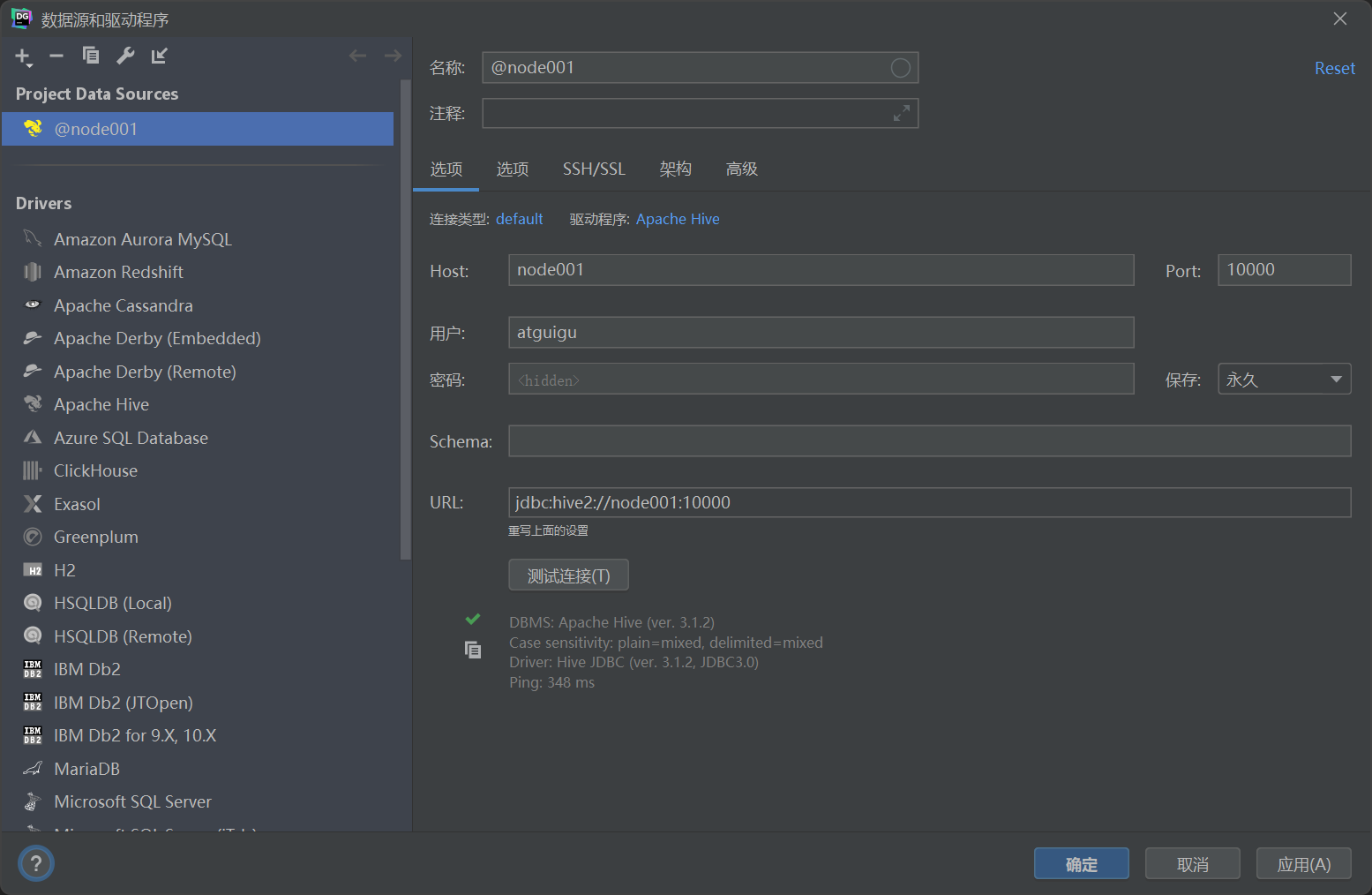

(1) Start hiveserver2

[atguigu@hadoop102 hive]$ bin/hive --service hiveserver2

(2) Access Hive remotely with the beeline command-line client

Start the beeline client:

[atguigu@hadoop102 hive]$ bin/beeline -u jdbc:hive2://node001:10000 -n atguigu

Output like the following appears:

Connecting to jdbc:hive2://hadoop102:10000

Connected to: Apache Hive (version 3.1.3)

Driver: Hive JDBC (version 3.1.3)

Transaction isolation: TRANSACTION_REPEATABLE_READ

Beeline version 3.1.3 by Apache Hive

0: jdbc:hive2://hadoop102:10000>

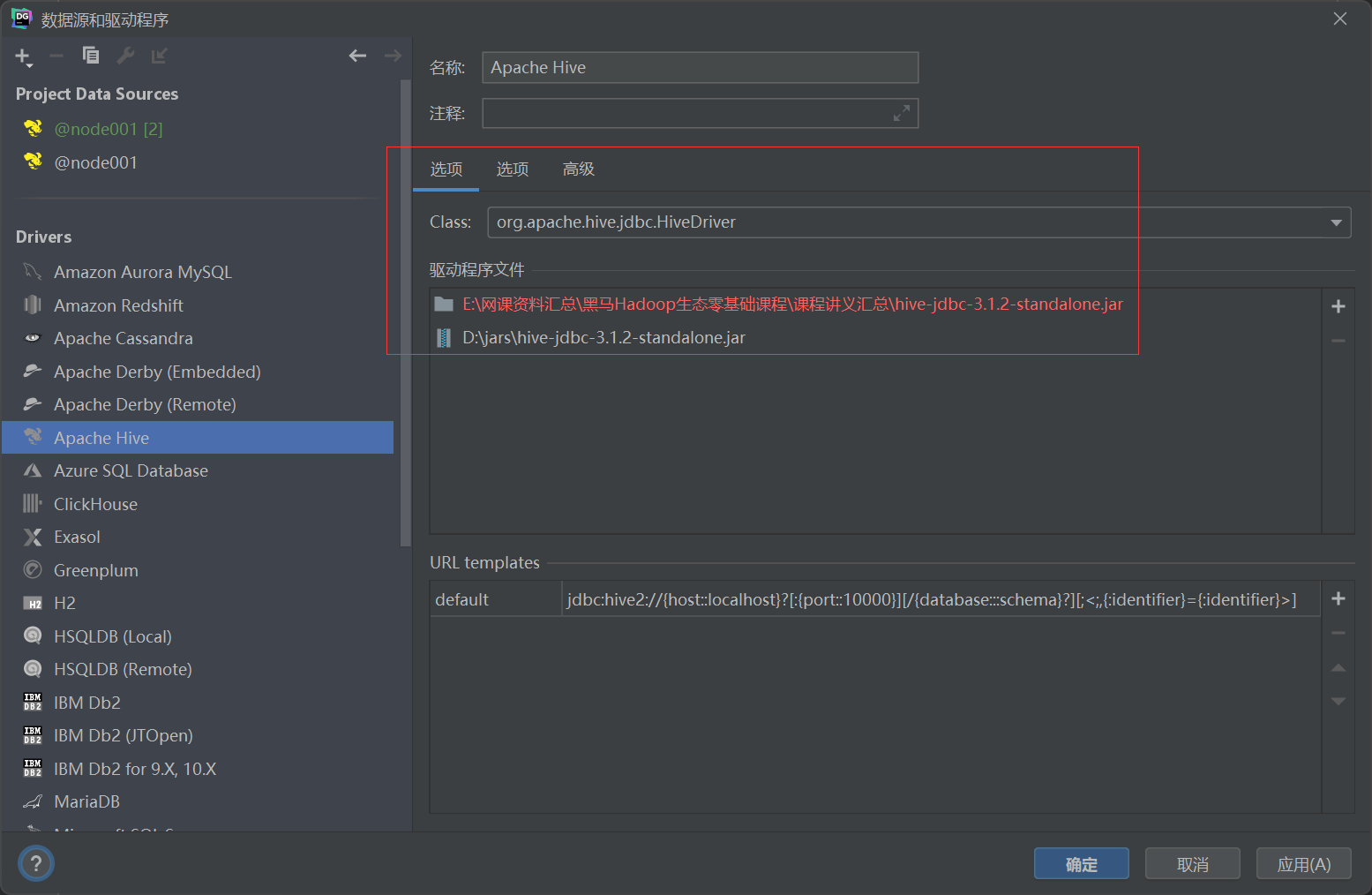

(3) Access Hive remotely with the DataGrip graphical client.

[atguigu@node001 ~]$ jpsall

================ node001 ================

42880 Jps

34373 NodeManager

34585 JobHistoryServer

33803 NameNode

33967 DataNode

40511 RunJar

41807 RunJar

================ node002 ================

9680 DataNode

13080 Jps

10013 NodeManager

9886 ResourceManager

================ node003 ================

9618 SecondaryNameNode

9526 DataNode

12827 Jps

9756 NodeManager

[atguigu@node001 ~]$

P029

6.3 Preparing mock data

[atguigu@node001 ~]$ hadoop fs -text /origin_data/edu/db/base_category_info_full/2022-02-21/*

2023-08-17 20:21:41,687 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

1 编程技术 2021-09-24 22:19:37 0

[atguigu@node001 ~]$ cd /opt/module/data_mocker/01-onlineEducation

[atguigu@node001 01-onlineEducation]$ java -jar edu2021-mock-2022-06-18.jar

{"common":{"ar":"20","ba":"Xiaomi","ch":"xiaomi","is_new":"0","md":"Xiaomi Mix2 ","mid":"mid_400","os":"Android 11.0","sc":"1","sid":"c9fe48b4-1dd9-4a8e-b09e-b3d113b2d0e6","uid":"26","vc":"v2.1.134"},"displays":[{"display_type":"recommend","item":"8","item_type":"course_id","order":1,"pos_id":1},{"display_type":"query","item":"9","item_type":"course_id","order":2,"pos_id":1},{"display_type":"query","item":"6","item_type":"course_id","order":3,"pos_id":2},{"display_type":"query","item":"10","item_type":"course_id","order":4,"pos_id":5},{"display_type":"promotion","item":"5","item_type":"course_id","order":5,"pos_id":4},{"display_type":"query","item":"6","item_type":"course_id","order":6,"pos_id":5},{"display_type":"query","item":"2","item_type":"course_id","order":7,"pos_id":4},{"display_type":"promotion","item":"2","item_type":"course_id","order":8,"pos_id":3},{"display_type":"promotion","item":"7","item_type":"course_id","order":9,"pos_id":4}],"page":{"during_time":9407,"item":"31797","item_type":"order_id","last_page_id":"order","page_id":"payment"},"ts":1692274393226}

---演算中...---

---演算完成 ---

[atguigu@node001 01-onlineEducation]$ mock.sh init 2022-02-21

[atguigu@node001 01-onlineEducation]$ mysql_to_hdfs_full.sh all 2022-02-21

正在处理/opt/module/datax/job/import/edu.base_category_info.json...

正在处理/opt/module/datax/job/import/edu.base_province.json...

正在处理/opt/module/datax/job/import/edu.base_source.json...

正在处理/opt/module/datax/job/import/edu.base_subject_info.json...

正在处理/opt/module/datax/job/import/edu.cart_info.json...

正在处理/opt/module/datax/job/import/edu.chapter_info.json...

正在处理/opt/module/datax/job/import/edu.course_info.json...

正在处理/opt/module/datax/job/import/edu.knowledge_point.json...

正在处理/opt/module/datax/job/import/edu.test_paper.json...

正在处理/opt/module/datax/job/import/edu.test_paper_question.json...

正在处理/opt/module/datax/job/import/edu.test_point_question.json...

正在处理/opt/module/datax/job/import/edu.test_question_info.json...

正在处理/opt/module/datax/job/import/edu.test_question_option.json...

正在处理/opt/module/datax/job/import/edu.user_chapter_process.json...

正在处理/opt/module/datax/job/import/edu.video_info.json...

[atguigu@node001 01-onlineEducation]$

P030

[atguigu@node001 01-onlineEducation]$ mysql_to_hdfs_full.sh all

正在处理/opt/module/datax/job/import/edu.base_category_info.json...

正在处理/opt/module/datax/job/import/edu.base_province.json...

正在处理/opt/module/datax/job/import/edu.base_source.json...

正在处理/opt/module/datax/job/import/edu.base_subject_info.json...

正在处理/opt/module/datax/job/import/edu.cart_info.json...

正在处理/opt/module/datax/job/import/edu.chapter_info.json...

正在处理/opt/module/datax/job/import/edu.course_info.json...

正在处理/opt/module/datax/job/import/edu.knowledge_point.json...

正在处理/opt/module/datax/job/import/edu.test_paper.json...

正在处理/opt/module/datax/job/import/edu.test_paper_question.json...

正在处理/opt/module/datax/job/import/edu.test_point_question.json...

正在处理/opt/module/datax/job/import/edu.test_question_info.json...

正在处理/opt/module/datax/job/import/edu.test_question_option.json...

正在处理/opt/module/datax/job/import/edu.user_chapter_process.json...

正在处理/opt/module/datax/job/import/edu.video_info.json...

[atguigu@node001 01-onlineEducation]$

P031

6.3 Preparing mock data

Flume and Kafka can resume from where they left off after an interruption.
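The `stop` branches of the f2.sh scripts in this section find the agent's PID with `ps -ef | grep <job name> | grep -v grep | awk '{print $2}'` and pass it to `kill`. The sketch below runs the same filter on a made-up `ps -ef` sample instead of a live process list, so it can be tried without Flume running. The helper name `pids_matching` and the sample lines are assumptions for illustration only.

```shell
#!/bin/bash
# Hypothetical helper: read `ps -ef`-style lines on stdin and print the PID
# (second column) of every line that contains the keyword, skipping the
# grep process itself.
pids_matching() {
    grep "$1" | grep -v grep | awk '{print $2}'
}

# Made-up sample in the `ps -ef` column layout (not real output).
sample='atguigu  40511      1  0 20:01 ?      00:00:12 java -cp flume org.apache.flume.node.Application -f job/kafka_to_hdfs_log.conf
atguigu  42880  42001  0 20:21 pts/0  00:00:00 grep kafka_to_hdfs_log
atguigu  33803      1  0 19:40 ?      00:01:03 java org.apache.hadoop.hdfs.server.namenode.NameNode'

echo "$sample" | pids_matching kafka_to_hdfs_log   # prints 40511
```

The first script sends `kill -9` while the second sends a plain `kill` (SIGTERM); SIGTERM gives the process a chance to shut down cleanly, which is probably the safer choice when the agent is expected to resume later.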

# f2.sh

#! /bin/bash

case $1 in

"start") {

echo " --------消费flume启动-------"

ssh node003 "nohup /opt/module/flume/flume-1.9.0/bin/flume-ng agent -n a1 -c /opt/module/flume/flume-1.9.0/conf/ -f /opt/module/flume/flume-1.9.0/job/kafka_to_hdfs_log.conf >/dev/null 2>&1 &"

};;

"stop") {

echo " --------消费flume关闭-------"

ssh node003 "ps -ef | grep kafka_to_hdfs_log | grep -v grep | awk '{print \$2}' | xargs -n1 kill -9"

};;

esac

#! /bin/bash

case $1 in

"start") {

for i in node003

do

echo " -------- 启动 $i 消费 flume -------"

ssh $i "nohup /opt/module/flume/flume-1.9.0/bin/flume-ng agent --conf-file /opt/module/flume/flume-1.9.0/job/kafka-flume-hdfs.conf --name al -Dflume.root.logger=INFO,LOGFILE >/opt/module/flume/flume-1.9.0/logs/log2.txt 2>&1 &"

done

};;

"stop") {

for i in node003

do

echo " -------- 停止 $i 消费 flume -------"

ssh $i "ps -ef | grep kafka-flume-hdfs | grep -v grep | awk '{print \$2}' | xargs -n1 kill"

done

};;

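esac

P032

Chapter 7 Data warehouse development: the ODS layer

Key design points for the ODS layer:

(1) ODS table structures follow the structure of the data synchronized from the business systems.

(2) The ODS layer keeps the full history of the data, so its compression format should have a high compression ratio; gzip is chosen here.

(3) ODS tables are named ods_<table name>_<inc/full>, where the suffix marks whether each partition holds an incremental (inc) or a full (full) sync.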
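The naming rule in point (3) can be checked mechanically. The following is a minimal bash sketch, assuming table names use only lowercase letters, digits, and underscores; `ods_sync_type` is a hypothetical helper, not part of the course scripts.

```shell
#!/bin/bash
# Hypothetical helper: print the sync type (inc or full) encoded in an ODS
# table name that follows the ods_<table>_<inc|full> convention.
# Prints "invalid" and returns 1 for any other name.
ods_sync_type() {
    local name=$1
    if [[ $name =~ ^ods_[a-z0-9_]+_(inc|full)$ ]]; then
        echo "${BASH_REMATCH[1]}"
    else
        echo "invalid"
        return 1
    fi
}

ods_sync_type ods_log_inc               # prints inc
ods_sync_type ods_base_province_full    # prints full
ods_sync_type student || true           # prints invalid
```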

{"common":{"ar":"20","ba":"Redmi","ch":"xiaomi","is_new":"0","md":"Redmi k30","mid":"mid_401","os":"Android 11.0","sc":"2","sid":"479db822-8978-4d4c-bb3c-acfd6e7a3edf","uid":"12","vc":"v2.1.134"},"displays":[{"display_type":"query","item":"3","item_type":"course_id","order":1,"pos_id":2},{"display_type":"recommend","item":"1","item_type":"course_id","order":2,"pos_id":2},{"display_type":"promotion","item":"5","item_type":"course_id","order":3,"pos_id":4},{"display_type":"query","item":"4","item_type":"course_id","order":4,"pos_id":2}],"page":{"during_time":17262,"item":"32323","item_type":"order_id","last_page_id":"order","page_id":"payment"},"ts":1692274473313}

{"appVideo":{"play_sec":22,"position_sec":810,"video_id":"5410"},"common":{"ar":"24","ba":"Honor","ch":"xiaomi","is_new":"0","md":"Honor 20s","mid":"mid_287","os":"Android 11.0","sc":"2","sid":"ca3ff80c-5755-4036-9cbf-847cf525ea68","uid":"50","vc":"v2.1.134"},"ts":1692274472318}

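Log data: read the log data by creating a Hive external table mapped onto the corresponding files. To process JSON-formatted data, use the get_json_object() function.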

{

"appVideo":{

"play_sec":22,

"position_sec":810,

"video_id":"5410"

},

"common":{

"ar":"24",

"ba":"Honor",

"ch":"xiaomi",

"is_new":"0",

"md":"Honor 20s",

"mid":"mid_287",

"os":"Android 11.0",

"sc":"2",

"sid":"ca3ff80c-5755-4036-9cbf-847cf525ea68",

"uid":"50",

"vc":"v2.1.134"

},

"ts":1692274472318

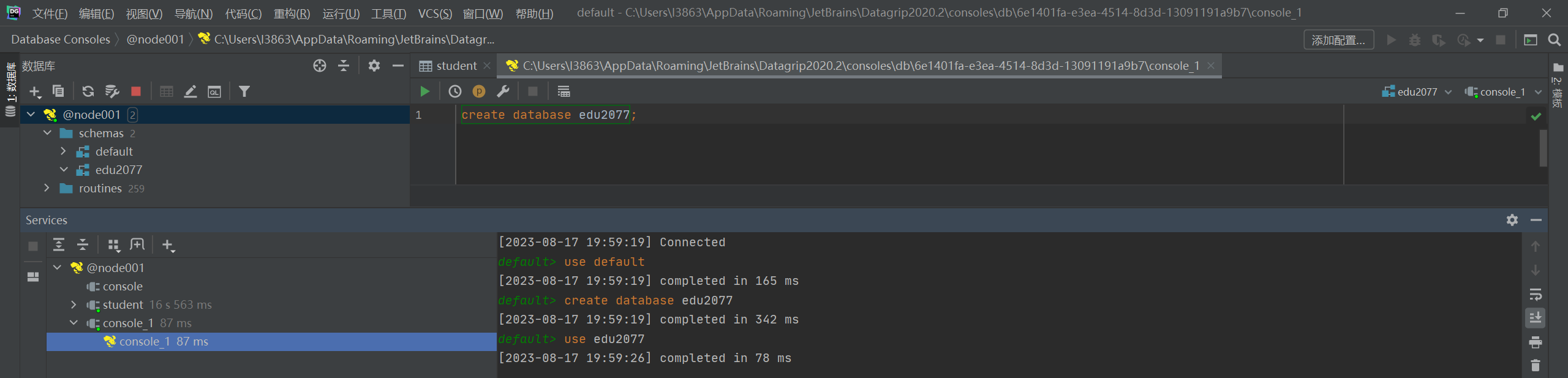

}create database edu2077;

create external table json_text

(

json_text string

) location "/jsonText";

select *

from json_text;

show functions;

desc function get_json_object;

desc function extended get_json_object;

select get_json_object(json_text, "$.appVideo")

from json_text;

select get_json_object(json_text, "$.appVideo.video_id")

from json_text;

select get_json_object(json_text, "$.appVideo.video_id") video_id

from json_text;

P033

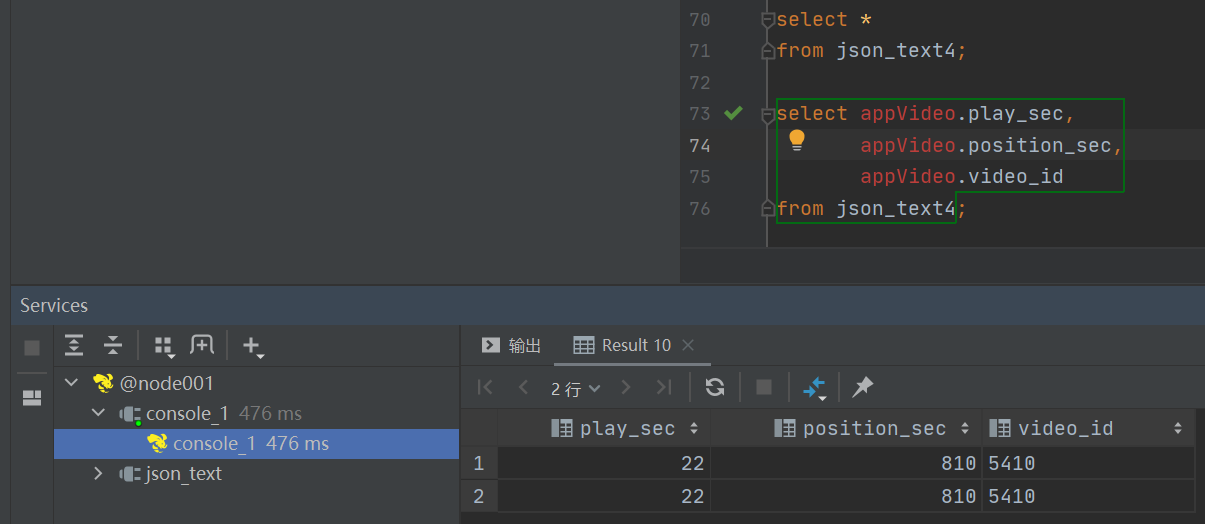

Map the JSON files directly onto a table instead of processing them with a function.

SerDe - Apache Hive - Apache Software Foundation

SerDe Overview

SerDe is short for Serializer/Deserializer. Hive uses the SerDe interface for IO. The interface handles both serialization and deserialization and also interpreting the results of serialization as individual fields for processing.

A SerDe allows Hive to read in data from a table, and write it back out to HDFS in any custom format. Anyone can write their own SerDe for their own data formats.

See Hive SerDe for an introduction to SerDes.

LanguageManual DDL - Apache Hive - Apache Software Foundation

CREATE TABLE my_table(a string, b bigint, ...)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

STORED AS TEXTFILE;

drop table if exists json_text2;

CREATE external table json_text2

(

ts bigint

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

STORED AS TEXTFILE

location "/jsonText";

select *

from json_text2;

drop table if exists json_text3;

CREATE external table json_text3

(

appVideo string,

ts bigint

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

STORED AS TEXTFILE

location "/jsonText";

select *

from json_text3;

drop table if exists json_text4;

CREATE external table json_text4

(

appVideo struct<play_sec:bigint,position_sec:bigint,video_id:string>,

ts bigint

)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

STORED AS TEXTFILE

location "/jsonText";

select *

from json_text4;

select appVideo.play_sec,

appVideo.position_sec,

appVideo.video_id

from json_text4;

P034

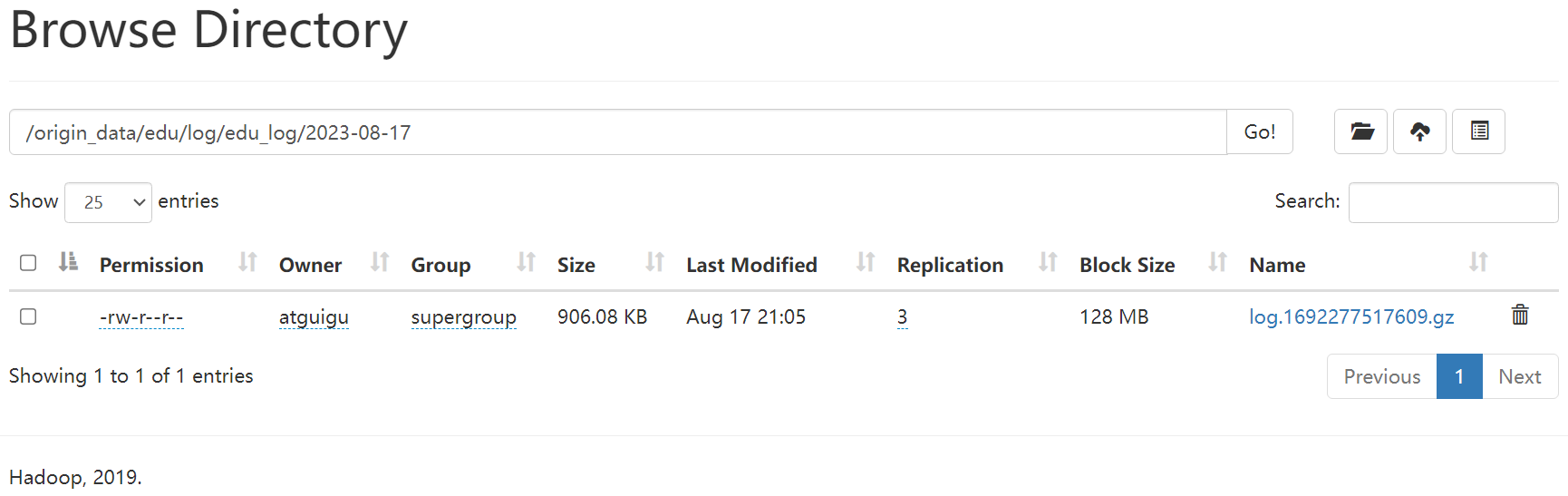

7.1 Log table

A single table holds three different kinds of user behavior logs.

[atguigu@node001 ~]$ hadoop fs -text /origin_data/edu/log/edu_log/2023-08-17/*

{"appVideo":{"play_sec":4,"position_sec":600,"video_id":"1649"},"common":{"ar":"3","ba":"Xiaomi","ch":"oppo","is_new":"1","md":"Xiaomi Mix2 ","mid":"mid_221","os":"Android 11.0","sc":"2","sid":"68f58362-64ee-40c7-ac5f-daabe61af159","uid":"20","vc":"v2.1.134"},"ts":1692274471724}

DROP TABLE IF EXISTS ods_log_inc;

create external table ods_log_inc

(

`common` STRUCT<ar :STRING,sid :STRING,ba :STRING,ch :STRING,is_new :STRING,md :STRING,mid :STRING,os :STRING,uid :STRING,vc :STRING,sc :STRING> COMMENT '公共信息',

`page` STRUCT<during_time :STRING,item :STRING,item_type :STRING,last_page_id :STRING,page_id :STRING,source_type :STRING> COMMENT '页面信息',

`actions` ARRAY<STRUCT<action_id:STRING,item:STRING,item_type:STRING,ts:BIGINT>> COMMENT '动作信息',

`displays` ARRAY<STRUCT<display_type :STRING,item :STRING,item_type :STRING,`order` :STRING,pos_id :STRING>> COMMENT '曝光信息',

`start` STRUCT<entry :STRING,loading_time :BIGINT,open_ad_id :BIGINT,open_ad_ms :BIGINT,open_ad_skip_ms :BIGINT,first_open :STRING> COMMENT '启动信息',

`err` STRUCT<error_code:BIGINT,msg:STRING> COMMENT '错误信息',

`appVideo` STRUCT<video_id:STRING, position_sec:BIGINT, play_sec: BIGINT> COMMENT '视频播放信息',

`ts` BIGINT COMMENT '时间戳'

) COMMENT '日志增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_log_inc/';

load data inpath "/origin_data/edu/log/edu_log/2023-08-11"

into table ods_log_inc partition (dt = '2023-08-11');

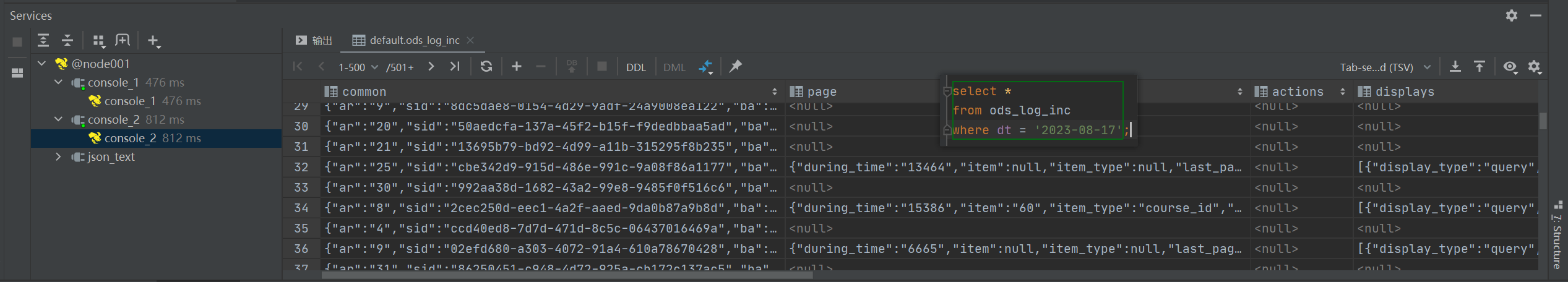

select *

from ods_log_inc;

select *

from ods_log_inc

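where dt = '2023-08-17';

P035

尚硅谷大数据项目之在线教育离线数仓(1用户行为采集平台)V1.0.docx

3.2.3 Structure of the tracking logs

Our logs fall into roughly three categories: ordinary page tracking logs, app start logs, and video playback logs.

set hive.cbo.enable = false; -- turn off cost-based optimization so that records with null fields are filtered out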

select *

from ods_log_inc

where dt = '2023-08-17'

and page is not null;

select *

from ods_log_inc

where dt = '2023-08-17'

and `start` is not null;

P036

Reference: CSDN blog post by CoreDao, "DataGrip如何将创建的console保存路径设置到指定目录?" (how to set the directory in which DataGrip saves the consoles you create)

--7.2.1 Create the category info table (full)

DROP TABLE IF EXISTS `ods_base_category_info_full`;

CREATE EXTERNAL TABLE `ods_base_category_info_full`

(

`id` STRING COMMENT '编号',

`category_name` STRING COMMENT '分类名称',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`deleted` STRING COMMENT '是否删除'

) COMMENT '分类信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_base_category_info_full/';

--7.2.2 Create the source info table (full)

DROP TABLE IF EXISTS `ods_base_source_full`;

CREATE EXTERNAL TABLE `ods_base_source_full`

(

`id` STRING COMMENT '编号',

`source_site` STRING COMMENT '来源',

`source_url` STRING COMMENT '来源网址'

) COMMENT '来源信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_base_source_full/';

--7.2.3 Create the province table (full)

DROP TABLE IF EXISTS `ods_base_province_full`;

CREATE EXTERNAL TABLE `ods_base_province_full`

(

`id` STRING COMMENT '编号',

`name` STRING COMMENT '省名称',

`region_id` STRING COMMENT '地区id',

`area_code` STRING COMMENT '行政区位码',

`iso_code` STRING COMMENT '国际编码',

`iso_3166_2` STRING COMMENT 'ISO3166编码'

) COMMENT '省份全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_base_province_full/';

--7.2.4 Create the subject info table (full)

DROP TABLE IF EXISTS `ods_base_subject_info_full`;

CREATE EXTERNAL TABLE `ods_base_subject_info_full`

(

`id` STRING COMMENT '编号',

`subject_name` STRING COMMENT '科目名称',

`category_id` STRING COMMENT '分类id',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`deleted` STRING COMMENT '是否删除'

) COMMENT '科目信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_base_subject_info_full/';

--7.2.5 Create the cart table (full)

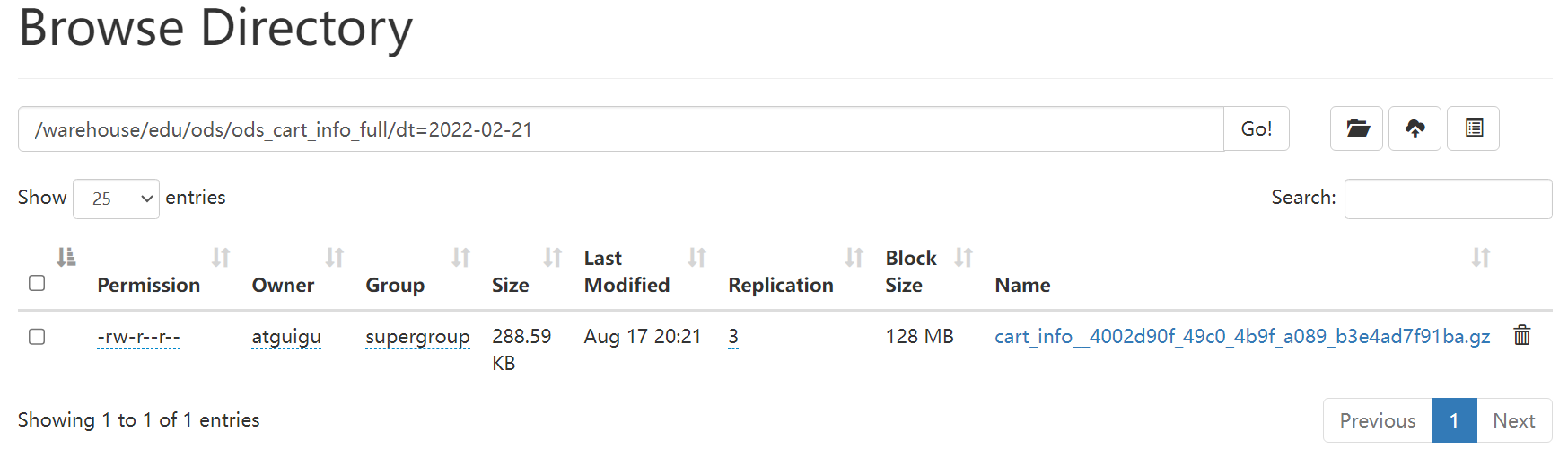

DROP TABLE IF EXISTS `ods_cart_info_full`;

CREATE EXTERNAL TABLE `ods_cart_info_full`

(

`id` STRING COMMENT '编号',

`user_id` STRING COMMENT '用户id',

`course_id` STRING COMMENT '课程id',

`course_name` STRING COMMENT '课程名称 (冗余)',

`cart_price` DEC(16, 2) COMMENT '放入购物车时价格',

`img_url` STRING COMMENT '图片文件',

`session_id` STRING COMMENT '会话id',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '修改时间',

`deleted` STRING COMMENT '是否已删',

`sold` STRING COMMENT '是否已售'

) COMMENT '购物车全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_cart_info_full/';

--7.2.6 Create the chapter info table (full)

DROP TABLE IF EXISTS `ods_chapter_info_full`;

CREATE EXTERNAL TABLE `ods_chapter_info_full`

(

`id` STRING COMMENT '编号',

`chapter_name` STRING COMMENT '章节名称',

`course_id` STRING COMMENT '课程id',

`video_id` STRING COMMENT '视频id',

`publisher_id` STRING COMMENT '发布者id',

`is_free` STRING COMMENT '是否免费',

`create_time` STRING COMMENT '创建时间',

`deleted` STRING COMMENT '是否删除',

`update_time` STRING COMMENT '更新时间'

) COMMENT '章节信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_chapter_info_full/';

--7.2.7 Create the course info table (full)

DROP TABLE IF EXISTS `ods_course_info_full`;

CREATE EXTERNAL TABLE `ods_course_info_full`

(

`id` STRING COMMENT '编号',

`course_name` STRING COMMENT '课程名称',

`course_slogan` STRING COMMENT '课程标语',

`course_cover_url` STRING COMMENT '课程封面',

`subject_id` STRING COMMENT '学科id',

`teacher` STRING COMMENT '讲师名称',

`publisher_id` STRING COMMENT '发布者id',

`chapter_num` BIGINT COMMENT '章节数',

`origin_price` DECIMAL(16, 2) COMMENT '价格',

`reduce_amount` DECIMAL(16, 2) COMMENT '优惠金额',

`actual_price` DECIMAL(16, 2) COMMENT '实际价格',

`course_introduce` STRING COMMENT '课程介绍',

`create_time` STRING COMMENT '创建时间',

`deleted` STRING COMMENT '是否删除',

`update_time` STRING COMMENT '更新时间'

) COMMENT '课程信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_course_info_full/';

--7.2.8 Create the knowledge point info table (full)

DROP TABLE IF EXISTS `ods_knowledge_point_full`;

CREATE EXTERNAL TABLE `ods_knowledge_point_full`

(

`id` STRING COMMENT '编号',

`point_txt` STRING COMMENT '知识点内容 ',

`point_level` STRING COMMENT '知识点级别',

`course_id` STRING COMMENT '课程id',

`chapter_id` STRING COMMENT '章节id',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '修改时间',

`publisher_id` STRING COMMENT '发布者id',

`deleted` STRING COMMENT '是否删除'

) COMMENT '知识点信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_knowledge_point_full/';

--7.2.9 Create the test paper table (full)

DROP TABLE IF EXISTS `ods_test_paper_full`;

CREATE EXTERNAL TABLE `ods_test_paper_full`

(

`id` STRING COMMENT '编号',

`paper_title` STRING COMMENT '试卷名称',

`course_id` STRING COMMENT '课程id',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`publisher_id` STRING COMMENT '发布者id',

`deleted` STRING COMMENT '是否删除'

) COMMENT '试卷全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_test_paper_full/';

--7.2.10 Create the test paper question table (full)

DROP TABLE IF EXISTS `ods_test_paper_question_full`;

CREATE EXTERNAL TABLE `ods_test_paper_question_full`

(

`id` STRING COMMENT '编号',

`paper_id` STRING COMMENT '试卷id',

`question_id` STRING COMMENT '题目id',

`score` DECIMAL(16, 2) COMMENT '得分',

`create_time` STRING COMMENT '创建时间',

`deleted` STRING COMMENT '是否删除',

`publisher_id` STRING COMMENT '发布者id'

) COMMENT '试卷题目全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_test_paper_question_full/';

--7.2.11 Create the knowledge point question table (full)

DROP TABLE IF EXISTS `ods_test_point_question_full`;

CREATE EXTERNAL TABLE `ods_test_point_question_full`

(

`id` STRING COMMENT '编号',

`point_id` STRING COMMENT '知识点id',

`question_id` STRING COMMENT '题目id',

`create_time` STRING COMMENT '创建时间',

`publisher_id` STRING COMMENT '发布者id',

`deleted` STRING COMMENT '是否删除'

) COMMENT '知识点题目全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_test_point_question_full/';

--7.2.12 Create the question info table (full)

DROP TABLE IF EXISTS `ods_test_question_info_full`;

CREATE EXTERNAL TABLE `ods_test_question_info_full`

(

`id` STRING COMMENT '编号',

`question_txt` STRING COMMENT '题目内容',

`chapter_id` STRING COMMENT '章节id',

`course_id` STRING COMMENT '课程id',

`question_type` STRING COMMENT '题目类型',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`publisher_id` STRING COMMENT '发布者id',

`deleted` STRING COMMENT '是否删除'

) COMMENT '题目信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_test_question_info_full/';

--7.2.13 Create the user chapter progress table (full)

DROP TABLE IF EXISTS `ods_user_chapter_process_full`;

CREATE EXTERNAL TABLE `ods_user_chapter_process_full`

(

`id` STRING COMMENT '编号',

`course_id` STRING COMMENT '课程id',

`chapter_id` STRING COMMENT '章节id',

`user_id` STRING COMMENT '用户id',

`position_sec` BIGINT COMMENT '时长位置',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`deleted` STRING COMMENT '是否删除'

) COMMENT '用户章节进度全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_user_chapter_process_full/';

--7.2.14 Create the question option table (full)

DROP TABLE IF EXISTS `ods_test_question_option_full`;

CREATE EXTERNAL TABLE `ods_test_question_option_full`

(

`id` STRING COMMENT '编号',

`option_txt` STRING COMMENT '选项内容',

`question_id` STRING COMMENT '题目id',

`is_correct` STRING COMMENT '是否正确',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`deleted` STRING COMMENT '是否删除'

) COMMENT '题目选项全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_test_question_option_full/';

--7.2.15 Create the video info table (full)

DROP TABLE IF EXISTS `ods_video_info_full`;

CREATE EXTERNAL TABLE `ods_video_info_full`

(

`id` STRING COMMENT '编号',

`video_name` STRING COMMENT '视频名称',

`during_sec` BIGINT COMMENT '时长',

`video_status` STRING COMMENT '状态 未上传,上传中,上传完',

`video_size` BIGINT COMMENT '大小',

`video_url` STRING COMMENT '视频存储路径',

`video_source_id` STRING COMMENT '云端资源编号',

`version_id` STRING COMMENT '版本号',

`chapter_id` STRING COMMENT '章节id',

`course_id` STRING COMMENT '课程id',

`publisher_id` STRING COMMENT '发布者id',

`create_time` STRING COMMENT '创建时间',

`update_time` STRING COMMENT '更新时间',

`deleted` STRING COMMENT '是否删除'

) COMMENT '视频信息全量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'

NULL DEFINED AS ''

LOCATION '/warehouse/edu/ods/ods_video_info_full/';

--7.2.16 Create the cart table (incremental)

DROP TABLE IF EXISTS ods_cart_info_inc;

CREATE EXTERNAL TABLE ods_cart_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, user_id : STRING, course_id : STRING, course_name : STRING, cart_price : DECIMAL(16, 2), img_url : STRING, session_id : STRING, create_time : STRING, update_time : STRING, deleted : STRING, sold : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '购物车增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_cart_info_inc/';

P037

[atguigu@node001 ~]$ hadoop fs -text /origin_data/edu/db/cart_info_inc/2023-08-11/db.1691740297351.gz

{"database":"edu","table":"cart_info","type":"update","ts":1691740292,"xid":156364,"commit":true,"data":{"id":7941,"user_id":1756,"course_id":193,"course_name":"JavaWeb从入门到精通","cart_price":200.00,"img_url":null,"session_id":"64ff13be-cefe-422f-baec-ade1cc4ce7e2","create_time":"2023-08-11 15:51:32","update_time":"2023-08-11 15:51:32","deleted":"0","sold":"1"},"old":{"update_time":null,"sold":"0"}}

DROP TABLE IF EXISTS ods_cart_info_inc;

CREATE EXTERNAL TABLE ods_cart_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, user_id : STRING, course_id : STRING, course_name : STRING, cart_price : DECIMAL(16, 2), img_url : STRING, session_id : STRING, create_time : STRING, update_time : STRING, deleted : STRING, sold : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '购物车增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_cart_info_inc/';

load data inpath "/origin_data/edu/db/cart_info_inc/2023-08-11" into table ods_cart_info_inc

partition (dt = '2023-08-11');

select * from ods_cart_info_inc;

--7.2.17 Create the chapter comment table (incremental)

DROP TABLE IF EXISTS ods_comment_info_inc;

CREATE EXTERNAL TABLE ods_comment_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, user_id : STRING, chapter_id : STRING, course_id : STRING, comment_txt : STRING, create_time :STRING, deleted : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '章节评价增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_comment_info_inc/';

--7.2.18 Create the favorites table (incremental)

DROP TABLE IF EXISTS ods_favor_info_inc;

CREATE EXTERNAL TABLE ods_favor_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, course_id : STRING, user_id : STRING, create_time : STRING, update_time : STRING, deleted : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '收藏增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_favor_info_inc/';

--7.2.19 Create the order detail table (incremental)

DROP TABLE IF EXISTS ods_order_detail_inc;

CREATE EXTERNAL TABLE ods_order_detail_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, course_id : STRING, course_name : STRING, order_id : STRING, user_id : STRING, origin_amount : DECIMAL(16, 2), coupon_reduce : DECIMAL(16, 2), final_amount : DECIMAL(16, 2), create_time : STRING, update_time : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单明细增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_order_detail_inc/';

--7.2.20 Create the order table (incremental)

DROP TABLE IF EXISTS ods_order_info_inc;

CREATE EXTERNAL TABLE ods_order_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : String, user_id : String, origin_amount : DECIMAL(16, 2), coupon_reduce : DECIMAL(16, 2), final_amount : DECIMAL(16, 2), order_status : String, out_trade_no : String, trade_body : String, session_id : String, province_id : String, create_time : String, expire_time : String, update_time : String> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '订单增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_order_info_inc/';

--7.2.21 Create the payment table (incremental)

DROP TABLE IF EXISTS ods_payment_info_inc;

CREATE EXTERNAL TABLE ods_payment_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, out_trade_no : STRING, order_id : STRING, alipay_trade_no : STRING, total_amount : DECIMAL(16, 2), trade_body : STRING, payment_type : STRING, payment_status : STRING, create_time : STRING, update_time : STRING, callback_content : STRING, callback_time : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '支付增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_payment_info_inc/';

--7.2.22 Create the course review table (incremental)

DROP TABLE IF EXISTS ods_review_info_inc;

CREATE EXTERNAL TABLE ods_review_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, user_id : STRING, course_id : STRING, review_txt : STRING, review_stars : STRING, create_time : STRING, deleted : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '课程评价增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_review_info_inc/';

--7.2.23 Create the exam table (incremental)

DROP TABLE IF EXISTS ods_test_exam_inc;

CREATE EXTERNAL TABLE ods_test_exam_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, paper_id : STRING, user_id : STRING, score : DECIMAL(16, 2), duration_sec : BIGINT, create_time : STRING, submit_time : STRING, update_time : STRING, deleted : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '考试增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_test_exam_inc/';

--7.2.24 Create the user table (incremental)

DROP TABLE IF EXISTS ods_user_info_inc;

CREATE EXTERNAL TABLE ods_user_info_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, login_name : STRING, nick_name : STRING, passwd : STRING, real_name : STRING, phone_num : STRING, email : STRING, head_img : STRING, user_level : STRING, birthday : STRING, gender : STRING, create_time : STRING, operate_time : STRING, status : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '用户增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_user_info_inc/';

--7.2.25 Create the VIP level change detail table (incremental)

DROP TABLE IF EXISTS ods_vip_change_detail_inc;

CREATE EXTERNAL TABLE ods_vip_change_detail_inc

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT<id : STRING, user_id : STRING, from_vip : STRING, to_vip : STRING, create_time : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT 'VIP等级变动明细增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_vip_change_detail_inc/';

--7.2.26 Create the exam question table (incremental)

DROP TABLE IF EXISTS `ods_test_exam_question_inc`;

CREATE EXTERNAL TABLE `ods_test_exam_question_inc`

(

`type` STRING COMMENT '变动类型',

`ts` STRING COMMENT '变动时间',

`data` STRUCT< id : STRING, exam_id : STRING, paper_id : STRING, question_id : STRING,user_id : STRING, answer : STRING, is_correct : STRING,score : decimal(16, 2), create_time : STRING,update_time : STRING,deleted : STRING> COMMENT '数据',

`old` MAP<STRING,STRING> COMMENT '旧值'

) COMMENT '考试题目增量表'

PARTITIONED BY (`dt` STRING)

ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.JsonSerDe'

LOCATION '/warehouse/edu/ods/ods_test_exam_question_inc/';

P038

7.2.27 Data loading script

1) Create hdfs_to_ods_db.sh in the /home/atguigu/bin directory on hadoop102

[atguigu@hadoop102 bin]$ vim hdfs_to_ods_db.sh

2) Write the following content

#!/bin/bash

APP='edu2077'

if [ -n "$2" ] ;then

do_date=$2

else

do_date=`date -d '-1 day' +%F`

fi

load_data(){

sql=""

for i in $*; do

# Check whether the path exists

hadoop fs -test -e /origin_data/edu/db/${i:4}/$do_date

# Load the data only if the path exists

if [[ $? = 0 ]]; then

sql=$sql"load data inpath '/origin_data/edu/db/${i:4}/$do_date' OVERWRITE into table ${APP}.${i} partition(dt='$do_date');"

fi

done

hive -e "$sql"

}

case $1 in

ods_base_category_info_full | ods_base_province_full | ods_base_source_full | ods_base_subject_info_full | ods_cart_info_full | ods_cart_info_inc | ods_chapter_info_full | ods_comment_info_inc | ods_course_info_full | ods_favor_info_inc | ods_knowledge_point_full | ods_order_detail_inc | ods_order_info_inc | ods_payment_info_inc | ods_review_info_inc | ods_test_exam_inc | ods_test_exam_question_inc | ods_test_paper_full | ods_test_paper_question_full | ods_test_point_question_full | ods_test_question_info_full | ods_test_question_option_full | ods_user_chapter_process_full | ods_user_info_inc | ods_video_info_full | ods_vip_change_detail_inc)

load_data $1

;;

"all")

load_data ods_base_category_info_full ods_base_province_full ods_base_source_full ods_base_subject_info_full ods_cart_info_full ods_cart_info_inc ods_chapter_info_full ods_comment_info_inc ods_course_info_full ods_favor_info_inc ods_knowledge_point_full ods_order_detail_inc ods_order_info_inc ods_payment_info_inc ods_review_info_inc ods_test_exam_inc ods_test_exam_question_inc ods_test_paper_full ods_test_paper_question_full ods_test_point_question_full ods_test_question_info_full ods_test_question_option_full ods_user_chapter_process_full ods_user_info_inc ods_video_info_full ods_vip_change_detail_inc

;;

esac

3) Make the script executable

[atguigu@hadoop102 bin]$ chmod +x hdfs_to_ods_db.sh

4) Script usage

[atguigu@hadoop102 bin]$ hdfs_to_ods_db.sh all 2022-02-21
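Two expressions in hdfs_to_ods_db.sh are easy to misread: `${i:4}` drops the first four characters of the table name (the `ods_` prefix) to obtain the HDFS directory name, and the date falls back to yesterday when no second argument is given. The sketch below isolates both so they can be tried without Hadoop or Hive. The function names `source_dir` and `resolve_do_date` are hypothetical helpers introduced here, and the `date -d` form assumes GNU date, as in the script itself.

```shell
#!/bin/bash
# Hypothetical helpers illustrating two expressions used in hdfs_to_ods_db.sh.

# Map an ODS table name to its HDFS source directory for a given date.
# ${table:4} drops the first four characters, i.e. the "ods_" prefix.
source_dir() {
    local table=$1 do_date=$2
    echo "/origin_data/edu/db/${table:4}/${do_date}"
}

# Use the supplied date if it is non-empty, otherwise default to yesterday
# (date -d is GNU date syntax).
resolve_do_date() {
    if [ -n "${1:-}" ]; then
        echo "$1"
    else
        date -d '-1 day' +%F
    fi
}

source_dir ods_cart_info_inc "$(resolve_do_date 2022-02-21)"
# prints /origin_data/edu/db/cart_info_inc/2022-02-21
```

Because the substring offset is fixed at 4, this mapping only works while every table name starts with exactly `ods_`, which the `case` list in the script guarantees.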