This content is based on Cao Jian's TensorFlow Notes course.

Source code: https://github.com/cj0012/AI-Practice-Tensorflow-Notes

In the backpropagation (training) script, the training code is as follows:

    saver = tf.train.Saver()

    with tf.Session() as sess:
        init_op = tf.global_variables_initializer()
        sess.run(init_op)

        # Resume from the latest checkpoint, if one exists
        ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
        if ckpt and ckpt.model_checkpoint_path:
            saver.restore(sess, ckpt.model_checkpoint_path)

        for i in range(STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            _, loss_value, step = sess.run([train_op, loss, global_step],
                                           feed_dict={x: xs, y_: ys})
            if i % 1000 == 0:
                print("After {} training step(s), loss on training batch is {}".format(step, loss_value))
                # Save a checkpoint tagged with the current global step
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, MODEL_NAME), global_step=global_step)

where:

    MODEL_SAVE_PATH = "./model/"
    MODEL_NAME = "mnist_model"

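`saver.save` automatically writes checkpoint files to "./model"; you can look in that directory while training is running. To resume training from a checkpoint, simply add the following code before the training loop.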

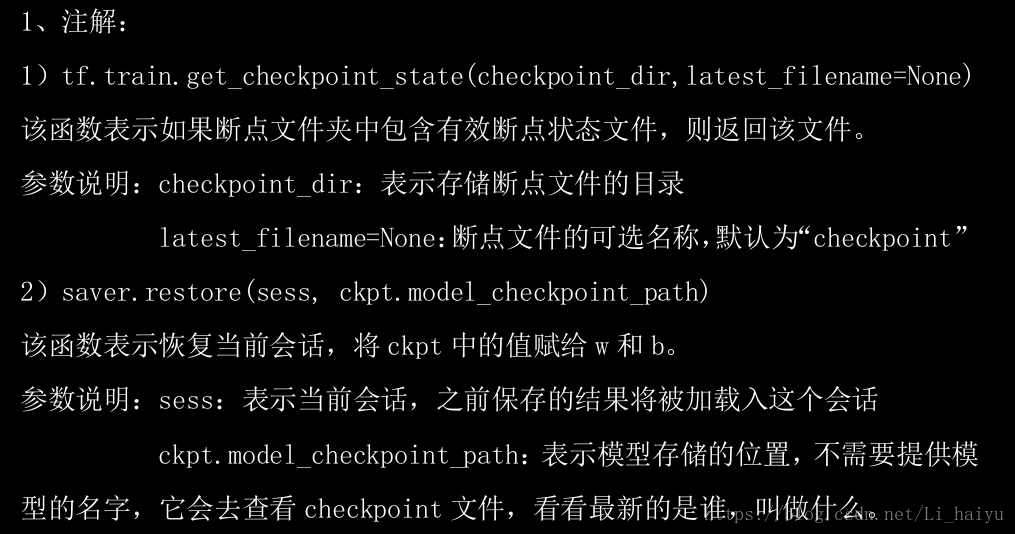

Checkpoint-resume code:

    # Resume from the latest checkpoint, if one exists
    ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
    if ckpt and ckpt.model_checkpoint_path:
        saver.restore(sess, ckpt.model_checkpoint_path)

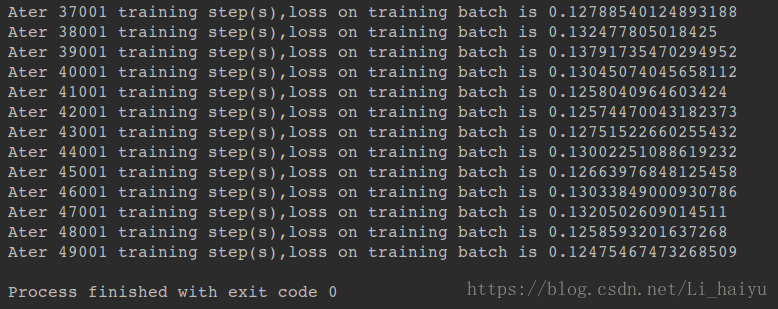
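To make the lookup step less of a black box, here is a minimal sketch of what it amounts to, using only the Python standard library. It assumes the index file named `checkpoint` inside the model directory is a small text file containing a `model_checkpoint_path: "..."` line, and that checkpoint names end in `-<global_step>`. The helper names `latest_checkpoint_path` and `step_from_path` and the demo file contents are made up for illustration; this is not TensorFlow's own implementation.

```python
import os
import re
import tempfile


def latest_checkpoint_path(model_dir):
    """Return the path recorded as model_checkpoint_path, or None if there is no index file."""
    index_file = os.path.join(model_dir, "checkpoint")
    if not os.path.isfile(index_file):
        return None
    with open(index_file) as f:
        for line in f:
            match = re.match(r'\s*model_checkpoint_path:\s*"(.*)"', line)
            if match:
                path = match.group(1)
                # Relative entries are resolved against the model directory
                return path if os.path.isabs(path) else os.path.join(model_dir, path)
    return None


def step_from_path(path):
    """Extract the trailing global-step suffix, e.g. 'mnist_model-1001' -> 1001."""
    match = re.search(r"-(\d+)$", path)
    return int(match.group(1)) if match else None


# Demo with a hypothetical index file (contents invented for this example)
demo_dir = tempfile.mkdtemp()
with open(os.path.join(demo_dir, "checkpoint"), "w") as f:
    f.write('model_checkpoint_path: "mnist_model-1001"\n')
    f.write('all_model_checkpoint_paths: "mnist_model-1"\n')
    f.write('all_model_checkpoint_paths: "mnist_model-1001"\n')

latest = latest_checkpoint_path(demo_dir)
print(os.path.basename(latest), step_from_path(latest))  # mnist_model-1001 1001
```

When the directory has no index file yet (the very first run), the helper returns `None`, which mirrors why the `if ckpt and ckpt.model_checkpoint_path` guard is needed before calling `saver.restore`.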

Output:

① Output of the first run (50,000 training steps):

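② Check that checkpoint files now exist under "./model". With the resume code added, running the training script again continues training from where the previous run left off.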
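It can help to work out which step a second run actually resumes from. The sketch below only simulates the loop's bookkeeping in plain Python; it does not run TensorFlow. It assumes that `train_op` increments `global_step` once per call and that `STEPS` is 50000, and the function name `simulate_run` is made up for illustration.

```python
def simulate_run(start_step, steps, save_every=1000):
    """Mimic the training loop's counters; return (first_logged_step, last_saved_step)."""
    global_step = start_step
    first_logged = None
    last_saved = None
    for i in range(steps):
        global_step += 1  # assumption: each train_op call bumps global_step by one
        if i % save_every == 0:
            if first_logged is None:
                first_logged = global_step
            last_saved = global_step  # stands in for saver.save(..., global_step=global_step)
    return first_logged, last_saved


first_run = simulate_run(start_step=0, steps=50000)
# The second run restores global_step from the last checkpoint of the first run
second_run = simulate_run(start_step=first_run[1], steps=50000)

print(first_run)   # (1, 49001)
print(second_run)  # (49002, 98002)
```

Under these assumptions the last checkpoint of the first run is taken at step 49001, so the second run picks up from there rather than from step 50000, and it then runs another full `STEPS` iterations on top of the restored counter.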

The end.