1. Video Stabilization

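- Video stabilization refers to a family of methods for reducing the blur and jitter caused by camera motion. It can compensate for angular motion of the camera, that is pan (yaw), tilt (pitch) and roll, as well as translation along the x and y directions.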

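- Mechanical stabilization: when the camera moves, accelerometers and gyroscopes detect the motion and the system moves the lens to compensate for it.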

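- Electronic stabilization: the stabilized image area is slightly smaller than the original image (it is cropped, which sacrifices resolution and sharpness). The motion is compensated by shifting the captured image.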
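To make the cropping trade-off concrete, here is a minimal sketch that computes the stabilized view for a given trim ratio. The names `CropRect` and `stabilizedView` are made up for illustration, and the convention that the same fraction is trimmed from every border is an assumption, not something the textbook states.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper type: top-left corner and size of the stabilized view.
struct CropRect { int x, y, width, height; };

// Trim the same fraction of the frame from every border (assumed convention).
// trimRatio must lie in [0, 0.5); the returned rectangle is the stabilized view.
CropRect stabilizedView(int frameWidth, int frameHeight, double trimRatio) {
    assert(frameWidth > 0 && frameHeight > 0);
    assert(trimRatio >= 0.0 && trimRatio < 0.5);
    const int dx = static_cast<int>(std::floor(trimRatio * frameWidth));
    const int dy = static_cast<int>(std::floor(trimRatio * frameHeight));
    return CropRect{dx, dy, frameWidth - 2 * dx, frameHeight - 2 * dy};
}
```

For a pure translation, the margins `x` and `y` are also the largest shifts that can be compensated without showing pixels from outside the captured frame, so a larger trim ratio buys more correction range at the cost of resolution.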

- Video stabilization algorithm:

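- General procedure: ① Make an initial estimate of the inter-frame motion between consecutive frames using the RANSAC method. The result is an array of 3×3 matrices, each describing the motion between two consecutive frames. ② Generate a new frame sequence based on the estimated motion, with additional processing such as smoothing, deblurring and border extrapolation. ③ Remove the remaining irregular perturbations.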
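Step ② can be illustrated with a one-dimensional toy: accumulate the per-frame motion into a camera path, smooth that path with a Gaussian window, and shift each frame by the difference. This is only a sketch under simplifying assumptions (motion reduced to a single translation component, window clamped at the sequence ends, standard deviation chosen as the square root of the radius); the function names are hypothetical and this is not the videostab implementation.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Accumulate per-frame displacements into an absolute camera path.
// motion[i] is the displacement from frame i to frame i + 1.
std::vector<double> accumulatePath(const std::vector<double>& motion) {
    std::vector<double> path(motion.size() + 1, 0.0);
    for (std::size_t i = 0; i < motion.size(); ++i)
        path[i + 1] = path[i] + motion[i];
    return path;
}

// Smooth the path with a normalized Gaussian window of the given radius.
// Near the ends the window is clamped to valid frames and renormalized.
std::vector<double> smoothPath(const std::vector<double>& path, int radius) {
    assert(radius >= 1);
    const double sigma = std::sqrt(static_cast<double>(radius)); // assumed choice
    const int n = static_cast<int>(path.size());
    std::vector<double> out(path.size(), 0.0);
    for (int i = 0; i < n; ++i) {
        double sum = 0.0, wsum = 0.0;
        for (int k = -radius; k <= radius; ++k) {
            const int j = i + k;
            if (j < 0 || j >= n) continue;
            const double w = std::exp(-(k * k) / (2.0 * sigma * sigma));
            sum += w * path[j];
            wsum += w;
        }
        out[i] = sum / wsum; // wsum > 0 because k = 0 is always valid
    }
    return out;
}

// Shift to apply to each frame so that it follows the smoothed path.
std::vector<double> corrections(const std::vector<double>& path,
                                const std::vector<double>& smooth) {
    assert(path.size() == smooth.size());
    std::vector<double> c(path.size());
    for (std::size_t i = 0; i < path.size(); ++i)
        c[i] = smooth[i] - path[i];
    return c;
}
```

A larger radius averages over more neighbouring frames, which removes more jitter but also needs larger corrections and therefore a larger cropped margin.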

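- The following code is adapted from the textbook (it runs, but the parameters may not be well tuned, so the effect is not very noticeable).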

#include <string>

#include <iostream>

#include <opencv2/opencv.hpp>

#include <opencv2/videostab.hpp>

using namespace std;

using namespace cv;

using namespace cv::videostab;

void processing(Ptr<IFrameSource> stabilizedFrames, string outputPath);

int main()

{

Ptr<IFrameSource> stabilizedFrames;

try {

//1 - Prepare the input video and check it

string inputPath, outputPath;

inputPath = "test.avi";

outputPath = "out.avi";

Ptr<VideoFileSource> source = makePtr<VideoFileSource>(inputPath);

cout << "frame count(rough):" << source->count() << endl;

//2 - Prepare the motion estimator

//① Create the global motion estimator (RANSAC, L2)

double min_inlier_ratio = 0.1;

Ptr<MotionEstimatorRansacL2> est = makePtr<MotionEstimatorRansacL2>(MM_AFFINE);

RansacParams ransac = est->ransacParams();

ransac.thresh = 5;

ransac.eps = 0.5;

est->setRansacParams(ransac);

est->setMinInlierRatio(min_inlier_ratio);

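//② Create a feature detector; nkps is the maximum number of corners
//(FastFeatureDetector::create would treat the same value as an intensity threshold)

int nkps = 100;

Ptr<GFTTDetector> feature_detector = GFTTDetector::create(nkps);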

//③ Create the keypoint-based motion estimator

Ptr<KeypointBasedMotionEstimator> motionEstBuilder = makePtr<KeypointBasedMotionEstimator>(est);

motionEstBuilder->setDetector(feature_detector);

Ptr<IOutlierRejector> outlierRejector = makePtr<NullOutlierRejector>();

motionEstBuilder->setOutlierRejector(outlierRejector);

//3 - Prepare the stabilizer

StabilizerBase *stabilizer = 0;

//① Choose a one-pass or a two-pass stabilizer

bool isTwoPass = true;

int radius_pass = 15;

if (isTwoPass) {

//Use a two-pass stabilizer

bool est_trim = true;

TwoPassStabilizer *twoPassStabilizer = new TwoPassStabilizer();

twoPassStabilizer->setEstimateTrimRatio(est_trim);

twoPassStabilizer->setMotionStabilizer(makePtr<GaussianMotionFilter>(radius_pass));

stabilizer = twoPassStabilizer;

}

else {

//Use a one-pass stabilizer

OnePassStabilizer *onePassStabilizer = new OnePassStabilizer();

onePassStabilizer->setMotionFilter(makePtr<GaussianMotionFilter>(radius_pass));

stabilizer = onePassStabilizer;

}

//② Set the parameters

int radius = 15;

double trim_ratio = 0.1;

bool incl_constr = false;

stabilizer->setFrameSource(source);

stabilizer->setMotionEstimator(motionEstBuilder);

stabilizer->setRadius(radius);

stabilizer->setTrimRatio(trim_ratio);

stabilizer->setCorrectionForInclusion(incl_constr);

stabilizer->setBorderMode(BORDER_REPLICATE);

//Hand the stabilizer over as an IFrameSource so that the stabilized frames can be read from it

stabilizedFrames.reset(dynamic_cast<IFrameSource*>(stabilizer));

//4 - Process the stabilized frames: display and save the result

processing(stabilizedFrames, outputPath);

}

catch (const exception &e) {

cout << "error:" << e.what() << endl;

stabilizedFrames.release();

return -1;

}

stabilizedFrames.release();

return 0;

}

void processing(Ptr<IFrameSource> stabilizedFrames,string outputPath) {

VideoWriter writer;

Mat stabilizedFrame;

int nframes = 0;

double outputFps = 25;

//For each stabilized frame

while (!(stabilizedFrame=stabilizedFrames->nextFrame()).empty()) {

nframes++;

//Open the writer on the first frame, then save the stabilized frame

if (!outputPath.empty()) {

if (!writer.isOpened())

writer.open(outputPath, VideoWriter::fourcc('X', 'V', 'I', 'D'), outputFps, stabilizedFrame.size());

writer << stabilizedFrame;

}

imshow("stabilizedFrame", stabilizedFrame);

char key = static_cast<char>(waitKey(3));

if (key == 27) { cout << endl; break; }

}

cout << "processed frames:" << nframes << endl;

cout << "finished" << endl;

}

2. Super-Resolution

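- Super-resolution covers techniques and algorithms designed to enhance the spatial resolution of an image or a video. It merges information from several images of the same scene in order to recover details that were not captured in any single original image.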

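- The superres module in OpenCV contains a set of functions and classes for resolution enhancement. The example below uses the Bilateral TV-L1 method, which relies on optical flow to estimate the warping function.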
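The idea of merging several low-resolution observations can be shown with a much simpler technique than Bilateral TV-L1: plain shift-and-add on one-dimensional signals. The sketch below assumes the offset of every low-resolution frame is already known and is a whole number of high-resolution samples; a real method has to estimate sub-pixel motion (for example with optical flow) and regularize the result. The function name `shiftAndAdd` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Fuse several low-resolution 1-D signals of the same scene onto a grid that is
// `scale` times finer. offsets[f] is the known shift of frame f, expressed in
// high-resolution samples (0 <= offsets[f] < scale).
std::vector<double> shiftAndAdd(const std::vector<std::vector<double>>& lowRes,
                                const std::vector<int>& offsets, int scale) {
    assert(!lowRes.empty() && lowRes.size() == offsets.size() && scale >= 1);
    const std::size_t n = lowRes[0].size() * static_cast<std::size_t>(scale);
    std::vector<double> sum(n, 0.0);
    std::vector<int> count(n, 0);
    for (std::size_t f = 0; f < lowRes.size(); ++f) {
        assert(lowRes[f].size() == lowRes[0].size());
        assert(offsets[f] >= 0 && offsets[f] < scale);
        for (std::size_t i = 0; i < lowRes[f].size(); ++i) {
            const std::size_t pos = i * scale + offsets[f];
            sum[pos] += lowRes[f][i];
            ++count[pos];
        }
    }
    // Average the observations per position. Positions that no frame observed
    // repeat the previous value (a crude stand-in for real interpolation);
    // unobserved positions before the first observation stay at 0.
    std::vector<double> out(n, 0.0);
    double last = 0.0;
    bool seen = false;
    for (std::size_t pos = 0; pos < n; ++pos) {
        if (count[pos] > 0) {
            out[pos] = sum[pos] / count[pos];
            last = out[pos];
            seen = true;
        } else if (seen) {
            out[pos] = last;
        }
    }
    return out;
}
```

With a single frame the output is just a blocky upscaling; every additional frame with a different offset fills in samples that the first frame never saw, which is where the extra detail comes from.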

- The example is adapted from the textbook (it compiles, but it failed when I tested it; the cause is unknown).

#include <string>

#include <iostream>

#include <iomanip>

#include <opencv2/core.hpp>

#include <opencv2/core/utility.hpp>

#include <opencv2/highgui.hpp>

#include <opencv2/imgproc.hpp>

#include <opencv2/superres.hpp>

#include <opencv2/superres/optical_flow.hpp>

#include <opencv2/opencv_modules.hpp>

using namespace std;

using namespace cv;

using namespace cv::superres;

int main()

{

//1 - Set the initial parameters

string inputVideo, outputVideo;

inputVideo = "test.avi";

outputVideo = "out.avi";

const int scale = 4; //scale factor

const int iterations = 180; //number of iterations

const int temporalAreaRadius = 4; //radius of the temporal search area

double outputFps = 25.0; //output frame rate

//2 - Create an optical flow method

Ptr<DenseOpticalFlowExt> optical_flow = createOptFlow_Farneback();

if (optical_flow.empty()) return -1;

//3 - Create the super-resolution method and set its parameters

Ptr<SuperResolution> superRes;

superRes = createSuperResolution_BTVL1();

superRes->setOpticalFlow(optical_flow);

superRes->setScale(scale);

superRes->setIterations(iterations);

superRes->setTemporalAreaRadius(temporalAreaRadius);

Ptr<FrameSource> frameSource;

frameSource = createFrameSource_Video(inputVideo);

superRes->setInput(frameSource);

//Skip the first frame

Mat frame;

frameSource->nextFrame(frame);

//4 - Process the input video with super-resolution

//Print the initial options

cout << "Input:" << inputVideo << " " << frame.size() << endl;

cout << "Output:" << outputVideo << endl;

cout << "Playback speed out:" << outputFps << endl;

cout << "Scale factor:" << scale << endl;

cout << "..." << endl;

VideoWriter writer;

double start_time, finish_time;

for (int i = 0;; ++i) {

cout << '[' << setw(3) << i << "]:";

Mat result;

//Measure the processing time

start_time = getTickCount();

superRes->nextFrame(result);

finish_time = getTickCount();

cout << (finish_time - start_time) / getTickFrequency() << "secs,Size:" << result.size() << endl;

if (result.empty()) break;

//Show the result

imshow("Super Resolution", result);

if (waitKey(1000) > 0) break;

//Save the result to the output file

if (!outputVideo.empty()) {

if (!writer.isOpened())

writer.open(outputVideo, VideoWriter::fourcc('X', 'V', 'I', 'D'), outputFps, result.size());

writer << result;

}

}

writer.release();

return 0;

}

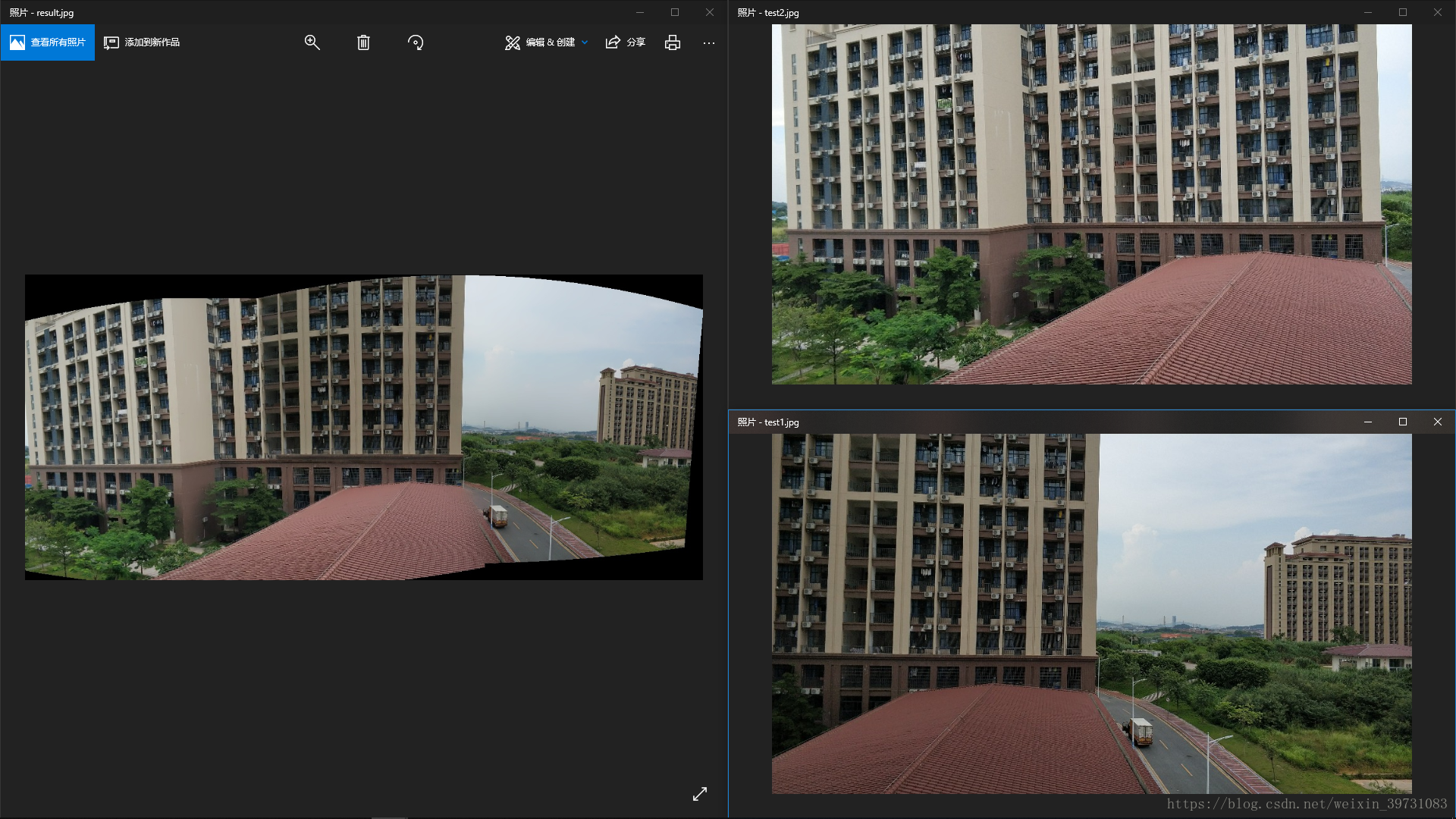

3. Stitching

- Image stitching finds the correspondences between images that overlap to some degree and produces a panorama or a high-resolution image.

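- Stitching can be divided into three main steps: ① Registration: match features across a set of images, looking for the displacement that minimizes the sum of absolute differences between overlapping pixels. ② Calibration: minimize the differences between the ideal model and the actual camera-lens system. ③ Compositing: produce the output projection; the colours of the images are also adjusted to compensate for exposure differences.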
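The registration criterion in step ① can be sketched on a single scanline: try every shift, score the overlapping samples by their absolute differences, and keep the best one. This is only an illustration with a hypothetical function name; it divides the sum by the overlap length so that small overlaps are not favoured, which is an added assumption, and the stitching listing below uses feature matching rather than this brute-force search.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <limits>
#include <vector>

// Returns the shift s such that b[i] best matches a[s + i] over the overlapping
// samples, scored by the mean absolute difference. Shifts whose overlap is
// shorter than minOverlap are not considered; returns -1 if none qualifies.
int bestShift(const std::vector<int>& a, const std::vector<int>& b,
              std::size_t minOverlap) {
    assert(minOverlap >= 1);
    int best = -1;
    double bestCost = std::numeric_limits<double>::max();
    for (std::size_t s = 0; s < a.size(); ++s) {
        const std::size_t overlap = std::min(a.size() - s, b.size());
        if (overlap < minOverlap) break; // the overlap only shrinks as s grows
        long long sad = 0;               // sum of absolute differences
        for (std::size_t i = 0; i < overlap; ++i)
            sad += std::abs(a[s + i] - b[i]);
        const double cost = static_cast<double>(sad) / static_cast<double>(overlap);
        if (cost < bestCost) {
            bestCost = cost;
            best = static_cast<int>(s);
        }
    }
    return best;
}
```

For two scanlines `{10, 20, 30, 40, 50, 60}` and `{40, 50, 60, 70, 80}` the search settles on a shift of 3, where the three overlapping samples agree exactly.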

- Rough flowchart:

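- Example (note: keep the photo resolution modest, otherwise the program crashes; also, exposure compensation does not work for me, and I do not yet know why)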

#include <string>

#include <iostream>

#include <opencv2/opencv_modules.hpp>

#include <opencv2/core/utility.hpp>

#include <opencv2/imgcodecs.hpp>

#include <opencv2/highgui.hpp>

#include <opencv2/features2d.hpp>

#include <opencv2/stitching/detail/blenders.hpp>

#include <opencv2/stitching/detail/camera.hpp>

#include <opencv2/stitching/detail/exposure_compensate.hpp>

#include <opencv2/stitching/detail/matchers.hpp>

#include <opencv2/stitching/detail/motion_estimators.hpp>

#include <opencv2/stitching/detail/seam_finders.hpp>

#include <opencv2/stitching/detail/util.hpp>

#include <opencv2/stitching/detail/warpers.hpp>

#include <opencv2/stitching/warpers.hpp>

using namespace std;

using namespace cv;

using namespace cv::detail;

int main()

{

//Default parameters

vector<String> img_names;

double scale = 1;

string features_type = "orb";//feature type: surf or orb

float match_conf = 0.3f;

float conf_thresh = 1.f;

string adjuster_method = "ray";//bundle adjustment method: reproj or ray

bool do_wave_corret = true;

WaveCorrectKind wave_corret_type = WAVE_CORRECT_HORIZ;

string warp_type = "spherical";

int expos_comp_type = ExposureCompensator::GAIN_BLOCKS;

string seam_find_type = "gc_color";

float blend_strength = 5;

int blend_type = Blender::MULTI_BAND;

string result_name = "result.jpg";

double start_time = getTickCount();

//1 - Input images

img_names.push_back("test1.jpg");

img_names.push_back("test2.jpg");

int num_images = 2;

//2 - Resize the images and find features

cout << "Finding features..." << endl;

double t = getTickCount();

Ptr<FeaturesFinder> finder;

if (features_type == "surf")

finder = makePtr<SurfFeaturesFinder>();

else if (features_type == "orb")

finder = makePtr<OrbFeaturesFinder>();

Mat full_img, img;

vector<ImageFeatures> features(num_images);

vector<Mat> images(num_images);

vector<Size> full_img_sizes(num_images);

for (int i = 0; i < num_images; ++i) {

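full_img = imread(img_names[i]);

//Stop early if an image cannot be read; resize() would fail on an empty Mat

if (full_img.empty()) {

cout << "Can't open image " << img_names[i] << endl;

return -1;

}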

full_img_sizes[i] = full_img.size();

resize(full_img, img, Size(), scale, scale);

images[i] = img.clone();

(*finder)(img, features[i]);

features[i].img_idx = i;

cout << "Features in image #" << i + 1 << ": " << features[i].keypoints.size() << endl;

}

finder->collectGarbage();

full_img.release();

img.release();

cout << "Finding features,time:" << ((getTickCount() - t) / getTickFrequency()) << "sec" << endl;

//3 - Pairwise feature matching

cout << "Pairwise matching" << endl;

t = getTickCount();

vector<MatchesInfo> pairwise_matches;

BestOf2NearestMatcher matcher(false, match_conf);

matcher(features, pairwise_matches);

matcher.collectGarbage();

cout << "Pairwise matching,time:" << ((getTickCount() - t) / getTickFrequency()) << "sec" << endl;

//4 - Keep only the images and matches that belong to the same panorama

vector<int> indices = leaveBiggestComponent(features, pairwise_matches, conf_thresh);

vector<Mat> img_subset;

vector<String> img_names_subset;

vector<Size> full_img_sizes_subset;

for (size_t i = 0; i < indices.size(); ++i) {

img_names_subset.push_back(img_names[indices[i]]);

img_subset.push_back(images[indices[i]]);

full_img_sizes_subset.push_back(full_img_sizes[indices[i]]);

}

images = img_subset;

img_names = img_names_subset;

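full_img_sizes = full_img_sizes_subset;

//Later loops run over num_images, so it must match the retained subset

num_images = static_cast<int>(img_names.size());

if (num_images < 2) {

cout << "Need more images" << endl;

return -1;

}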

//Rough estimate of the camera parameters

HomographyBasedEstimator estimator;

vector<CameraParams> cameras;

if (!estimator(features, pairwise_matches, cameras)) {

cout << "Homography estimation failed." << endl;

return -1;

}

for (size_t i = 0; i < cameras.size(); i++) {

Mat R;

cameras[i].R.convertTo(R, CV_32F);

cameras[i].R = R;

cout << "Initial intrinsic #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;

}

//5 - Refine the camera parameters globally (bundle adjustment)

Ptr<BundleAdjusterBase> adjuster;

if (adjuster_method == "reproj")

adjuster = makePtr<BundleAdjusterReproj>();

else

adjuster = makePtr<BundleAdjusterRay>();

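adjuster->setConfThresh(conf_thresh);

//Run the bundle adjustment; without this call the adjuster is created but never applied

if (!(*adjuster)(features, pairwise_matches, cameras)) {

cout << "Camera parameters adjusting failed." << endl;

return -1;

}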

//Find the median focal length

vector<double> focals;

for (size_t i = 0; i < cameras.size(); ++i) {

cout << "Camera #" << indices[i] + 1 << ":\n" << cameras[i].K() << endl;

focals.push_back(cameras[i].focal);

}

sort(focals.begin(), focals.end());

float warped_image_scale;

if ((focals.size() % 2) == 1)

warped_image_scale = static_cast<float>(focals[focals.size() / 2]);

else

warped_image_scale = static_cast<float>(focals[focals.size() / 2 - 1] + focals[focals.size() / 2])*0.5f;

//6 - Wave correction

if (do_wave_corret) {

vector<Mat> rmats;

for (size_t i = 0; i < cameras.size(); ++i)

rmats.push_back(cameras[i].R.clone());

waveCorrect(rmats, wave_corret_type);

for (size_t i = 0; i < cameras.size(); ++i)

cameras[i].R = rmats[i];

}

//7 - Warp the images

cout << "Warping images(auxiliary)..." << endl;

t = getTickCount();

vector<Point> corners(num_images);

vector<UMat> masks_warped(num_images);

vector<UMat> images_warped(num_images);

vector<Size> sizes(num_images);

vector<UMat> masks(num_images);

//Prepare the image masks

for (int i = 0; i < num_images; ++i) {

masks[i].create(images[i].size(), CV_8U);

masks[i].setTo(Scalar::all(255));

}

//Choose the map projection (warper)

Ptr<WarperCreator> warper_creator;

if (warp_type == "rectilinear")

warper_creator = makePtr<cv::CompressedRectilinearPortraitWarper>();

else if (warp_type == "cylindrical")

warper_creator = makePtr<cv::CylindricalWarper>();

else if (warp_type == "spherical")

warper_creator = makePtr<cv::SphericalWarper>();

else if (warp_type == "stereographic")

warper_creator = makePtr<cv::StereographicWarper>();

else if (warp_type == "panini")

warper_creator = makePtr<cv::PaniniPortraitWarper>(2.0f,1.0f);

if (!warper_creator) {

cout << "Can't create the following warper: " << warp_type << endl;

return 1;

}

Ptr<RotationWarper> warper = warper_creator->create(static_cast<float>(warped_image_scale * scale));

for (int i = 0; i < num_images; ++i) {

Mat_<float> K;

cameras[i].K().convertTo(K, CV_32F);

float swa = (float)scale;

K(0, 0) *= swa; K(0, 2) *= swa;

K(1, 1) *= swa; K(1, 2) *= swa;

corners[i] = warper->warp(images[i], K, cameras[i].R, INTER_LINEAR, BORDER_REFLECT, images_warped[i]);

sizes[i] = images_warped[i].size();

warper->warp(masks[i], K, cameras[i].R, INTER_NEAREST, BORDER_CONSTANT, masks_warped[i]);

}

vector<UMat> images_warped_f(num_images);

for (int i = 0; i < num_images; i++)

images_warped[i].convertTo(images_warped_f[i], CV_32F);

cout<<"Warping images, time:"<< ((getTickCount() - t) / getTickFrequency()) << "sec" << endl;

//8 - Compensate exposure errors (left disabled because it did not work in my setup)

//Ptr<ExposureCompensator> compensator = ExposureCompensator::createDefault(ExposureCompensator::GAIN_BLOCKS);

//compensator->feed(corners, images_warped, masks_warped);

//9 - Find the seam masks

//This step can take a long time; be patient

t = getTickCount();

Ptr<SeamFinder> seam_finder;

if (seam_find_type == "no")

seam_finder = makePtr<NoSeamFinder>();

else if (seam_find_type == "voronoi")

seam_finder = makePtr<VoronoiSeamFinder>();

else if (seam_find_type == "gc_color")

seam_finder = makePtr<GraphCutSeamFinder>(GraphCutSeamFinderBase::COST_COLOR);

else if (seam_find_type == "gc_colorgrad")

seam_finder = makePtr<GraphCutSeamFinder>(GraphCutSeamFinderBase::COST_COLOR_GRAD);

else if (seam_find_type == "dp_color")

seam_finder = makePtr<DpSeamFinder>(DpSeamFinder::COLOR);

else if (seam_find_type == "dp_colorgrad")

seam_finder = makePtr<DpSeamFinder>(DpSeamFinder::COLOR_GRAD);

seam_finder->find(images_warped_f, corners, masks_warped);

//Release unused memory

images.clear();

images_warped.clear();

images_warped_f.clear();

masks.clear();

cout << "Finding seam, time:" << ((getTickCount() - t) / getTickFrequency()) << "sec" << endl;

//10 - Create a blender

Ptr<Blender> blender = Blender::createDefault(blend_type, false);

Size dst_sz = resultRoi(corners, sizes).size();

float blend_width = sqrt(static_cast<float>(dst_sz.area()))*blend_strength / 100.f;

if (blend_width < 1.f) {

blender = Blender::createDefault(Blender::NO, false);

}

else if (blend_type == Blender::MULTI_BAND) {

MultiBandBlender* mb = dynamic_cast<MultiBandBlender*>(blender.get());

mb->setNumBands(static_cast<int>(ceil(log(blend_width) / log(2.)) - 1.));

cout << "Multi-band blender,number of bands:" << mb->numBands() << endl;

}

blender->prepare(corners, sizes);

//11 - Composite the images

cout << "Compositing..." << endl;

t = getTickCount();

Mat img_warped, img_warped_s;

Mat dilated_mask, seam_mask, mask, mask_warped;

for (int img_idx = 0; img_idx < num_images; img_idx++) {

cout << "Compositing image #" << indices[img_idx] + 1 << endl;

//① Read the image and resize it if necessary

full_img = imread(img_names[img_idx]);

if (abs(scale - 1) > 1e-1)

resize(full_img, img, Size(), scale, scale);

else

img = full_img;

full_img.release();

Size img_size = img.size();

Mat K;

cameras[img_idx].K().convertTo(K, CV_32F);

//② Warp the current image

warper->warp(img, K, cameras[img_idx].R, INTER_LINEAR, BORDER_REFLECT, img_warped);

//② (continued) Warp the current mask

mask.create(img_size, CV_8U);

mask.setTo(Scalar::all(255));

warper->warp(mask, K, cameras[img_idx].R, INTER_NEAREST, BORDER_CONSTANT, mask_warped);

//③ Compensate exposure errors (disabled, as in step 8)

//compensator->apply(img_idx, corners[img_idx], img_warped, mask_warped);

img_warped.convertTo(img_warped_s, CV_16S);

img_warped.release();

img.release();

mask.release();

dilate(masks_warped[img_idx], dilated_mask, Mat());

resize(dilated_mask, seam_mask, mask_warped.size());

mask_warped = seam_mask & mask_warped;

//④ Blend the image into the panorama

blender->feed(img_warped_s, mask_warped, corners[img_idx]);

}

Mat result, result_mask;

blender->blend(result, result_mask);

cout<< "Compositing, time:" << ((getTickCount() - t) / getTickFrequency()) << "sec" << endl;

imwrite(result_name, result);

cout << "Finish!!! Total time:" << ((getTickCount() - start_time) / getTickFrequency()) << "sec" << endl;

getchar();

return 0;

}
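As a worked example of the arithmetic in step 10 of the listing above, the small helper below repeats the same formula outside OpenCV: the blend width is a percentage (`blend_strength`) of the square root of the panorama area, and the number of pyramid bands is derived from its base-2 logarithm. The function name `numBandsFor` is made up for illustration, and returning 0 for the "no blending" case is an assumption of this sketch.

```cpp
#include <cmath>

// Same arithmetic as step 10 of the listing: blend width from the panorama area
// and the blend strength (in percent), then the number of bands for the
// multi-band blender. Returns 0 when the width is below one pixel, which is
// where the listing falls back to Blender::NO.
int numBandsFor(int width, int height, float blendStrength) {
    const float area = static_cast<float>(width) * static_cast<float>(height);
    const float blendWidth = std::sqrt(area) * blendStrength / 100.f;
    if (blendWidth < 1.f) return 0;
    // Note: for widths between 1 and 2 pixels this yields 0 or less,
    // and the listing does not guard against that case either.
    return static_cast<int>(std::ceil(std::log(blendWidth) / std::log(2.)) - 1.);
}
```

For a 1000×1000 panorama and a strength of 5, the blend width is 50 pixels and the formula gives 5 bands; a 4000×1000 panorama gives a width of 100 pixels and 6 bands.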

- Results

4. References

《OpenCV 图像处理》, by Gloria Bueno Garcia, Oscar Deniz Suarez and Jose Luis Espinosa Aranda, translated by 刘冰 (Liu Bing), 机械工业出版社 (China Machine Press), November 2016.