Copyright notice: This is an original article by the blogger and may not be reproduced without the blogger's permission. https://blog.csdn.net/qq_27914913/article/details/70306359

Building a BP (backpropagation) neural network with the feedforwardnet function is very simple:

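```matlab
clear;

load input_white.mat;
load output_white.mat;

input=input_white'; % transpose to an F*N matrix: F = number of features, N = number of samples
output=output_white';

%[norminput,norminputps]=mapminmax(input); % normalization
%[normoutput,normoutputps]=mapminmax(output);

net=feedforwardnet(10,'trainlm');
net = train(net,input,output);
view(net);

y=net(input); % equivalent to sim(net,input)

x1=[6.3;0.3;0.34;1.6;0.049;14;132;0.994;3.3;0.49;9.5];
y1=net(x1);

perf=perform(net,output,y); % argument order is (net, targets, outputs)
```

Here input_white.mat holds the inputs and output_white.mat holds the target outputs. x1 is a single test sample, and its prediction is stored in y1. Note that train expects an F*N input matrix (F = number of features, N = number of samples) and an SN*N target matrix, which is 1*N here because there is a single output. feedforwardnet is the function used in newer MATLAB releases; newff is the older one. Type help for usage details.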
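The commented-out mapminmax lines normalize each row (feature) of the F*N matrix independently. To my understanding, feedforwardnet's default input and output processing functions already include mapminmax, so these manual calls are optional. The plain-Python sketch below (not MathWorks code; `map_min_max` and `reverse` are hypothetical names) applies the formula y = (ymax - ymin)(x - xmin)/(xmax - xmin) + ymin row by row with the default range [-1, 1], and keeps the per-row settings so the mapping can be undone, much like the `ps` struct mapminmax returns.

```python
def map_min_max(rows, ymin=-1.0, ymax=1.0):
    """Normalize each row (feature) of an F x N matrix to [ymin, ymax].

    Returns the normalized rows plus per-row (xmin, xmax) settings.
    """
    out, settings = [], []
    for row in rows:
        xmin, xmax = min(row), max(row)
        settings.append((xmin, xmax))
        if xmax == xmin:
            # Assumption of this sketch: a constant row maps to ymin.
            out.append([ymin] * len(row))
        else:
            scale = (ymax - ymin) / (xmax - xmin)
            out.append([(x - xmin) * scale + ymin for x in row])
    return out, settings


def reverse(rows, settings, ymin=-1.0, ymax=1.0):
    """Undo map_min_max using the stored per-row settings."""
    result = []
    for row, (xmin, xmax) in zip(rows, settings):
        scale = (xmax - xmin) / (ymax - ymin)
        result.append([(y - ymin) * scale + xmin for y in row])
    return result


# Two features (rows) x three samples (columns), i.e. F = 2, N = 3.
data = [[6.3, 7.0, 5.6],
        [0.30, 0.27, 0.35]]
norm, ps = map_min_max(data)
print([round(v, 3) for v in norm[0]])  # → [0.0, 1.0, -1.0]
```

Normalizing per row rather than over the whole matrix matters because features such as 132 and 0.049 in x1 live on very different scales.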

help feedforwardnet:

Syntax:

feedforwardnet(hiddenSizes,trainFcn)

Description:

> Feedforward networks consist of a series of layers. The first layer has a connection from the network input. Each subsequent layer has a connection from the previous layer. The final layer produces the network's output.

> Feedforward networks can be used for any kind of input to output mapping. A feedforward network with one hidden layer and enough neurons in the hidden layers, can fit any finite input-output mapping problem.

> feedforwardnet(hiddenSizes,trainFcn) takes these arguments:

- hiddenSizes: Row vector of one or more hidden layer sizes (default = 10)

- trainFcn: Training function (default = 'trainlm')
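To make hiddenSizes concrete: with the usual defaults (tansig in hidden layers, purelin at the output), feedforwardnet(10) computes a = tansig(IW*x + b1) followed by y = LW*a + b2, and a row vector such as [10 5] inserts a second hidden layer. The plain-Python sketch below uses hypothetical helpers `make_layers` and `forward`, random untrained weights, and omits the input/output preprocessing MATLAB applies, so its output value is meaningless. It only shows the layer structure.

```python
import math
import random


def make_layers(n_inputs, hidden_sizes, n_outputs, seed=0):
    """Create random (weights, biases) per layer, like an untrained network.

    hidden_sizes plays the role of feedforwardnet's hiddenSizes row vector.
    """
    rng = random.Random(seed)
    sizes = [n_inputs] + list(hidden_sizes) + [n_outputs]
    layers = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        w = [[rng.uniform(-0.5, 0.5) for _ in range(fan_in)] for _ in range(fan_out)]
        b = [rng.uniform(-0.5, 0.5) for _ in range(fan_out)]
        layers.append((w, b))
    return layers


def forward(layers, x):
    """Hidden layers use tanh (the same function as tansig); the output layer is linear."""
    a = x
    for i, (w, b) in enumerate(layers):
        n = [sum(wij * aj for wij, aj in zip(row, a)) + bi for row, bi in zip(w, b)]
        is_output = i == len(layers) - 1
        a = n if is_output else [math.tanh(v) for v in n]
    return a


x1 = [6.3, 0.3, 0.34, 1.6, 0.049, 14, 132, 0.994, 3.3, 0.49, 9.5]
one_hidden = make_layers(11, [10], 1)     # structure of feedforwardnet(10)
two_hidden = make_layers(11, [10, 5], 1)  # structure of feedforwardnet([10 5])
print(len(one_hidden), len(two_hidden))   # → 2 3
print(forward(one_hidden, x1))            # one (untrained) output value
```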

help newff:

newff Create a feed-forward backpropagation network.

Obsoleted in R2010b NNET 7.0. Last used in R2010a NNET 6.0.4.

The recommended function is feedforwardnet.

This indicates that newff is obsolete, although it can still be used.

Syntax

net = newff(P,T,S)

net = newff(P,T,S,TF,BTF,BLF,PF,IPF,OPF,DDF)

Description

newff(P,T,S) takes,

- P - RxQ1 matrix of Q1 representative R-element input vectors.

- T - SNxQ2 matrix of Q2 representative SN-element target vectors.

- Si - Sizes of N-1 hidden layers, S1 to S(N-1), default = [].

(Output layer size SN is determined from T.)

and returns an N layer feed-forward backprop network.
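Because newff takes R from the rows of P and SN from the rows of T, the number of trainable weights and biases follows directly from the layer sizes. A small plain-Python helper (hypothetical name `count_parameters`) for the wine example in this post, assuming R = 11 inputs (the length of x1), one hidden layer of 10 neurons, SN = 1, and that preprocessing removes no constant input rows:

```python
def count_parameters(r, hidden_sizes, sn):
    """Count weights and biases of a fully connected feed-forward network.

    r: rows of P (input elements); sn: rows of T (output layer size).
    """
    sizes = [r] + list(hidden_sizes) + [sn]
    total = 0
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        total += fan_out * fan_in  # weight matrix is fan_out x fan_in
        total += fan_out           # one bias per neuron
    return total


print(count_parameters(11, [10], 1))     # → 131  (10*11 + 10 + 1*10 + 1)
print(count_parameters(11, [10, 5], 1))  # → 181  (120 + 55 + 6)
```

This count is the W that sets the width of trainlm's Jacobian, which is why it is worth knowing.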

newff(P,T,S,TF,BTF,BLF,PF,IPF,OPF,DDF) takes optional inputs,

- TFi - Transfer function of ith layer. Default is 'tansig' for hidden layers, and 'purelin' for output layer.

- BTF - Backprop network training function, default = 'trainlm'.

- BLF - Backprop weight/bias learning function, default = 'learngdm'.

- PF - Performance function, default = 'mse'.

- IPF - Row cell array of input processing functions. Default is {'fixunknowns','remconstantrows','mapminmax'}.

- OPF - Row cell array of output processing functions. Default is {'remconstantrows','mapminmax'}.

- DDF - Data division function, default = 'dividerand';

and returns an N layer feed-forward backprop network.
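The default DDF, 'dividerand', randomly assigns the samples (columns) to training, validation and test sets. As far as I know, its default ratios are 0.70/0.15/0.15 (set through net.divideParam.trainRatio, valRatio and testRatio). The plain-Python sketch below uses a hypothetical `divide_rand`. Its rounding is this sketch's own choice and may not match MATLAB's exactly.

```python
import random


def divide_rand(q, train_ratio=0.70, val_ratio=0.15, test_ratio=0.15, seed=None):
    """Randomly split sample indices 0..q-1 into train/val/test index lists.

    Ratios are normalized by their sum; the test set takes the remainder.
    """
    total = train_ratio + val_ratio + test_ratio
    idx = list(range(q))
    random.Random(seed).shuffle(idx)
    n_train = round(q * train_ratio / total)
    n_val = round(q * val_ratio / total)
    train = idx[:n_train]
    val = idx[n_train:n_train + n_val]
    test = idx[n_train + n_val:]
    return train, val, test


tr, va, te = divide_rand(100, seed=1)
print(len(tr), len(va), len(te))  # → 70 15 15
```

The validation set is what allows training to stop early when validation error starts rising, so results vary between runs unless the random seed is fixed.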

The transfer functions TF{i} can be any differentiable transfer function such as TANSIG, LOGSIG, or PURELIN.
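Differentiability matters because backpropagation multiplies by each layer's derivative. The plain-Python sketch below writes out tansig(n) = 2/(1 + exp(-2n)) - 1 (mathematically equal to tanh), logsig(n) = 1/(1 + exp(-n)) and purelin(n) = n, together with their derivatives expressed through the output a. It then checks those derivatives numerically. The Python function names simply mirror the MATLAB ones and are not MATLAB code.

```python
import math


def tansig(n):
    return 2.0 / (1.0 + math.exp(-2.0 * n)) - 1.0  # equals tanh(n)


def logsig(n):
    return 1.0 / (1.0 + math.exp(-n))


def purelin(n):
    return n


# Derivatives written in terms of the layer output a, as backprop uses them.
def dtansig(a):
    return 1.0 - a * a


def dlogsig(a):
    return a * (1.0 - a)


def dpurelin(a):
    return 1.0


# Compare each analytic derivative with a central finite difference at n = 0.5.
h = 1e-6
n = 0.5
for f, df in ((tansig, dtansig), (logsig, dlogsig), (purelin, dpurelin)):
    numeric = (f(n + h) - f(n - h)) / (2 * h)
    print(f.__name__, round(numeric, 6), round(df(f(n)), 6))
```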

The training function BTF can be any of the backprop training functions such as TRAINLM, TRAINBFG, TRAINRP, TRAINGD, etc.

*WARNING*: TRAINLM is the default training function because it is very fast, but it requires a lot of memory to run. If you get an "out-of-memory" error when training try doing one of these:

(1) Slow TRAINLM training, but reduce memory requirements, by setting NET.efficiency.memoryReduction to 2 or more. (See HELP TRAINLM.)

(2) Use TRAINBFG, which is slower but more memory efficient than TRAINLM.

(3) Use TRAINRP which is slower but more memory efficient than TRAINBFG.
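Why trainlm is memory hungry: Levenberg-Marquardt works with the Jacobian J of the errors with respect to every weight and bias, with one row per error term (Q samples times SN outputs) and one column per parameter. Each step solves (J^T J + mu*I) dX = -J^T e. The rough arithmetic below (hypothetical helper `jacobian_bytes`; it assumes double precision and treats memoryReduction as splitting J's rows into equal blocks, a simplification of what trainlm actually does) shows that J dominates when Q is large. By comparison, J^T J is only W x W, about 137 KB for W = 131.

```python
def jacobian_bytes(q, sn, w, memory_reduction=1, bytes_per_value=8):
    """Approximate storage for one block of the LM Jacobian.

    Rows = q * sn error terms, columns = w weights/biases. Assumes the rows
    are split into memory_reduction roughly equal blocks (a simplification).
    """
    rows = q * sn
    rows_per_block = -(-rows // memory_reduction)  # ceiling division
    return rows_per_block * w * bytes_per_value


# Hypothetical sizes: 5000 samples, 1 output, 131 parameters (an 11-10-1 net).
print(jacobian_bytes(5000, 1, 131))                      # → 5240000
print(jacobian_bytes(5000, 1, 131, memory_reduction=2))  # → 2620000
```

Memory therefore grows with both the number of samples and the number of parameters, which is why larger networks or datasets push users toward trainbfg or trainrp.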

The learning function BLF can be either of the backpropagation learning functions such as LEARNGD, or LEARNGDM.
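LEARNGD moves each weight in proportion to the gradient. LEARNGDM adds momentum; as I understand its documentation, the update is dW = mc*dWprev + (1-mc)*lr*gW. The toy plain-Python sketch below minimizes E(w) = (w - 3)^2 with both rules. The descent direction is written explicitly as -dE/dw, and lr = 0.1 and mc = 0.9 are values picked for this toy problem, not claimed defaults. `train_gd` and `train_gdm` are hypothetical names.

```python
def grad(w):
    """Gradient of the toy error E(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)


def train_gd(w, lr=0.1, steps=300):
    """learngd-style update: step proportional to the negative gradient."""
    for _ in range(steps):
        w += -lr * grad(w)
    return w


def train_gdm(w, lr=0.1, mc=0.9, steps=300):
    """learngdm-style update: dW = mc*dW_prev + (1 - mc)*lr*(-gradient)."""
    dw_prev = 0.0
    for _ in range(steps):
        dw = mc * dw_prev + (1.0 - mc) * lr * -grad(w)
        w += dw
        dw_prev = dw
    return w


print(round(train_gd(0.0), 4), round(train_gdm(0.0), 4))
```

Both rules converge to w = 3 here. Momentum smooths successive steps, which helps on error surfaces with long narrow valleys rather than on this one-dimensional toy.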

The performance function can be any of the differentiable performance functions such as MSE or MSEREG.

Examples

```matlab
[inputs,targets] = simplefitdata;
net = newff(inputs,targets,20);
net = train(net,inputs,targets);
outputs = net(inputs);
errors = outputs - targets;
perf = perform(net,outputs,targets)
```
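With the default performance function 'mse', perform returns the mean of the squared errors over all elements of the SN x Q error matrix. The current documentation lists the argument order as perform(net,targets,outputs). For MSE, swapping the two gives the same value because each error is squared. A plain-Python sketch (hypothetical `mse` helper, ignoring error weights and normalization options):

```python
def mse(targets, outputs):
    """Mean squared error over all elements of SN x Q matrices (lists of rows)."""
    errors = [t - y
              for t_row, y_row in zip(targets, outputs)
              for t, y in zip(t_row, y_row)]
    return sum(e * e for e in errors) / len(errors)


targets = [[1.0, 2.0, 3.0]]
outputs = [[1.5, 2.0, 2.0]]
print(mse(targets, outputs))  # → 0.4166666666666667  ((0.25 + 0 + 1) / 3)
```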

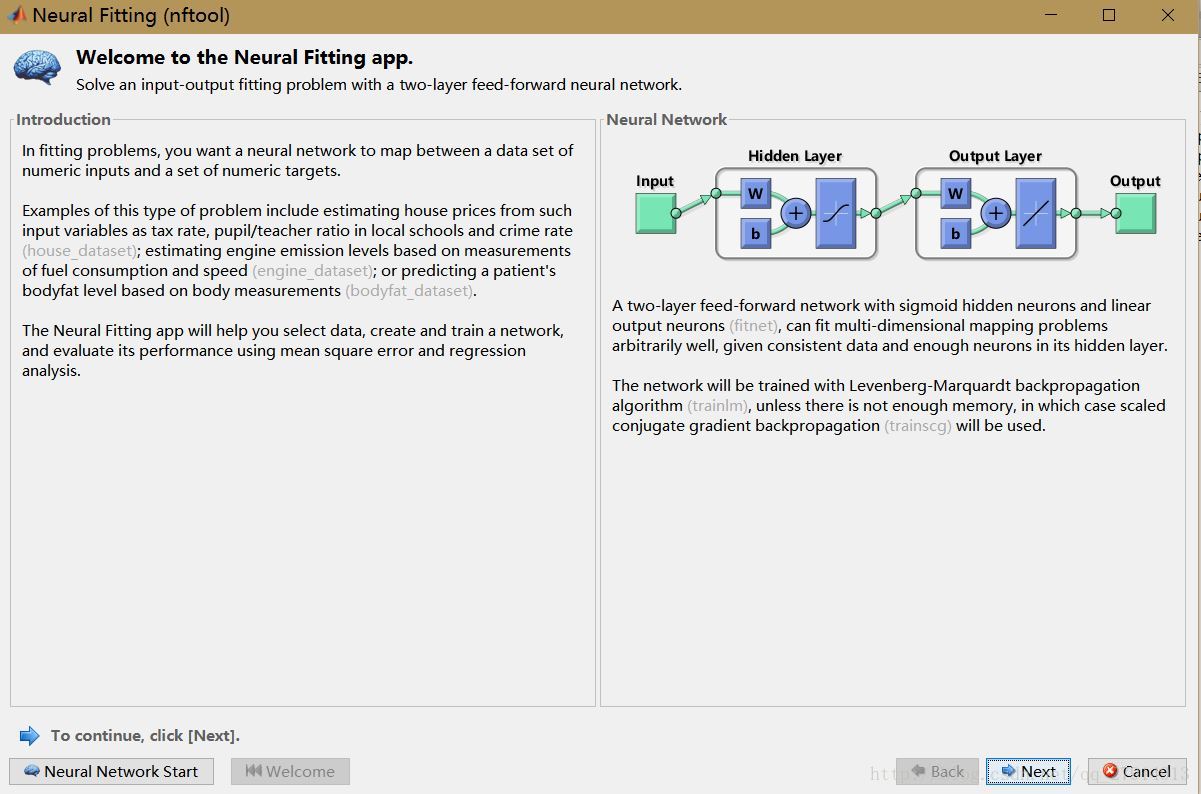

MATLAB comes with a built-in Neural Network Toolbox. Type nftool at the command line and press Enter:

Click Next to set the relevant parameters.