1、了解Storm

1.1、什么是Storm?

疑问:已经有了Hadoop,为什么还要有Storm?

源码:https://github.com/apache/storm

-

Storm是一个开源免费的分布式实时计算系统,Storm可以轻松的处理无界的数据流。

-

Storm有许多用例:实时分析、在线机器学习、连续计算、分布式RPC、ETL等等。Storm很快:每个节点每秒处理超过一百万个条消息。Storm是可扩展的、容错的,保证您的数据将被处理,并且易于设置和操作。

-

Storm只负责数据的计算,不负责数据的存储。

-

2013年前后,阿里巴巴基于storm框架,使用java语言开发了类似的流式计算框架佳作,Jstorm。2016年年底阿里巴巴将源码贡献给了Apache storm,两个项目开始合并,新的项目名字叫做storm2.x。阿里巴巴团队专注flink开发。

1.2、流式计算的架构

2、Storm架构

2.1、Storm的核心技术组成

-

Topology(拓扑)

-

一个拓扑是一个图的计算。用户在一个拓扑的每个节点包含处理逻辑,节点之间的连接显示数据应该如何在节点间传递。Topology的运行时很简单的。

-

-

Stream(流)

-

流是Storm的核心抽象。一个流是一个无界Tuple序列,Tuple可以包含整型、长整型、短整型、字节、字符、双精度数、浮点数、布尔值和字节数组。用户可以通过自定义序列化器,在本机Tuple使用自定义类型。

-

-

Spout(喷口)

-

Spout是Topology流的来源。一般Spout从外部来源读取Tuple,提交到Topology(如Kestrel队列或Twitter API)。Spout可以分为可靠的和不可靠的两种模式。Spout可以发出超过一个流。

-

-

Bolt(螺栓)

-

Topology中的所有数据的处理都在Bolt中完成。Bolt可以完成数据过滤、业务处理、连接运算、连接、访问数据库等操作。Bolt可以做简单的流转换,发出超过一个流,主要方法是execute方法。完全可以在Bolt中启动新的线程做异步处理。

-

-

Stream grouping(流分组)

-

流分组在Bolt的任务中定义流应该如何分区。

-

-

Task(任务)

-

每个Spout或Bolt在集群中执行许多任务。每个任务对应一个线程的执行,流分组定义如何从一个任务集到另一个任务集发送Tuple。

-

-

worker(工作进程)

-

Topology跨一个或多个Worker节点的进程执行。每个Worker节点的进程是一个物理的JVM和Topology执行所有任务的一个子集。

-

2.2、Storm应用的编程模型

需要我们知道的是:

-

Spout是数据的来源;

-

Bolt是执行具体业务逻辑;

-

数据的流向,是可以任意组合的;

-

一个Topology是由若干个Spout、Bolt组成。

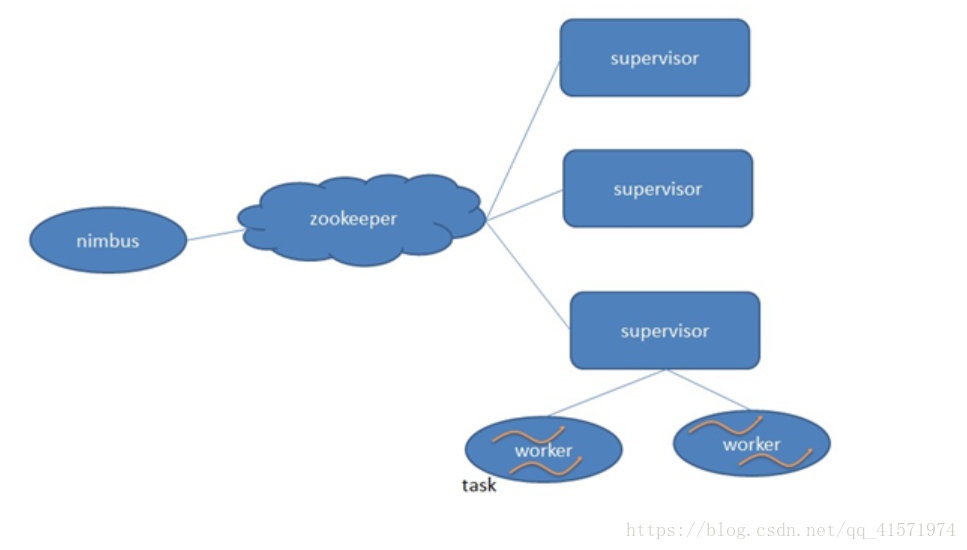

2.3、集群架构

-

Nimbus:负责资源分配和任务调度。

-

Supervisor:负责接受nimbus分配的任务,启动和停止属于自己管理的worker进程。

-

Worker:运行具体处理组件逻辑的进程。

-

Task:worker中每一个spout/bolt的线程称为一个task. 在storm0.8之后,task不再与物理线程对应,同一个spout/bolt的task可能会共享一个物理线程,该线程称为executor。

架构说明:

-

在集群架构中,用户提交到任务到storm,交由nimbus处理。

-

nimbus通过zookeeper进行查找supervisor的情况,然后选择supervisor进行执行任务。

-

supervisor会启动一个woker进程,在worker进程中启动线程进行执行具体的业务逻辑。

2.4、开发环境与生产环境

在开发Storm应用时,会面临着2套环境,一是开发环境,另一个是生产环境也是集群环境。

-

开发环境无需搭建集群,Storm已经为开发环境做了模拟支持,可以让开发人员非常轻松的在本地运行Storm应用,无需安装部分任何的环境。

-

集群环境,需要在linux机器上进行部署,然后将开发好的jar包,部署到集群中才能运行,类似于hadoop中的MapReduce程序的运行。

3、Storm快速入门

3.1、需求分析

Topology的设计:

说明:

-

RandomSentenceSpout:随机生成一个英文的字符串,模拟用户的输入;

-

SplitSentenceBolt:将接收到的句子按照空格进行分割;

-

WordCountBolt:负责将接收到上游的单词对出现的次数进行统计;

-

PrintBolt:负责将接收到的数据打印出来;

3.2、创建工程,导入依赖

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <parent> <artifactId>itcast-bigdata</artifactId> <groupId>cn.itcast.bigdata</groupId> <version>1.0.0-SNAPSHOT</version> </parent> <modelVersion>4.0.0</modelVersion> <artifactId>itcast-bigdata-storm</artifactId> <dependencies> <dependency> <groupId>org.apache.storm</groupId> <artifactId>storm-core</artifactId> <version>1.1.1</version> </dependency> <dependency> <groupId>junit</groupId> <artifactId>junit</artifactId> <version>4.12</version> <scope>test</scope> </dependency> </dependencies> </project>

3.3、编写RandomSentenceSpout

package cn.itcast.storm;

import org.apache.storm.spout.SpoutOutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichSpout;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Values;

import java.util.Map;

import java.util.Random;

/**

* Spout类需要继承BaseRichSpout抽象类实现

*/

public class RandomSentenceSpout extends BaseRichSpout {

private SpoutOutputCollector collector;

private String[] sentences = new String[]{"the cow jumped over the moon", "an apple a day keeps the doctor away",

"four score and seven years ago", "snow white and the seven dwarfs", "i am at two with nature"};

/**

* 初始化的一些操作放到这里

*

* @param conf 配置信息

* @param context 应用的上下文

* @param collector 向下游输出数据的收集器

*/

public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {

this.collector = collector;

}

/**

* 处理业务逻辑,在最后向下游输出数据

*/

public void nextTuple() {

//随机生成句子

String sentence = this.sentences[new Random().nextInt(sentences.length)];

System.out.println("生成的句子为 --> " + sentence);

//向下游输出

this.collector.emit(new Values(sentence));

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

//定义向下游输出的名称

declarer.declare(new Fields("sentence"));

}

}

3.4、编写SplitSentenceBolt

package cn.itcast.storm;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.Map;

/**

* 实现Bolt,需要继承BaseRichBolt

*/

public class SplitSentenceBolt extends BaseRichBolt{

private OutputCollector collector;

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

public void execute(Tuple input) {

// 通过Tuple的getValueByField获取上游传递的数据,其中"sentence"是定义的字段名称

String sentence = input.getStringByField("sentence");

// 进行分割处理

String[] words = sentence.split(" ");

// 向下游输出数据

for (String word : words) {

this.collector.emit(new Values(word));

}

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word"));

}

}

3.5、编写WordCountBolt

package cn.itcast.storm;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Fields;

import org.apache.storm.tuple.Tuple;

import org.apache.storm.tuple.Values;

import java.util.HashMap;

import java.util.Map;

public class WordCountBolt extends BaseRichBolt {

private Map<String, Integer> wordMaps = new HashMap<String, Integer>();

private OutputCollector collector;

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

this.collector = collector;

}

public void execute(Tuple input) {

String word = input.getStringByField("word");

Integer count = this.wordMaps.get(word);

if (null == count) {

count = 0;

}

count++;

this.wordMaps.put(word, count);

// 向下游输出数据,注意这里输出的多个字段数据

this.collector.emit(new Values(word, count));

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("word", "count"));

}

}

3.6、编写PrintBolt

package cn.itcast.storm;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple;

import java.util.Map;

public class PrintBolt extends BaseRichBolt {

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

}

public void execute(Tuple input) {

String word = input.getStringByField("word");

Integer count = input.getIntegerByField("count");

// 打印上游传递的数据

System.out.println(word + " : " + count);

// 注意:这里不需要再向下游传递数据了,因为没有下游了

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

3.7、编写WordCountTopology

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout());

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt()).shuffleGrouping("RandomSentenceSpout");

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt()).shuffleGrouping("SplitSentenceBolt");

topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

LocalCluster localCluster = new LocalCluster();

Config config = new Config();

localCluster.submitTopology("WordCountTopology", config, topology);

}

}

3.8、测试

生成的句子为 --> i am at two with nature i : 1 am : 1 at : 1 two : 1 with : 1 nature : 1 生成的句子为 --> the cow jumped over the moon the : 1 cow : 1 jumped : 1 over : 1 the : 2 moon : 1 生成的句子为 --> an apple a day keeps the doctor away an : 1 apple : 1 a : 1 day : 1 keeps : 1 the : 3 doctor : 1 away : 1

至此,一个简单的Storm应用就编写完成了。

4、集群模式

编写完的Storm的Topology应用最终需要提交到集群运行的,所以需要先部署Storm集群环境。

4.1、集群机器的分配情况

| 主机名 | IP地址 | zookeeper | nimbus | supervisor |

|---|---|---|---|---|

| node01 | 192.168.40.133 | √ | √ | |

| node02 | 192.168.40.134 | √ | √ | |

| node03 | 192.168.40.135 | √ | √ |

注意:storm集群依赖于zookeeper,所以要先保证zookeeper集群的正确运行。

4.2、搭建Storm集群环境

cd /export/software/

rz 上传apache-storm-1.1.1.tar.gz

tar -xvf apache-storm-1.1.1.tar.gz -C /export/servers/

cd /export/servers/

mv apache-storm-1.1.1/ storm

#配置环境变量

export STORM_HOME=/export/servers/storm

export PATH=${STORM_HOME}/bin:$PATH

source /etc/profile

修改配置文件:

cd /export/servers/storm/conf/ vim storm.yaml #指定zookeeper服务的地址 storm.zookeeper.servers: - "node01" - "node02" - "node03" #指定nimbus所在的机器 nimbus.seeds: ["node01"] #指定ui管理界面的端口 ui.port: 18080 #保存退出

分发到node02、node03上。

scp -r /export/servers/storm/ node02:/export/servers/ scp -r /export/servers/storm/ node03:/export/servers/ scp /etc/profile node02:/etc/ source /etc/profile #在node02上执行 scp /etc/profile node03:/etc/ source /etc/profile #在node03上执行

在node01上启动nimbus和ui,node02、node03上启动supervisor。

node01:

nohup storm nimbus > /dev/null 2>&1 & nohup storm ui > /dev/null 2>&1 & #logviewer用于在线查看日志文件 nohup storm logviewer > /dev/null 2>&1 &

node02:

nohup storm supervisor > /dev/null 2>&1 & nohup storm logviewer > /dev/null 2>&1 &

node03:

nohup storm supervisor > /dev/null 2>&1 & nohup storm logviewer > /dev/null 2>&1 &

4.3、检查集群是否正常运行

打开浏览器,访问地址:http://node01:18080/index.html

在线查看日志:

至此,storm的集群搭建完毕。

5、提交Topology到集群

5.1、修改Topology的提交代码

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout());

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt()).shuffleGrouping("RandomSentenceSpout");

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt()).shuffleGrouping("SplitSentenceBolt");

topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

// LocalCluster localCluster = new LocalCluster();

// localCluster.submitTopology("WordCountTopology", config, topology);

try {

//提交到集群

StormSubmitter.submitTopology("WordCountTopology", config, topology);

} catch (AlreadyAliveException e) {

e.printStackTrace();

} catch (InvalidTopologyException e) {

e.printStackTrace();

} catch (AuthorizationException e) {

e.printStackTrace();

}

}

}

5.2、项目打包

打包成功。

5.3、上传到服务器

cd /tmp rz上传itcast-bigdata-storm-1.0.0-SNAPSHOT.jar

5.4、提交Topology到集群

#通过storm jar命令提交jar,并且需要指定运行的入口类 storm jar itcast-bigdata-storm-1.0.0-SNAPSHOT.jar cn.itcast.storm.WordCountTopology

提交过程如下:

Running: /export/software/jdk1.8.0_141/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/export/servers/storm -Dstorm.log.dir=/export/servers/storm/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /export/servers/storm/lib/asm-5.0.3.jar:/export/servers/storm/lib/objenesis-2.1.jar:/export/servers/storm/lib/log4j-core-2.8.2.jar:/export/servers/storm/lib/reflectasm-1.10.1.jar:/export/servers/storm/lib/storm-rename-hack-1.1.1.jar:/export/servers/storm/lib/kryo-3.0.3.jar:/export/servers/storm/lib/log4j-over-slf4j-1.6.6.jar:/export/servers/storm/lib/slf4j-api-1.7.21.jar:/export/servers/storm/lib/servlet-api-2.5.jar:/export/servers/storm/lib/clojure-1.7.0.jar:/export/servers/storm/lib/log4j-slf4j-impl-2.8.2.jar:/export/servers/storm/lib/log4j-api-2.8.2.jar:/export/servers/storm/lib/disruptor-3.3.2.jar:/export/servers/storm/lib/storm-core-1.1.1.jar:/export/servers/storm/lib/minlog-1.3.0.jar:/export/servers/storm/lib/ring-cors-0.1.5.jar:itcast-bigdata-storm-1.0.0-SNAPSHOT.jar:/export/servers/storm/conf:/export/servers/storm/bin -Dstorm.jar=itcast-bigdata-storm-1.0.0-SNAPSHOT.jar -Dstorm.dependency.jars= -Dstorm.dependency.artifacts={} cn.itcast.storm.WordCountTopology

1197 [main] WARN o.a.s.u.Utils - STORM-VERSION new 1.1.1 old null

1248 [main] INFO o.a.s.StormSubmitter - Generated ZooKeeper secret payload for MD5-digest: -6891877266277720388:-8731485235457199991

1412 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node01:6627

1539 [main] INFO o.a.s.s.a.AuthUtils - Got AutoCreds []

1564 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node01:6627

1644 [main] INFO o.a.s.StormSubmitter - Uploading dependencies - jars...

1651 [main] INFO o.a.s.StormSubmitter - Uploading dependencies - artifacts...

1651 [main] INFO o.a.s.StormSubmitter - Dependency Blob keys - jars : [] / artifacts : []

1698 [main] INFO o.a.s.StormSubmitter - Uploading topology jar itcast-bigdata-storm-1.0.0-SNAPSHOT.jar to assigned location: /export/servers/storm/storm-local/nimbus/inbox/stormjar-d80d9d68-4257-4b69-b179-7ffff28134e5.jar

1742 [main] INFO o.a.s.StormSubmitter - Successfully uploaded topology jar to assigned location: /export/servers/storm/storm-local/nimbus/inbox/stormjar-d80d9d68-4257-4b69-b179-7ffff28134e5.jar

1742 [main] INFO o.a.s.StormSubmitter - Submitting topology WordCountTopology in distributed mode with conf {"storm.zookeeper.topology.auth.scheme":"digest","storm.zookeeper.topology.auth.payload":"-6891877266277720388:-8731485235457199991"}

1742 [main] WARN o.a.s.u.Utils - STORM-VERSION new 1.1.1 old 1.1.1

2553 [main] INFO o.a.s.StormSubmitter - Finished submitting topology: WordCountTopology

可以看到在界面中已经存在Topology的信息。

提示:可以点击Topology的名称查看详情。

5.5、查看运行结果

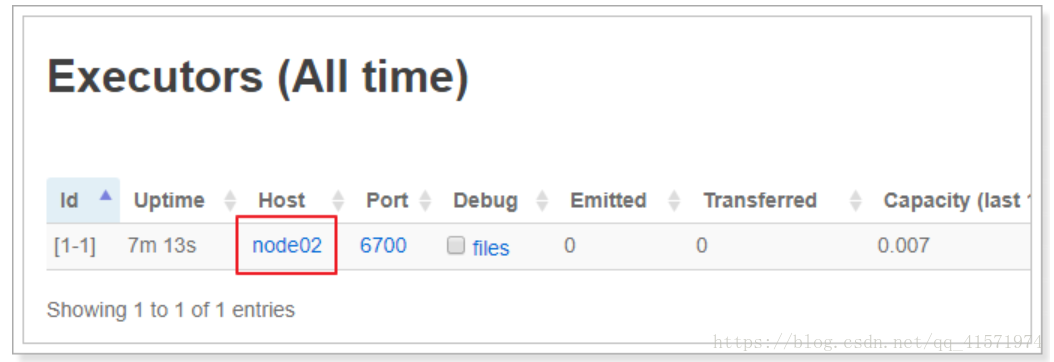

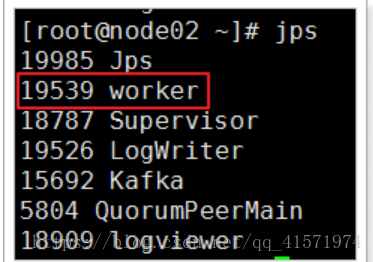

通过界面管理工具可以看到,该任务被分配到了node02上:

进入node02机器的logs目录:/export/servers/storm/logs/workers-artifacts/WordCountTopology-1-1531816634/6700

tail -f worker.log 2018-07-17 16:48:06.401 STDIO Thread-4-RandomSentenceSpout-executor[2 2] [INFO] 生成的句子为 --> the cow jumped over the moon 2018-07-17 16:48:06.415 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] the : 2507 2018-07-17 16:48:06.415 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] cow : 642 2018-07-17 16:48:06.415 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] jumped : 642 2018-07-17 16:48:06.415 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] over : 642 2018-07-17 16:48:06.416 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] the : 2508 2018-07-17 16:48:06.417 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] moon : 642 2018-07-17 16:48:06.602 STDIO Thread-4-RandomSentenceSpout-executor[2 2] [INFO] 生成的句子为 --> i am at two with nature 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] i : 625 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] am : 625 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] at : 625 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] two : 625 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] with : 625 2018-07-17 16:48:06.615 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] nature : 625 2018-07-17 16:48:06.803 STDIO Thread-4-RandomSentenceSpout-executor[2 2] [INFO] 生成的句子为 --> an apple a day keeps the doctor away 2018-07-17 16:48:06.811 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] an : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] apple : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] a : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] day : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] keeps : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] the : 2509 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] doctor : 598 2018-07-17 16:48:06.812 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] away : 598 2018-07-17 16:48:07.004 STDIO Thread-4-RandomSentenceSpout-executor[2 2] [INFO] 生成的句子为 --> an apple a day keeps the doctor away 2018-07-17 16:48:07.017 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] an : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] apple : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] a : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] day : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] keeps : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] the : 2510 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] doctor : 599 2018-07-17 16:48:07.018 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] away : 599 2018-07-17 16:48:07.205 STDIO Thread-4-RandomSentenceSpout-executor[2 2] [INFO] 生成的句子为 --> an apple a day keeps the doctor away 2018-07-17 16:48:07.215 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] an : 600 2018-07-17 16:48:07.215 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] apple : 600 2018-07-17 16:48:07.215 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] a : 600 2018-07-17 16:48:07.215 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] day : 600 2018-07-17 16:48:07.215 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] keeps : 600 2018-07-17 16:48:07.216 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] the : 2511 2018-07-17 16:48:07.216 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] doctor : 600 2018-07-17 16:48:07.216 STDIO Thread-8-PrintBolt-executor[1 1] [INFO] away : 600

可以看到任务在正常的执行。

除了通过命令行查看,也可以在界面中查看,如下:

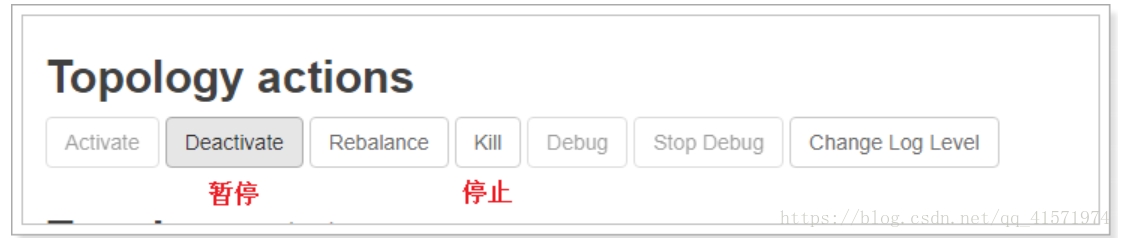

5.6、停止任务

在Storm集群中,停止任务有2种方式:(停止后,如果想继续运行该任务需要重新提交任务)

方式一:通过命令停止

#指定Topology的名称进行停止 storm kill WordCountTopology Running: /export/software/jdk1.8.0_141/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/export/servers/storm -Dstorm.log.dir=/export/servers/storm/logs -Djava.library.path=/usr/local/lib:/opt/local/lib:/usr/lib -Dstorm.conf.file= -cp /export/servers/storm/lib/asm-5.0.3.jar:/export/servers/storm/lib/objenesis-2.1.jar:/export/servers/storm/lib/log4j-core-2.8.2.jar:/export/servers/storm/lib/reflectasm-1.10.1.jar:/export/servers/storm/lib/storm-rename-hack-1.1.1.jar:/export/servers/storm/lib/kryo-3.0.3.jar:/export/servers/storm/lib/log4j-over-slf4j-1.6.6.jar:/export/servers/storm/lib/slf4j-api-1.7.21.jar:/export/servers/storm/lib/servlet-api-2.5.jar:/export/servers/storm/lib/clojure-1.7.0.jar:/export/servers/storm/lib/log4j-slf4j-impl-2.8.2.jar:/export/servers/storm/lib/log4j-api-2.8.2.jar:/export/servers/storm/lib/disruptor-3.3.2.jar:/export/servers/storm/lib/storm-core-1.1.1.jar:/export/servers/storm/lib/minlog-1.3.0.jar:/export/servers/storm/lib/ring-cors-0.1.5.jar:/export/servers/storm/conf:/export/servers/storm/bin org.apache.storm.command.kill_topology WordCountTopology 3484 [main] INFO o.a.s.u.NimbusClient - Found leader nimbus : node01:6627 3609 [main] INFO o.a.s.c.kill-topology - Killed topology: WordCountTopology

方式二:通过管理界面停止

推荐使用第二种方式。

6、核心内容详解

通过以上的学习,我们基本掌握了Storm的应用开发。

6.1、Topology的并行度(Parallelism)

问题:

-

如果Spout中产生的数据过多,下游的bolt处理不及时,怎么办?

-

同理,bolt中产生的数据过多,下游的bolt处理不及时,怎么办?

-

所提交的任务只被分配给了一个supervisor,另一个空闲,怎么办?

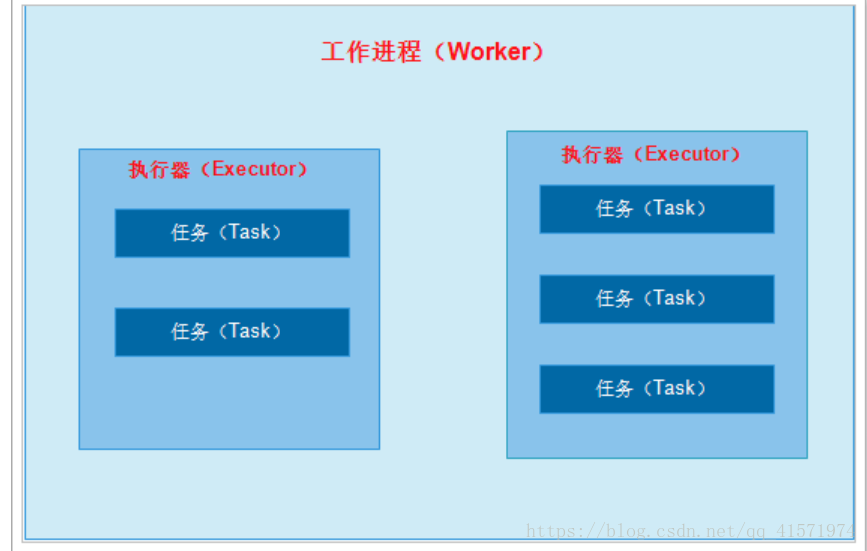

6.1.1、工作进程、执行器、任务

在了解Topology的并行度之前先要理清楚工作进程、执行器、任务的关系。

工作进程(worker):在Storm中,所提交的Topology将会在supervisor服务器上,启动独立的进程来执行。

worker数可以在config对象中设置:

config.setNumWorkers(2); // 设置工作进程数

执行器(Executor):是在worker中执行的线程,在向Topology添加spout或bolt时可以设置线程数;

如:

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(),2);

说明:数字2代表是线程数,也是并行度数,但,并不是Topology的并行度。

任务(task):是在执行器中最小的工作单元,从storm 0.8后,task不再对应的是物理线程,每个 spout 或者 bolt 都会在集群中运行很多个 task。可以在代码中设置tast数,如:

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt()).shuffleGrouping("RandomSentenceSpout").setNumTasks(4);

在拓扑的整个生命周期中每个组件的 task 数量都是保持不变的,不过每个组件的 executor 数量却是有可能会随着时间变化。在默认情况下 task 的数量是和 executor 的数量一样的,也就是说,默认情况下 Storm 会在每个线程上运行一个 task。

它们三者的关系如下:

6.1.2、案例

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(),2).setNumTasks(2);

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt(), 4).shuffleGrouping("RandomSentenceSpout").setNumTasks(4);

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt(), 2).shuffleGrouping("SplitSentenceBolt");

topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

config.setNumWorkers(2); // 设置工作进程数

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

// LocalCluster localCluster = new LocalCluster();

// localCluster.submitTopology("WordCountTopology", config, topology);

try {

//提交到集群

StormSubmitter.submitTopology("WordCountTopology", config, topology);

} catch (AlreadyAliveException e) {

e.printStackTrace();

} catch (InvalidTopologyException e) {

e.printStackTrace();

} catch (AuthorizationException e) {

e.printStackTrace();

}

}

}

以上的Topology提交到集群后,总共是有多个worker、Executor、Task?

works:2

Executor:9

Task:8

对吗?

每个执行器至少会有一个任务。所以,任务数应该是9。

6.1.3、实际开发中,这些数该如何设置?

首先,这些数字不能拍脑袋设置,需要进行计算每个spout、bolt的执行时间和需要处理的数据量大小进行计算。才能设置出合理的数字,并且这些数字需要根据业务量的变化和进行调整。

6.2、Stream grouping(流分组)

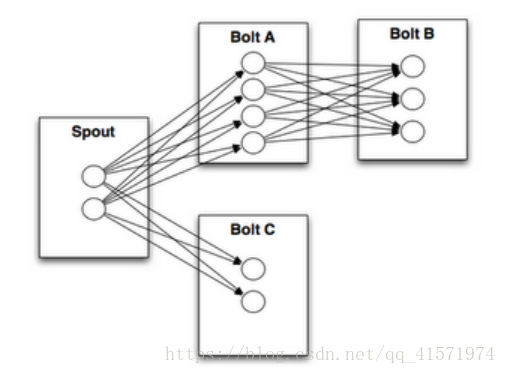

如上图所示,BoltA向BoltB发送数据时,由于BoltB中有3个任务,那么应该发给哪一个呢?

流分组就是来解决这个问题的。

Storm内置了8个流分组方式:

package org.apache.storm.topology;

import org.apache.storm.generated.GlobalStreamId;

import org.apache.storm.generated.Grouping;

import org.apache.storm.grouping.CustomStreamGrouping;

import org.apache.storm.tuple.Fields;

public interface InputDeclarer<T extends InputDeclarer> {

// 字段分组

public T fieldsGrouping(String componentId, Fields fields);

public T fieldsGrouping(String componentId, String streamId, Fields fields);

// 全局分组

public T globalGrouping(String componentId);

public T globalGrouping(String componentId, String streamId);

// 随机分组

public T shuffleGrouping(String componentId);

public T shuffleGrouping(String componentId, String streamId);

// 本地或随机分组

public T localOrShuffleGrouping(String componentId);

public T localOrShuffleGrouping(String componentId, String streamId);

// 无分组

public T noneGrouping(String componentId);

public T noneGrouping(String componentId, String streamId);

// 广播分组

public T allGrouping(String componentId);

public T allGrouping(String componentId, String streamId);

// 直接分组

public T directGrouping(String componentId);

public T directGrouping(String componentId, String streamId);

// 部分关键字分组

public T partialKeyGrouping(String componentId, Fields fields);

public T partialKeyGrouping(String componentId, String streamId, Fields fields);

// 自定义分组

public T customGrouping(String componentId, CustomStreamGrouping grouping);

public T customGrouping(String componentId, String streamId, CustomStreamGrouping grouping);

}

-

字段分组(Fields Grouping ):根据指定的字段的值进行分组,举个栗子,流按照“user-id”进行分组,那么具有相同的“user-id”的tuple会发到同一个task,而具有不同“user-id”值的tuple可能会发到不同的task上。这种情况常常用在单词计数,而实际情况是很少用到,因为如果某个字段的某个值太多,就会导致task不均衡的问题。

-

全局分组(Global grouping ):这种分组会将所有的tuple都发到一个taskid最小的task上。由于所有的tuple都发到唯一一个task上,势必在数据量大的时候会造成资源不够用的情况。

-

随机分组(Shuffle grouping):随机的将tuple分发给bolt的各个task,每个bolt实例接收到相同数量的tuple。

-

本地或随机分组(Local or shuffle grouping):和随机分组类似,但是如果目标Bolt在同一个工作进程中有一个或多个任务,那么元组将被随机分配到那些进程内task。简而言之就是如果发送者和接受者在同一个worker则会减少网络传输,从而提高整个拓扑的性能。有了此分组就完全可以不用shuffle grouping了。

-

无分组(None grouping):不指定分组就表示你不关心数据流如何分组。目前来说不分组和随机分组效果是一样的,但是最终,Storm可能会使用与其订阅的bolt或spout在相同进程的bolt来执行这些tuple。

-

广播分组(All grouping):将所有的tuple都复制之后再分发给Bolt所有的task,每一个订阅数据流的task都会接收到一份相同的完全的tuple的拷贝。

-

直接分组(Direct grouping):这是一种特殊的分组策略。这种方式分组的流意味着将由元组的生成者决定消费者的哪个task能接收该元组。

-

部分关键字分组(Partial Key grouping):流由分组中指定的字段分区,如“字段”分组,但是在两个下游Bolt之间进行负载平衡,当输入数据歪斜时,可以更好地利用资源。有了这个分组就完全可以不用Fields grouping了。

-

自定义分组(Custom Grouping):通过实现CustomStreamGrouping接口来实现自己的分组策略。

6.2.1、案例

对于我们写的WordCount的程序应该使用哪一种? 原来使用的随机分组有没有问题?

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(), 2).setNumTasks(2);

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt(), 4).shuffleGrouping("RandomSentenceSpout").setNumTasks(4);

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt(), 2).partialKeyGrouping("SplitSentenceBolt", new Fields("word"));

topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

config.setNumWorkers(1); // 设置工作进程数

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("WordCountTopology", config, topology);

// try {

// //提交到集群

// StormSubmitter.submitTopology("WordCountTopology", config, topology);

// } catch (AlreadyAliveException e) {

// e.printStackTrace();

// } catch (InvalidTopologyException e) {

// e.printStackTrace();

// } catch (AuthorizationException e) {

// e.printStackTrace();

// }

}

}

测试:

生成的句子为 --> the cow jumped over the moon 生成的句子为 --> an apple a day keeps the doctor away apple : 21 day : 21 keeps : 21 away : 21 over : 19 an : 21 a : 21 the : 71 doctor : 21 the : 72 cow : 19 jumped : 19 the : 73 moon : 19 生成的句子为 --> the cow jumped over the moon 生成的句子为 --> the cow jumped over the moon over : 20 the : 74 cow : 20 jumped : 20 the : 75 moon : 20 over : 21 the : 76 cow : 21 jumped : 21 the : 77 moon : 21 生成的句子为 --> four score and seven years ago four : 12 score : 12 years : 12 and : 26 seven : 26 ago : 12 生成的句子为 --> four score and seven years ago four : 13 score : 13 years : 13 and : 27 seven : 27 ago : 13 生成的句子为 --> the cow jumped over the moon over : 22 the : 78 cow : 22 jumped : 22 the : 79 moon : 22 生成的句子为 --> four score and seven years ago four : 14 score : 14 years : 14 and : 28 seven : 28 ago : 14 生成的句子为 --> snow white and the seven dwarfs snow : 15 white : 15 and : 29 the : 80 seven : 29 dwarfs : 15 生成的句子为 --> four score and seven years ago four : 15 score : 15 years : 15 and : 30 seven : 30 ago : 15

6.2.2、建议

Storm提供了8种分组方式,实际常用的有几种? 一般常用的有2种:

-

本地或随机分组

-

优化了网络传输,优先在同一个进程中传递。

-

-

部分关键字分组

-

实现了根据字段分组,并且考虑了下游的负载均衡。

-

7、案例

将前面我们写的WordCount程序进行优化改造,结果存储到Redis,并且通过图表的形式将各个单词出现的次数进行展现。

7.1、部署Redis服务

yum -y install cpp binutils glibc glibc-kernheaders glibc-common glibc-devel gcc make gcc-c++ libstdc++-devel tcl cd /export/software wget http://download.redis.io/releases/redis-3.0.2.tar.gz 或者 rz 上传 tar -xvf redis-3.0.2.tar.gz -C /export/servers cd /export/servers/ mv redis-3.0.2 redis cd redis make make test #这个就不要执行了,需要很长时间 make install mkdir /export/servers/redis-server cp /export/servers/redis/redis.conf /export/servers/redis-server vi /export/servers/redis-server/redis.conf # 修改如下,默认为no daemonize yes cd /export/servers/redis-server/ #启动 redis-server ./redis.conf #测试 redis-cli

7.2、导入jedis依赖

<dependency> <groupId>redis.clients</groupId> <artifactId>jedis</artifactId> <version>2.9.0</version> </dependency>

7.3、编写RedisBolt,实现存储数据到redis

package cn.itcast.storm;

import org.apache.storm.task.OutputCollector;

import org.apache.storm.task.TopologyContext;

import org.apache.storm.topology.OutputFieldsDeclarer;

import org.apache.storm.topology.base.BaseRichBolt;

import org.apache.storm.tuple.Tuple;

import redis.clients.jedis.Jedis;

import redis.clients.jedis.JedisPool;

import redis.clients.jedis.JedisPoolConfig;

import java.util.Map;

public class RedisBolt extends BaseRichBolt {

private JedisPool jedisPool;

public void prepare(Map stormConf, TopologyContext context, OutputCollector collector) {

jedisPool = new JedisPool(new JedisPoolConfig(), "node01",6379);

}

public void execute(Tuple input) {

String word = input.getStringByField("word");

Integer count = input.getIntegerByField("count");

// 保存到redis中的key

String key = "wordCount:" + word;

Jedis jedis = null;

try {

jedis = this.jedisPool.getResource();

jedis.set(key, String.valueOf(count));

} finally {

if(null != jedis){

jedis.close();

}

}

}

public void declareOutputFields(OutputFieldsDeclarer declarer) {

}

}

7.4、修改WordCountTopology类

增加RedistBolt到Topology中。具体如下:

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(), 2).setNumTasks(2);

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt(), 4).localOrShuffleGrouping("RandomSentenceSpout").setNumTasks(4);

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt(), 2).partialKeyGrouping("SplitSentenceBolt", new Fields("word"));

// topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

topologyBuilder.setBolt("RedistBolt", new RedisBolt()).localOrShuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

config.setNumWorkers(2); // 设置工作进程数

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("WordCountTopology", config, topology);

// try {

// //提交到集群

// StormSubmitter.submitTopology("WordCountTopology", config, topology);

// } catch (AlreadyAliveException e) {

// e.printStackTrace();

// } catch (InvalidTopologyException e) {

// e.printStackTrace();

// } catch (AuthorizationException e) {

// e.printStackTrace();

// }

}

}

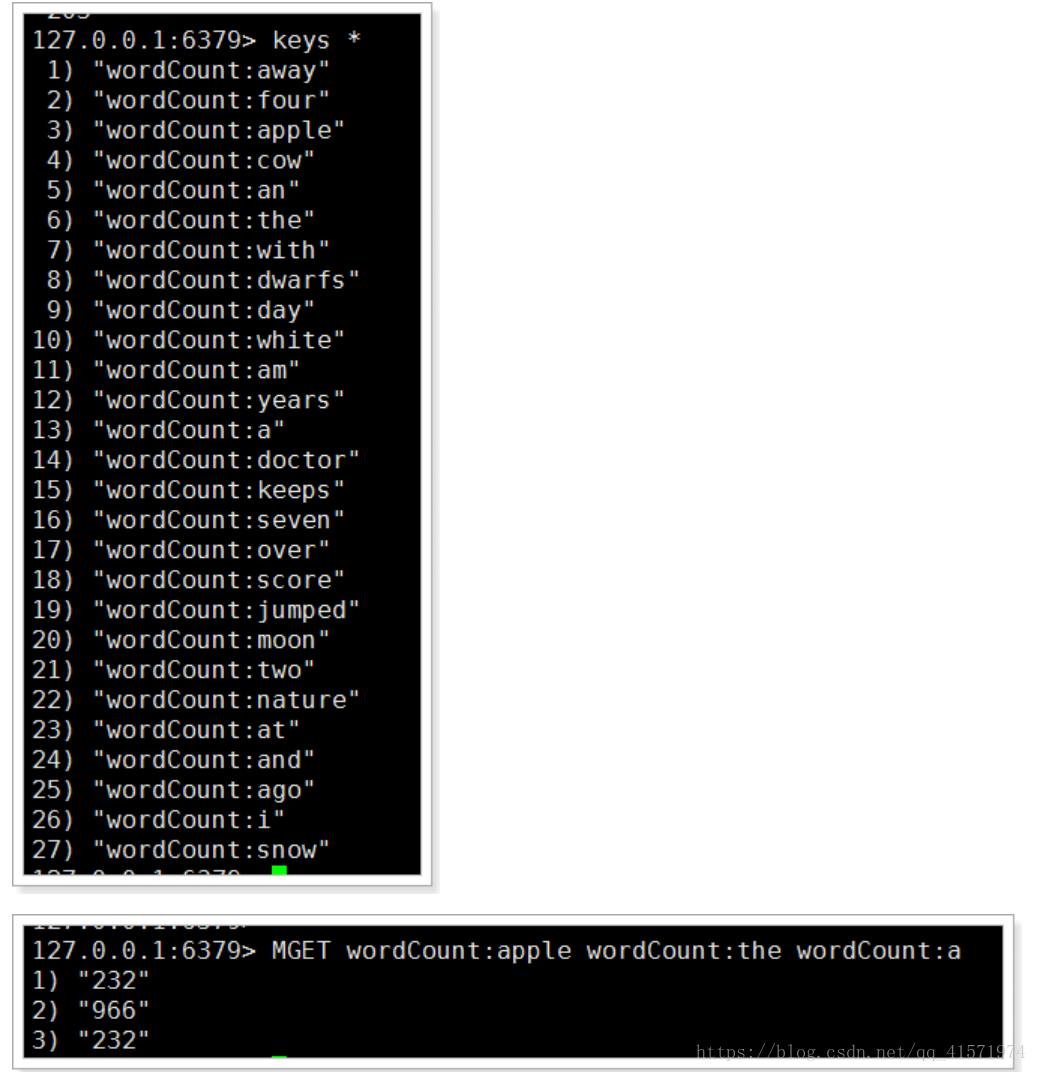

7.5、测试

可以看到已经有数据存储到了Redis中。

7.6、创建工程 itcast-wordcount-web

该工程用于展示数据。

使用技术:SpringMVC +spring-data-redis + echarts

效果:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>itcast-bigdata</artifactId>

<groupId>cn.itcast.bigdata</groupId>

<version>1.0.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<packaging>war</packaging>

<artifactId>itcast-wordcount-web</artifactId>

<dependencies>

<dependency>

<groupId>org.springframework</groupId>

<artifactId>spring-webmvc</artifactId>

<version>5.0.7.RELEASE</version>

</dependency>

<dependency>

<groupId>org.springframework.data</groupId>

<artifactId>spring-data-redis</artifactId>

<version>2.0.8.RELEASE</version>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

<!-- Jackson Json处理工具包 -->

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>2.9.4</version>

</dependency>

<!-- JSP相关 -->

<dependency>

<groupId>jstl</groupId>

<artifactId>jstl</artifactId>

<version>1.2</version>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

<version>2.5</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>javax.servlet</groupId>

<artifactId>jsp-api</artifactId>

<version>2.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>1.7.7</version>

</dependency>

</dependencies>

<build>

<finalName>${project.artifactId}</finalName>

<plugins>

<!-- 资源文件拷贝插件 -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-resources-plugin</artifactId>

<version>2.7</version>

<configuration>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

<!-- java编译插件 -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.2</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

<encoding>UTF-8</encoding>

</configuration>

</plugin>

<!-- 配置Tomcat插件 -->

<plugin>

<groupId>org.apache.tomcat.maven</groupId>

<artifactId>tomcat7-maven-plugin</artifactId>

<version>2.2</version>

<configuration>

<path>/</path>

<port>8086</port>

</configuration>

</plugin>

</plugins>

</build>

</project>

7.7、编写配置文件

7.7.1、log4j.properties

log4j.rootLogger=DEBUG,A1

log4j.appender.A1=org.apache.log4j.ConsoleAppender

log4j.appender.A1.layout=org.apache.log4j.PatternLayout

log4j.appender.A1.layout.ConversionPattern=%-d{yyyy-MM-dd HH:mm:ss} [%t] [%c]-[%p] %m%n

7.7.2、itcast-wordcount-servlet.xml

<?xml version="1.0" encoding="UTF-8"?>

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:p="http://www.springframework.org/schema/p"

xmlns:context="http://www.springframework.org/schema/context"

xmlns:mvc="http://www.springframework.org/schema/mvc"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-4.0.xsd

http://www.springframework.org/schema/mvc http://www.springframework.org/schema/mvc/spring-mvc-4.0.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-4.0.xsd">

<!-- 扫描包 -->

<context:component-scan base-package="cn.itcast.wordcount"/>

<!-- 注解驱动 -->

<mvc:annotation-driven />

<!-- 配置视图解析器 -->

<!--

Example: prefix="/WEB-INF/jsp/", suffix=".jsp", viewname="test" -> "/WEB-INF/jsp/test.jsp"

-->

<bean class="org.springframework.web.servlet.view.InternalResourceViewResolver">

<property name="prefix" value="/WEB-INF/views/"/>

<property name="suffix" value=".jsp"/>

</bean>

<!--静态资源交由web容器处理-->

<mvc:default-servlet-handler/>

</beans>

7.7.3、itcast-wordcount-redis.xml

<?xml version="1.0" encoding="UTF-8"?> <beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:p="http://www.springframework.org/schema/p" xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd"> <bean id="jedisConnectionFactory" class="org.springframework.data.redis.connection.jedis.JedisConnectionFactory" p:use-pool="true" p:hostName="node01" p:port="6379"/> <bean id="stringRedisTemplate" class="org.springframework.data.redis.core.StringRedisTemplate" p:connection-factory-ref="jedisConnectionFactory"/> </beans>

7.7.4、web.xml

需要创建webapp以及WEB-INF目录。

<?xml version="1.0" encoding="UTF-8"?> <web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://java.sun.com/xml/ns/javaee" xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd" id="WebApp_ID" version="2.5"> <display-name>itcast-wordcount</display-name> <!-- 配置SpringMVC框架入口 --> <servlet> <servlet-name>itcast-wordcount</servlet-name> <servlet-class>org.springframework.web.servlet.DispatcherServlet</servlet-class> <init-param> <param-name>contextConfigLocation</param-name> <param-value>classpath:itcast-wordcount-*.xml</param-value> </init-param> <load-on-startup>1</load-on-startup> </servlet> <servlet-mapping> <servlet-name>itcast-wordcount</servlet-name> <url-pattern>/</url-pattern> </servlet-mapping> <welcome-file-list> <welcome-file>index.jsp</welcome-file> </welcome-file-list> </web-app>

7.8、编写代码

7.8.1、编写Controller

package cn.itcast.wordcount.controller;

import cn.itcast.wordcount.service.WordCountService;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Controller;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.ResponseBody;

import java.util.List;

import java.util.Map;

@Controller

public class WordCountController {

@Autowired

private WordCountService wordCountService;

@RequestMapping("view")

public String wordCountView(){

return "view";

}

@RequestMapping("data")

@ResponseBody

public Map<String,String> queryData(){

return this.wordCountService.queryData();

}

}

7.8.2、编写WordCountService

package cn.itcast.wordcount.service;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.data.redis.core.RedisTemplate;

import org.springframework.stereotype.Service;

import java.util.HashMap;

import java.util.Map;

import java.util.Set;

@Service

public class WordCountService {

@Autowired

private RedisTemplate redisTemplate;

public Map<String, String> queryData() {

Set<String> keys = this.redisTemplate.keys("wordCount:*");

Map<String, String> result = new HashMap<>();

for (String key : keys) {

result.put(key.substring(key.indexOf(':') + 1), this.redisTemplate.opsForValue().get(key).toString());

}

return result;

}

}

7.9、编写view.jsp

在WEB-INF/view下创建view.jsp

<%@ page contentType="text/html;charset=UTF-8" language="java" %>

<html>

<head>

<title>Word Count View Page</title>

<script type="application/javascript" src="/js/jquery.min.js"></script>

<script type="application/javascript" src="/js/echarts.min.js"></script>

</head>

<body>

<div id="main" style="height: 100%"></div>

<script type="text/javascript">

// 基于准备好的dom,初始化echarts实例

var myChart = echarts.init(document.getElementById('main'));

// 指定图表的配置项和数据

var option = {

title: {

text: 'Word Count'

},

tooltip : {//鼠标悬浮弹窗提示

trigger : 'item',

show:true,

showDelay: 5,

hideDelay: 2,

transitionDuration:0,

formatter: function (params,ticket,callback) {

// console.log(params);

var res = "次数:"+params.value;

return res;

}

},

xAxis: {

data: [],

type: 'category',

axisLabel: {

interval: 0

}

},

yAxis: {},

series: [{

name: '数量',

type: 'bar',

data: [],

itemStyle: {

color: '#2AAAE3'

}

}, {

name: '折线',

type: 'line',

itemStyle: {

color: '#FF3300'

},

data: []

}

]

};

// 使用刚指定的配置项和数据显示图表。

myChart.setOption(option);

myChart.showLoading();

// 异步加载数据

$.get('/data', function (data) {

var words = [];

var counts = [];

var counts2 = [];

for (var d in data) {

words.push(d);

counts.push(data[d]);

counts2.push(eval(data[d]) + 50);

}

myChart.hideLoading();

// 填入数据

myChart.setOption({

xAxis: {

data: words

},

series: [{

name: '数量',

data: counts

},{

name: '折线',

data: counts2

}]

});

});

</script>

</body>

</html>

7.10、itcast-bigdata-storm 项目打包

现在我们需要将itcast-bigdata-storm项目打包成jar包,发布到storm集群环境中。

7.10.1、修改WordCountTopology

package cn.itcast.storm;

import cn.itcast.storm.utils.SpringApplication;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//实例化Spring容器

SpringApplication.init();

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(), 2).setNumTasks(2);

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt(), 4).localOrShuffleGrouping("RandomSentenceSpout").setNumTasks(4);

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt(), 2).partialKeyGrouping("SplitSentenceBolt", new Fields("word"));

// topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

topologyBuilder.setBolt("RedistBolt", new RedisBolt()).localOrShuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

config.setNumWorkers(2); // 设置工作进程数

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

// LocalCluster localCluster = new LocalCluster();

// localCluster.submitTopology("WordCountTopology", config, topology);

try {

//提交到集群

StormSubmitter.submitTopology("WordCountTopology", config, topology);

} catch (AlreadyAliveException e) {

e.printStackTrace();

} catch (InvalidTopologyException e) {

e.printStackTrace();

} catch (AuthorizationException e) {

e.printStackTrace();

}

}

}

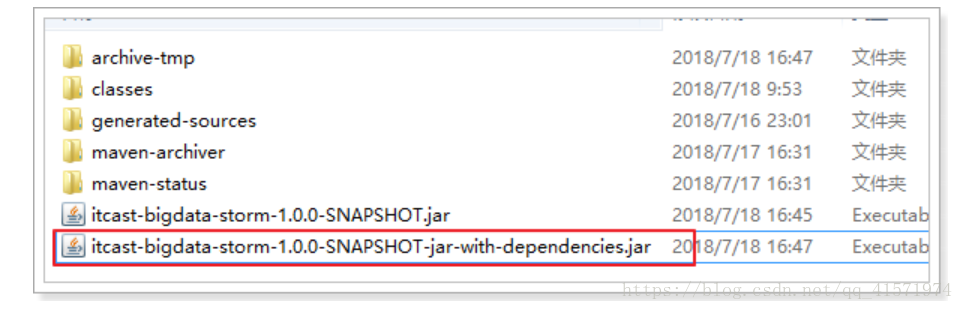

7.10.2、添加打包插件

目前的打包会存一个问题,直接执行package命令打成的jar包中不包含第三方的依赖(比如,jedis依赖)。这样我们的程序是没有办法运行的。所以需要添加如下插件来解决这个问题。

<build> <plugins> <plugin> <artifactId>maven-assembly-plugin</artifactId> <configuration> <descriptorRefs> <descriptorRef>jar-with-dependencies</descriptorRef> </descriptorRefs> <archive> <manifest> <mainClass>cn.itcast.storm.WordCountTopology</mainClass> </manifest> </archive> </configuration> <executions> <execution> <id>make-assembly</id> <phase>package</phase> <goals> <goal>single</goal> </goals> </execution> </executions> </plugin> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-compiler-plugin</artifactId> <version>3.7.0</version> <configuration> <source>1.8</source> <target>1.8</target> </configuration> </plugin> </plugins> </build>

打包的结果如下:

将该包上传到服务器,并且提交到storm。

storm jar itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar cn.itcast.storm.WordCountTopology

报错:

[root@node01 tmp]# storm jar itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar cn.itcast.storm.WordCountTopology

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/servers/storm/lib/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.ExceptionInInitializerError

at org.apache.storm.config$read_storm_config.invoke(config.clj:78)

at org.apache.storm.config$fn__908.invoke(config.clj:100)

at org.apache.storm.config__init.load(Unknown Source)

at org.apache.storm.config__init.<clinit>(Unknown Source)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at clojure.lang.RT.classForName(RT.java:2154)

at clojure.lang.RT.classForName(RT.java:2163)

at clojure.lang.RT.loadClassForName(RT.java:2182)

at clojure.lang.RT.load(RT.java:436)

at clojure.lang.RT.load(RT.java:412)

at clojure.core$load$fn__5448.invoke(core.clj:5866)

at clojure.core$load.doInvoke(core.clj:5865)

at clojure.lang.RestFn.invoke(RestFn.java:408)

at clojure.core$load_one.invoke(core.clj:5671)

at clojure.core$load_lib$fn__5397.invoke(core.clj:5711)

at clojure.core$load_lib.doInvoke(core.clj:5710)

at clojure.lang.RestFn.applyTo(RestFn.java:142)

at clojure.core$apply.invoke(core.clj:632)

at clojure.core$load_libs.doInvoke(core.clj:5753)

at clojure.lang.RestFn.applyTo(RestFn.java:137)

at clojure.core$apply.invoke(core.clj:634)

at clojure.core$use.doInvoke(core.clj:5843)

at clojure.lang.RestFn.invoke(RestFn.java:408)

at org.apache.storm.command.config_value$loading__5340__auto____12278.invoke(config_value.clj:16)

at org.apache.storm.command.config_value__init.load(Unknown Source)

at org.apache.storm.command.config_value__init.<clinit>(Unknown Source)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:348)

at clojure.lang.RT.classForName(RT.java:2154)

at clojure.lang.RT.classForName(RT.java:2163)

at clojure.lang.RT.loadClassForName(RT.java:2182)

at clojure.lang.RT.load(RT.java:436)

at clojure.lang.RT.load(RT.java:412)

at clojure.core$load$fn__5448.invoke(core.clj:5866)

at clojure.core$load.doInvoke(core.clj:5865)

at clojure.lang.RestFn.invoke(RestFn.java:408)

at clojure.lang.Var.invoke(Var.java:379)

at org.apache.storm.command.config_value.<clinit>(Unknown Source)

Caused by: java.lang.RuntimeException: java.io.IOException: Found multiple defaults.yaml resources. You're probably bundling the Storm jars with your topology jar. [jar:file:/export/servers/storm/lib/storm-core-1.1.1.jar!/defaults.yaml, jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/defaults.yaml]

at org.apache.storm.utils.Utils.findAndReadConfigFile(Utils.java:383)

at org.apache.storm.utils.Utils.readDefaultConfig(Utils.java:427)

at org.apache.storm.utils.Utils.readStormConfig(Utils.java:463)

at org.apache.storm.utils.Utils.<clinit>(Utils.java:177)

... 39 more

Caused by: java.io.IOException: Found multiple defaults.yaml resources. You're probably bundling the Storm jars with your topology jar. [jar:file:/export/servers/storm/lib/storm-core-1.1.1.jar!/defaults.yaml, jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/defaults.yaml]

at org.apache.storm.utils.Utils.getConfigFileInputStream(Utils.java:409)

at org.apache.storm.utils.Utils.findAndReadConfigFile(Utils.java:362)

... 42 more

Running: /export/software/jdk1.8.0_141/bin/java -client -Ddaemon.name= -Dstorm.options= -Dstorm.home=/export/servers/storm -Dstorm.log.dir=/export/servers/storm/logs -Djava.library.path= -Dstorm.conf.file= -cp /export/servers/storm/lib/asm-5.0.3.jar:/export/servers/storm/lib/objenesis-2.1.jar:/export/servers/storm/lib/log4j-core-2.8.2.jar:/export/servers/storm/lib/reflectasm-1.10.1.jar:/export/servers/storm/lib/storm-rename-hack-1.1.1.jar:/export/servers/storm/lib/kryo-3.0.3.jar:/export/servers/storm/lib/log4j-over-slf4j-1.6.6.jar:/export/servers/storm/lib/slf4j-api-1.7.21.jar:/export/servers/storm/lib/servlet-api-2.5.jar:/export/servers/storm/lib/clojure-1.7.0.jar:/export/servers/storm/lib/log4j-slf4j-impl-2.8.2.jar:/export/servers/storm/lib/log4j-api-2.8.2.jar:/export/servers/storm/lib/disruptor-3.3.2.jar:/export/servers/storm/lib/storm-core-1.1.1.jar:/export/servers/storm/lib/minlog-1.3.0.jar:/export/servers/storm/lib/ring-cors-0.1.5.jar:itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar:/export/servers/storm/conf:/export/servers/storm/bin -Dstorm.jar=itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar -Dstorm.dependency.jars= -Dstorm.dependency.artifacts={} cn.itcast.storm.WordCountTopology

689 [main] INFO o.s.c.s.ClassPathXmlApplicationContext - Refreshing org.springframework.context.support.ClassPathXmlApplicationContext@fe18270: startup date [Wed Jul 18 17:38:08 CST 2018]; root of context hierarchy

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/servers/storm/lib/log4j-slf4j-impl-2.8.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Exception in thread "main" java.lang.ExceptionInInitializerError

at org.apache.storm.topology.TopologyBuilder$BoltGetter.customGrouping(TopologyBuilder.java:562)

at org.apache.storm.topology.TopologyBuilder$BoltGetter.customGrouping(TopologyBuilder.java:557)

at org.apache.storm.topology.TopologyBuilder$BoltGetter.partialKeyGrouping(TopologyBuilder.java:547)

at org.apache.storm.topology.TopologyBuilder$BoltGetter.partialKeyGrouping(TopologyBuilder.java:476)

at cn.itcast.storm.WordCountTopology.main(WordCountTopology.java:27)

Caused by: java.lang.RuntimeException: java.io.IOException: Found multiple defaults.yaml resources. You're probably bundling the Storm jars with your topology jar. [jar:file:/export/servers/storm/lib/storm-core-1.1.1.jar!/defaults.yaml, jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/defaults.yaml]

at org.apache.storm.utils.Utils.findAndReadConfigFile(Utils.java:383)

at org.apache.storm.utils.Utils.readDefaultConfig(Utils.java:427)

at org.apache.storm.utils.Utils.readStormConfig(Utils.java:463)

at org.apache.storm.utils.Utils.<clinit>(Utils.java:177)

... 5 more

Caused by: java.io.IOException: Found multiple defaults.yaml resources. You're probably bundling the Storm jars with your topology jar. [jar:file:/export/servers/storm/lib/storm-core-1.1.1.jar!/defaults.yaml, jar:file:/tmp/itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar!/defaults.yaml]

at org.apache.storm.utils.Utils.getConfigFileInputStream(Utils.java:409)

at org.apache.storm.utils.Utils.findAndReadConfigFile(Utils.java:362)

错误显示,存在多个defaults.yaml文件,这是什么意思呢???

其实,在我们打包时把所有的依赖包都打进去了,其中也包含了storm相关的包,在集群环境中本来就已经存在相关的包,这样就冲突了,所以在打包时要排除掉storm相关的依赖包。

<dependency> <groupId>org.apache.storm</groupId> <artifactId>storm-core</artifactId> <version>1.1.1</version> <scope>provided</scope> </dependency>

7.11、优化提交Topology逻辑

通过前面的测试会发现,WordCountTopology中的提交逻辑需要经常的本地、集群进行切换,非常的麻烦,现在我们对这个逻辑做下优化改进,具体如下:

package cn.itcast.storm;

import org.apache.storm.Config;

import org.apache.storm.LocalCluster;

import org.apache.storm.StormSubmitter;

import org.apache.storm.generated.AlreadyAliveException;

import org.apache.storm.generated.AuthorizationException;

import org.apache.storm.generated.InvalidTopologyException;

import org.apache.storm.generated.StormTopology;

import org.apache.storm.topology.TopologyBuilder;

import org.apache.storm.tuple.Fields;

public class WordCountTopology {

public static void main(String[] args) {

//第一步,定义TopologyBuilder对象,用于构建拓扑

TopologyBuilder topologyBuilder = new TopologyBuilder();

//第二步,设置spout和bolt

topologyBuilder.setSpout("RandomSentenceSpout", new RandomSentenceSpout(), 2).setNumTasks(2);

topologyBuilder.setBolt("SplitSentenceBolt", new SplitSentenceBolt(), 4).localOrShuffleGrouping("RandomSentenceSpout").setNumTasks(4);

topologyBuilder.setBolt("WordCountBolt", new WordCountBolt(), 2).partialKeyGrouping("SplitSentenceBolt", new Fields("word"));

// topologyBuilder.setBolt("PrintBolt", new PrintBolt()).shuffleGrouping("WordCountBolt");

topologyBuilder.setBolt("RedistBolt", new RedisBolt()).localOrShuffleGrouping("WordCountBolt");

//第三步,构建Topology对象

StormTopology topology = topologyBuilder.createTopology();

Config config = new Config();

if (args == null || args.length == 0) {

// 本地模式

//第四步,提交拓扑到集群,这里先提交到本地的模拟环境中进行测试

LocalCluster localCluster = new LocalCluster();

localCluster.submitTopology("WordCountTopology", config, topology);

} else {

// 集群模式

config.setNumWorkers(2); // 设置工作进程数

try {

//提交到集群,并且将参数作为拓扑的名称

StormSubmitter.submitTopology(args[0], config, topology);

} catch (AlreadyAliveException e) {

e.printStackTrace();

} catch (InvalidTopologyException e) {

e.printStackTrace();

} catch (AuthorizationException e) {

e.printStackTrace();

}

}

}

}

集群模式下用法:

# 参数:WordCountTopology2为Topology的名称 storm jar itcast-bigdata-storm-1.0.0-SNAPSHOT-jar-with-dependencies.jar cn.itcast.storm.WordCountTopology WordCountTopology2

可以看到,已经提交成功。