Elastic Stack Introduction

Review (Redis):

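Two basic theories of distributed systems: CAP / BASE

CAP: AP

C, A, P (consistency, availability, partition tolerance): a distributed system can guarantee at most two of the three at the same time;

AP: availability and partition tolerance, with weak consistency;

BASE: BA, S, E

BA: Basically Available; S: Soft state; E: Eventual consistency;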

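Distributed systems:

Distributed storage:

NoSQL:

kv, document, column families, GraphDB

Distributed file systems: expose a file-system interface

Distributed storage: accessed through an API, cannot be mounted; ceph, glusterfs, HDFS…

NewSQL:

PingCAP: TiDB (MySQL protocol)…

Distributed computing: mapreduce, …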

HADOOP=mapreduce+HDFS, HBase(Hadoop Database)

redis:REmote DIctionary Server

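Data structures: String, List, Set, sorted_set, Hash, pubsub …

MySQL (MyISAM), Redis (k/v)

MyISAM: supports FULLTEXT indexes

B+Tree: indexes follow the leftmost-prefix rule, so they cannot efficiently answer keyword searches inside text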

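Two major problems an Internet search engine faces:

Data processing

Data storage

Google's engine:

GFS: a storage system for massive amounts of data

MapReduce: a framework for distributed data-processing applications, built on the concepts "Map" and "Reduce"

Hadoop

An open-source clone of the data-processing and data-storage layers described in the papers Google published about its search engine:

MapReduce: distributed processing framework

HDFS: distributed file storage system

Inverted index

Maps each keyword to the documents that contain it, so documents are found by keyword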
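A toy Python sketch of the idea (the tokenizer and function names are hypothetical and far simpler than Lucene's analyzers): each term points to the set of document ids that contain it, and a multi-word query intersects those sets.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list[str]:
    # Naive analyzer (an assumption, not Lucene's): lowercase, split on non-alphanumerics.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_inverted_index(docs: dict[int, str]) -> dict[str, set[int]]:
    # Map every term to the set of document ids that contain it.
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return dict(index)


def search(index: dict[str, set[int]], query: str) -> set[int]:
    # AND semantics: return the documents that contain every query term.
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


docs = {
    1: "Elasticsearch is built on Lucene",
    2: "Lucene is a Java library",
    3: "Redis is a key-value store",
}
index = build_inverted_index(docs)
print(sorted(search(index, "lucene")))       # [1, 2]
print(sorted(search(index, "Java Lucene")))  # [2]
```

A B+Tree index on the text column could not answer the "lucene" lookup without scanning every row; the inverted index answers it with one dictionary lookup.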

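Lucene: a search-engine library written in Java

https://baike.baidu.com/item/Lucene/6753302?fr=aladdin

A project of the Apache Software Foundation (ASF)

ETL: extract, transform, load

shard: a slice (partition) of an index

Document: corresponds to a row in a relational database, e.g. {title: BODY, ident: ID, user: USER}

type: corresponds to a table in a relational database (Elasticsearch 6.x allows only one type per index, and types were removed in 8.0)

index: corresponds to a database in a relational database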

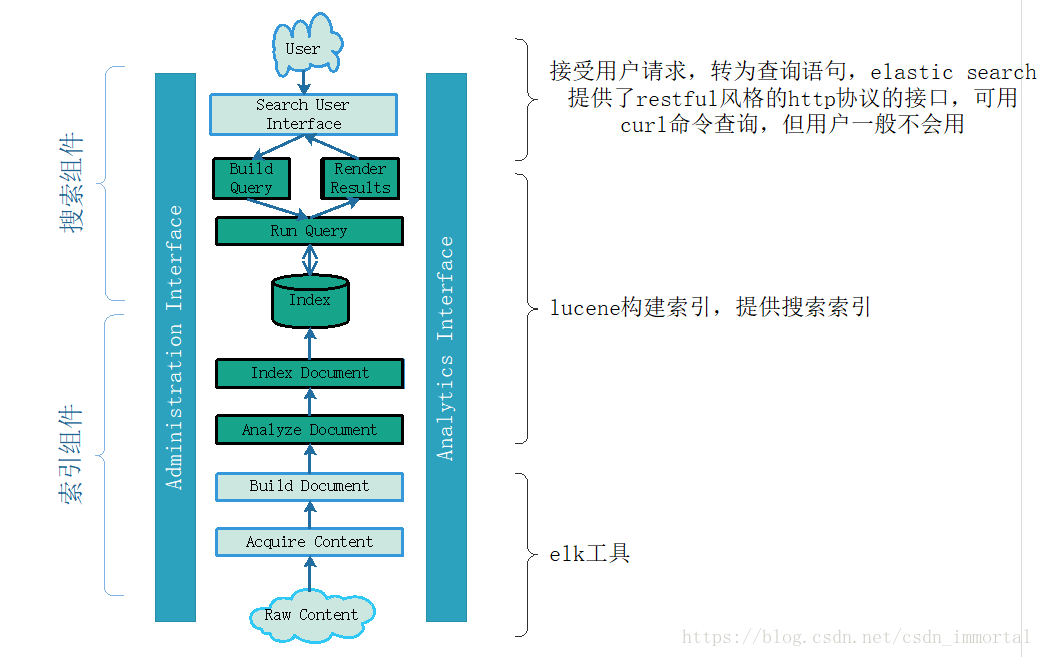

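Lucene builds indexes and provides search functionality, but it offers no search interface and cannot interact with users directly.

You can therefore develop a shell (front end) around Lucene: users talk to the shell over a network socket, and the shell converts each query into the corresponding Lucene search operation and hands it to Lucene.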

Sphinx: a search-engine library written in C++ (named after the Egyptian Sphinx)

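Solr: a search-engine server

Also an ASF project. It is only a search server and does not supply any data itself.

Data sources: a web crawler can crawl the web, convert the pages it finds into a specific format, and import them into the search engine's storage, which then exposes an API that users call to search and query. For site search, the data can come from the site's own database.

It was originally designed to run on a single machine and can be regarded as a shell around Lucene.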

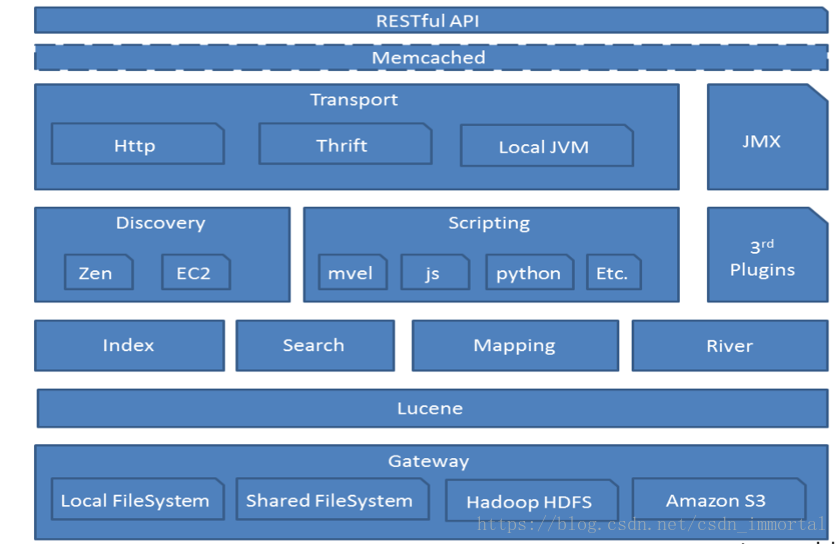

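Elasticsearch

Used here as a system for storing, analyzing, and searching logs

It runs distributed across multiple nodes and can also be regarded as a shell around Lucene; its core functionality is provided by Lucene.

Node discovery supports unicast; multicast is no longer supported.

Shard: a partition of an index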


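Each shard is a self-contained Lucene index; Elasticsearch stores documents and returns search results as JSON.

Like Redis Cluster, Elasticsearch distributes data with two-level routing instead of taking a key modulo the number of nodes. Redis Cluster hashes every key into one of a fixed 16384 slots and then assigns slots to nodes. Elasticsearch computes shard = hash(_routing) % number_of_primary_shards (a Murmur3 hash; _routing defaults to the document _id), and the cluster state records which node holds each shard. Because the formula depends on the primary shard count, that count cannot simply be changed after the index is created without reindexing (or the shrink/split APIs). Two-level routing can rely on a central node that records which cluster node holds each key, or have no central node, with every node able to route requests; in Elasticsearch every node keeps a copy of the cluster state and can forward a request to the right shard. Each shard works as an independent (non-relational) index.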
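A Python sketch contrasting the two routing schemes. The CRC-16/XMODEM function matches the checksum the Redis Cluster specification uses (its check value for "123456789" is 0x31C3). The Elasticsearch side is only illustrative: zlib.crc32 stands in for the Murmur3 hash Elasticsearch actually uses, so the shard numbers it prints will not match a real cluster.

```python
import zlib

REDIS_CLUSTER_SLOTS = 16384


def crc16_xmodem(data: bytes) -> int:
    # CRC-16/XMODEM (poly 0x1021, init 0), the checksum Redis Cluster uses for key slots.
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def redis_slot(key: str) -> int:
    # Redis Cluster: slot = CRC16(key) mod 16384 (hash tags like {user1} are ignored here).
    return crc16_xmodem(key.encode()) % REDIS_CLUSTER_SLOTS


def es_shard(routing: str, number_of_primary_shards: int) -> int:
    # Elasticsearch: shard = hash(_routing) % number_of_primary_shards.
    # zlib.crc32 is a stand-in; Elasticsearch itself uses Murmur3.
    return zlib.crc32(routing.encode()) % number_of_primary_shards


print(redis_slot("123456789"))  # 12739
print(es_shard("1", 5))         # some shard number in 0..4

# Changing the primary shard count re-maps most documents, which is why
# the number of primary shards is fixed when the index is created.
moved = sum(es_shard(str(i), 5) != es_shard(str(i), 6) for i in range(1000))
print(f"{moved} of 1000 ids would land on a different shard")
```

Redis keeps the slot count constant and moves slots between nodes; Elasticsearch keeps the shard count constant and moves whole shards between nodes. Either way, adding a node never changes which bucket a key hashes to.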

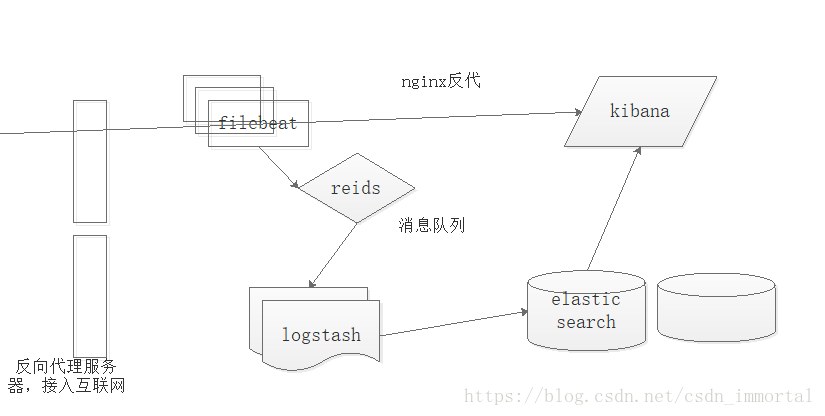

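Logstash: a process that runs on the servers that produce logs, collecting the logs and shipping them to Elasticsearch. It is heavyweight and needs at least a few hundred MB of memory. It is an ETL tool and also exposes a RESTful monitoring API.

Filebeat is another log collector. It is efficient and uses only a few MB of memory, so a common split is Filebeat collecting the logs and Logstash transforming them.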

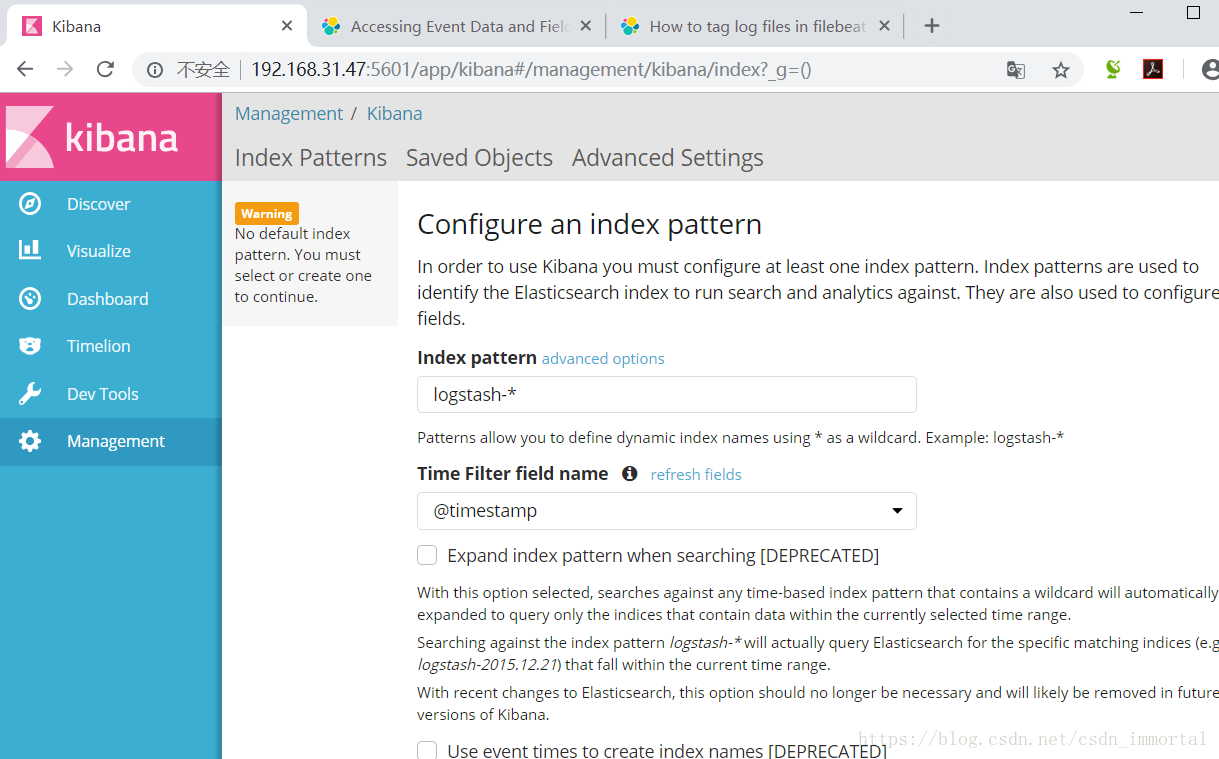

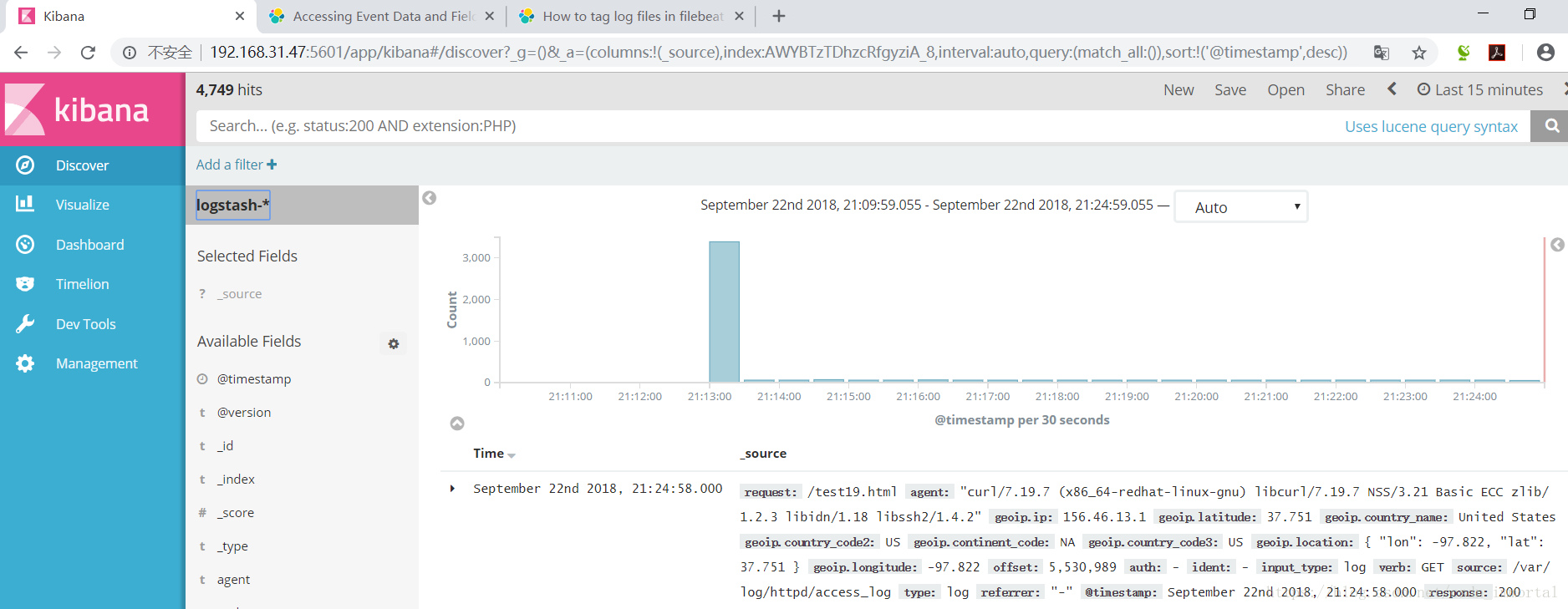

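Kibana: a visualization front end for the Elasticsearch API, similar to Grafana

Elasticsearch + Logstash + Filebeat + Kibana (ELFK) are collectively called the Elastic Stack

Figure 1 and Figure 2

Figure 3: ES architecture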

https://www.elastic.co/downloads/past-releases

[root@cos7 ~ ]#vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.31.7 node01

192.168.31.17 node02

192.168.31.27 node03

192.168.31.37 node04

Configure /etc/hosts the same way on the other nodes.

[root@cos7 ~ ]#lftp 172.18.0.1

lftp 172.18.0.1:~> cd pub/Sources/7.x86_64/elasticstack/

[root@cos7 ~ ]#yum install java-1.8.0-openjdk-devel -y

[root@cos7 ~ ]#rpm -ivh elasticsearch-5.6.8.rpm

[root@cos7 ~ ]#vim /etc/elasticsearch/elasticsearch.yml

cluster.name: myels

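node.name: node01    # use node02 / node03 on the other two nodes

path.data: /els/data

path.logs: /els/logs

network.host: 192.168.31.7    # each node's own address

http.port: 9200

discovery.zen.ping.unicast.hosts: ["node01", "node02", "node03"]

# quorum of master-eligible nodes: (3 / 2) + 1 = 2, which prevents split brain

discovery.zen.minimum_master_nodes: 2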
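The zen discovery documentation gives the rule minimum_master_nodes = (number of master-eligible nodes / 2) + 1, using integer division. A small Python check of that arithmetic for this three-node cluster (the function names are illustrative only): with a value of 1, a network partition can leave both sides electing their own master (split brain); with 2, at most one side can reach the quorum.

```python
def minimum_master_nodes(master_eligible: int) -> int:
    # Quorum rule: (master_eligible / 2) + 1, integer division.
    return master_eligible // 2 + 1


def split_brain_possible(master_eligible: int, setting: int) -> bool:
    # A partition splits the nodes into a and (n - a); split brain needs
    # both sides to reach the configured minimum and elect their own master.
    return any(
        a >= setting and master_eligible - a >= setting
        for a in range(master_eligible + 1)
    )


print(minimum_master_nodes(3))     # 2
print(split_brain_possible(3, 1))  # True
print(split_brain_possible(3, 2))  # False
```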

[root@cos7 ~ ]#vim /etc/elasticsearch/jvm.options

# Xmx represents the maximum size of total heap space

# Elasticsearch requires -Xms and -Xmx to be the same size

-Xms1g

-Xmx1g

[root@cos17 ~ ]#rpm -ivh elasticsearch-5.6.8.rpm

[root@cos17 ~ ]#vim /etc/elasticsearch/jvm.options

[root@cos27 ~ ]#rpm -ivh elasticsearch-5.6.8.rpm

[root@cos27 ~ ]#vim /etc/elasticsearch/jvm.options

[root@cos7 ~ ]#mkdir -p /els/{data,logs} && chown elasticsearch.elasticsearch /els/*

[root@cos17 ~ ]#mkdir -p /els/{data,logs} && chown elasticsearch.elasticsearch /els/*

[root@cos27 ~ ]#mkdir -p /els/{data,logs} && chown elasticsearch.elasticsearch /els/*

[root@cos7 ~ ]#systemctl start elasticsearch.service

[root@cos17 ~ ]#systemctl start elasticsearch.service

[root@cos27 ~ ]#systemctl start elasticsearch.service

[root@cos7 ~ ]#ss -ntl

LISTEN 0 128 ::ffff:192.168.31.7:9200 #HTTP port serving client requests

LISTEN 0 128 ::ffff:192.168.31.7:9300 #transport port for communication between cluster nodes

Startup is slow.

filebeat: collects logs

[root@cos7 ~ ]#curl http://node01:9200/

{

"name" : "node01",

"cluster_name" : "myels",

"cluster_uuid" : "GhLswAecRuyP55L-6a6lZw",

"version" : {

"number" : "5.6.8",

"build_hash" : "688ecce",

"build_date" : "2018-02-16T16:46:30.010Z",

"build_snapshot" : false,

"lucene_version" : "6.6.1"

},

"tagline" : "You Know, for Search"

}

[root@cos7 ~ ]#curl http://node01:9200/_cat/nodes

192.168.31.7 19 92 0 0.00 0.01 0.05 mdi * node01

192.168.31.17 7 95 0 0.00 0.01 0.10 mdi - node02

192.168.31.27 6 95 0 0.05 0.03 0.06 mdi - node03

[root@cos7 ~ ]#curl http://node01:9200/_cat/health

1537516549 15:55:49 myels green 3 3 10 5 0 0 0 0 - 100.0%
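The _cat APIs print bare columns; adding ?v (for example curl 'http://node01:9200/_cat/health?v') prints a header row. For the _cat/nodes output above, the 5.x columns are ip, heap.percent, ram.percent, cpu, load_1m, load_5m, load_15m, node.role (m = master-eligible, d = data, i = ingest), master (* marks the elected master) and name. The Python sketch below maps the health line onto the header that ES 5.x prints for _cat/health?v; the column list is an assumption to re-check with ?v on other versions.

```python
HEALTH_COLUMNS = [
    "epoch", "timestamp", "cluster", "status", "node.total", "node.data",
    "shards", "pri", "relo", "init", "unassign", "pending_tasks",
    "max_task_wait_time", "active_shards_percent",
]


def parse_cat_health(line: str) -> dict[str, str]:
    # _cat output is whitespace-separated; none of these columns contain spaces.
    values = line.split()
    if len(values) != len(HEALTH_COLUMNS):
        raise ValueError(f"expected {len(HEALTH_COLUMNS)} columns, got {len(values)}")
    return dict(zip(HEALTH_COLUMNS, values))


health = parse_cat_health("1537516549 15:55:49 myels green 3 3 10 5 0 0 0 0 - 100.0%")
print(health["status"], health["shards"], health["pri"])  # green 10 5
```

Read this way, the line says: cluster myels is green, with 3 nodes (all data nodes), 10 active shards of which 5 are primaries, and nothing relocating, initializing or unassigned.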

[root@cos7 ~ ]#curl http://node01:9200/_cat

[root@cos7 ~ ]#curl http://node01:9200/myindex/students/1?pretty=true

curl uses GET by default. The index does not exist yet, so the result is an error (404). ?pretty=true pretty-prints the JSON response.

{

"error" : {

"root_cause" : [

{

"type" : "index_not_found_exception",

"reason" : "no such index",

"resource.type" : "index_expression",

"resource.id" : "myindex",

"index_uuid" : "_na_",

"index" : "myindex"

}

],

"type" : "index_not_found_exception",

"reason" : "no such index",

"resource.type" : "index_expression",

"resource.id" : "myindex",

"index_uuid" : "_na_",

"index" : "myindex"

},

"status" : 404

}

[root@cos7 ~ ]#curl -XPUT -H 'Content-Type: application/json' http://node01:9200/myindex/students/1 -d '{"name":"dhy","age":18,"song":"rose"}'

{"_index":"myindex","_type":"students","_id":"1","_version":1,"result":"created","_shards":{"total":2,"successful":2,"failed":0},"created":true}

#_shards.total is 2: the document was written to its primary shard and to one replica shard
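The same indexing call can be made from code. A minimal Python sketch using only the standard library (the helper name build_index_request is hypothetical): it builds the PUT /{index}/{type}/{id} request without sending it, and the commented-out urlopen call assumes the cluster above is reachable. The Content-Type header is optional on 5.x but required from Elasticsearch 6.0 on.

```python
import json
import urllib.request


def build_index_request(base_url: str, index: str, doc_type: str,
                        doc_id: str, doc: dict) -> urllib.request.Request:
    # PUT /{index}/{type}/{id} with a JSON body, like the curl -XPUT call.
    url = f"{base_url}/{index}/{doc_type}/{doc_id}"
    body = json.dumps(doc).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


req = build_index_request("http://node01:9200", "myindex", "students", "1",
                          {"name": "dhy", "age": 18, "song": "rose"})
print(req.get_method(), req.full_url)
# Sending it needs a running cluster:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["result"])
```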

[root@cos7 ~ ]#curl http://node01:9200/myindex/students/1?pretty

{

"_index" : "myindex",

"_type" : "students",

"_id" : "1",

"_version" : 1,

"found" : true,

"_source" : {

"name" : "dhy",

"age" : 18,

"song" : "rose"

}

}

[root@cos7 ~ ]#curl -XGET http://node01:9200/_cat/indices

green open myindex Da2GRrF0RI2o6x5MLNyiyQ 5 1 1 0 9.5kb 4.7kb

[root@cos7 ~ ]#curl -XGET http://node01:9200/_cat/shards

myindex 4 p STARTED 0 162b 192.168.31.17 node02

myindex 4 r STARTED 0 162b 192.168.31.7 node01

myindex 3 r STARTED 1 4.2kb 192.168.31.17 node02

myindex 3 p STARTED 1 4.2kb 192.168.31.7 node01

myindex 1 r STARTED 0 162b 192.168.31.27 node03

myindex 1 p STARTED 0 162b 192.168.31.7 node01

myindex 2 p STARTED 0 162b 192.168.31.17 node02

myindex 2 r STARTED 0 162b 192.168.31.27 node03

myindex 0 p STARTED 0 162b 192.168.31.17 node02

myindex 0 r STARTED 0 162b 192.168.31.27 node03

#10 shards: 5 primaries (p) and 5 replicas (r). A primary and its replica are never placed on the same node.
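The allocation rule can be checked from the _cat/shards output itself. A Python sketch that parses the ten rows above (columns: index, shard, prirep, state, docs, store, ip, node) and reports any shard whose primary and replica share a node; it assumes all shards are assigned, since UNASSIGNED rows lack the ip and node columns.

```python
from collections import defaultdict

CAT_SHARDS = """\
myindex 4 p STARTED 0 162b 192.168.31.17 node02
myindex 4 r STARTED 0 162b 192.168.31.7 node01
myindex 3 r STARTED 1 4.2kb 192.168.31.17 node02
myindex 3 p STARTED 1 4.2kb 192.168.31.7 node01
myindex 1 r STARTED 0 162b 192.168.31.27 node03
myindex 1 p STARTED 0 162b 192.168.31.7 node01
myindex 2 p STARTED 0 162b 192.168.31.17 node02
myindex 2 r STARTED 0 162b 192.168.31.27 node03
myindex 0 p STARTED 0 162b 192.168.31.17 node02
myindex 0 r STARTED 0 162b 192.168.31.27 node03
"""


def shard_nodes(cat_output: str) -> dict[tuple[str, int], dict[str, list[str]]]:
    # Group node names by (index, shard number) and role: p = primary, r = replica.
    placement: dict[tuple[str, int], dict[str, list[str]]] = defaultdict(
        lambda: {"p": [], "r": []}
    )
    for line in cat_output.strip().splitlines():
        index, shard, prirep, _state, _docs, _store, _ip, node = line.split()
        placement[(index, int(shard))][prirep].append(node)
    return dict(placement)


def colocated_copies(placement) -> list[tuple[str, int]]:
    # Shards whose primary and a replica sit on the same node (should be empty).
    return [key for key, roles in placement.items()
            if set(roles["p"]) & set(roles["r"])]


placement = shard_nodes(CAT_SHARDS)
print(len(placement), "shards")        # 5 shards
print(colocated_copies(placement))     # []
```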

[root@cos7 ~ ]#yum install jq -y

#jq: a command-line JSON processor

[root@cos7 ~ ]#curl -s -XGET 'http://node01:9200/_search?q=song:rose' | jq .

{

"took": 9,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 1,

"max_score": 0.2876821,

"hits": [

{

"_index": "myindex",

"_type": "students",

"_id": "1",

"_score": 0.2876821,

"_source": {

"name": "dhy",

"age": 18,

"song": "rose"

}

}

]

}

}

https://www.elastic.co/guide/en/elasticsearch/reference/5.6/index.html

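Using ETL tools

Extract data, transform it, and load it into Elasticsearch.

Logstash (official website)

Beats

Tomcat servers can run the lightweight Beats shippers, such as Filebeat, which use much less memory.

Official website

Logstash has many plugins, chained into a pipeline:

input -> filter -> output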
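The input -> filter -> output model can be sketched in a few lines of Python (all names are hypothetical; this is not how Logstash is implemented): the input turns raw lines into events, each filter may enrich or drop an event, and only surviving events reach the output.

```python
from typing import Callable, Iterable, Iterator, Optional

Event = dict
Filter = Callable[[Event], Optional[Event]]


def line_input(lines: Iterable[str]) -> Iterator[Event]:
    # "input" stage: wrap each raw line in an event, roughly like stdin {}.
    for line in lines:
        yield {"message": line.rstrip("\n")}


def drop_empty(event: Event) -> Optional[Event]:
    # A filter may drop an event by returning None.
    return event if event["message"].strip() else None


def count_words(event: Event) -> Optional[Event]:
    # A filter may also enrich the event with new fields.
    return {**event, "words": len(event["message"].split())}


def run_pipeline(events: Iterable[Event], filters: list[Filter],
                 output: Callable[[Event], None]) -> None:
    for event in events:
        for apply_filter in filters:
            event = apply_filter(event)
            if event is None:
                break
        else:
            output(event)  # "output" stage: only events that survived every filter


collected: list[Event] = []
run_pipeline(line_input(["dhy hello", "", "rose"]), [drop_empty, count_words], collected.append)
print(collected)  # [{'message': 'dhy hello', 'words': 2}, {'message': 'rose', 'words': 1}]
```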

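When many Tomcat servers all connect to Elasticsearch directly, Elasticsearch has to handle too many connections. Instead, put a middleware layer in between: the Beats on the Tomcat servers send to the middleware, and the middleware forwards everything to Elasticsearch.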
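The middleware pattern is a FIFO queue between many producers and one consumer; later in this walkthrough a Redis list plays that role. A minimal in-memory Python sketch (ListBuffer and its methods are hypothetical; a real setup uses Redis list commands): shippers append to the tail and the indexer drains batches from the head, so Elasticsearch sees one steady client instead of many.

```python
from collections import deque


class ListBuffer:
    """In-memory stand-in for a Redis list used as a FIFO queue (hypothetical)."""

    def __init__(self) -> None:
        self._items: deque[str] = deque()

    def push(self, item: str) -> None:
        # Producer side (the shippers): append to the tail.
        self._items.append(item)

    def pop_batch(self, max_items: int) -> list[str]:
        # Consumer side (the indexer): take up to max_items from the head.
        batch = []
        while self._items and len(batch) < max_items:
            batch.append(self._items.popleft())
        return batch

    def __len__(self) -> int:
        return len(self._items)


buffer = ListBuffer()
for server in ("tomcat1", "tomcat2", "tomcat3"):
    for n in range(2):
        buffer.push(f"{server} log line {n}")

print(len(buffer))          # 6
print(buffer.pop_batch(4))  # the four oldest lines, in arrival order
print(len(buffer))          # 2
```

The queue also absorbs bursts: if the indexer falls behind, entries pile up in the list instead of being refused at the source.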

[root@cos47 ~ ]#yum install httpd -y

[root@cos47 ~ ]#for i in {1..20};do echo "Page $i" > /var/www/html/test$i.html;done

[root@cos47 ~ ]#vim /etc/httpd/conf/httpd.conf

LogFormat "%{X-Forwarded}i %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-Agent}i\"" combined

[root@cos47 ~ ]#systemctl start httpd

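Simulate clients with curl: -H sets a custom request header (X-Forwarded here), which the LogFormat above writes in place of the client address.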

[root@cos6 ~ ]#curl -H x-forwarded:1.1.1.1 http://192.168.31.47/test1.html

[root@cos47 html ]#tail -f /var/log/httpd/access_log

1.1.1.1 - - [21/Sep/2018:16:06:47 +0800] "GET /test1.html HTTP/1.1" 200 7 "-" "curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2"

[root@cos6 ~ ]#while true;do curl -H "X-forwarded:$[$RANDOM%222+1].$[$RANDOM%225].$[$RANDOM%118].1" http://192.168.31.47/test$[$RANDOM%20+1].html;sleep .5;done

[root@cos47 html ]#tail -f /var/log/httpd/access_log

120.120.33.1 - - [21/Sep/2018:16:11:30 +0800] "GET /test6.html HTTP/1.1" 200 7 "-" "curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2"

[root@cos47 ~ ]#yum install java-1.8.0-openjdk -y

[root@cos47 ~ ]#rpm -ivh logstash-5.6.8.rpm

[root@cos47 ~ ]#rpm -ql logstash | wc -l

11293

[root@cos47 ~ ]#ls /etc/logstash/

conf.d/ jvm.options log4j2.properties logstash.yml startup.options

[root@cos47 ~ ]#rpm -ql logstash |grep logstash$

/usr/share/logstash/bin/logstash

/var/lib/logstash

/var/log/logstash

[root@cos47 ~ ]#vim /etc/profile.d/logstash.sh

export PATH=/usr/share/logstash/bin/:$PATH

[root@cos47 ~ ]#exec bash

[root@cos47 ~ ]#logstash -h

(slow to start)

[root@cos47 ~ ]#cd /etc/logstash/conf.d

[root@cos47 conf.d ]#vim stdin-out.conf

input {

stdin{}

}

output {

stdout{

codec => rubydebug    # print each event as a structured hash; without this line the default "line" codec prints plain text

}

}

Logstash documentation:

https://www.elastic.co/guide/en/logstash/5.6/index.html

[root@cos47 conf.d ]#logstash -f stdin-out.conf -t

#-t: test the configuration syntax and exit

[root@cos47 conf.d ]#logstash -f stdin-out.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

The stdin plugin is now waiting for input:

dhy

2018-09-21T08:27:08.200Z cos47.localdomain dhy

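Elasticsearch only stores JSON documents, so each event has to become a structured document like the one below (printed with the rubydebug codec):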

[root@cos47 conf.d ]#logstash -f stdin-out.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

The stdin plugin is now waiting for input:

dhy hello

{

"@version" => "1",

"host" => "cos47.localdomain",

"@timestamp" => 2018-09-21T08:33:06.781Z,

"message" => "dhy hello"

}

https://www.elastic.co/guide/en/logstash/5.6/plugins-inputs-file.html

Make Logstash read data from a file:

[root@cos47 conf.d ]#vim file-out.conf

input{

file{

path => ["/var/log/httpd/access_log"]

start_position => "beginning"

}

}

output {

stdout {

codec => rubydebug

}

}

[root@cos47 conf.d ]#logstash -f file-out.conf -t

#syntax check

[root@cos47 conf.d ]#logstash -f file-out.conf

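The output here is hard to use: the whole log line sits in the message field. Logstash filters can match it and transform it into separate fields.

Official docs: https://www.elastic.co/guide/en/logstash/5.6/plugins-filters-grok.html

Logstash also ships with built-in patterns, so you don't have to write them yourself. For reference: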

[root@cos47 conf.d ]#rpm -ql logstash | grep patterns

[root@cos47 conf.d ]#less /usr/share/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-4.1.2/patterns/grok-patterns

[root@cos47 conf.d ]#cat /usr/share/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-4.1.2/patterns/httpd

HTTPDUSER %{EMAILADDRESS}|%{USER}

HTTPDERROR_DATE %{DAY} %{MONTH} %{MONTHDAY} %{TIME} %{YEAR}

# Log formats

HTTPD_COMMONLOG %{IPORHOST:clientip} %{HTTPDUSER:ident} %{HTTPDUSER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

HTTPD_COMBINEDLOG %{HTTPD_COMMONLOG} %{QS:referrer} %{QS:agent}

# Error logs

HTTPD20_ERRORLOG \[%{HTTPDERROR_DATE:timestamp}\] \[%{LOGLEVEL:loglevel}\] (?:\[client %{IPORHOST:clientip}\] ){0,1}%{GREEDYDATA:message}

HTTPD24_ERRORLOG \[%{HTTPDERROR_DATE:timestamp}\] \[%{WORD:module}:%{LOGLEVEL:loglevel}\] \[pid %{POSINT:pid}(:tid %{NUMBER:tid})?\]( \(%{POSINT:proxy_errorcode}\)%{DATA:proxy_message}:)?( \[client %{IPORHOST:clientip}:%{POSINT:clientport}\])?( %{DATA:errorcode}:)? %{GREEDYDATA:message}

HTTPD_ERRORLOG %{HTTPD20_ERRORLOG}|%{HTTPD24_ERRORLOG}

# Deprecated

COMMONAPACHELOG %{HTTPD_COMMONLOG}

COMBINEDAPACHELOG %{HTTPD_COMBINEDLOG}

https://www.elastic.co/guide/en/logstash/5.6/plugins-filters-grok.html#plugins-filters-grok-match

[root@cos47 conf.d ]#vim file-out.conf

input{

file{

path => ["/var/log/httpd/access_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

}

}

output {

stdout {

codec => rubydebug

}

}

[root@cos47 conf.d ]#logstash -f file-out.conf

{

"request" => "/test7.html",

"agent" => "\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"auth" => "-",

"ident" => "-",

"verb" => "GET",

"message" => "114.192.25.1 - - [21/Sep/2018:16:57:30 +0800] \"GET /test7.html HTTP/1.1\" 200 7 \"-\" \"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"path" => "/var/log/httpd/access_log",

"referrer" => "\"-\"",

"@timestamp" => 2018-09-21T08:57:31.304Z,

"response" => "200",

"bytes" => "7",

"clientip" => "114.192.25.1",

"@version" => "1",

"host" => "cos47.localdomain",

"httpversion" => "1.1",

"timestamp" => "21/Sep/2018:16:57:30 +0800"

}
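To see what grok does, here is a rough Python regex approximation of %{HTTPD_COMBINEDLOG} applied to the log line above. It is a simplified sketch (IPORHOST, HTTPDUSER and the other sub-patterns are reduced to \S+ or similar), not the actual grok definitions; like grok's QS pattern, it keeps the surrounding quotes in referrer and agent.

```python
import re
from typing import Optional

# Simplified regex equivalent of %{HTTPD_COMBINEDLOG}; not the real grok patterns.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'(?P<referrer>"[^"]*") (?P<agent>"[^"]*")'
)


def parse_combined(line: str) -> Optional[dict[str, str]]:
    match = COMBINED.fullmatch(line)
    if match is None:
        return None  # grok would tag such an event with _grokparsefailure
    return {k: v for k, v in match.groupdict().items() if v is not None}


line = ('114.192.25.1 - - [21/Sep/2018:16:57:30 +0800] "GET /test7.html HTTP/1.1" 200 7 "-" '
        '"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC '
        'zlib/1.2.3 libidn/1.18 libssh2/1.4.2"')
fields = parse_combined(line)
print(fields["clientip"], fields["verb"], fields["request"], fields["response"])
# 114.192.25.1 GET /test7.html 200
```

All captured values are strings, just like "response" => "200" and "bytes" => "7" in the event above; converting them to numbers would be a separate step.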

[root@cos47 conf.d ]#vim file-out.conf

input{

file{

path => ["/var/log/httpd/access_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

}

output {

stdout {

codec => rubydebug

}

}

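This removes the timestamp field and replaces @timestamp (the time Logstash processed the event) with the time parsed from timestamp (when httpd wrote the log entry). @timestamp is always stored in UTC, so a log time of 17:10:52 +0800 shows up as 2018-09-21T09:10:52.000Z.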

[root@cos47 conf.d ]#logstash -f file-out.conf

{

"request" => "/test11.html",

"agent" => "\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"auth" => "-",

"ident" => "-",

"verb" => "GET",

"path" => "/var/log/httpd/access_log",

"referrer" => "\"-\"",

"@timestamp" => 2018-09-21T09:10:52.000Z,

"response" => "200",

"bytes" => "8",

"clientip" => "154.121.23.1",

"@version" => "1",

"host" => "cos47.localdomain",

"httpversion" => "1.1"

}
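The date filter step can be sketched in Python with the standard library: parse the httpd timestamp together with its +0800 offset, then convert it to UTC, which is how @timestamp is stored. The strptime format is Python's counterpart of the Joda pattern in the config, not something Logstash uses, and %b assumes English month abbreviations.

```python
from datetime import datetime, timezone


def to_event_timestamp(apache_time: str) -> datetime:
    # Parse the httpd time with its offset, then normalize to UTC like @timestamp.
    local = datetime.strptime(apache_time, "%d/%b/%Y:%H:%M:%S %z")
    return local.astimezone(timezone.utc)


ts = to_event_timestamp("21/Sep/2018:16:57:30 +0800")
print(ts.isoformat())  # 2018-09-21T08:57:30+00:00
```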

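The geoip filter plugin resolves the geographic location of IP addresses.

It looks up each IP address's location and latitude/longitude in an address database; here the geoip filter reads the MaxMind GeoLite2 database.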

https://www.elastic.co/guide/en/logstash/5.6/plugins-filters-geoip.html

The GeoIP filter adds information about the geographical location of IP addresses, based on data from the Maxmind GeoLite2 databases.

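https://dev.maxmind.com/geoip/geoip2/geolite2/ Download the MaxMind GeoLite2 database here. Since the end of 2019, MaxMind requires a free account and license key for GeoLite2 downloads, so the direct wget URL below no longer works.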

[root@cos47 conf.d ]#wget http://geolite.maxmind.com/download/geoip/database/GeoLite2-City.tar.gz

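The database is updated weekly, because IP addresses are reassigned when they are bought or their allocation expires. Use a symlink that points at the current copy, so the database can be updated periodically without changing the Logstash configuration.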

[root@cos47 conf.d ]#mkdir /etc/logstash/maxmind/

[root@cos47 conf.d ]#mv GeoLite2-City.tar.gz /etc/logstash/maxmind/

[root@cos47 conf.d ]#cd /etc/logstash/maxmind/

[root@cos47 maxmind ]#tar xf GeoLite2-City.tar.gz

[root@cos47 maxmind ]#ls

GeoLite2-City_20180911 GeoLite2-City.tar.gz

[root@cos47 maxmind ]#cd GeoLite2-City_20180911/

[root@cos47 GeoLite2-City_20180911 ]#ls

COPYRIGHT.txt GeoLite2-City.mmdb LICENSE.txt README.txt


[root@cos47 maxmind ]#ln -sv GeoLite2-City_20180911/GeoLite2-City.mmdb ./

‘./GeoLite2-City.mmdb’ -> ‘GeoLite2-City_20180911/GeoLite2-City.mmdb’

[root@cos47 maxmind ]#ll

total 26520

drwxr-xr-x 2 2000 2000 90 Sep 12 05:17 GeoLite2-City_20180911

lrwxrwxrwx 1 root root 41 Sep 21 17:35 GeoLite2-City.mmdb -> GeoLite2-City_20180911/GeoLite2-City.mmdb

-rw-r--r-- 1 root root 27154441 Sep 12 05:17 GeoLite2-City.tar.gz
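The symlink approach above can be automated for the weekly refresh. A Python sketch (the helper repoint_symlink and the second release directory name are hypothetical): create the new link under a temporary name and rename it over the old link, so the path Logstash reads never disappears mid-update. Whether a running geoip filter reloads the file on its own is not covered here; assume Logstash needs a restart.

```python
import os
import tempfile


def repoint_symlink(link_path: str, new_target: str) -> None:
    # Create the new link under a temporary name, then rename it over the old one.
    # os.replace is an atomic rename on POSIX, so readers never see a missing link.
    tmp_link = link_path + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(new_target, tmp_link)
    os.replace(tmp_link, link_path)


with tempfile.TemporaryDirectory() as maxmind_dir:
    # The second release date is hypothetical, standing in for next week's download.
    for release in ("GeoLite2-City_20180911", "GeoLite2-City_20180918"):
        os.makedirs(os.path.join(maxmind_dir, release))
        open(os.path.join(maxmind_dir, release, "GeoLite2-City.mmdb"), "wb").close()
    link = os.path.join(maxmind_dir, "GeoLite2-City.mmdb")
    repoint_symlink(link, "GeoLite2-City_20180911/GeoLite2-City.mmdb")
    repoint_symlink(link, "GeoLite2-City_20180918/GeoLite2-City.mmdb")
    current = os.readlink(link)
    resolves = os.path.isfile(link)
    print(current)  # GeoLite2-City_20180918/GeoLite2-City.mmdb
```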

[root@cos47 maxmind ]#cd /etc/logstash/conf.d

[root@cos47 conf.d ]#logstash -f file-out.conf -t

[root@cos47 conf.d ]#logstash -f file-out.conf

{

"request" => "/test3.html",

"agent" => "\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"geoip" => {

"ip" => "134.182.57.1",

"latitude" => 37.751,

"country_name" => "United States",

"country_code2" => "US",

"continent_code" => "NA",

"country_code3" => "US",

"location" => {

"lon" => -97.822,

"lat" => 37.751

},

"longitude" => -97.822

},

"auth" => "-",

"ident" => "-",

"verb" => "GET",

"path" => "/var/log/httpd/access_log",

"referrer" => "\"-\"",

"@timestamp" => 2018-09-21T09:39:40.000Z,

"response" => "200",

"bytes" => "7",

"clientip" => "134.182.57.1",

"@version" => "1",

"host" => "cos47.localdomain",

"httpversion" => "1.1"

}

[root@cos47 conf.d ]#cat file-out.conf

input{

file{

path => ["/var/log/httpd/access_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/maxmind/GeoLite2-City.mmdb"

}

}

output {

stdout {

codec => rubydebug

}

}

Splitting and modifying fields (mutate filter)

https://www.elastic.co/guide/en/logstash/5.6/plugins-filters-mutate.html

The mutate filter allows you to perform general mutations on fields. You can rename, remove, replace, and modify fields in your events.

Output plugins

https://www.elastic.co/guide/en/logstash/5.6/index.html

Output plugins

Elasticsearch output plugin

File output plugin

Kafka output plugin

Redis output plugin

...

https://www.elastic.co/guide/en/logstash/5.6/plugins-outputs-elasticsearch.html

[root@cos47 conf.d ]#vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.31.7 node01

192.168.31.17 node02

192.168.31.27 node03

#Change the output section as follows (input and filter stay the same)

[root@cos47 conf.d ]#vim file-out.conf

output {

elasticsearch {

hosts => ["http://node01:9200/","http://node02:9200/","http://node03:9200/"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "httpd_access_logs"

}

}
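Logstash renders %{+YYYY.MM.dd} from the event's @timestamp in UTC, not local time. A Python sketch of that naming (the helper name daily_index is hypothetical) shows the consequence for a +0800 server: requests logged before 08:00 local time land in the previous day's index.

```python
from datetime import datetime, timedelta, timezone


def daily_index(event_time: datetime, prefix: str = "logstash") -> str:
    # The date in the index name comes from @timestamp in UTC, so convert first.
    utc = event_time.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


cst = timezone(timedelta(hours=8))  # the +0800 offset in the httpd log
print(daily_index(datetime(2018, 9, 21, 17, 59, 33, tzinfo=cst)))  # logstash-2018.09.21
print(daily_index(datetime(2018, 9, 22, 7, 30, 0, tzinfo=cst)))    # logstash-2018.09.21
```

One index per day keeps each index small and makes retention simple: old days can be dropped by deleting whole indices.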

[root@cos7 ~ ]#curl http://node01:9200/_cat/indices

green open logstash-2018.09.21 n91hh_W9REiF923QYVh7Zw 5 1 1886 0 2.9mb 1.6mb

green open myindex Da2GRrF0RI2o6x5MLNyiyQ 5 1 1 0 9.7kb 4.8kb

[root@cos47 html ]#tail -f /var/log/httpd/access_log

27.118.110.1 - - [21/Sep/2018:17:59:33 +0800] "GET /test8.html HTTP/1.1" 200 7 "-" "curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2"

[root@cos7 ~ ]#curl -s 'http://node01:9200/logstash-*/_search?q=clientip:149.150.15.1' | jq .

{

"took": 7,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 1,

"max_score": 6.0606794,

"hits": [

{

"_index": "logstash-2018.09.21",

"_type": "httpd_access_logs",

"_id": "AWX7lMjHyF1gIEU9hupG",

"_score": 6.0606794,

"_source": {

"request": "/test2.html",

"agent": "\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\"",

"geoip": {

"timezone": "America/New_York",

"ip": "149.150.15.1",

"latitude": 40.749,

"continent_code": "NA",

"city_name": "South Orange",

"country_name": "United States",

"country_code2": "US",

"dma_code": 501,

"country_code3": "US",

"region_name": "New Jersey",

"location": {

"lon": -74.2639,

"lat": 40.749

},

"postal_code": "07079",

"region_code": "NJ",

"longitude": -74.2639

},

"auth": "-",

"ident": "-",

"verb": "GET",

"path": "/var/log/httpd/access_log",

"referrer": "\"-\"",

"@timestamp": "2018-09-21T10:02:35.000Z",

"response": "200",

"bytes": "7",

"clientip": "149.150.15.1",

"@version": "1",

"host": "cos47.localdomain",

"httpversion": "1.1"

}

}

]

}

}

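Input from Filebeat

To recap the setup so far:

node01, node02, and node03 run Elasticsearch and were used to demonstrate the Elasticsearch service.

cos47 runs httpd and Logstash (Logstash is written in Java, so it needs a JDK). It demonstrated Logstash as an ETL tool: extract (the input {} section, e.g. stdin {} or file {}), transform (filter {}), and load (output {}).

So far Logstash read the httpd log on cos47 directly. In practice, Filebeat from the Beats family should collect the web server's logs and feed them to Logstash's input. For this lab, Filebeat is also installed on cos47: Filebeat supplies the data for Logstash's input, and Logstash converts its format.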

[root@cos47 ~ ]#rpm -ivh filebeat-5.6.8-x86_64.rpm

[root@cos47 ~ ]#rpm -ql filebeat

/etc/filebeat/filebeat.full.yml

/etc/filebeat/filebeat.template-es2x.json

/etc/filebeat/filebeat.template-es6x.json

/etc/filebeat/filebeat.template.json

/etc/filebeat/filebeat.yml

/usr/share/filebeat/bin/filebeat

[root@cos47 filebeat ]#vim filebeat.yml

filebeat.prospectors:

- input_type: log

  paths:

    - /var/log/httpd/access_log

#-------------------------- Elasticsearch output ------------------------------

#output.elasticsearch:

  # Array of hosts to connect to.

  #hosts: ["localhost:9200"]

#----------------------------- Logstash output --------------------------------

output.logstash:

  # The Logstash hosts

  hosts: ["192.168.31.47:5044"]

https://www.elastic.co/guide/en/logstash/current/plugins-inputs-beats.html

[root@cos47 conf.d ]#pwd

/etc/logstash/conf.d

Change the input to the beats plugin, listening on the port Filebeat sends to (5044):

[root@cos47 conf.d ]#vim file-out.conf

input{

beats {

port => 5044

}

}

filter {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => "message"

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/maxmind/GeoLite2-City.mmdb"

}

}

output {

elasticsearch {

hosts => ["http://node01:9200/","http://node02:9200/","http://node03:9200/"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "httpd_access_logs"

}

}

[root@cos47 conf.d ]#logstash -f file-out.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

[INFO ] 2018-09-21 18:35:30.813 [[main]<beats] Server - Starting server on port: 5044

[root@cos47 html ]#ss -ntl

#listening on port 5044

LISTEN 0 128 :::5044

[root@cos47 ~ ]#systemctl start filebeat

[root@cos47 ~ ]#ps aux

root 9306 0.5 0.9 23904 15172 ? Ssl 18:45 0:00 /usr/share/filebeat/bin/filebeat -c /etc/f

[root@cos7 ~ ]#curl -s 'http://node01:9200/logstash-*/_search?q=response:404' | jq .

**** (output truncated) ****

],

"referrer": "\"-\"",

"@timestamp": "2018-09-21T08:10:10.000Z",

"response": "404",

"bytes": "208",

"clientip": "132.156.12.1",

"@version": "1",

"host": "cos47.localdomain",

"httpversion": "1.1"

}

}

]

}

}

Adding Redis as a buffer between Filebeat and Logstash

[root@cos47 ~ ]#systemctl stop filebeat

[root@cos47 ~ ]#yum install redis

[root@cos47 ~ ]#vim /etc/redis.conf

bind 0.0.0.0

requirepass dhy.com

[root@cos47 ~ ]#systemctl start redis

[root@cos47 ~ ]#ss -ntl

#Redis listening on port 6379

[root@cos47 ~ ]#vim /etc/filebeat/filebeat.full.yml

[root@cos47 ~ ]#vim /etc/filebeat/filebeat.yml

#------------------------------- Redis output ----------------------------------

output.redis:

  enabled: true

  hosts: ["192.168.31.47:6379"]

  port: 6379

  key: filebeat

  db: 0

  password: dhy.com

  datatype: list

Comment out the output.logstash section.

[root@cos47 ~ ]#systemctl restart filebeat

[root@cos47 filebeat ]#tail /var/log/filebeat/filebeat

2018-09-22T19:41:09+08:00 INFO Loading registrar data from /var/lib/filebeat/registry

2018-09-22T19:41:09+08:00 INFO States Loaded from registrar: 1

2018-09-22T19:41:09+08:00 INFO Loading Prospectors: 1

2018-09-22T19:41:09+08:00 INFO Starting Registrar

2018-09-22T19:41:09+08:00 INFO Start sending events to output

2018-09-22T19:41:09+08:00 INFO Prospector with previous states loaded: 1

2018-09-22T19:41:09+08:00 INFO Starting prospector of type: log; id: 14835892602573179500

2018-09-22T19:41:09+08:00 INFO Loading and starting Prospectors completed. Enabled prospectors: 1

2018-09-22T19:41:09+08:00 INFO Starting spooler: spool_size: 2048; idle_timeout: 5s

2018-09-22T19:41:09+08:00 INFO Harvester started for file: /var/log/httpd/access_log

[root@cos47 filebeat ]#redis-cli -a dhy.com

127.0.0.1:6379> KEYS *

1) "filebeat"

127.0.0.1:6379> LINDEX filebeat 0

"{\"@timestamp\":\"2018-09-22T11:41:09.956Z\",\"beat\":{\"hostname\":\"cos47.localdomain\",\"name\":\"cos47.localdomain\",\"version\":\"5.6.8\"},\"input_type\":\"log\",\"message\":\"48.59.26.1 - - [22/Sep/2018:19:34:14 +0800] \\\"GET /test12.html HTTP/1.1\\\" 200 8 \\\"-\\\" \\\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\\\"\",\"offset\":3713292,\"source\":\"/var/log/httpd/access_log\",\"type\":\"log\"}"

https://www.elastic.co/guide/en/logstash/5.6/plugins-inputs-redis.html

#Change the input plugin to redis (filter and output stay the same)

[root@cos47 conf.d ]#vim file-out.conf

input{

redis {

host => "192.168.31.47"

port => 6379

password => "dhy.com"

db => 0

key => "filebeat"

data_type => "list"

}

}

[root@cos47 conf.d ]#logstash -f file-out.conf -t

[root@cos47 filebeat ]#redis-cli -a dhy.com

127.0.0.1:6379> LLEN filebeat

(integer) 1694

127.0.0.1:6379> LLEN filebeat

(integer) 1773

[root@cos47 conf.d ]#logstash -f file-out.conf

WARNING: Could not find logstash.yml which is typically located in $LS_HOME/config or /etc/logstash. You can specify the path using --path.settings. Continuing using the defaults

Could not find log4j2 configuration at path /usr/share/logstash/config/log4j2.properties. Using default config which logs errors to the console

#the process stays in the foreground here

[root@cos47 filebeat ]#redis-cli -a dhy.com

127.0.0.1:6379> LLEN filebeat

(integer) 0

#the list has been drained: Logstash consumed its entries

https://www.elastic.co/guide/en/logstash/5.6/event-dependent-configuration.html

if EXPRESSION {

…

} else if EXPRESSION {

…

} else {

…

}

https://discuss.elastic.co/t/how-to-tag-log-files-in-filebeat-for-logstash-ingestion/44713/3

[root@cos47 ~ ]#vim /etc/filebeat/filebeat.yml

# Each - is a prospector. Most options can be set at the prospector level

- input_type: log

  # Paths that should be crawled and fetched. Glob based paths.

  paths:

    - /var/log/httpd/access_log

  fields:

    log_type: access

- paths:

    - /var/log/httpd/error_log

  fields:

    log_type: errors

[root@cos47 filebeat ]#redis-cli -a dhy.com

127.0.0.1:6379> LLEN filebeat

(integer) 4313

127.0.0.1:6379> LINDEX filebeat 4313

"{\"@timestamp\":\"2018-09-22T13:21:15.457Z\",\"beat\":{\"hostname\":\"cos47.localdomain\",\"name\":\"cos47.localdomain\",\"version\":\"5.6.8\"},\"fields\":{\"log_type\":\"access\"},\"input_type\":\"log\",\"message\":\"5.99.56.1 - - [22/Sep/2018:21:21:14 +0800] \\\"GET /test13.html HTTP/1.1\\\" 200 8 \\\"-\\\" \\\"curl/7.19.7 (x86_64-redhat-linux-gnu) libcurl/7.19.7 NSS/3.21 Basic ECC zlib/1.2.3 libidn/1.18 libssh2/1.4.2\\\"\",\"offset\":5445561,\"source\":\"/var/log/httpd/access_log\",\"type\":\"log\"}"

Note the new field in the event: "fields":{"log_type":"access"}

[root@cos47 filebeat ]#systemctl restart filebeat

[root@cos47 conf.d ]#vim file-out.conf

input{

redis {

host => "192.168.31.47"

port => 6379

password => "dhy.com"

db => 0

key => "filebeat"

data_type => "list"

}

}

filter {

if [fields][log_type] == "access" {

grok {

match => { "message" => "%{HTTPD_COMBINEDLOG}" }

remove_field => ["message","beat"]

}

date {

match => ["timestamp","dd/MMM/YYYY:H:m:s Z"]

remove_field => "timestamp"

}

geoip {

source => "clientip"

target => "geoip"

database => "/etc/logstash/maxmind/GeoLite2-City.mmdb"

}

}

}

output {

if [fields][log_type] == "access" {

elasticsearch {

hosts => ["http://node01:9200/","http://node02:9200/","http://node03:9200/"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "httpd_access_logs"

}

} else {

elasticsearch {

hosts => ["http://node01:9200/","http://node02:9200/","http://node03:9200/"]

index => "logstash-%{+YYYY.MM.dd}"

document_type => "httpd_error_logs"

}

}

}
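The if/else above routes on a nested field reference. A Python sketch of the same decision (the function names are hypothetical; Logstash evaluates conditionals itself): [fields][log_type] is resolved step by step through nested hashes, and events without that value fall through to the else branch, just like the error-log events.

```python
import re
from typing import Any


def get_field(event: dict, reference: str) -> Any:
    # Resolve a Logstash-style field reference such as "[fields][log_type]".
    value: Any = event
    for name in re.findall(r"\[([^\]]+)\]", reference):
        if not isinstance(value, dict) or name not in value:
            return None
        value = value[name]
    return value


def document_type_for(event: dict) -> str:
    # Mirrors the output section: access logs versus everything else.
    if get_field(event, "[fields][log_type]") == "access":
        return "httpd_access_logs"
    return "httpd_error_logs"


access_event = {"fields": {"log_type": "access"}, "message": "GET /test1.html"}
error_event = {"fields": {"log_type": "errors"}, "message": "File does not exist"}
print(document_type_for(access_event), document_type_for(error_event))
# httpd_access_logs httpd_error_logs
```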

[root@cos47 conf.d ]#logstash -f file-out.conf -t

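#the service loads every *.conf under /etc/logstash/conf.d into a single pipeline, so move stdin-out.conf out of that directory first

[root@cos47 conf.d ]#systemctl start logstash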

[root@cos47 conf.d ]#systemctl status logstash

[root@cos47 conf.d ]#ps aux

logstash 3651 31.3 27.4 3757668 419288 ? SNsl 20:32 0:21 /usr/bin/java -XX:+UseParNewGC -XX:+UseCon

Kibana

[root@cos47 ~ ]#rpm -ivh kibana-5.6.8-x86_64.rpm

[root@cos47 ~ ]#vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "0.0.0.0" #listen on all local addresses

server.name: "node04"

elasticsearch.url: "http://192.168.31.17:9200"

elasticsearch.preserveHost: true

kibana.index: ".kibana"

[root@cos47 ~ ]#systemctl start kibana

[root@cos47 ~ ]#ss -ntl

LISTEN 0 128 *:5601

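As shown in the figure.

Example filter queries in the Kibana web UI:

response:200 OR response:404

agent:curl

References:

Elasticsearch master election process https://www.easyice.cn/archives/164

Basic principles and usage of Elasticsearch https://www.cnblogs.com/luxiaoxun/p/4869509.html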