Chapter 2 Hadoop Quick Start

2.4 Running Hadoop on a Single Node

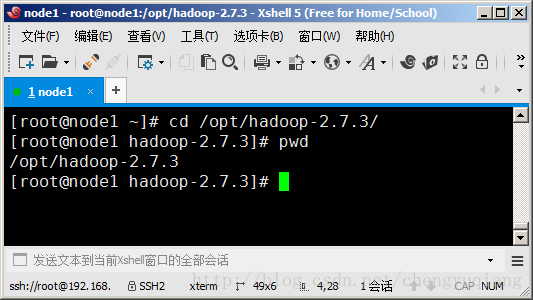

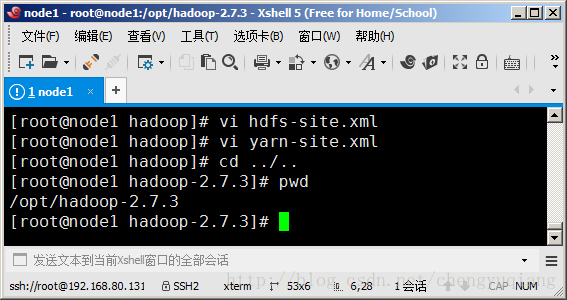

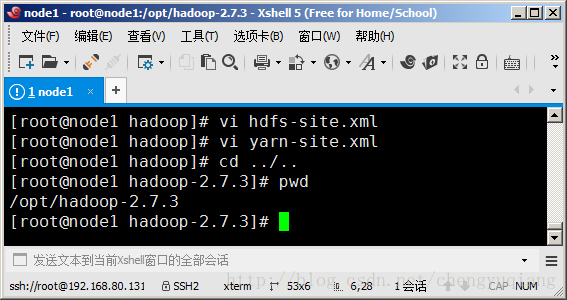

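Continuing from the previous section, first switch to the Hadoop root directory.

Alternatively, run cd /opt/hadoop-2.7.3 to enter the Hadoop root directory.

The pwd command shows the current directory:

    [root@node1 hadoop-2.7.3]# pwd

Note: all commands in this section are run from the /opt/hadoop-2.7.3 directory.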
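Every later command uses a relative path such as bin/hadoop or sbin/start-dfs.sh, so a wrong working directory is an easy mistake to make. The sketch below is one possible guard and is not part of Hadoop: `is_hadoop_root` is a hypothetical helper name, and it only assumes that the scripts used in this section exist under the root directory.

```shell
# Return 0 if the given directory (default: the current directory) looks
# like a Hadoop root, i.e. it contains the scripts used in this section.
# is_hadoop_root is a hypothetical helper, not a Hadoop command.
is_hadoop_root() {
    dir="${1:-.}"
    [ -x "$dir/bin/hadoop" ] &&
        [ -f "$dir/sbin/start-dfs.sh" ] &&
        [ -f "$dir/sbin/start-yarn.sh" ]
}

# Example (run manually):
#   cd /opt/hadoop-2.7.3 && is_hadoop_root && echo "in Hadoop root"
```

Checking for the scripts rather than comparing the path string keeps the guard usable if Hadoop is unpacked somewhere other than /opt.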

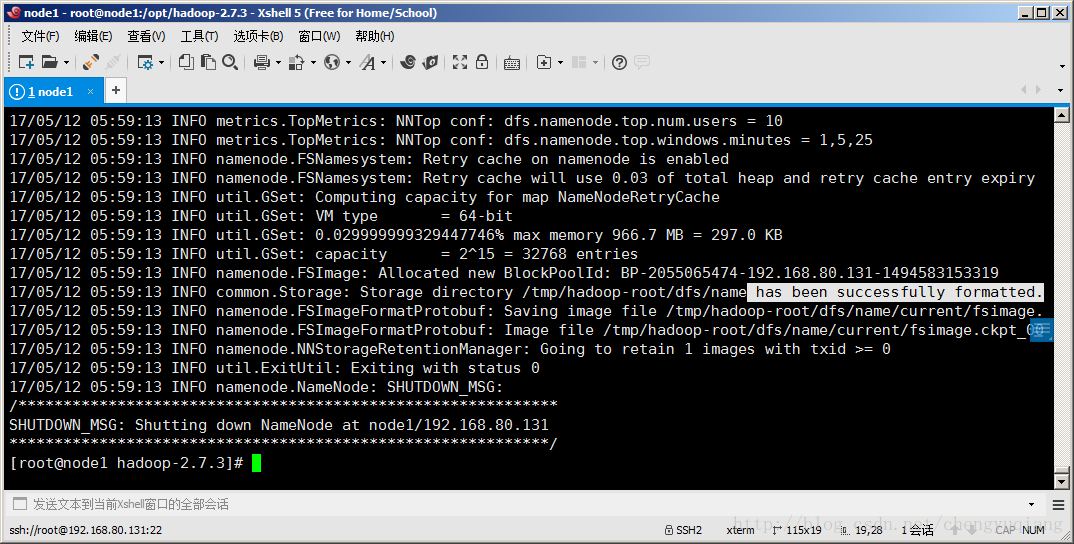

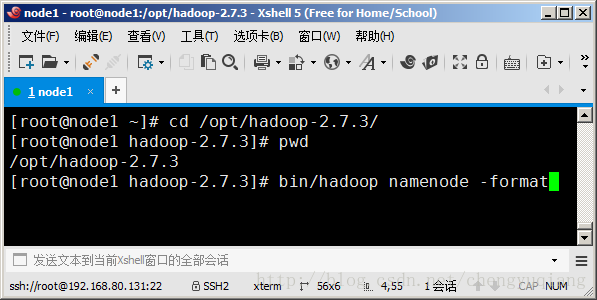

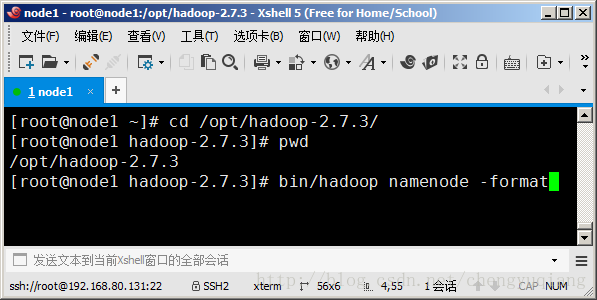

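2.4.1 Formatting the NameNode

Run bin/hadoop namenode -format to format the NameNode. (In Hadoop 2.x this form still works but is deprecated; bin/hdfs namenode -format is the preferred equivalent.)

    [root@node1 hadoop-2.7.3]# bin/hadoop namenode -format

Output (second half):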

    17/05/12 05:59:11 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
    17/05/12 05:59:11 INFO namenode.NameNode: createNameNode [-format]
    Formatting using clusterid: CID-db9a34c9-661e-4fc0-a273-b554e0cfb32b
    17/05/12 05:59:12 INFO namenode.FSNamesystem: No KeyProvider found.
    17/05/12 05:59:12 INFO namenode.FSNamesystem: fsLock is fair:true
    17/05/12 05:59:12 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
    17/05/12 05:59:12 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: The block deletion will start around 2017 May 12 05:59:12
    17/05/12 05:59:12 INFO util.GSet: Computing capacity for map BlocksMap
    17/05/12 05:59:12 INFO util.GSet: VM type = 64-bit
    17/05/12 05:59:12 INFO util.GSet: 2.0% max memory 966.7 MB = 19.3 MB
    17/05/12 05:59:12 INFO util.GSet: capacity = 2^21 = 2097152 entries
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: defaultReplication = 1
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: maxReplication = 512
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: minReplication = 1
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: encryptDataTransfer = false
    17/05/12 05:59:12 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
    17/05/12 05:59:12 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
    17/05/12 05:59:12 INFO namenode.FSNamesystem: supergroup = supergroup
    17/05/12 05:59:12 INFO namenode.FSNamesystem: isPermissionEnabled = true
    17/05/12 05:59:12 INFO namenode.FSNamesystem: HA Enabled: false
    17/05/12 05:59:12 INFO namenode.FSNamesystem: Append Enabled: true
    17/05/12 05:59:13 INFO util.GSet: Computing capacity for map INodeMap
    17/05/12 05:59:13 INFO util.GSet: VM type = 64-bit
    17/05/12 05:59:13 INFO util.GSet: 1.0% max memory 966.7 MB = 9.7 MB
    17/05/12 05:59:13 INFO util.GSet: capacity = 2^20 = 1048576 entries
    17/05/12 05:59:13 INFO namenode.FSDirectory: ACLs enabled? false
    17/05/12 05:59:13 INFO namenode.FSDirectory: XAttrs enabled? true
    17/05/12 05:59:13 INFO namenode.FSDirectory: Maximum size of an xattr: 16384
    17/05/12 05:59:13 INFO namenode.NameNode: Caching file names occuring more than 10 times
    17/05/12 05:59:13 INFO util.GSet: Computing capacity for map cachedBlocks
    17/05/12 05:59:13 INFO util.GSet: VM type = 64-bit
    17/05/12 05:59:13 INFO util.GSet: 0.25% max memory 966.7 MB = 2.4 MB
    17/05/12 05:59:13 INFO util.GSet: capacity = 2^18 = 262144 entries
    17/05/12 05:59:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
    17/05/12 05:59:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
    17/05/12 05:59:13 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
    17/05/12 05:59:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
    17/05/12 05:59:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
    17/05/12 05:59:13 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
    17/05/12 05:59:13 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
    17/05/12 05:59:13 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
    17/05/12 05:59:13 INFO util.GSet: Computing capacity for map NameNodeRetryCache
    17/05/12 05:59:13 INFO util.GSet: VM type = 64-bit
    17/05/12 05:59:13 INFO util.GSet: 0.029999999329447746% max memory 966.7 MB = 297.0 KB
    17/05/12 05:59:13 INFO util.GSet: capacity = 2^15 = 32768 entries
    17/05/12 05:59:13 INFO namenode.FSImage: Allocated new BlockPoolId: BP-2055065474-192.168.80.131-1494583153319
    17/05/12 05:59:13 INFO common.Storage: Storage directory /tmp/hadoop-root/dfs/name has been successfully formatted.
    17/05/12 05:59:13 INFO namenode.FSImageFormatProtobuf: Saving image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression
    17/05/12 05:59:13 INFO namenode.FSImageFormatProtobuf: Image file /tmp/hadoop-root/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 351 bytes saved in 0 seconds.
    17/05/12 05:59:13 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
    17/05/12 05:59:13 INFO util.ExitUtil: Exiting with status 0
    17/05/12 05:59:13 INFO namenode.NameNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.80.131
    ************************************************************/

The output contains "has been successfully formatted", which indicates that the NameNode was formatted successfully.
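Scanning sixty log lines by eye is error-prone, so the success marker can also be checked mechanically. The following is a minimal sketch: `format_succeeded` is a hypothetical helper name, and format.log is an assumed file name used only for illustration.

```shell
# Succeed if the text on stdin contains the success marker that the
# format step prints. format_succeeded is a hypothetical helper.
format_succeeded() {
    grep -q "has been successfully formatted"
}

# Example (run manually from the Hadoop root; format.log is an assumed name):
#   bin/hadoop namenode -format 2>&1 | tee format.log
#   format_succeeded < format.log && echo "format OK"
```

The example redirects stderr into the pipe with 2>&1 so that the marker is captured whichever stream the log messages are written to.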

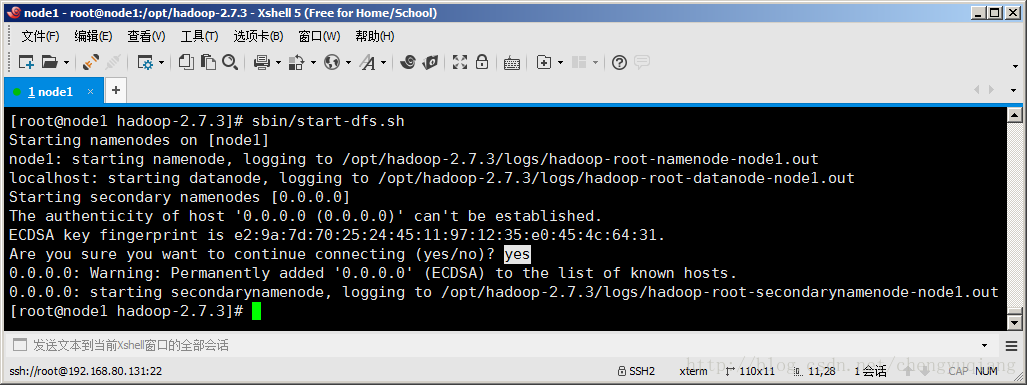

2.4.2 Starting HDFS

Run sbin/start-dfs.sh to start HDFS:

    [root@node1 hadoop-2.7.3]# sbin/start-dfs.sh
    Starting namenodes on [node1]
    node1: starting namenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-namenode-node1.out
    localhost: starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-node1.out
    Starting secondary namenodes [0.0.0.0]
    The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.
    ECDSA key fingerprint is e2:9a:7d:70:25:24:45:11:97:12:35:e0:45:4c:64:31.
    Are you sure you want to continue connecting (yes/no)? yes
    0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.
    0.0.0.0: starting secondarynamenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-secondarynamenode-node1.out

While HDFS is starting, SSH asks you to confirm the host key for 0.0.0.0; type "yes" at the prompt.

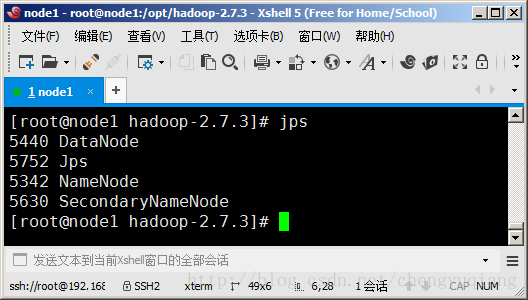

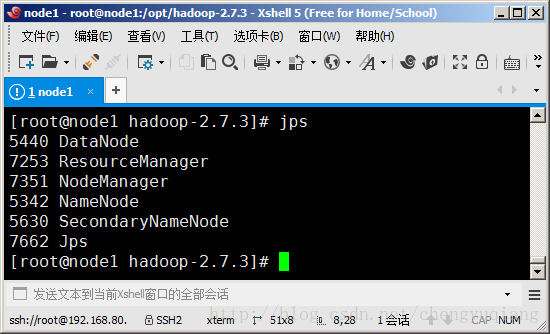

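Use the jps command to list the Java processes:

    [root@node1 hadoop-2.7.3]# jps

jps (Java Virtual Machine Process Status Tool) is a command, available since JDK 1.5, that lists the PIDs of the current Java processes. It is simple and practical, and well suited to a quick look at the Java processes on Linux/Unix. jps -l prints the full package name of the application's main class, or the full path to the application's JAR file.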

    [root@node1 ~]# jps -l
    5752 sun.tools.jps.Jps
    5342 org.apache.hadoop.hdfs.server.namenode.NameNode
    5440 org.apache.hadoop.hdfs.server.datanode.DataNode
    5630 org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode
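The jps listing can be checked against the daemons that start-dfs.sh is expected to launch (NameNode, DataNode, SecondaryNameNode). The sketch below shows one way to do that; `missing_daemons` is a hypothetical helper name, and it assumes the "<pid> <name>" line format shown above, with or without the -l option.

```shell
# Print every expected daemon that is absent from the jps output on stdin.
# Returns non-zero if at least one is missing.
# missing_daemons is a hypothetical helper, not part of the JDK or Hadoop.
missing_daemons() {
    jps_output="$(cat)"
    status=0
    for daemon in "$@"; do
        # Match the daemon as a whole name at the end of a line: preceded by
        # a space (plain jps) or a dot (fully qualified name from jps -l).
        # This keeps "NameNode" from matching "SecondaryNameNode".
        if ! printf '%s\n' "$jps_output" | grep -Eq "(^|[ .])$daemon\$"; then
            echo "$daemon"
            status=1
        fi
    done
    return "$status"
}

# Example (run manually after sbin/start-dfs.sh):
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

If the function prints a name, the corresponding .out file under /opt/hadoop-2.7.3/logs, as reported by the start script, is the natural place to look next.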

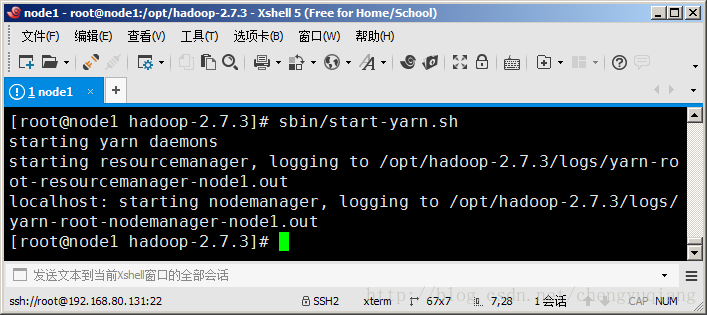

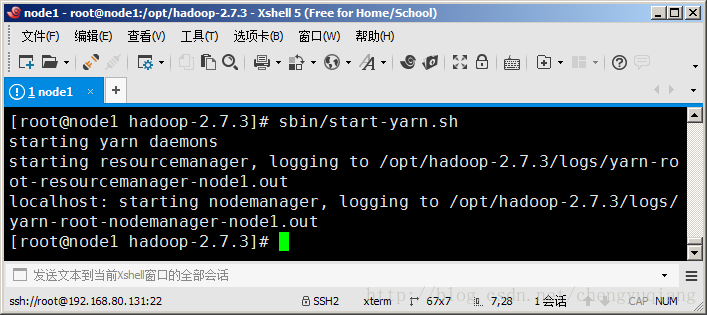

2.4.3 Starting YARN

Run sbin/start-yarn.sh to start YARN:

    [root@node1 hadoop-2.7.3]# sbin/start-yarn.sh
    starting yarn daemons
    starting resourcemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-resourcemanager-node1.out
    localhost: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-node1.out

Then use jps to check the YARN processes:

    [root@node1 hadoop-2.7.3]# jps
    5440 DataNode
    7253 ResourceManager
    7351 NodeManager
    5342 NameNode
    5630 SecondaryNameNode
    7662 Jps
    [root@node1 hadoop-2.7.3]#

Two new processes now appear: ResourceManager and NodeManager.

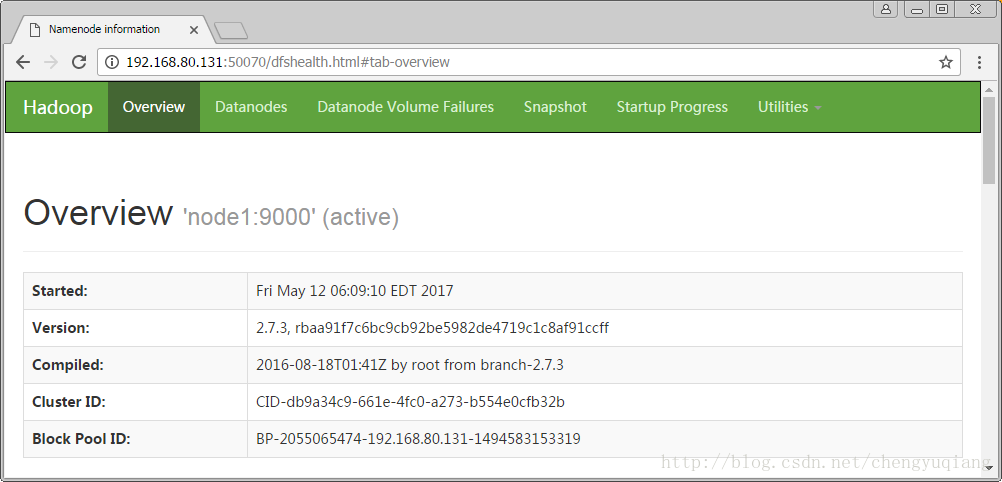

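2.4.4 HDFS Web UI

In Hadoop 2.x, the default port of the HDFS web UI is 50070.

The hosts file on the Windows host machine has no entry for the virtual machine, so the HDFS web UI must be reached by IP address. Open the following URL in a browser: http://192.168.80.131:50070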

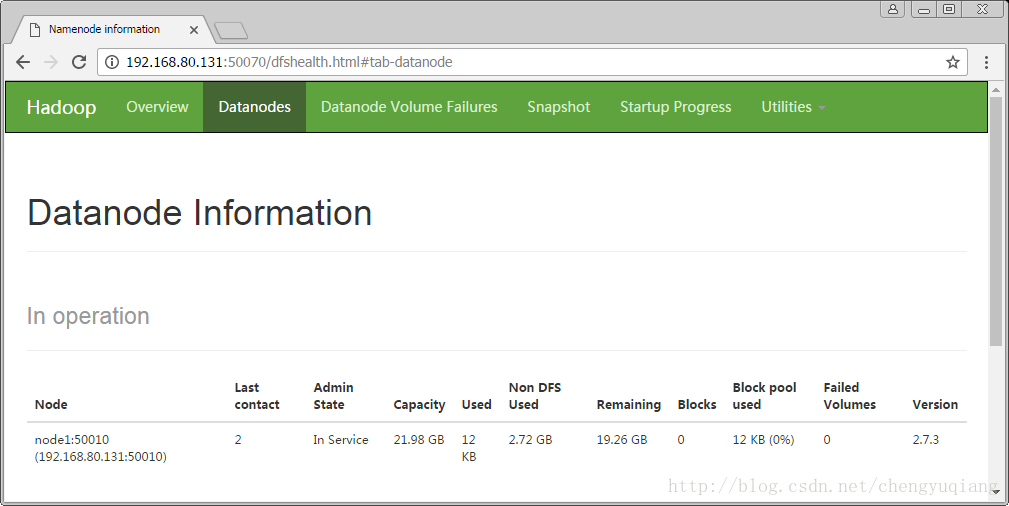

Click "Datanodes" in the navigation bar at the top of the page.

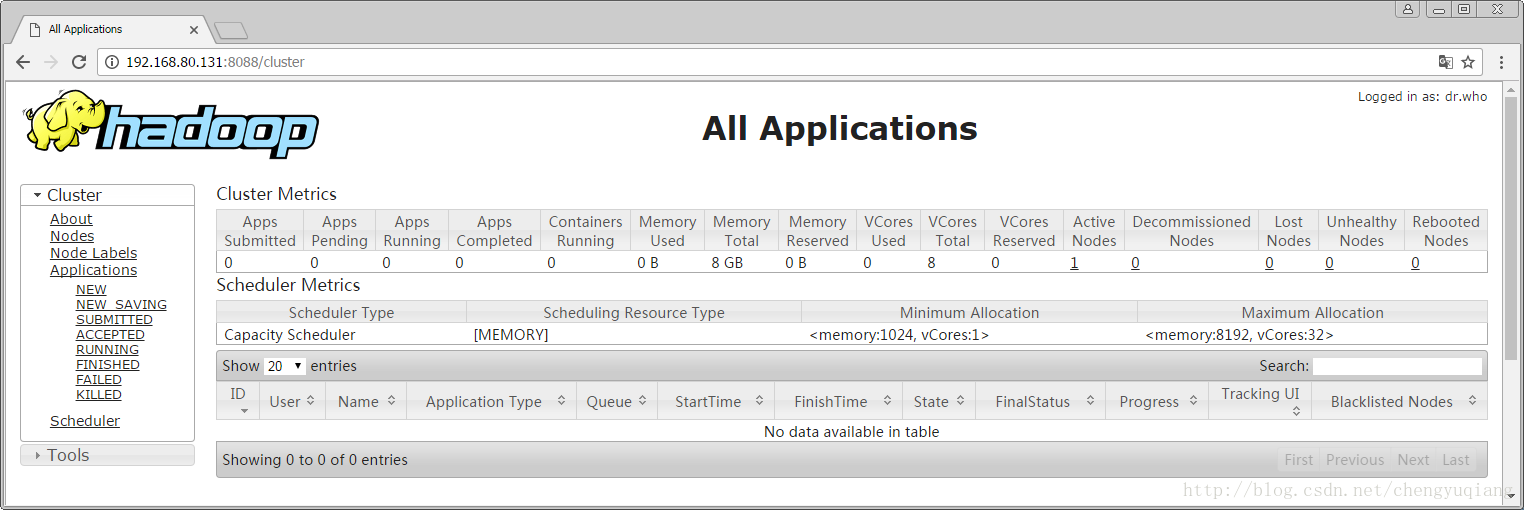

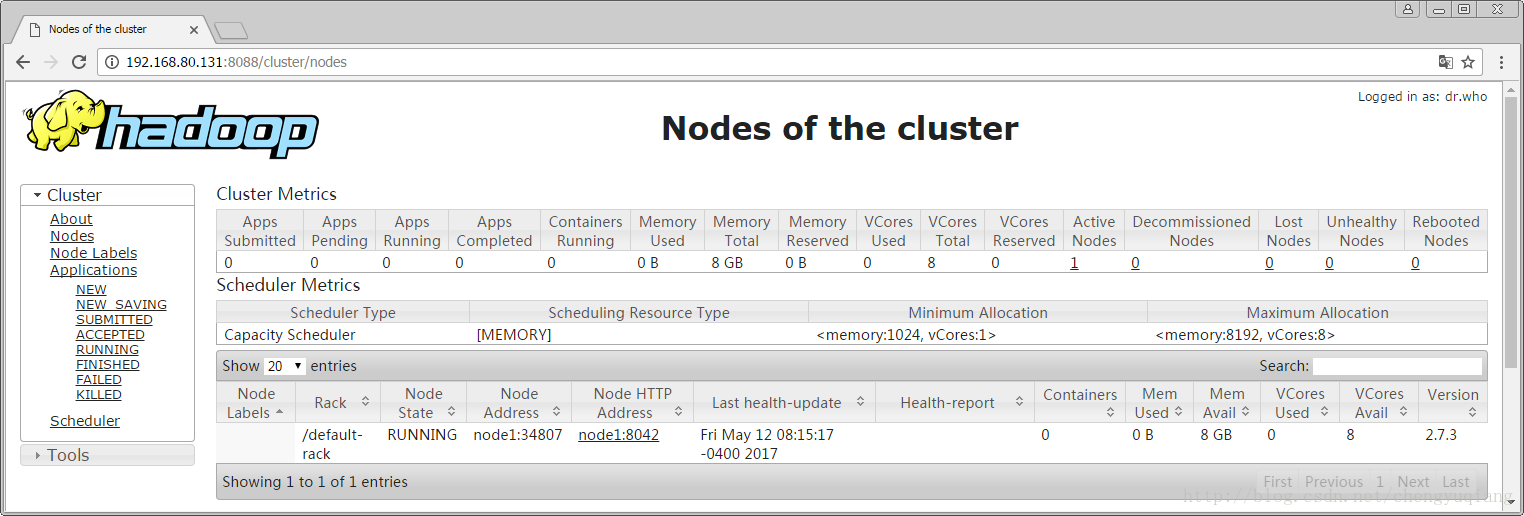

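2.4.5 YARN Web UI

The default port of the YARN web UI is 8088. Open the following URL in a browser:

http://192.168.80.131:8088

Click "Nodes" in the menu on the left to view the NodeManager information.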
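Both web interfaces can also be probed from a command line, which helps separate a browser problem from a daemon that is not listening. The following is a rough sketch that assumes curl is installed on the machine running it; `web_ui_url` and `http_status` are hypothetical helper names, and the address and ports are the ones used in this section.

```shell
# Build the URL of a web UI from a host and a port.
# web_ui_url and http_status are hypothetical helpers.
web_ui_url() {
    printf 'http://%s:%s\n' "$1" "$2"
}

# Request a URL with curl and print only the HTTP status code.
# Gives up after 5 seconds so an unreachable host does not hang the shell.
http_status() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# Example (run manually from a machine that can reach the virtual machine):
#   http_status "$(web_ui_url 192.168.80.131 50070)"   # HDFS web UI
#   http_status "$(web_ui_url 192.168.80.131 8088)"    # YARN web UI
```

Keeping URL construction separate from the request makes it easy to reuse the same check if the virtual machine's address changes.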
