requests库

requests 是用python编写,基于 urllib,采用 Apache2 Licensed 开源协议的一个很实用的Python HTTP客户端库。

它比 urllib 更加方便,可以节约我们大量的工作,完全满足 HTTP 测试需求。Requests 的哲学是以 PEP 20 的习语为中心开发的,所以它比 urllib 更好用。更重要的一点是它支持 Python3 哦!

urllib

pyhton3中把urllib2里面的方法封装到urllib.request

urllib:https://docs.python.org/3/library/urllib.html

HTTP常见的状态码有哪些:

404:页面找不到

403:拒绝访问

200:成功访问

2xxx:成功

3xxx:重定向

4xxx:客户端的问题

5xxx:服务端的问题

所有状态码:

-

消息

▪ 100 Continue

▪ 101 Switching Protocols

▪ 102 Processing -

成功

▪ 200 OK

▪ 201 Created

▪ 202 Accepted

▪ 203 Non-Authoritative Information

▪ 204 No Content

▪ 205 Reset Content

▪ 206 Partial Content

▪ 207 Multi-Status -

重定向

▪ 300 Multiple Choices

▪ 301 Moved Permanently

▪ 302 Move temporarily

▪ 303 See Other

▪ 304 Not Modified

▪ 305 Use Proxy

▪ 306 Switch Proxy

▪ 307 Temporary Redirect -

请求错误

▪ 400 Bad Request

▪ 401 Unauthorized

▪ 402 Payment Required

▪ 403 Forbidden

▪ 404 Not Found

▪ 405 Method Not Allowed

▪ 406 Not Acceptable

▪ 407 Proxy Authentication Required

▪ 408 Request Timeout

▪ 409 Conflict

▪ 410 Gone

▪ 411 Length Required

▪ 412 Precondition Failed

▪ 413 Request Entity Too Large

▪ 414 Request-URI Too Long

▪ 415 Unsupported Media Type

▪ 416 Requested Range Not Satisfiable

▪ 417 Expectation Failed

▪ 421 too many connections

▪ 422 Unprocessable Entity

▪ 423 Locked

▪ 424 Failed Dependency

▪ 425 Unordered Collection

▪ 426 Upgrade Required

▪ 449 Retry With

▪ 451Unavailable For Legal Reasons -

服务器错误

▪ 500 Internal Server Error

▪ 501 Not Implemented

▪ 502 Bad Gateway

▪ 503 Service Unavailable

▪ 504 Gateway Timeout

▪ 505 HTTP Version Not Supported(http/1.1)

▪ 506 Variant Also Negotiates

▪ 507 Insufficient Storage

▪ 509 Bandwidth Limit Exceeded

▪ 510 Not Extended

▪ 600 Unparseable Response Headers

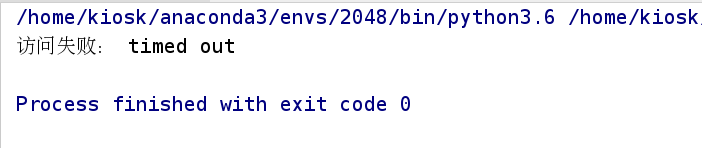

urllib模块里面的异常:

代码:

from urllib import request

from urllib import error

try:

url = 'http://www.baidu.com/hello.html'

response = request.urlopen(url, timeout=0.01)

except error.HTTPError as e:

print(e.code, e.headers, e.reason)

except error.URLError as e:

print('访问失败:',e.reason)

else:

content = response.read().decode('utf-8')

print(content[:5])

运行结果:

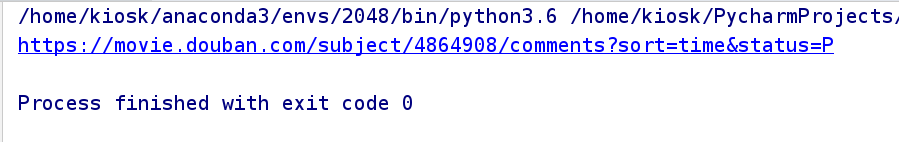

url解析模块

urlencode模块:用于编码

urlparse模块:拥有解码

对url地址进行编码:https://movie.douban.com/subject/4864908/comments?sort=time&status=P

代码:

from urllib.parse import urlencode

data = urlencode({

'sort': 'time',

'status': 'P'

})

doubanUrl = 'https://movie.douban.com/subject/4864908/comments?' + data

print(doubanUrl)

运行结果:

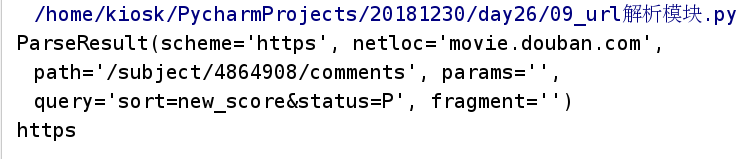

对url地址进行解码:https://movie.douban.com/subject/4864908/comments?sort=new_score&status=P

代码:

from urllib.parse import urlparse

doubanUrl = 'https://movie.douban.com/subject/4864908/comments?sort=new_score&status=P'

info = urlparse(doubanUrl)

print(info)

print(info.scheme)

运行结果:

requests

爬取页面信息

爬取京东商品页面信息内容

京东商品页面url:https://item.jd.com/6789689.html

import requests

from urllib.error import HTTPError

def get_content(url):

try:

response = requests.get(url)

response.raise_for_status() # 如果状态码不是200, 引发HttpError异常

# 从内容分析出响应内容的编码格式

response.encoding = response.apparent_encoding # 根据响应信息判断网页的编码格式,便于response.text知道如何解码

except HTTPError as e:

print(e)

else:

# return response.text # 返回的是字符串类型

return response.content # 返回的是bytes类型,不进行解码

if __name__ == '__main__':

url = 'https://item.jd.com/6789689.html'

content = get_content(url)

with open('doc/jingdong.html', 'wb') as f:

f.write(content)

将获取到的商品信息写入html文件jingdong.html;

将文件jingdong.html用浏览器打开则为京东商品信息页面。

提交数据到服务器

Http常见的请求方法:

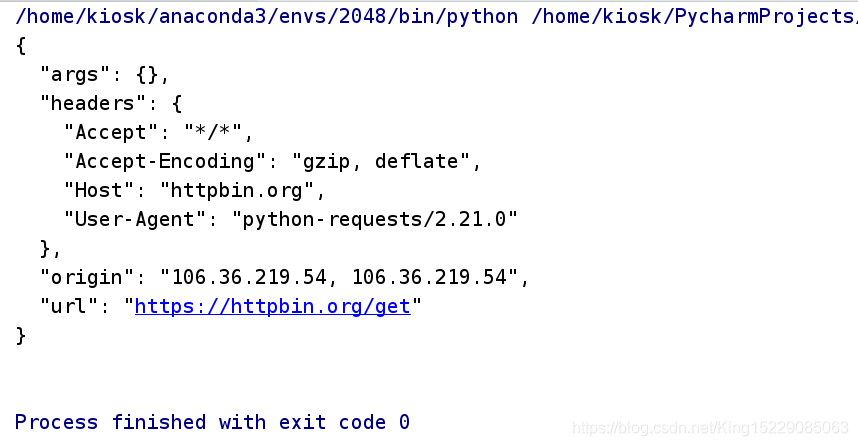

- get

import requests

response = requests.get('https://httpbin.org/get')

print(response.text)

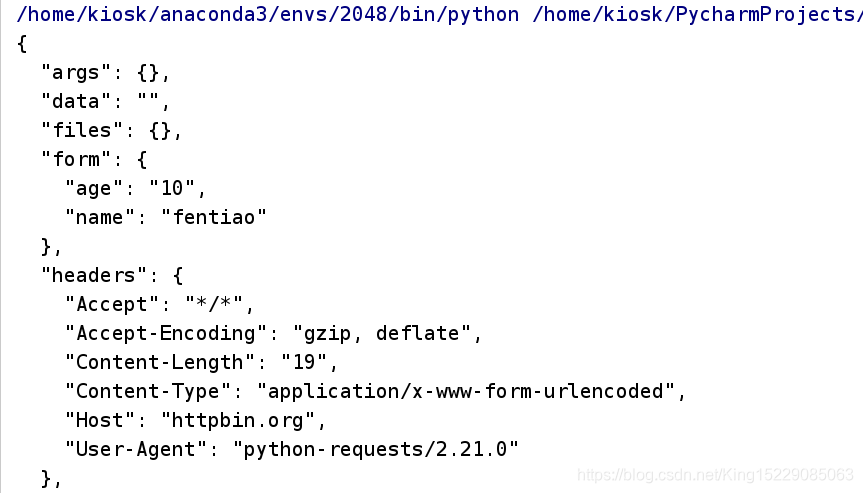

- post

import requests

response = requests.post('https://httpbin.org/post',data={'name':'fentiao','age':10})

print(response.text)

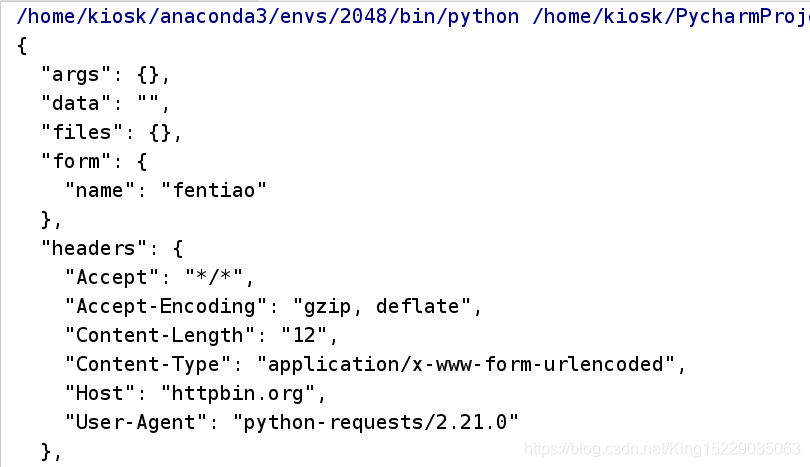

delete方法:

import requests

response = requests.delete('https://httpbin.org/delete',data={'name':'fentiao'})

print(response.text)

带参数的get请求方法:

get请求页面:https://movie.douban.com/subject/4864908/comments?start=20&limit=20&sort=time&status=P

url = 'https://movie.douban.com/subject/4864908/comments'

data = {

'start':20,

'limit':20,

'sort':'time',

'status':'P'

}

response = requests.get(url,params=data)

print(response.text)

print(response.url)

百度/360搜索的关键字提交

代码:

import requests

def keyword_post(url, data):

try:

user_agent = "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.109 Safari/537.36"

response = requests.get(url, params=data, headers={'User-Agent': user_agent})

response.raise_for_status() # 如果返回的状态码不是200, 则抛出异常;

response.encoding = response.apparent_encoding # 判断网页的编码格式,便于respons.text知道如何解码;

except Exception as e:

print("爬取错误")

else:

print(response.url)

print("爬取成功!")

# response.content:返回的是bytes类型,比如:下载图片,视频

# response.text:返回的是str类型,默认情况会将bytes类型转成str类型

return response.content

def search_baidu():

url = "https://www.baidu.com"

keyword = input("请输入搜索的关键字:")

# wd是百度需要的关键词

data = {

'wd': keyword

}

keyword_post(url, data)

def search_360():

url = "https://www.so.com"

keyword = input("请输入搜索的关键字:")

# q是360需要的关键词

data = {

'q': keyword

}

content = keyword_post(url, data)

with open('360.html', 'wb') as f:

f.write(content)

if __name__ == '__main__':

search_baidu()

search_360()

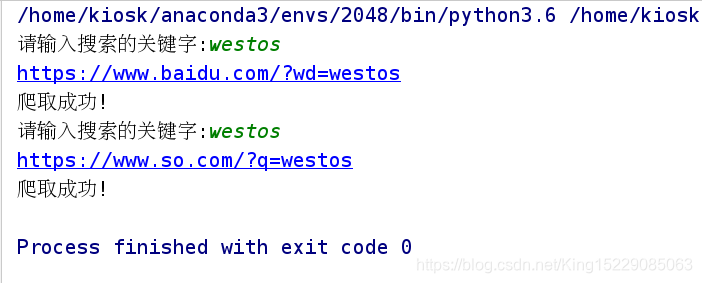

运行结果:

点击链接即可进入搜索结果页面:

百度:https://www.baidu.com/?wd=westos

360:https://www.so.com/?q=westos

上传chinaunix登录数据

import requests

# 1). 上传数据

url = 'http://bbs.chinaunix.net/member.php?mod=logging&action=login&loginsubmit=yes&loginhash=La2A2'

# 这里的用户名和密码写你自己的,鉴于保密用xxxxxx代替

postData = {

'username':'xxxxxx',

'password':'xxxxxx'

}

# post 给网站提交登陆信息

response = requests.post(url,data=postData)

# 2). 将获取的页面写入文件, 用于检测是否爬取成功

with open('doc/chinaunix.html','wb') as f:

f.write(response.content)

# 3). 查看网站的cookie信息

print(response.cookies)

for key,value in response.cookies.items():

print(key + ' = ' + value)

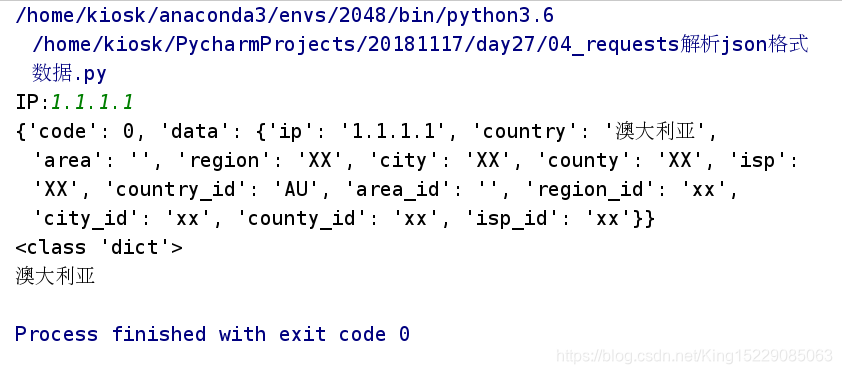

解析json格式的数据

import requests

# 解析json格式

ip = input('IP:')

url = "http://ip.taobao.com/service/getIpInfo.php"

data = {

'ip': ip

}

response = requests.get(url, params=data)

# 将响应的json数据编码为python可以识别的数据类型

content = response.json()

print(content)

print(type(content))

country = content['data']['country']

print(country)

解析json数据为python可以识别的数据类型:

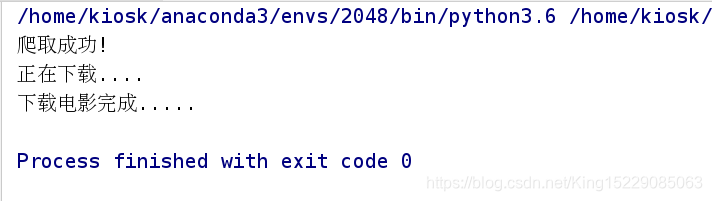

下载指定图片/视频

import requests

def get_content(url):

try:

user_agent = "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.109 Safari/537.36"

response = requests.get(url, headers={'User-Agent': user_agent})

response.raise_for_status()

response.encoding = response.apparent_encoding

except Exception as e:

print("爬取错误")

else:

print("爬取成功!")

return response.content # 下载视频需要的是bytes类型

if __name__ == '__main__':

# 下载图片

url = 'https://gss0.bdstatic.com/-4o3dSag_xI4khGkpoWK1HF6hhy/baike/w%3D268%3Bg%3D0/sign=4f7bf38ac3fc1e17fdbf8b3772ab913e/d4628535e5dde7119c3d076aabefce1b9c1661ba.jpg'

# 下载视频

# url = "http://gslb.miaopai.com/stream/sJvqGN6gdTP-sWKjALzuItr7mWMiva-zduKwuw__.mp4"

movie_content = get_content(url)

print("正在下载....")

with open('doc/movie.jpg', 'wb') as f:

f.write(movie_content)

print("下载电影完成.....")

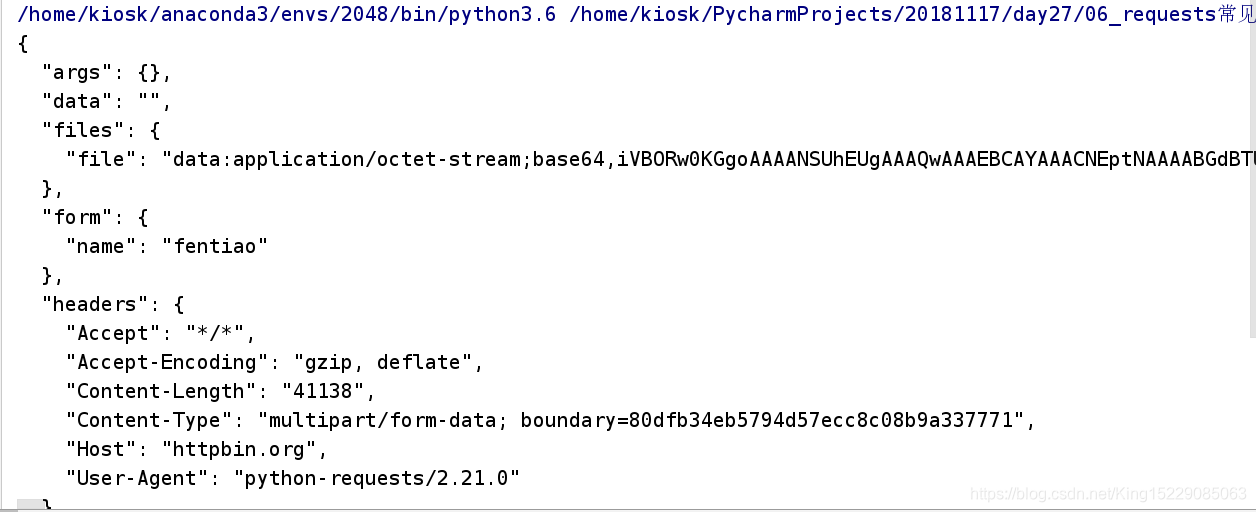

常见使用总结

1.上传文件:指定的文件的内容

import requests

data = {

'name':'fentiao'

}

files = {

# 二进制文件需要指定rb

'file': open('doc/movie.jpg', 'rb')

}

response = requests.post(url='http://httpbin.org/post', data = data, files=files)

print(response.text)

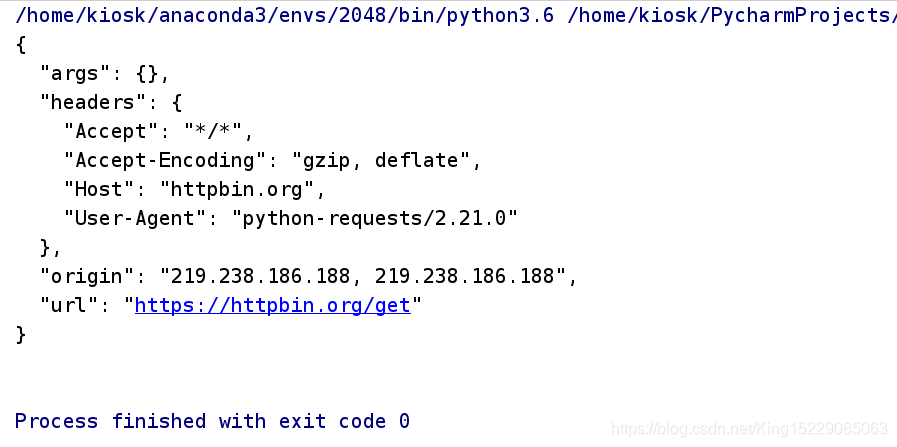

2.设置代理

import requests

proxies = {

'http':'219.238.186.188:8118',

'https':'110.52.235.228:9999'

}

response = requests.get('http://httpbin.org/get', proxies=proxies, timeout=2)

print(response.text)

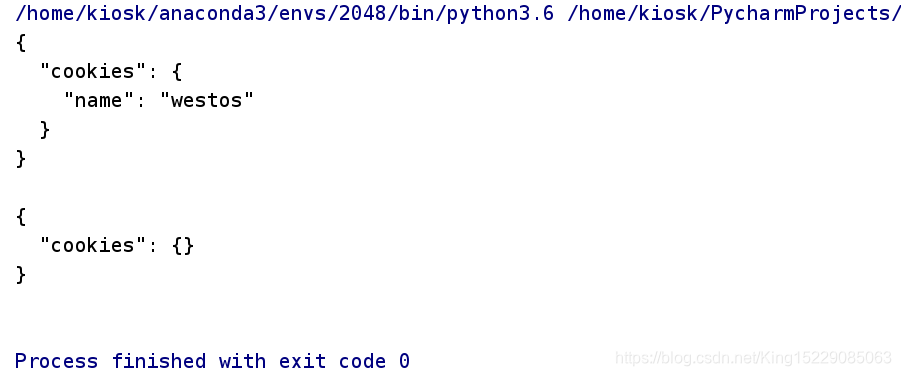

3.cookie信息的保存和加载

客户端的缓存,保持客户端和服务端连接会话session

import requests

seesionObj = requests.session()

# 专门用来设置cookie信息的网址

response1 = seesionObj.get('http://httpbin.org/cookies/set/name/westos')

# 专门用来查看cookie信息的网址

response2 = seesionObj.get('http://httpbin.org/cookies')

print(response2.text)

# 如果没有保持客户端和服务端连接会话session,则查看不到设置的cookie信息

# 专门用来设置cookie信息的网址

response1 = requests.get('http://httpbin.org/cookies/set/name/westos')

# 专门用来查看cookie信息的网址

response2 = requests.get('http://httpbin.org/cookies')

print(response2.text)