Fetching Data with HttpClient GET Requests

1. Overview of the web crawling approach:

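A web crawler follows the same routine as a person visiting a page in a browser. It breaks down into four steps, followed by cleanup:

- Open the browser: create an HttpClient object.
- Enter the URL: create an HttpGet object for the GET request.
- Press Enter: send the request with httpClient and receive the response.
- Parse the response to get the data: check whether the status code is 200; 200 means the request succeeded.
- Finally, close response and httpClient.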

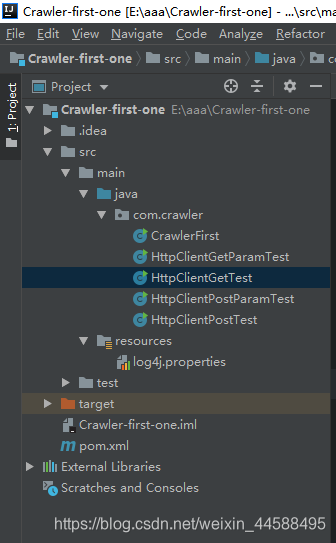
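The steps above, including the closing order at the end, can be sketched with plain JDK code. This is only an illustration: `FakeClient` and `FakeResponse` are hypothetical stand-ins rather than HttpClient classes, and the status code is simulated instead of coming from a real server. The sketch uses try-with-resources, which closes resources in reverse order of declaration, so the response is closed before the client.

```java
import java.io.Closeable;
import java.util.ArrayList;
import java.util.List;

// Stand-in types for illustration only; they are NOT HttpClient classes.
class CrawlStepsSketch {

    // Records what happened, in order, so the sequence of steps is visible.
    static final List<String> LOG = new ArrayList<>();

    static class FakeResponse implements Closeable {
        final int statusCode;
        final String body;

        FakeResponse(int statusCode, String body) {
            this.statusCode = statusCode;
            this.body = body;
        }

        @Override
        public void close() {
            LOG.add("close response");
        }
    }

    static class FakeClient implements Closeable {
        private final int simulatedStatus;

        FakeClient(int simulatedStatus) {
            this.simulatedStatus = simulatedStatus;
        }

        // "Press Enter": pretend to send the request and return a response.
        FakeResponse execute(String url) {
            LOG.add("GET " + url);
            return new FakeResponse(simulatedStatus, "<html>hello</html>");
        }

        @Override
        public void close() {
            LOG.add("close client");
        }
    }

    // Returns the body when the simulated status is 200, otherwise null.
    static String fetch(String url, int simulatedStatus) {
        try (FakeClient client = new FakeClient(simulatedStatus);   // open the browser
             FakeResponse response = client.execute(url)) {         // enter the URL, press Enter
            if (response.statusCode == 200) {                       // check the status code
                return response.body;
            }
            return null;
        } // both resources are closed here, response first, then client
    }

    public static void main(String[] args) {
        System.out.println(fetch("http://www.itcast.cn", 200));
        System.out.println(LOG);
    }
}
```

After one call to `fetch`, `LOG` holds the request entry followed by "close response" and then "close client", which matches the order of the last bullet above.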

2. Project directory structure

For how to create the project, see: https://blog.csdn.net/weixin_44588495/article/details/90580722

3. Fetching data without parameters

    package com.crawler;

    import org.apache.http.HttpEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    import java.io.IOException;

    public class HttpClientGetTest {
        public static void main(String[] args) {
            // 1. Open the browser: create the HttpClient object
            CloseableHttpClient httpClient = HttpClients.createDefault();
            // 2. Enter the URL: create the HttpGet object for the GET request
            HttpGet httpGet = new HttpGet("http://www.itcast.cn");
            CloseableHttpResponse response = null;
            try {
                // 3. Press Enter: send the request with httpClient and receive the response
                response = httpClient.execute(httpGet);
                // 4. Parse the response: check whether the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    String content = EntityUtils.toString(httpEntity, "utf-8");
                    System.out.println(content);
                }
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                // response is still null if execute() threw, so guard before closing
                if (response != null) {
                    try {
                        response.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
                try {
                    httpClient.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }

Run result:

4. Fetching data with parameters

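Here the GET request needs parameters. Set them with the URIBuilder class's setParameter method, then call build to produce the URI that is passed to HttpGet.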
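To make the resulting URL concrete, here is a minimal sketch that assembles the same kind of query string by hand using only JDK classes. `QueryUrlSketch` and `withParams` are hypothetical names introduced for illustration, not part of HttpClient, and URIBuilder's exact encoding rules may differ from `URLEncoder`'s form encoding.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of HttpClient: builds "base?k1=v1&k2=v2" by hand.
class QueryUrlSketch {

    // Appends each parameter to baseUrl, using '?' before the first and '&' afterwards.
    static String withParams(String baseUrl, Map<String, String> params) {
        StringBuilder url = new StringBuilder(baseUrl);
        char separator = '?';
        for (Map.Entry<String, String> entry : params.entrySet()) {
            url.append(separator)
               .append(encode(entry.getKey()))
               .append('=')
               .append(encode(entry.getValue()));
            separator = '&';
        }
        return url.toString();
    }

    // Form-encodes text as UTF-8 (a space becomes '+').
    private static String encode(String text) {
        try {
            return URLEncoder.encode(text, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is required on every Java platform, so this should not happen.
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        Map<String, String> params = new LinkedHashMap<>();
        params.put("keys", "java");
        // prints http://yun.itheima.com/course?keys=java
        System.out.println(withParams("http://yun.itheima.com/course", params));
    }
}
```

In the HttpClient version that follows, URIBuilder takes care of this assembly, so the code only supplies the base address and the parameter.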

    package com.crawler;

    import org.apache.http.HttpEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.client.utils.URIBuilder;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.util.EntityUtils;

    import java.io.IOException;

    public class HttpClientGetParamTest {
        public static void main(String[] args) throws Exception {
            // 1. Open the browser: create the HttpClient object
            CloseableHttpClient httpClient = HttpClients.createDefault();
            // Target address: http://yun.itheima.com/course?keys=java
            // Create the URIBuilder
            URIBuilder uriBuilder = new URIBuilder("http://yun.itheima.com/course");
            // Set the parameter
            uriBuilder.setParameter("keys", "java");
            // 2. Enter the URL: create the HttpGet object for the GET request
            HttpGet httpGet = new HttpGet(uriBuilder.build());
            CloseableHttpResponse response = null;
            try {
                // 3. Press Enter: send the request with httpClient and receive the response
                response = httpClient.execute(httpGet);
                // 4. Parse the response: check whether the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    String content = EntityUtils.toString(httpEntity, "utf-8");
                    System.out.println(content);
                }
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                // response is still null if execute() threw, so guard before closing
                if (response != null) {
                    response.close();
                }
                httpClient.close();
            }
        }
    }

Run result: