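Hadoop can be deployed in four modes: local mode, pseudo-distributed mode, fully distributed mode, and HA (high-availability) fully distributed mode.

The modes differ in two things: how many JVM processes the modules (NameNode, DataNode, ResourceManager, NodeManager, and so on) run in, and how many machines they run on.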

| Mode | JVM processes used by the modules | Machines the modules run on |
|---|---|---|
| Local mode | 1 | 1 |
| Pseudo-distributed mode | N | 1 |
| Fully distributed mode | N | N |
| HA fully distributed mode | N | N |

Installing Hadoop in Local Mode

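Download Hadoop: http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.0.0/hadoop-3.0.0.tar.gz

Extract it into the ~/Programs directory:

mkdir -p ~/Programs

tar -xzf hadoop-3.0.0.tar.gz -C ~/Programs

- Create and enter the directory ~/hadoop-workspace, then prepare the MapReduce input file wc.input with the following content: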

hadoop mapreduce hive

hbase spark storm

sqoop hadoop hive

spark hadoop

- Run the MapReduce demo that ships with Hadoop

~/Programs/hadoop-3.0.0/bin/hadoop jar ~/Programs/hadoop-3.0.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0.jar wordcount wc.input wc.output

- Change into the wc.output directory and inspect the output files:

➜ ls

_SUCCESS part-r-00000

_SUCCESS indicates that the job succeeded, and part-r-00000 is the result file.
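You can compute the expected contents of part-r-00000 in advance with ordinary Unix tools. The minimal sketch below defines a hypothetical helper, local_wordcount, which is not part of Hadoop. It assumes that words are separated by spaces, tabs, or newlines, as in wc.input. It sorts in byte order because that is how Hadoop orders Text keys.

```shell
# Hypothetical helper (not part of Hadoop): reproduce the WordCount result for a
# local text file with standard Unix tools, as a sanity check for part-r-00000.
local_wordcount() {
  # 1. tr: turn every run of spaces/tabs/newlines into a single newline (one word per line)
  # 2. grep: drop an empty line left by leading whitespace
  # 3. sort in byte order (LC_ALL=C), the order Hadoop uses for Text keys
  # 4. uniq -c counts each word; awk prints "word<TAB>count" like the job output
  tr -s ' \t\n' '\n\n\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Run from ~/hadoop-workspace, local_wordcount wc.input should print the same seven lines as the cat part-r-00000 output below.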

➜ cat part-r-00000

hadoop 3

hbase 1

hive 2

mapreduce 1

spark 2

sqoop 1

storm 1

Installing Hadoop in Pseudo-Distributed Mode

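- Configure the Hadoop environment variables

vim /etc/profile

# Append the following two lines (use $HOME: a ~ inside double quotes is not expanded)

export HADOOP_HOME="$HOME/Programs/hadoop-3.0.0"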

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

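# Save the file, then source it so the changes take effect

source /etc/profile

- Configure ${HADOOP_HOME}/etc/hadoop/core-site.xml with:

- The HDFS address (fs.defaultFS)

- The Hadoop temporary directory (hadoop.tmp.dir), here /opt/data/tmp; the user running Hadoop needs write access to it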

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/data/tmp</value>

</property>

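</configuration>

- Configure hdfs-site.xml

dfs.replication sets the number of replicas HDFS keeps of each block. A pseudo-distributed cluster has only one DataNode, so the value is set to 1.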

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

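</configuration>

- Format HDFS

~/Programs/hadoop-3.0.0/bin/hdfs namenode -format

Formatting initializes the NameNode's metadata storage. It creates an empty file system image (fsimage) and a VERSION file that holds newly generated identifiers such as namespaceID and clusterID. Block data is written to the DataNodes only later, when files are stored in HDFS.

After formatting, check whether a dfs directory now exists under the directory set by hadoop.tmp.dir in core-site.xml. If it does, the format succeeded.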
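You can script this check. The minimal sketch below uses a hypothetical helper, namenode_formatted. It assumes the default dfs.namenode.name.dir, which is the dfs/name directory under hadoop.tmp.dir. As a slightly stricter test than the dfs directory alone, it treats the presence of current/VERSION there as evidence that formatting succeeded.

```shell
# Hypothetical helper: succeed if `hdfs namenode -format` has initialized the
# NameNode metadata directory under the given hadoop.tmp.dir.
# Assumes the default dfs.namenode.name.dir, i.e. ${hadoop.tmp.dir}/dfs/name.
namenode_formatted() {
  tmp_dir="${1:-/opt/data/tmp}"   # value of hadoop.tmp.dir from core-site.xml
  [ -f "$tmp_dir/dfs/name/current/VERSION" ]
}
```

For example, namenode_formatted /opt/data/tmp && echo "HDFS is formatted".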

- Inspect the NameNode directory after formatting

➜ ll /opt/data/tmp/dfs/name/current

total 32

-rw-r--r-- 1 chuxing wheel 214B 3 22 21:28 VERSION

-rw-r--r-- 1 chuxing wheel 397B 3 22 21:28 fsimage_0000000000000000000

-rw-r--r-- 1 chuxing wheel 62B 3 22 21:28 fsimage_0000000000000000000.md5

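-rw-r--r-- 1 chuxing wheel 2B 3 22 21:28 seen_txid

The VERSION file stores:

- namespaceID: the unique ID of the NameNode's namespace.

- clusterID: the cluster ID. The NameNode and the DataNodes must have the same clusterID, which identifies them as members of one cluster.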
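You can check the clusterID rule by comparing the NameNode's and DataNode's VERSION files. The minimal sketch below uses two hypothetical helpers, cluster_id and cluster_ids_match. It assumes the default storage locations under hadoop.tmp.dir: dfs/name/current/VERSION for the NameNode and dfs/data/current/VERSION for the DataNode. The DataNode's file exists only after the DataNode has started at least once. A mismatch typically appears when the NameNode is reformatted while old DataNode data is kept.

```shell
# Hypothetical helper: print the clusterID recorded in a VERSION file.
cluster_id() {
  sed -n 's/^clusterID=//p' "$1"
}

# Hypothetical helper: succeed if the NameNode and DataNode VERSION files under
# the given hadoop.tmp.dir carry the same, non-empty clusterID.
# Assumes the default dfs.namenode.name.dir and dfs.datanode.data.dir.
cluster_ids_match() {
  tmp_dir="${1:-/opt/data/tmp}"
  nn=$(cluster_id "$tmp_dir/dfs/name/current/VERSION")
  dn=$(cluster_id "$tmp_dir/dfs/data/current/VERSION")
  [ -n "$nn" ] && [ "$nn" = "$dn" ]
}
```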

Contents of the VERSION file:

#Thu Mar 22 21:28:23 CST 2018

namespaceID=1277421845

clusterID=CID-a41907d3-7347-4ed2-95a8-0f9758d4a9db

cTime=1521725303613

storageType=NAME_NODE

blockpoolID=BP-1635914868-127.0.0.1-1521725303613

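layoutVersion=-64

- Start the NameNode

${HADOOP_HOME}/sbin/hadoop-daemon.sh start namenode

- Start the DataNode

${HADOOP_HOME}/sbin/hadoop-daemon.sh start datanode

- Start the SecondaryNameNode

${HADOOP_HOME}/sbin/hadoop-daemon.sh start secondarynamenode

The NameNode, DataNode, and SecondaryNameNode can also be started with the hdfs command, which Hadoop 3 prefers over the deprecated hadoop-daemon.sh script: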

# In the foreground (each command keeps its own terminal busy)

➜ ~ hdfs namenode

➜ ~ hdfs secondarynamenode

➜ ~ hdfs datanode

# Or start them in the background as daemons

➜ ~ hdfs --daemon start namenode

➜ ~ hdfs --daemon start datanode

➜ ~ hdfs --daemon start secondarynamenode

- Use the jps command to check whether the daemons started successfully

➜ ~ jps

47203 SecondaryNameNode

47508 DataNode

47572 Jps

47112 NameNode

- Test uploading, viewing, and downloading files

➜ ~ hdfs dfs -mkdir /demo

➜ ~ hdfs dfs -put ~/hadoop-workspace/wc.input /demo

➜ ~ hdfs dfs -cat /demo/wc.input

➜ ~ hdfs dfs -get /demo/wc.input

Configuring and Starting YARN

- Configure mapred-site.xml

vim ${HADOOP_HOME}/etc/hadoop/mapred-site.xml

Add the following content:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- Configure yarn-site.xml

vim ${HADOOP_HOME}/etc/hadoop/yarn-site.xml

Add the following content:

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>localhost</value>

</property>

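</configuration>

- yarn.nodemanager.aux-services: registers mapreduce_shuffle as an auxiliary service of the NodeManager. MapReduce jobs on YARN use this shuffle service to transfer map output to the reducers.

- yarn.resourcemanager.hostname: the host that the ResourceManager runs on.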
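Hadoop property names are case-sensitive. A misspelled key such as yarn.resourceManager.hostname is therefore ignored, and the default value applies. A quick safeguard is to look up each key exactly as written. The minimal sketch below is a hypothetical awk helper, conf_value. It assumes each <name> and <value> tag sits on a single line, as in the files above. It is not an XML parser and does not replace Hadoop's own configuration loading.

```shell
# Hypothetical helper: print the value of property $2 (exact, case-sensitive name)
# from Hadoop-style config file $1. Assumes one <name>/<value> tag per line.
conf_value() {
  awk -v key="$2" '
    /<name>/ {
      name = $0
      sub(/.*<name>[ \t]*/, "", name)      # strip everything up to <name>
      sub(/[ \t]*<\/name>.*/, "", name)    # strip </name> and the rest
      found = (name == key)                # string comparison is case-sensitive
    }
    found && /<value>/ {
      val = $0
      sub(/.*<value>[ \t]*/, "", val)
      sub(/[ \t]*<\/value>.*/, "", val)
      print val
      found = 0
    }
  ' "$1"
}
```

For example, conf_value ${HADOOP_HOME}/etc/hadoop/yarn-site.xml yarn.resourcemanager.hostname should print localhost. An empty result means the key is missing or spelled differently.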

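- Start the ResourceManager (yarn-daemon.sh still works in Hadoop 3 but is deprecated; as the warning shows, it forwards to yarn --daemon start)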

➜ ~ ${HADOOP_HOME}/sbin/yarn-daemon.sh start resourcemanager

WARNING: Use of this script to start YARN daemons is deprecated.

WARNING: Attempting to execute replacement "yarn --daemon start" instead.

- Start the NodeManager

➜ ~ ${HADOOP_HOME}/sbin/yarn-daemon.sh start nodemanager

WARNING: Use of this script to start YARN daemons is deprecated.

WARNING: Attempting to execute replacement "yarn --daemon start" instead.

- Check whether they started successfully

➜ ~ jps

54032 SecondaryNameNode

53969 DataNode

52755 Launcher

28421 NailgunRunner

77940 ResourceManager

78278 Jps

78185 NodeManager

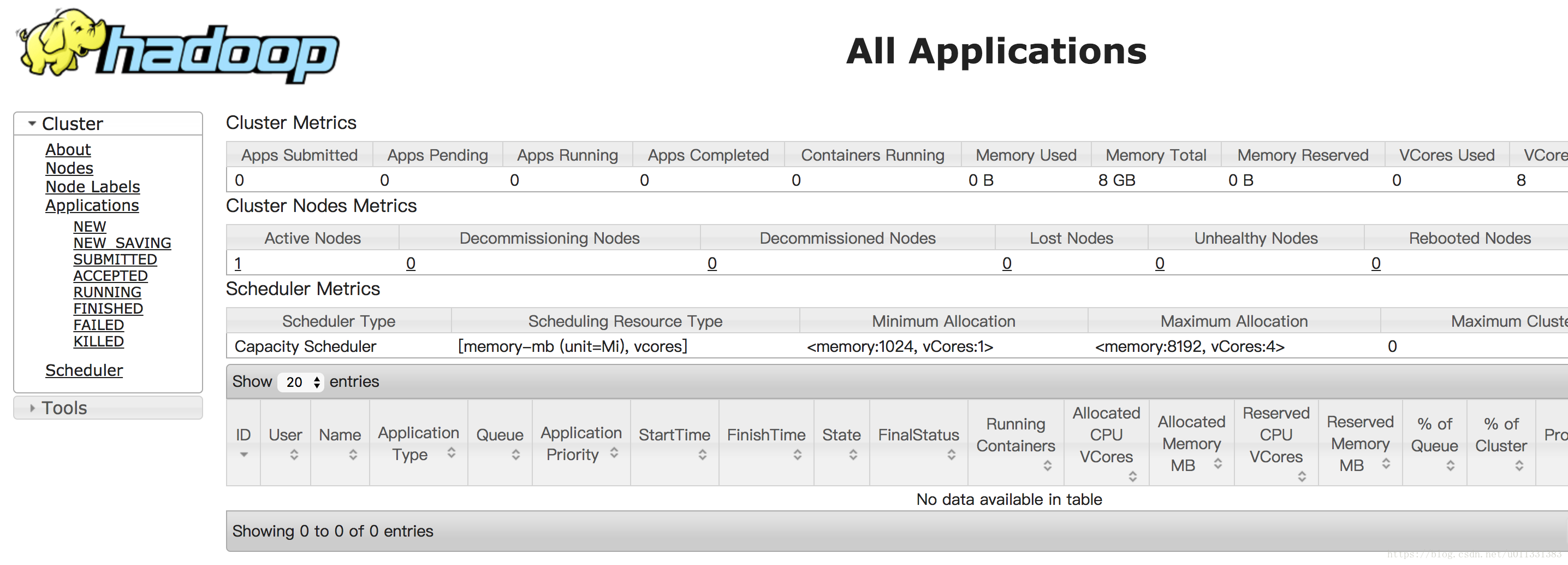

53881 NameNodeYARN的Web页面

YARN的Web客户端端口号是8088,通过http://localhost:8088/可以查看。

Running the WordCount MapReduce Job

${HADOOP_HOME}/bin/yarn jar ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0.jar wordcount /demo/wc.input /demo/wc.output

The output log is as follows:

➜ ~ ${HADOOP_HOME}/bin/yarn jar ${HADOOP_HOME}/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0.jar wordcount /demo/wc.input /demo/wc.output

2018-03-28 17:14:23,183 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2018-03-28 17:14:23,888 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2018-03-28 17:14:24,348 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/chuxing/.staging/job_1522226869846_0004

2018-03-28 17:14:24,529 INFO input.FileInputFormat: Total input files to process : 1

2018-03-28 17:14:24,579 INFO mapreduce.JobSubmitter: number of splits:1

2018-03-28 17:14:24,607 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

2018-03-28 17:14:24,691 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1522226869846_0004

2018-03-28 17:14:24,693 INFO mapreduce.JobSubmitter: Executing with tokens: []

2018-03-28 17:14:24,851 INFO conf.Configuration: resource-types.xml not found

2018-03-28 17:14:24,852 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2018-03-28 17:14:24,910 INFO impl.YarnClientImpl: Submitted application application_1522226869846_0004

2018-03-28 17:14:24,941 INFO mapreduce.Job: The url to track the job: http://localhost:8088/proxy/application_1522226869846_0004/

2018-03-28 17:14:24,942 INFO mapreduce.Job: Running job: job_1522226869846_0004

2018-03-28 17:14:32,041 INFO mapreduce.Job: Job job_1522226869846_0004 running in uber mode : false

2018-03-28 17:14:32,042 INFO mapreduce.Job: map 0% reduce 0%

2018-03-28 17:14:36,087 INFO mapreduce.Job: map 100% reduce 0%

2018-03-28 17:14:41,128 INFO mapreduce.Job: map 100% reduce 100%

2018-03-28 17:14:41,135 INFO mapreduce.Job: Job job_1522226869846_0004 completed successfully

2018-03-28 17:14:41,223 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=94

FILE: Number of bytes written=411915

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=171

HDFS: Number of bytes written=60

HDFS: Number of read operations=8

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=2413

Total time spent by all reduces in occupied slots (ms)=2399

Total time spent by all map tasks (ms)=2413

Total time spent by all reduce tasks (ms)=2399

Total vcore-milliseconds taken by all map tasks=2413

Total vcore-milliseconds taken by all reduce tasks=2399

Total megabyte-milliseconds taken by all map tasks=2470912

Total megabyte-milliseconds taken by all reduce tasks=2456576

Map-Reduce Framework

Map input records=4

Map output records=11

Map output bytes=115

Map output materialized bytes=94

Input split bytes=100

Combine input records=11

Combine output records=7

Reduce input groups=7

Reduce shuffle bytes=94

Reduce input records=7

Reduce output records=7

Spilled Records=14

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=88

CPU time spent (ms)=0

Physical memory (bytes) snapshot=0

Virtual memory (bytes) snapshot=0

Total committed heap usage (bytes)=444596224

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=71

File Output Format Counters

Bytes Written=60

If the job fails with "Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app.MRAppMaster", run hadoop classpath and set its output as the value of yarn.application.classpath in yarn-site.xml.
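You can script this fix. The minimal sketch below defines a hypothetical helper, yarn_classpath_property. It wraps a classpath string, by default the output of hadoop classpath, in a <property> element that you can paste into the <configuration> block of yarn-site.xml. It assumes the classpath contains no XML special characters such as & or <, which is typical for installation paths.

```shell
# Hypothetical helper: print a yarn.application.classpath <property> element.
# $1 (optional): the classpath to use; defaults to the output of `hadoop classpath`,
# which is only run when no argument is given.
yarn_classpath_property() {
  _cp="${1:-$(hadoop classpath)}"
  printf '<property>\n<name>yarn.application.classpath</name>\n<value>%s</value>\n</property>\n' "$_cp"
}
```

Running yarn_classpath_property with no argument prints the element for the current installation. Copy it inside <configuration> in ${HADOOP_HOME}/etc/hadoop/yarn-site.xml.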

- Inspect the output directory

➜ ~ hdfs dfs -ls /demo/wc.output

Found 2 items

-rw-r--r-- 1 chuxing supergroup 0 2018-03-28 17:14 /demo/wc.output/_SUCCESS

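-rw-r--r-- 1 chuxing supergroup 60 2018-03-28 17:14 /demo/wc.output/part-r-00000

The output directory contains two files. _SUCCESS is an empty file; its presence indicates that the job completed successfully.

part-r-00000 is the result file. The -r- indicates that the reduce phase produced it. A MapReduce job can run without a reduce phase, but it always has a map phase. When there is no reduce phase, the file name contains -m- instead.

Each reduce task produces one file whose name starts with part-r-.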
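The naming scheme works as follows. Task i of a phase writes part-r-NNNNN, or part-m-NNNNN for a map-only job, where NNNNN is i padded with zeros to five digits. The minimal sketch below is a hypothetical helper, expected_part_files, that lists the file names to expect for a given number of tasks.

```shell
# Hypothetical helper: list the output file names produced by $1 tasks.
# $2 (optional): "r" for reduce output (default) or "m" for a map-only job.
expected_part_files() {
  n="$1"
  phase="${2:-r}"
  i=0
  while [ "$i" -lt "$n" ]; do
    printf 'part-%s-%05d\n' "$phase" "$i"   # task index zero-padded to 5 digits
    i=$((i + 1))
  done
}
```

With the single reducer used above, expected_part_files 1 prints part-r-00000.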

- Stop Hadoop

hdfs --daemon stop namenode

hdfs --daemon stop datanode

hdfs --daemon stop secondarynamenode

yarn --daemon stop nodemanager

yarn --daemon stop resourcemanager

For convenience, you can wrap the start and stop commands in shell scripts.

hdfs-start.sh:

#!/bin/sh
set -e

hdfs --daemon start namenode
hdfs --daemon start datanode
hdfs --daemon start secondarynamenode
yarn --daemon start nodemanager
yarn --daemon start resourcemanager

# Report whether jps lists a process whose name is exactly $1 (case-insensitive).
# Matching the whole name keeps "namenode" from also matching SecondaryNameNode.
check() {
  if jps | awk '{print $2}' | grep -qix "$1"; then
    echo "$1 started successfully"
  else
    echo "$1 failed to start"
  fi
}

check namenode
check datanode
check secondarynamenode
check nodemanager
check resourcemanager

hdfs-stop.sh:

#!/bin/sh

set -ex

hdfs --daemon stop namenode

hdfs --daemon stop datanode

hdfs --daemon stop secondarynamenode

yarn --daemon stop nodemanager

yarn --daemon stop resourcemanager