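# Requires TensorFlow 1.x: tf.contrib and tf.examples.tutorials were removed in TensorFlow 2
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Download (if needed) and load MNIST, with labels as one-hot vectors of length 10
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)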
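With `one_hot=True`, each label is stored as a length-10 vector with a single 1 at the digit's index instead of as an integer. This is why the label placeholder and `n_class` both use 10. The helper `one_hot` below is a hypothetical pure-Python sketch of that encoding. It is not the loader's own code.

```python
def one_hot(label, num_classes=10):
    """Return a list of length num_classes with 1.0 at index `label` (hypothetical helper)."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

print(one_hot(3))  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```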

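n_inputs = 28    # values fed in per time step: one 28-pixel image row
max_time = 28    # time steps per sequence: the 28 rows of an image
lstm_size = 100  # hidden units in the LSTM cell
n_class = 10     # output classes: digits 0-9
batch_size = 50
n_batch = mnist.train.num_examples // batch_size  # mini-batches per epoch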
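The model reads each 784-pixel image as a sequence of `max_time = 28` time steps, one per image row, and each step carries `n_inputs = 28` pixel values. `tf.reshape(x, [-1, max_time, n_inputs])` inside `RNN` does this for a whole batch. The sketch below uses a hypothetical helper, `to_sequence`, to show the same row-major split for a single image. With the standard 55,000-image training split, `n_batch` is 55000 // 50 = 1100 mini-batches per epoch.

```python
def to_sequence(pixels, max_time=28, n_inputs=28):
    """Split a flat, row-major image into max_time rows of n_inputs values (hypothetical helper)."""
    if len(pixels) != max_time * n_inputs:
        raise ValueError("expected %d pixels" % (max_time * n_inputs))
    return [pixels[t * n_inputs:(t + 1) * n_inputs] for t in range(max_time)]

flat = list(range(784))       # stand-in for one flattened 28x28 image
seq = to_sequence(flat)
print(len(seq), len(seq[0]))  # 28 28
print(seq[1][:3])             # [28, 29, 30]: row 1 starts at pixel 28
```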

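# Flattened 28x28 input images and their one-hot labels
x = tf.placeholder(tf.float32, [None, 784])
y = tf.placeholder(tf.float32, [None, 10])

# Output layer: maps the final LSTM hidden state to 10 class scores
weights = tf.Variable(tf.truncated_normal([lstm_size, n_class], stddev=0.1))
biases = tf.Variable(tf.constant(0.1, shape=[n_class]))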
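`tf.truncated_normal` draws from a normal distribution but drops and redraws any value more than two standard deviations from the mean. With `stddev=0.1`, every initial weight therefore lies within 0.2 of zero. The function below is a hypothetical pure-Python sketch of that rejection sampling. It builds a matrix with the same `[lstm_size, n_class]` shape and is not TensorFlow's implementation.

```python
import random

def truncated_normal(shape, stddev=0.1, mean=0.0, rng=random):
    """Sample a rows x cols matrix, redrawing values beyond 2 stddev from the mean."""
    rows, cols = shape
    matrix = []
    for _ in range(rows):
        row = []
        for _ in range(cols):
            value = rng.gauss(mean, stddev)
            while abs(value - mean) > 2 * stddev:  # reject and redraw
                value = rng.gauss(mean, stddev)
            row.append(value)
        matrix.append(row)
    return matrix

random.seed(0)
w = truncated_normal([100, 10], stddev=0.1)
print(len(w), len(w[0]))  # 100 10
```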

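def RNN(x, weights, biases):
    # Reshape each flattened image into a sequence: [batch, max_time, n_inputs]
    inputs = tf.reshape(x, [-1, max_time, n_inputs])
    #lstm_cell = tf.contrib.rnn.core_rnn_cell.BasicLSTMCell(lstm_size)
    lstm_cell = tf.contrib.rnn.BasicLSTMCell(lstm_size)
    # final_state is an LSTMStateTuple (c, h); final_state[1] is the last hidden state h
    outputs, final_state = tf.nn.dynamic_rnn(lstm_cell, inputs, dtype=tf.float32)
    # Return raw logits: softmax_cross_entropy_with_logits applies softmax itself,
    # so wrapping this in tf.nn.softmax would apply softmax twice
    results = tf.matmul(final_state[1], weights) + biases
    return results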
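`BasicLSTMCell` keeps two state vectors, the cell state `c` and the hidden state `h`. `dynamic_rnn` returns the final pair as an `LSTMStateTuple(c, h)`, which is why `final_state[1]` selects the last hidden state. The sketch below is a hypothetical single-unit (scalar) version of the LSTM update that `BasicLSTMCell` applies at each step. It includes the cell's default `forget_bias` of 1.0, which is added to the forget gate. A real cell uses weight matrices over the concatenated input and hidden state, and the weights here are invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h, c, params, forget_bias=1.0):
    """One LSTM step for a single unit with scalar input x and state (c, h).

    params maps each gate name to hypothetical weights (w_x, w_h, b):
    'i' input gate, 'j' candidate value, 'f' forget gate, 'o' output gate.
    """
    def gate(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h + b

    i, j, f, o = gate('i'), gate('j'), gate('f'), gate('o')
    new_c = c * sigmoid(f + forget_bias) + sigmoid(i) * math.tanh(j)
    new_h = math.tanh(new_c) * sigmoid(o)
    return new_h, new_c

# Hypothetical weights; feed a 28-step sequence, like the 28 rows of one image
params = {'i': (0.5, 0.1, 0.0), 'j': (0.8, -0.2, 0.0),
          'f': (0.3, 0.4, 0.0), 'o': (0.6, 0.1, 0.0)}
h, c = 0.0, 0.0
for x_t in [0.1 * t for t in range(28)]:
    h, c = lstm_step(x_t, h, c, params)
final_state = (c, h)  # mirrors LSTMStateTuple(c, h): index 1 is h
print("final hidden state h:", final_state[1])
```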

pre = RNN(x, weights, biases)

loss = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(labels=y, logits=pre))  # cross-entropy loss
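`tf.nn.softmax_cross_entropy_with_logits` applies softmax to its `logits` argument internally. It should therefore receive the raw scores from the final matmul-plus-bias, not the output of `tf.nn.softmax`. Passing probabilities applies softmax twice. With 10 classes, a double softmax caps the probability of the true class at e/(e + 9) ≈ 0.23, so the loss can never fall below about 1.46. The sketch below uses hypothetical helper names and plain Python. It computes the loss as the op defines it, using the log-sum-exp form for numerical stability. It then compares a logits input with a probabilities input for a confident, correct prediction.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_cross_entropy(labels, logits):
    """Cross-entropy between a one-hot label and softmax(logits), via log-sum-exp."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(labels, logits))

labels = [0.0] * 10
labels[3] = 1.0
logits = [0.0] * 10
logits[3] = 10.0  # a confident, correct prediction

correct = softmax_cross_entropy(labels, logits)          # raw logits in
double = softmax_cross_entropy(labels, softmax(logits))  # probabilities in
print(correct, double)
```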

train = tf.train.AdamOptimizer(1e-4).minimize(loss)
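`tf.train.AdamOptimizer(1e-4)` sets only the learning rate. The other hyperparameters keep their TensorFlow defaults (`beta1=0.9`, `beta2=0.999`, `epsilon=1e-08`). The function below is a hypothetical scalar sketch of the standard Adam update. It minimizes f(θ) = (θ − 3)² and uses a larger learning rate than 1e-4 so that it converges within a few thousand steps. TensorFlow's implementation folds the bias correction into the step size, so epsilon enters slightly differently than it does here.

```python
import math

def adam_minimize(grad, theta, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=5000):
    """Run the standard Adam update on a single scalar parameter."""
    m, v = 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g      # first-moment (mean) estimate
        v = beta2 * v + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m / (1 - beta1 ** t)         # bias corrections for the zero init
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta

# Minimize f(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3)
theta = adam_minimize(lambda th: 2 * (th - 3), theta=0.0)
print(theta)
```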

# Fraction of examples whose predicted class (argmax) matches the label
correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pre, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
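`tf.argmax(..., 1)` picks the index of the largest entry in each row. `correct_prediction` is therefore true wherever the predicted digit matches the one-hot label, and casting to float and averaging gives the fraction correct. Softmax preserves ordering, so the argmax of logits and the argmax of probabilities give the same prediction. Below is a pure-Python sketch of the same computation on a made-up batch. It uses hypothetical helpers and 3 classes instead of 10 for brevity.

```python
def argmax(row):
    """Index of the largest value in a list."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy(labels, scores):
    """Fraction of rows where argmax(scores) equals argmax(one-hot label)."""
    matches = [argmax(y) == argmax(s) for y, s in zip(labels, scores)]
    return sum(1.0 for m in matches if m) / len(matches)

labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0]]  # made-up one-hot labels
scores = [[0.1, 2.0, -1.0], [0.3, 0.2, 0.1], [1.5, 0.0, 0.4], [-0.5, 0.7, 0.2]]
print(accuracy(labels, scores))  # 0.75
```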

# Per-epoch accuracy history (room for up to 31 epochs) and the epoch indices
train_acc = 31 * [0]
test_acc = 31 * [0]
step = list(range(31))

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())

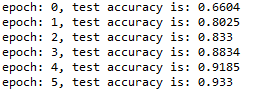

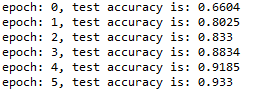

    for epoch in range(6):
        # One pass over the training data, one mini-batch at a time
        for batch in range(n_batch):
            batch_x, batch_y = mnist.train.next_batch(batch_size)
            sess.run(train, feed_dict={x: batch_x, y: batch_y})
        # Evaluate on the full training and test sets after each epoch
        train_acc[epoch] = sess.run(accuracy,
                                    feed_dict={x: mnist.train.images, y: mnist.train.labels})
        test_acc[epoch] = sess.run(accuracy,
                                   feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print('epoch: ' + str(epoch) + ', test accuracy is: ' + str(test_acc[epoch]))
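Each epoch runs `n_batch` updates of `batch_size` examples, so it covers about one full pass over the training set. By default, `mnist.train.next_batch` also reshuffles the data when it starts a new pass. The generator below, `iterate_minibatches`, is a hypothetical pure-Python sketch of that epoch-wise shuffling and is not the loader's actual code. It shuffles the indices once per epoch and yields consecutive slices. Unlike `next_batch`, which carries leftover examples into the next pass, it simply drops any examples that do not fill a complete batch.

```python
import random

def iterate_minibatches(num_examples, batch_size, rng):
    """Yield lists of example indices for one epoch, shuffled, in full batches only."""
    indices = list(range(num_examples))
    rng.shuffle(indices)
    for start in range(0, num_examples - batch_size + 1, batch_size):
        yield indices[start:start + batch_size]

rng = random.Random(42)
batches = list(iterate_minibatches(num_examples=230, batch_size=50, rng=rng))
print(len(batches))  # 4 full batches; the remaining 30 examples are dropped
```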