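The previous post, "A Basic Tutorial on the Python urllib Package", covered the basics of urllib. Compared with urllib, Requests is more convenient and more capable, and it is the preferred library for writing crawlers.

Requests is an HTTP library written in Python on top of urllib3 and released under the Apache 2 license.

In short, requests is the simplest and easiest-to-use HTTP library available for Python, and it is the recommended choice for crawlers.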

Installation

Install with pip:

$ pip3 install requests

Alternatively, use the easy_install command (deprecated; pip is preferred):

$ easy_install requests

Or install it from PyCharm's "Settings" dialog.

Sending requests

Request methods:

- requests.get()

- requests.post()

First, import the Requests module:

import requests

GET request example:

Fetch the public events feed from the GitHub API:

r = requests.get('https://api.github.com/events')

POST request example:

r = requests.post('http://httpbin.org/post', data={'key': 'value'})

Other HTTP request types:

r = requests.put('http://httpbin.org/put', data={'key': 'value'})

r = requests.delete('http://httpbin.org/delete')

r = requests.head('http://httpbin.org/get')

r = requests.options('http://httpbin.org/get')
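Each of these shortcuts is a thin wrapper around requests.request(method, url, **kwargs). You can see exactly what one of them would send, without any network access, by building a requests.Request and calling its prepare() method. The sketch below does this for the PUT and DELETE calls above. Nothing is sent to httpbin.org.

```python
import requests

# Build, but do not send, the same PUT and DELETE requests as above.
# prepare() returns a PreparedRequest whose method, URL, headers and body can be inspected.
put_req = requests.Request("PUT", "http://httpbin.org/put", data={"key": "value"}).prepare()
delete_req = requests.Request("DELETE", "http://httpbin.org/delete").prepare()

print(put_req.method, put_req.url, put_req.body)  # PUT http://httpbin.org/put key=value
print(put_req.headers["Content-Type"])            # application/x-www-form-urlencoded
print(delete_req.method, delete_req.body)         # DELETE None
```

To actually send a prepared request, pass it to requests.Session().send(...).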

Passing parameters

When sending a request you often need to pass parameters to the server. They are usually placed in the URL as key/value pairs after a question mark, as in httpbin.org/get?key=val. Requests lets you supply them as a dictionary of strings through the params keyword argument.

Example:

# 1. Pass parameters as key/value pairs
payload = {'key1': 'value1', 'key2': 'value2'}
r = requests.get("http://httpbin.org/get", params=payload)
print(r.url)

# 1. Output
http://httpbin.org/get?key1=value1&key2=value2

# 2. Pass a list as a value
payload = {'key1': 'value1', 'key2': ['value2', 'value3']}
r = requests.get("http://httpbin.org/get", params=payload)
print(r.url)

# 2. Output
http://httpbin.org/get?key1=value1&key2=value2&key2=value3

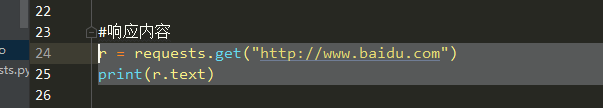
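You can check which URL a given params value produces by preparing the request offline. build_url below is a hypothetical helper, not part of Requests. The sketch also shows that a key whose value is None is left out of the query string entirely.

```python
import requests


def build_url(base_url, params):
    """Hypothetical helper: return the URL requests.get(base_url, params=params) would request."""
    # prepare() encodes params into the query string without opening a connection
    return requests.Request("GET", base_url, params=params).prepare().url


print(build_url("http://httpbin.org/get", {"key1": "value1", "key2": ["value2", "value3"]}))
# http://httpbin.org/get?key1=value1&key2=value2&key2=value3

# Spaces are encoded as '+', and the None-valued key is dropped.
print(build_url("http://httpbin.org/get", {"q": "python requests", "page": None}))
# http://httpbin.org/get?q=python+requests

# New parameters are appended to a query string already present in the URL.
print(build_url("http://httpbin.org/get?a=1", {"b": "2"}))
# http://httpbin.org/get?a=1&b=2
```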

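Response content

Read the body of the server's response as text through r.text.

Example:

r = requests.get('http://www.baidu.com')
print(r.text)

Output. The Chinese text comes out garbled because Requests guessed the wrong encoding, as explained in the next section. The title, for example, should read 百度一下，你就知道: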

<!DOCTYPE html>

<!--STATUS OK--><html> <head><meta http-equiv=content-type content=text/html;charset=utf-8><meta http-equiv=X-UA-Compatible content=IE=Edge><meta content=always name=referrer><link rel=stylesheet type=text/css href=http://s1.bdstatic.com/r/www/cache/bdorz/baidu.min.css><title>ç™¾åº¦ä¸€ä¸‹ï¼Œä½ å°±çŸ¥é“</title></head> <body link=#0000cc> ... </body> </html>

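Encoding

After the request is sent, Requests makes an educated guess about the encoding of the response based on its HTTP headers:

r.encoding

# Output
'utf-8'

Changing the encoding

Assign to r.encoding to change it. From then on, r.text is decoded with the new encoding:

r.encoding = 'ISO-8859-1'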
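The garbled Baidu output earlier shows what happens when this guess goes wrong. If the Content-Type header of a text/* response names no charset, Requests falls back to ISO-8859-1, even when the page itself is UTF-8. That is presumably what happened with the Baidu page, whose charset appears only in an HTML meta tag.

The sketch below calls requests.utils.get_encoding_from_headers, the helper Requests uses for this header-based guess. It then reproduces the damage and the repair with plain str/bytes operations; the sample string is just the Baidu button text. With a real response, the fix is to set r.encoding = 'utf-8' before reading r.text. Alternatively, set r.encoding = r.apparent_encoding, which guesses from the body.

```python
from requests.utils import get_encoding_from_headers

# The header-based guess: a charset parameter wins; plain text/* falls back to ISO-8859-1.
print(get_encoding_from_headers({"content-type": "text/html; charset=utf-8"}))  # utf-8
print(get_encoding_from_headers({"content-type": "text/html"}))                 # ISO-8859-1

# Reproduce the garbling: UTF-8 bytes decoded with the ISO-8859-1 fallback.
raw = "百度一下".encode("utf-8")    # the bytes the server actually sent
garbled = raw.decode("ISO-8859-1")  # what r.text shows when r.encoding is ISO-8859-1
print(repr(garbled))

# ISO-8859-1 maps every byte to exactly one character, so the wrong decode can be undone.
repaired = garbled.encode("ISO-8859-1").decode("utf-8")
print(repaired)  # 百度一下
```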

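Binary response content

For non-text requests such as images, the response body can also be accessed as bytes through r.content. Requests automatically decodes responses sent with gzip or deflate transfer encoding, so r.content holds the decoded bytes.

Example:

from io import BytesIO
from PIL import Image  # provided by Pillow: pip3 install Pillow

r = requests.get("https://huaban.com/explore/miaoxingrenchahua.jpg")
print(r.content)         # the response body as bytes
bi = BytesIO(r.content)  # wrap the bytes in an in-memory file-like object
print(bi)
i = Image.open(bi)       # let PIL parse the image from memory
print(i)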
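Before handing r.content to PIL, it can help to check that the bytes really are an image. A missing file often comes back as an HTML error page instead. Below is a minimal sketch with a hypothetical helper, sniff_image_type, that checks the well-known PNG, JPEG and GIF signatures. Only these three formats are covered.

```python
from typing import Optional

# Leading "magic" bytes of a few common image formats.
SIGNATURES = {
    b"\x89PNG\r\n\x1a\n": "png",
    b"\xff\xd8\xff": "jpeg",
    b"GIF87a": "gif",
    b"GIF89a": "gif",
}


def sniff_image_type(data: bytes) -> Optional[str]:
    """Hypothetical helper: 'png', 'jpeg' or 'gif' if data starts with that signature, else None."""
    for magic, kind in SIGNATURES.items():
        if data.startswith(magic):
            return kind
    return None


print(sniff_image_type(b"\x89PNG\r\n\x1a\n" + b"\x00" * 8))  # png
print(sniff_image_type(b"<!DOCTYPE html>"))                  # None
```

With a real download, you would call sniff_image_type(r.content) before Image.open(...). If it returns None, look at r.status_code and r.headers.get('Content-Type') to see what came back.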

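JSON response content

Requests has a built-in JSON decoder, available as r.json(), for handling JSON data. If the body cannot be decoded as JSON, r.json() raises an exception:

r = requests.get('https://api.github.com/events')
print(r.json())

# Output
[{u'repository': {u'open_issues': 0, u'url': 'https://github.com/...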

Raw response content

Access r.raw to get the raw socket response from the server. Note that this requires stream=True on the request:

r = requests.get('https://api.github.com/events', stream=True)

print(r.raw)

print(r.raw.read(10))

# Output

<requests.packages.urllib3.response.HTTPResponse object at 0x101194810>

'\x1f\x8b\x08\x00\x00\x00\x00\x00\x00\x03'

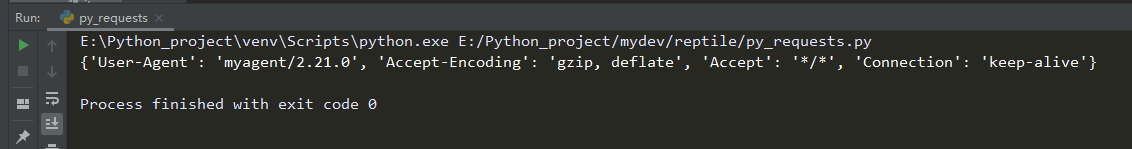
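The ten bytes printed above begin with 0x1f 0x8b, the gzip magic number. r.raw hands back the body as it came off the wire, before Requests decodes the Content-Encoding; r.content and r.text are already decoded. The standard-library sketch below reproduces this locally. looks_gzipped is a hypothetical helper.

```python
import gzip


def looks_gzipped(data: bytes) -> bool:
    """Hypothetical helper: True if data starts with the gzip magic number 0x1f 0x8b."""
    return data[:2] == b"\x1f\x8b"


body = b'[{"id": "1"}]'            # stand-in for a JSON response body
on_the_wire = gzip.compress(body)  # what a server using Content-Encoding: gzip would send

print(on_the_wire[:10])            # starts with b'\x1f\x8b\x08', like r.raw.read(10) above
print(looks_gzipped(on_the_wire), looks_gzipped(body))  # True False
print(gzip.decompress(on_the_wire) == body)             # True
```

With a real streamed response, r.raw.read(decode_content=True) asks urllib3 to undo the content encoding for you.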

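Setting request headers

Adding HTTP headers to a request lets a crawler pass itself off as a browser. That makes it less likely to be identified as a crawler and blocked.

Setting the User-Agent:

url = 'http://www.baidu.com'
headers = {'User-Agent': 'myagent/2.21.0'}
r = requests.get(url, headers=headers)
print(r.request.headers)

Result: the printed headers include 'User-Agent': 'myagent/2.21.0' instead of the default python-requests/<version> value.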
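You can compare the default and custom headers without contacting baidu.com by letting a Session prepare the request. Session.prepare_request merges the session's default headers with the ones you pass, which is what requests.get does internally before sending. A minimal sketch:

```python
import requests

session = requests.Session()
url = "http://www.baidu.com"

# prepare_request merges session defaults (User-Agent, Accept, ...) with per-request headers.
default_req = session.prepare_request(requests.Request("GET", url))
custom_req = session.prepare_request(
    requests.Request("GET", url, headers={"User-Agent": "myagent/2.21.0"})
)

print(default_req.headers["User-Agent"])  # python-requests/<version>
print(custom_req.headers["User-Agent"])   # myagent/2.21.0
print(dict(custom_req.headers))           # the other default headers are still present
```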

POST requests

httpbin.org echoes a POST request back as JSON, with these main fields:

{
  "args": {},
  "data": "",
  "files": {},
  "form": {},
  "headers": {},
  "json": null,
  "origin": "221.232.172.222, 221.232.172.222",
  "url": "https://httpbin.org/post"
}

Setting the data parameter

Pass a dictionary to the data parameter. It is automatically form-encoded when the request is sent:

payload = {'key1': 'value1', 'key2': 'value2'}
r = requests.post("http://httpbin.org/post", data=payload)
print(r.text)

# Output
{
  ...
  "form": {
    "key1": "value1",
    "key2": "value2"
  },
  ...
}

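The data parameter also accepts a sequence of (key, value) tuples, which is useful when a form has several values for the same key. httpbin then reports the repeated key as a list:

payload = (('key1', 'value1'), ('key1', 'value2'))
r = requests.post("http://httpbin.org/post", data=payload)
print(r.text)

# Output
{
  ...
  "form": {
    "key1": [
      "value1",
      "value2"
    ]
  },
  ...
}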

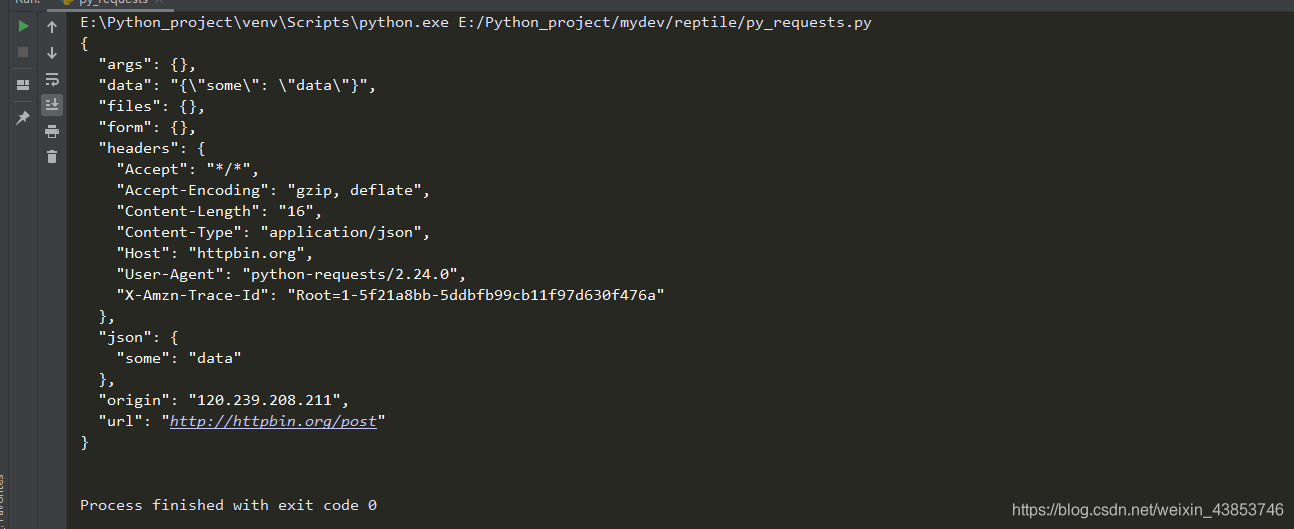
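The form encoding itself can be inspected offline by preparing the request instead of sending it. The sketch below prepares the two payloads used above and prints the encoded bodies:

```python
import requests

url = "http://httpbin.org/post"

# Prepare (do not send) the two form posts from above.
from_dict = requests.Request("POST", url, data={"key1": "value1", "key2": "value2"}).prepare()
from_pairs = requests.Request("POST", url, data=(("key1", "value1"), ("key1", "value2"))).prepare()

print(from_dict.headers["Content-Type"])  # application/x-www-form-urlencoded
print(from_dict.body)                     # key1=value1&key2=value2
print(from_pairs.body)                    # key1=value1&key1=value2
```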

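Setting the json parameter

Data passed through the json parameter is serialized to JSON automatically, and the Content-Type header is set to application/json:

payload = {'some': 'data'}
r = requests.post("http://httpbin.org/post", json=payload)
print(r.text)

Result: httpbin echoes the payload under the "json" key rather than under "form".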
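The difference between json= and serializing the payload yourself shows up in the prepared request: only json= sets the Content-Type header. A sketch using only Requests and the standard json module:

```python
import json

import requests

url = "http://httpbin.org/post"
payload = {"some": "data"}

# json= serializes the payload and sets Content-Type: application/json.
as_json = requests.Request("POST", url, json=payload).prepare()
# A pre-serialized string passed as data= is sent as-is, with no Content-Type header.
as_string = requests.Request("POST", url, data=json.dumps(payload)).prepare()

print(as_json.headers["Content-Type"], as_json.body)          # application/json b'{"some": "data"}'
print(as_string.headers.get("Content-Type"), as_string.body)  # None {"some": "data"}
```

If you do pass a JSON string through data=, you would need to set the Content-Type header yourself.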

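Uploading files

Pass a files parameter. A value can be a (filename, content) tuple, which sends a string as the content of an uploaded file:

files = {'file': ('files.txt', 'File upload\n')}
r = requests.post('http://httpbin.org/post', files=files)
print(r.text)

# Output
{
  ...
  "files": {
    "file": "File upload\n"
  },
  ...
}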

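Alternatively, pass an open file object directly, without a filename tuple. Open the file in binary mode:

with open('file.txt', 'rb') as f:  # the file is closed once the upload is done
    files = {'file': f}
    r = requests.post('http://httpbin.org/post', files=files)
print(r.text)

# Output
{
  ...
  "files": {
    "file": "File upload test"
  },
  ...
}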
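Uploads are sent as multipart/form-data. Preparing the request offline shows the generated body. The sketch also uses the optional third tuple element, which sets the part's content type; text/plain here is just an example value.

```python
import requests

# (filename, content, content_type): the third element sets the part's Content-Type.
files = {"file": ("files.txt", "File upload\n", "text/plain")}
req = requests.Request("POST", "http://httpbin.org/post", files=files).prepare()

# The header carries a randomly generated boundary that separates the parts.
print(req.headers["Content-Type"])

# req.body is bytes; decode it to read the part headers and the file content.
print(req.body.decode("utf-8"))
```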

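Response status codes & response headers

Read the status code and headers from the server's response:

r = requests.get('http://httpbin.org/get')
print(r.status_code)

# Output
200

Compare against the built-in status code lookup object, requests.codes:

print(r.status_code == requests.codes.ok)

# Output
True

The names registered in requests.codes, as defined in requests/status_codes.py: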

100: ('continue',),

101: ('switching_protocols',),

102: ('processing',),

103: ('checkpoint',),

122: ('uri_too_long', 'request_uri_too_long'),

200: ('ok', 'okay', 'all_ok', 'all_okay', 'all_good', '\o/', '✓'),

201: ('created',),

202: ('accepted',),

203: ('non_authoritative_info', 'non_authoritative_information'),

204: ('no_content',),

205: ('reset_content', 'reset'),

206: ('partial_content', 'partial'),

207: ('multi_status', 'multiple_status', 'multi_stati', 'multiple_stati'),

208: ('already_reported',),

226: ('im_used',),

# Redirection.

300: ('multiple_choices',),

301: ('moved_permanently', 'moved', '\o-'),

302: ('found',),

303: ('see_other', 'other'),

304: ('not_modified',),

305: ('use_proxy',),

306: ('switch_proxy',),

307: ('temporary_redirect', 'temporary_moved', 'temporary'),

308: ('permanent_redirect', 'resume_incomplete', 'resume',),  # These 2 to be removed in 3.0

# Client Error.

400: ('bad_request', 'bad'),

401: ('unauthorized',),

402: ('payment_required', 'payment'),

403: ('forbidden',),

404: ('not_found', '-o-'),

405: ('method_not_allowed', 'not_allowed'),

406: ('not_acceptable',),

407: ('proxy_authentication_required', 'proxy_auth', 'proxy_authentication'),

408: ('request_timeout', 'timeout'),

409: ('conflict',),

410: ('gone',),

411: ('length_required',),

412: ('precondition_failed', 'precondition'),

413: ('request_entity_too_large',),

414: ('request_uri_too_large',),

415: ('unsupported_media_type', 'unsupported_media', 'media_type'),

416: ('requested_range_not_satisfiable', 'requested_range', 'range_not_satisfiable'),

417: ('expectation_failed',),

418: ('im_a_teapot', 'teapot', 'i_am_a_teapot'),

421: ('misdirected_request',),

422: ('unprocessable_entity', 'unprocessable'),

423: ('locked',),

424: ('failed_dependency', 'dependency'),

425: ('unordered_collection', 'unordered'),

426: ('upgrade_required', 'upgrade'),

428: ('precondition_required', 'precondition'),

429: ('too_many_requests', 'too_many'),

431: ('header_fields_too_large', 'fields_too_large'),

444: ('no_response', 'none'),

449: ('retry_with', 'retry'),

450: ('blocked_by_windows_parental_controls', 'parental_controls'),

451: ('unavailable_for_legal_reasons', 'legal_reasons'),

499: ('client_closed_request',),

# Server Error.

500: ('internal_server_error', 'server_error', '/o\\', '✗'),

501: ('not_implemented',),

502: ('bad_gateway',),

503: ('service_unavailable', 'unavailable'),

504: ('gateway_timeout',),

505: ('http_version_not_supported', 'http_version'),

506: ('variant_also_negotiates',),

507: ('insufficient_storage',),

509: ('bandwidth_limit_exceeded', 'bandwidth'),

510: ('not_extended',),

511: ('network_authentication_required', 'network_auth', 'network_authentication'),
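requests.codes lookups return plain integers, so they can be compared with r.status_code directly. A related tool is Response.raise_for_status(). It raises requests.exceptions.HTTPError for 4xx and 5xx responses and does nothing otherwise.

To show this without a network call, the hypothetical helper status_summary below builds a Response object by hand. Real code never needs to do that. The reason phrases come from the standard library's http.HTTPStatus.

```python
from http import HTTPStatus

import requests

# requests.codes lookups return plain ints (attribute, item and upper-case forms all work).
print(requests.codes.ok, requests.codes["not_found"], requests.codes.TEAPOT)  # 200 404 418


def status_summary(status_code: int) -> str:
    """Hypothetical helper: 'ok', or the message raise_for_status() would raise."""
    resp = requests.Response()  # built by hand only for this offline demo
    resp.status_code = status_code
    resp.reason = HTTPStatus(status_code).phrase
    resp.url = f"http://httpbin.org/status/{status_code}"
    try:
        resp.raise_for_status()  # raises HTTPError for 4xx and 5xx codes only
    except requests.exceptions.HTTPError as exc:
        return str(exc)
    return "ok"


print(status_summary(requests.codes.ok))         # ok
print(status_summary(requests.codes.not_found))  # 404 Client Error: Not Found for url: http://httpbin.org/status/404
```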

View the response headers:

r = requests.get('http://httpbin.org/get')
print(r.headers)

# Output
{'Access-Control-Allow-Credentials': 'true', 'Access-Control-Allow-Origin': '*', 'Content-Encoding': 'gzip', 'Content-Type': 'application/json', 'Date': 'Wed, 18 Sep 2019 12:22:06 GMT', 'Referrer-Policy': 'no-referrer-when-downgrade', 'Server': 'nginx', 'X-Content-Type-Options': 'nosniff', 'X-Frame-Options': 'DENY', 'X-XSS-Protection': '1; mode=block', 'Content-Length': '183', 'Connection': 'keep-alive'}

Get the value of a single header field. Header names are case-insensitive, so r.headers['content-encoding'] works too:

print(r.headers['Content-Encoding'])

# Output
gzip
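r.headers is a requests.structures.CaseInsensitiveDict, which is why header names can be looked up in any case. A small standalone sketch with hand-written header values:

```python
from requests.structures import CaseInsensitiveDict

# Same type as r.headers; lookups ignore case, iteration keeps the original casing.
headers = CaseInsensitiveDict({"Content-Encoding": "gzip", "Content-Type": "application/json"})

print(headers["content-encoding"])         # gzip
print(headers.get("CONTENT-TYPE"))         # application/json
print(headers.get("X-Missing", "absent"))  # absent
print(list(headers))                       # ['Content-Encoding', 'Content-Type']
```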

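Redirects and history

By default, Requests follows all redirects automatically, except for HEAD requests; pass allow_redirects=False to turn this off. Use the history attribute of the response object to trace the redirects.

Response.history is a list of the Response objects that were created to complete the request, ordered from the oldest to the most recent.

r = requests.get('http://github.com')
print(r.url)
print(r.status_code)
print(r.history)

# Output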

https://github.com/

200

[<Response [301]>]

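Timeouts

Use the timeout parameter to tell requests to stop waiting for a response after the given number of seconds. Without it there is no timeout, and a request can hang indefinitely.

Note that timeout is not a limit on the total time taken to download the response. It applies both to establishing the connection and to reading. An exception is raised if the server does not respond, or sends no bytes, for timeout seconds. Passing a tuple such as timeout=(3.05, 27) sets the connect and read timeouts separately.

requests.get('http://github.com', timeout=0.001)

# Output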

requests.exceptions.ConnectTimeout: HTTPConnectionPool(host='github.com', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x110adf400>, 'Connection to github.com timed out. (connect timeout=0.001)'))

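Cookies

Use the cookies attribute of a response to read the cookies it set:

url = 'http://www.baidu.com/some/cookie/setting/url'  # placeholder for a page that sets a cookie
r = requests.get(url)
r.cookies['cookie_name']

# Output
'cookie_value'

To send your own cookies to the server, pass them through the cookies parameter:

cookies = dict(cookies_are='python')
r = requests.get('http://httpbin.org/cookies', cookies=cookies)
print(r.text)

# Output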

{

"cookies": {

"cookies_are": "python"

}

}

Cookies are returned as a RequestsCookieJar. It acts like a dict but also records each cookie's domain and path, which makes it suitable for use across domains and paths. You can also pass a jar into Requests:

jar = requests.cookies.RequestsCookieJar()
# Set a cookie for the path /cookies
jar.set('tasty_cookie', 'yum', domain='httpbin.org', path='/cookies')
# Set a cookie for the path /elsewhere
jar.set('gross_cookie', 'blech', domain='httpbin.org', path='/elsewhere')
# Request the URL whose path is /cookies
url = 'http://httpbin.org/cookies'
r = requests.get(url, cookies=jar)
print(r.text)

# Output

{

"cookies": {

"tasty_cookie": "yum"

}

}
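Only tasty_cookie comes back because the jar applies the usual cookie rules: a cookie is sent only when the request's domain and path match the ones it was set for. Preparing requests to both paths offline makes this visible:

```python
import requests

jar = requests.cookies.RequestsCookieJar()
jar.set("tasty_cookie", "yum", domain="httpbin.org", path="/cookies")
jar.set("gross_cookie", "blech", domain="httpbin.org", path="/elsewhere")

# Prepare (without sending) requests to two paths and see which cookies get attached.
to_cookies = requests.Request("GET", "http://httpbin.org/cookies", cookies=jar).prepare()
to_elsewhere = requests.Request("GET", "http://httpbin.org/elsewhere", cookies=jar).prepare()

print(to_cookies.headers.get("Cookie"))    # tasty_cookie=yum
print(to_elsewhere.headers.get("Cookie"))  # gross_cookie=blech

# Dict-style access, optionally narrowed by domain and path.
print(jar.get("tasty_cookie", domain="httpbin.org", path="/cookies"))  # yum
```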