Competition background

Competition link

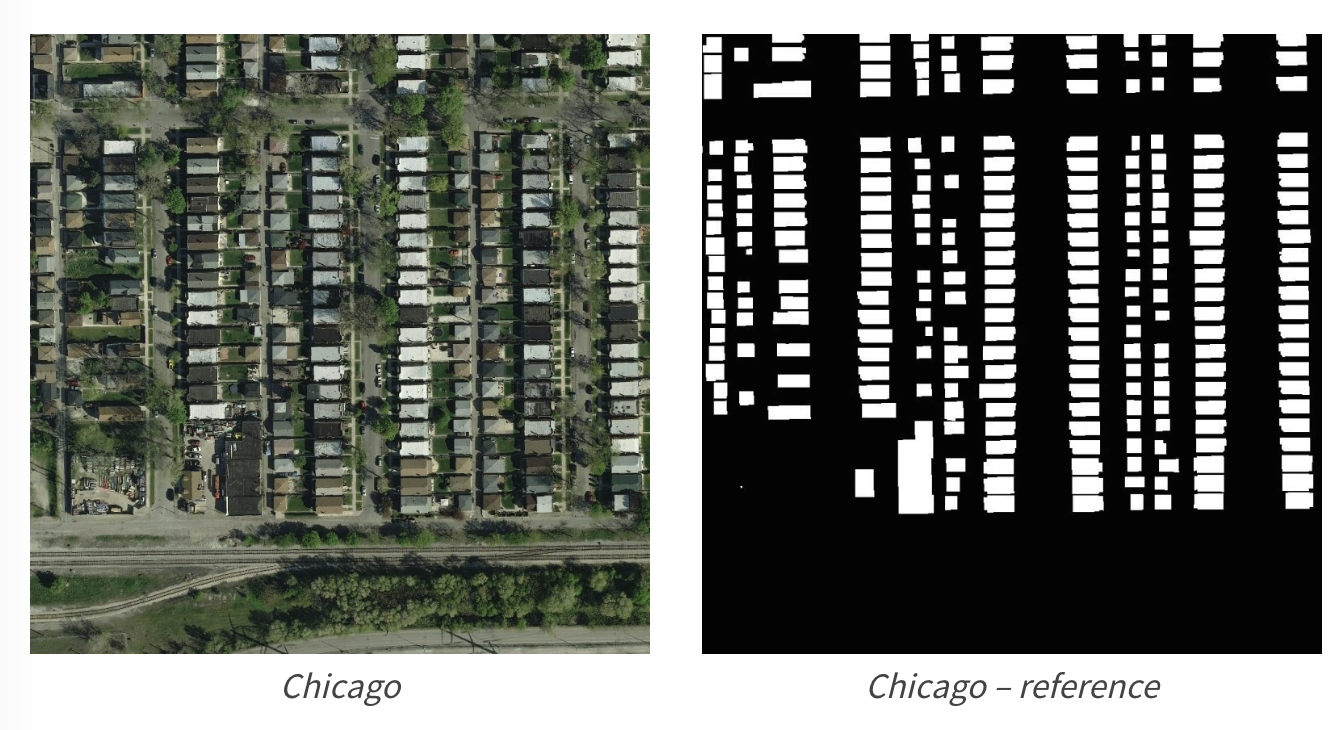

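Remote sensing has become the most effective way to obtain land-cover information, and it has been applied successfully to land-cover detection, vegetation-area detection, and building detection. This competition uses aerial imagery: participants must identify buildings on the ground by classifying every pixel of an aerial image as either building or non-building.

In the figure, the left side is the original aerial image and the right side is the corresponding building annotation.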

Importing libraries

    import numpy as np
    import pandas as pd
    import pathlib, sys, os, random, time
    import cv2, gc
    from tqdm import tqdm_notebook
    import matplotlib.pyplot as plt
    %matplotlib inline

    import warnings
    warnings.filterwarnings('ignore')
    from tqdm.notebook import tqdm

    import albumentations as A

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.utils.data as D

    import torchvision
    from torchvision import transforms as T

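Data analysis

The competition data consists of aerial images, and the task is to identify the exact pixel locations of buildings in each image.

- train_mask.csv: stores the RLE-encoded annotation of each image;

- train and test folders: store the training-set and test-set images;

The code for encoding and decoding the RLE format is as follows: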

    # Encode a binary mask as an RLE string
    def rle_encode(im):
        '''
        im: numpy array, 1 - mask, 0 - background
        Returns the run lengths as a formatted string
        '''
        # Flatten column by column and pad with zeros so every run has a start and an end
        pixels = im.flatten(order='F')
        pixels = np.concatenate([[0], pixels, [0]])
        # 1-based positions where the value changes: run starts and run ends alternate
        runs = np.where(pixels[1:] != pixels[:-1])[0] + 1
        # Turn each run end into a run length
        runs[1::2] -= runs[::2]
        return ' '.join(str(x) for x in runs)

    # Decode an RLE string back into a binary mask
    def rle_decode(mask_rle, shape=(512, 512)):
        '''
        mask_rle: run-length string formatted as (start length) pairs
        shape: (height, width) of the array to return
        Returns numpy array, 1 - mask, 0 - background
        '''
        s = mask_rle.split()
        starts, lengths = [np.asarray(x, dtype=int) for x in (s[0:][::2], s[1:][::2])]
        starts -= 1  # RLE starts are 1-based
        ends = starts + lengths
        img = np.zeros(shape[0]*shape[1], dtype=np.uint8)
        for lo, hi in zip(starts, ends):
            img[lo:hi] = 1
        return img.reshape(shape, order='F')
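To make the format concrete, here is a small round-trip sketch on a 3x3 toy mask. It restates the two functions in compact form so that it runs on its own; `toy_mask` is an illustrative value, not competition data.

```python
import numpy as np

def rle_encode(im):
    # Column-major flatten, zero-padded so each run has a start and an end
    pixels = np.concatenate([[0], im.flatten(order='F'), [0]])
    runs = np.where(pixels[1:] != pixels[:-1])[0] + 1
    runs[1::2] -= runs[::2]  # convert run ends into run lengths
    return ' '.join(str(x) for x in runs)

def rle_decode(mask_rle, shape):
    s = mask_rle.split()
    starts = np.asarray(s[0::2], dtype=int) - 1  # RLE starts are 1-based
    lengths = np.asarray(s[1::2], dtype=int)
    img = np.zeros(shape[0] * shape[1], dtype=np.uint8)
    for lo, n in zip(starts, lengths):
        img[lo:lo + n] = 1
    return img.reshape(shape, order='F')

# Toy mask: read column by column it is 0,0,1 | 1,1,1 | 1,0,0
toy_mask = np.array([[0, 1, 1],
                     [0, 1, 0],
                     [1, 1, 0]], dtype=np.uint8)

encoded = rle_encode(toy_mask)                 # one run: starts at pixel 3, length 5
decoded = rle_decode(encoded, toy_mask.shape)
print(encoded)                                 # 3 5
print(np.array_equal(decoded, toy_mask))       # True
```

Because the mask is flattened in column-major order, the run positions count down each column before moving to the next one. An image without any building encodes to an empty string, which is why empty annotations can be decoded to an all-zero mask.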

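Setting common variables

- DEVICE: selects whether data and the model are placed on the GPU or the CPU later on

- IMAGE_SIZE: the side length that input images are resized to. The number of weights in a fully convolutional network does not depend on the input size, but larger images produce larger feature maps, so memory use and compute cost grow with the image size.

- BATCH_SIZE: the number of samples in each batch

- EPOCHES: the number of training epochs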

    DEVICE = 'cuda' if torch.cuda.is_available() else 'cpu'
    EPOCHES = 20
    BATCH_SIZE = 32
    IMAGE_SIZE = 256
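As a rough feel for how IMAGE_SIZE and BATCH_SIZE affect cost, the sketch below computes the size of a single float32 input batch. The helper `input_batch_bytes` is hypothetical, and it counts only the input tensor; the intermediate feature maps of the network take far more memory, although they scale with the image area in the same way.

```python
def input_batch_bytes(batch_size, image_size, channels=3, bytes_per_value=4):
    """Bytes needed for one float32 input batch of shape (B, C, H, W)."""
    return batch_size * channels * image_size * image_size * bytes_per_value

bytes_256 = input_batch_bytes(32, 256)
bytes_512 = input_batch_bytes(32, 512)

print(bytes_256 / 2**20, "MiB at 256x256")   # 24.0 MiB
print(bytes_512 / 2**20, "MiB at 512x512")   # 96.0 MiB
```

Doubling the side length quadruples the number of pixels, so training on 512x512 inputs would need roughly four times the activation memory of 256x256 inputs at the same batch size.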

Setting up data augmentation

Four operations are used here: resizing, horizontal flip, vertical flip, and random 90-degree rotation.

    trfm = A.Compose([
        A.Resize(IMAGE_SIZE, IMAGE_SIZE),
        A.HorizontalFlip(p=0.5),
        A.VerticalFlip(p=0.5),
        A.RandomRotate90(),
    ])
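For segmentation, every geometric augmentation has to be applied to the image and its mask together, otherwise the labels no longer line up with the pixels. The NumPy-only sketch below illustrates that idea; `paired_augment` is a hypothetical helper, and its probabilities are illustrative rather than a reproduction of what albumentations does internally.

```python
import numpy as np

def paired_augment(image, mask, rng):
    """Apply the same random flips and 90-degree rotation to image and mask."""
    if rng.random() < 0.5:                       # horizontal flip
        image, mask = image[:, ::-1], mask[:, ::-1]
    if rng.random() < 0.5:                       # vertical flip
        image, mask = image[::-1], mask[::-1]
    k = int(rng.integers(0, 4))                  # number of 90-degree turns
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)

rng = np.random.default_rng(0)
image = np.zeros((4, 4, 3), dtype=np.uint8)
mask = np.zeros((4, 4), dtype=np.uint8)
image[0, 1] = 255   # a single bright "building" pixel
mask[0, 1] = 1      # and its label

aug_image, aug_mask = paired_augment(image, mask, rng)
# Wherever the bright pixel ends up, its label moves with it
print(np.array_equal(aug_image[..., 0] > 0, aug_mask == 1))   # True
```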

Custom dataset

    class TianChiDataset(D.Dataset):
        def __init__(self, paths, rles, transform, test_mode=False):
            self.paths = paths
            self.rles = rles
            self.transform = transform
            self.test_mode = test_mode

            self.len = len(paths)
            # Convert an augmented image to a normalized tensor
            self.as_tensor = T.Compose([
                T.ToPILImage(),
                T.Resize(IMAGE_SIZE),
                T.ToTensor(),
                T.Normalize([0.625, 0.448, 0.688],
                            [0.131, 0.177, 0.101]),
            ])

        # get data operation
        def __getitem__(self, index):
            img = cv2.imread(self.paths[index])
            if not self.test_mode:
                mask = rle_decode(self.rles[index])
                # The same augmentation is applied to the image and its mask
                augments = self.transform(image=img, mask=mask)
                # [None] adds a leading channel axis to the mask: (H, W) -> (1, H, W)
                return self.as_tensor(augments['image']), augments['mask'][None]
            else:
                return self.as_tensor(img), ''

        def __len__(self):
            """
            Total number of samples in the dataset
            """
            return self.len
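The tensor conversion in the dataset can be sketched in plain NumPy to show what arrives at the network. This sketch assumes the usual behaviour of `T.ToTensor` (scale uint8 values to [0, 1] and move channels first) and `T.Normalize` (subtract the per-channel mean, divide by the per-channel standard deviation); it skips the PIL conversion and the resize step, and the helper names are hypothetical.

```python
import numpy as np

MEAN = np.array([0.625, 0.448, 0.688], dtype=np.float32)
STD = np.array([0.131, 0.177, 0.101], dtype=np.float32)

def to_normalized_chw(img_hwc_uint8):
    """uint8 (H, W, C) image -> normalized float32 (C, H, W) array."""
    x = img_hwc_uint8.astype(np.float32) / 255.0   # scale to [0, 1]
    x = (x - MEAN) / STD                            # per-channel normalization
    return np.transpose(x, (2, 0, 1))               # channels first

def add_channel_axis(mask_hw):
    """(H, W) mask -> (1, H, W), matching the single-channel model output."""
    return mask_hw[None]

img = np.full((4, 4, 3), 255, dtype=np.uint8)       # an all-white toy image
tensor_like = to_normalized_chw(img)
mask = np.zeros((4, 4), dtype=np.uint8)

print(tensor_like.shape)             # (3, 4, 4)
print(add_channel_axis(mask).shape)  # (1, 4, 4)
```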

Loading the training data

    train_mask = pd.read_csv('data/train_mask.csv', sep='\t', names=['name', 'mask'])
    train_mask['name'] = train_mask['name'].apply(lambda x: 'data/train/' + x)

    dataset = TianChiDataset(
        train_mask['name'].values,
        train_mask['mask'].fillna('').values,  # images without buildings have an empty RLE
        trfm, False
    )

Splitting the training data into a training set and a validation set

    valid_idx, train_idx = [], []
    for i in range(len(dataset)):
        if i % 7 == 0:
            valid_idx.append(i)
        # As written, only every seventh sample is used for training.
        # Switch to the commented-out `else` to train on all remaining samples.
        # else:
        elif i % 7 == 1:
            train_idx.append(i)

    train_ds = D.Subset(dataset, train_idx)
    valid_ds = D.Subset(dataset, valid_idx)

    # define training and validation data loaders
    loader = D.DataLoader(
        train_ds, batch_size=BATCH_SIZE, shuffle=True, num_workers=0)

    vloader = D.DataLoader(
        valid_ds, batch_size=BATCH_SIZE, shuffle=False, num_workers=0)
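The effect of the modulo rule is easy to check on a small range of indices. In the sketch below, `split_indices` and its `use_all_remaining` flag are hypothetical; the flag mimics replacing the `elif` branch with the commented-out `else`.

```python
def split_indices(n, use_all_remaining=False):
    """Reproduce the i % 7 split for n samples."""
    valid_idx, train_idx = [], []
    for i in range(n):
        if i % 7 == 0:
            valid_idx.append(i)
        elif use_all_remaining or i % 7 == 1:
            train_idx.append(i)
    return train_idx, valid_idx

train_small, valid = split_indices(21)
train_full, _ = split_indices(21, use_all_remaining=True)

print(valid)             # [0, 7, 14]
print(train_small)       # [1, 8, 15]
print(len(train_full))   # 18
```

With the `elif` rule, about one seventh of the data is used for validation and another seventh for training, which keeps each epoch short. Training on everything outside the validation set uses six sevenths of the data.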

Defining the model

The model is an FCN that uses ResNet-50 as the backbone for feature extraction.

    def get_model():
        # True loads pretrained weights (newer torchvision releases prefer the `weights=` argument)
        model = torchvision.models.segmentation.fcn_resnet50(True)

        # pth = torch.load("../input/pretrain-coco-weights-pytorch/fcn_resnet50_coco-1167a1af.pth")
        # for key in ["aux_classifier.0.weight", "aux_classifier.1.weight", "aux_classifier.1.bias", "aux_classifier.1.running_mean", "aux_classifier.1.running_var", "aux_classifier.1.num_batches_tracked", "aux_classifier.4.weight", "aux_classifier.4.bias"]:
        #     del pth[key]

        # Replace the final 1x1 convolution so the head outputs a single building/background channel
        model.classifier[4] = nn.Conv2d(512, 1, kernel_size=(1, 1), stride=(1, 1))
        return model
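Replacing the last layer swaps only a very small part of the network. The arithmetic sketch below counts the parameters of a 2D convolution; `conv2d_param_count` is a hypothetical helper, and the 21-class figure assumes that the pretrained head predicts 21 classes.

```python
def conv2d_param_count(in_channels, out_channels, kernel_size, bias=True):
    """Weights plus optional biases of a square-kernel 2D convolution."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

binary_head = conv2d_param_count(512, 1, 1)    # the new 1-channel head
multi_head = conv2d_param_count(512, 21, 1)    # assumed 21-class pretrained head

print(binary_head)   # 513
print(multi_head)    # 10773
```

Only these 513 parameters start from random initialization; the rest of the network keeps its pretrained weights and is fine-tuned.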

Defining the validation function

    @torch.no_grad()
    def validation(model, loader, loss_fn):
        losses = []
        model.eval()
        for image, target in loader:
            image, target = image.to(DEVICE), target.float().to(DEVICE)
            output = model(image)['out']
            loss = loss_fn(output, target)
            losses.append(loss.item())

        # Mean loss over all validation batches
        return np.array(losses).mean()

Defining the loss function

    class SoftDiceLoss(nn.Module):
        def __init__(self, smooth=1., dims=(-2,-1)):
            super(SoftDiceLoss, self).__init__()
            self.smooth = smooth
            self.dims = dims

        def forward(self, x, y):
            # x: predicted probabilities, y: ground-truth mask
            tp = (x * y).sum(self.dims)
            fp = (x * (1 - y)).sum(self.dims)
            fn = ((1 - x) * y).sum(self.dims)

            dc = (2 * tp + self.smooth) / (2 * tp + fp + fn + self.smooth)
            dc = dc.mean()
            return 1 - dc

    bce_fn = nn.BCEWithLogitsLoss()
    dice_fn = SoftDiceLoss()

    def loss_fn(y_pred, y_true):
        # y_pred holds raw logits; BCE applies the sigmoid internally, Dice needs it explicitly
        bce = bce_fn(y_pred, y_true)
        dice = dice_fn(y_pred.sigmoid(), y_true)
        return 0.8*bce+ 0.2*dice
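A few hand-checkable cases show how the soft Dice term behaves. The sketch below restates the same formula in NumPy so that it runs without PyTorch; the 2x2 target `y` is a toy value.

```python
import numpy as np

def soft_dice_loss(x, y, smooth=1.0):
    """Same formula as SoftDiceLoss, summed over the last two axes."""
    tp = (x * y).sum(axis=(-2, -1))
    fp = (x * (1 - y)).sum(axis=(-2, -1))
    fn = ((1 - x) * y).sum(axis=(-2, -1))
    dc = (2 * tp + smooth) / (2 * tp + fp + fn + smooth)
    return 1 - dc.mean()

# Toy target of shape (batch, channel, H, W): top row is building
y = np.array([[[[1., 1.],
                [0., 0.]]]])

perfect = soft_dice_loss(y, y)                      # tp=2, fp=0, fn=0 -> 1 - 5/5
inverted = soft_dice_loss(1 - y, y)                 # tp=0, fp=2, fn=2 -> 1 - 1/5
uniform = soft_dice_loss(np.full_like(y, 0.5), y)   # tp=1, fp=1, fn=1 -> 1 - 3/5

print(perfect, inverted, uniform)
```

The three losses come out at about 0, 0.8 and 0.4. The smoothing term keeps the ratio defined when both the prediction and the target are empty, and the loss depends on overlap rather than on per-pixel accuracy, which is one reason it is often combined with BCE when the foreground is sparse.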

Loading the model, defining the optimizer, and starting training

    model = get_model()
    model.to(DEVICE);

    optimizer = torch.optim.AdamW(model.parameters(),
                                  lr=1e-4, weight_decay=1e-3)

    header = r'''
            Train | Valid
    Epoch |  Loss |  Loss | Time, m
    '''
    #          Epoch         metrics            time
    raw_line = '{:6d}' + '\u2502{:7.3f}'*2 + '\u2502{:6.2f}'
    print(header)

    best_loss = 10

    for epoch in range(1, EPOCHES+1):
        losses = []
        start_time = time.time()
        model.train()
        for image, target in tqdm_notebook(loader):
            image, target = image.to(DEVICE), target.float().to(DEVICE)
            optimizer.zero_grad()
            output = model(image)['out']
            loss = loss_fn(output, target)
            loss.backward()
            optimizer.step()
            losses.append(loss.item())
            # print(loss.item())

        # Evaluate once per epoch and report mean train loss, validation loss, and elapsed minutes
        vloss = validation(model, vloader, loss_fn)
        print(raw_line.format(epoch, np.array(losses).mean(), vloss,
                              (time.time()-start_time)/60))

        # Keep only the weights with the lowest validation loss so far
        if vloss < best_loss:
            best_loss = vloss
            torch.save(model.state_dict(), 'model_best.pth')
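The checkpoint rule in the loop can be traced with a short sketch. Here `epochs_that_save` is a hypothetical helper and the validation losses are made-up numbers used only to illustrate the rule.

```python
def epochs_that_save(valid_losses, initial_best=10.0):
    """Return the epochs at which the 'best so far' rule would write a checkpoint."""
    best = initial_best
    saved = []
    for epoch, vloss in enumerate(valid_losses, start=1):
        if vloss < best:
            best = vloss
            saved.append(epoch)
    return saved, best

saved_epochs, best_loss = epochs_that_save([0.9, 0.7, 0.8, 0.6])
print(saved_epochs, best_loss)   # [1, 2, 4] 0.6
```

Epoch 3 does not overwrite the file because its validation loss is worse than the best seen so far, so `model_best.pth` always holds the weights from the best epoch.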

Loading the best model and running forward inference on the test set

    trfm = T.Compose([
        T.ToPILImage(),
        T.Resize(IMAGE_SIZE),
        T.ToTensor(),
        T.Normalize([0.625, 0.448, 0.688],
                    [0.131, 0.177, 0.101]),
    ])

    subm = []

    model.load_state_dict(torch.load("./model_best.pth", map_location=DEVICE))
    model.eval()

    test_mask = pd.read_csv('data/test_a_samplesubmit.csv', sep='\t', names=['name', 'mask'])
    test_mask['name'] = test_mask['name'].apply(lambda x: 'data/test_a/' + x)

    for idx, name in enumerate(tqdm_notebook(test_mask['name'].iloc[:])):
        image = cv2.imread(name)
        image = trfm(image)
        with torch.no_grad():
            image = image.to(DEVICE)[None]          # add the batch dimension
            score = model(image)['out'][0][0]       # logits of the single output channel
            score_sigmoid = score.sigmoid().cpu().numpy()
            score_sigmoid = (score_sigmoid > 0.5).astype(np.uint8)
            # Scale the mask back to the original 512x512 resolution before encoding
            score_sigmoid = cv2.resize(score_sigmoid, (512, 512))

        # break
        subm.append([name.split('/')[-1], rle_encode(score_sigmoid)])
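The thresholding step can be checked on a handful of values. This NumPy sketch, with made-up logits, shows that cutting the sigmoid output at 0.5 selects exactly the pixels whose logit is positive.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Toy logits standing in for the model's single output channel
logits = np.array([[-2.0, -0.1],
                   [ 0.3,  4.0]])

mask_from_prob = (sigmoid(logits) > 0.5).astype(np.uint8)
mask_from_logit = (logits > 0).astype(np.uint8)

print(mask_from_prob)
print(np.array_equal(mask_from_prob, mask_from_logit))   # True
```

Raising the threshold above 0.5 would make the prediction more conservative (fewer building pixels), which is a knob that could be tuned on the validation set.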


Saving the predictions locally

    subm = pd.DataFrame(subm)
    subm.to_csv('./result.csv', index=None, header=None, sep='\t')

Submitting the results