Author: 张华 (Zhang Hua)  Published: 2022-10-12

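Copyright notice: this article may be freely reposted, but any repost must credit the original source and the author with a hyperlink and include this copyright notice.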

What's Raft

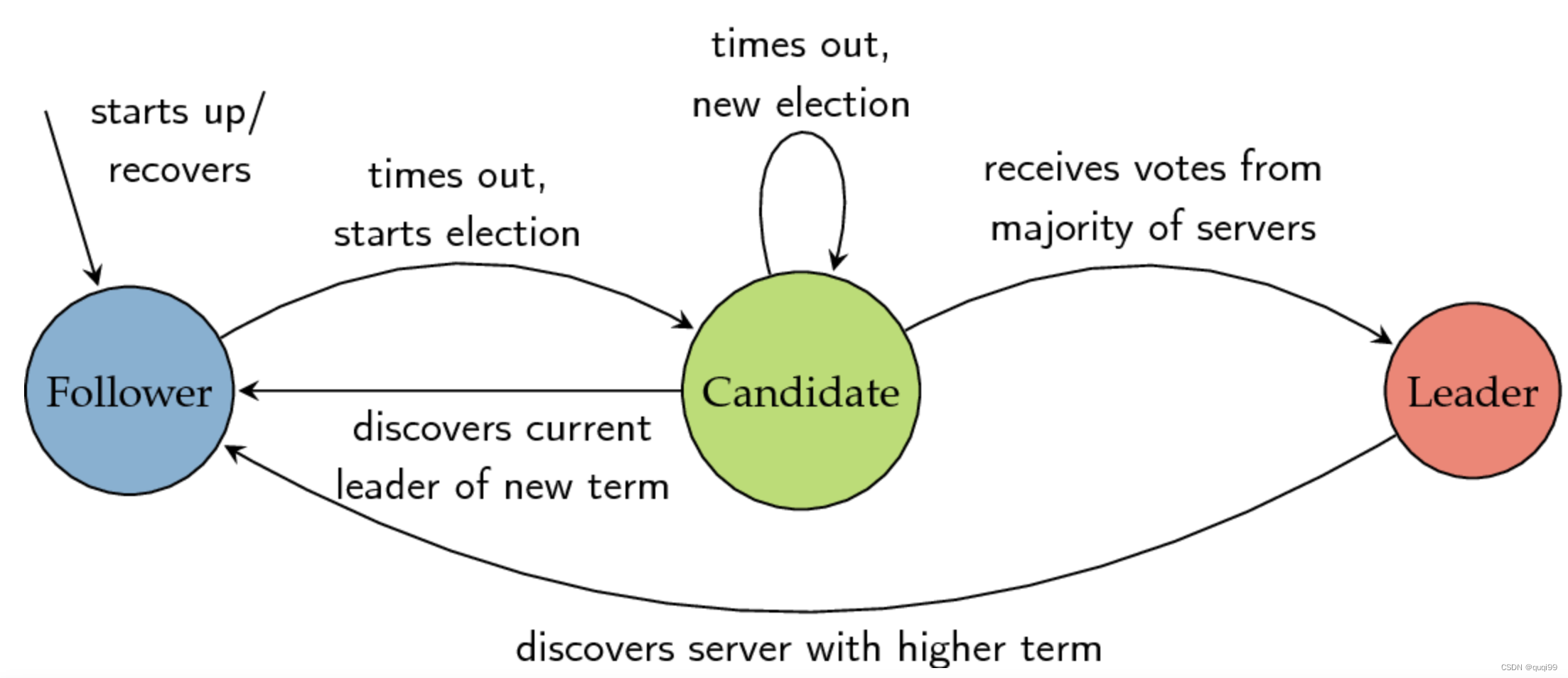

Raft (https://raft.github.io/) is a consensus algorithm. Each node is always in one of three states:

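- Follower - This is the initial state when a node starts.

- Candidate - If there is no leader (no heartbeat arrives before the election timeout), the node becomes a candidate and starts an election.

- Leader - If a candidate gets votes from a majority of the nodes, it becomes the leader and all other nodes become followers.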
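To make the three roles concrete, here is a minimal Python sketch (not taken from OVS or any Raft library) of the role transitions and the majority rule. It deliberately ignores terms, log matching and timers; `quorum()` and `next_role()` are hypothetical helper names. With 3 servers a quorum is 2 votes, which is why the failover log further down reports "elected leader by 2+ of 3 servers", and why a 3-node ovn-central cluster can lose one node and keep working.

```python
from enum import Enum


class Role(Enum):
    FOLLOWER = "follower"
    CANDIDATE = "candidate"
    LEADER = "leader"


def quorum(cluster_size: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return cluster_size // 2 + 1


def next_role(role: Role, heard_from_leader: bool, votes: int, cluster_size: int) -> Role:
    """One simplified transition step (terms and log checks are ignored)."""
    if role is Role.FOLLOWER:
        # No heartbeat before the election timeout: start an election.
        return Role.FOLLOWER if heard_from_leader else Role.CANDIDATE
    if role is Role.CANDIDATE:
        if votes >= quorum(cluster_size):
            return Role.LEADER
        # Another server won the election: step back down.
        return Role.FOLLOWER if heard_from_leader else Role.CANDIDATE
    return role


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(f"{n} servers: quorum={quorum(n)}, tolerates {n - quorum(n)} failure(s)")
```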

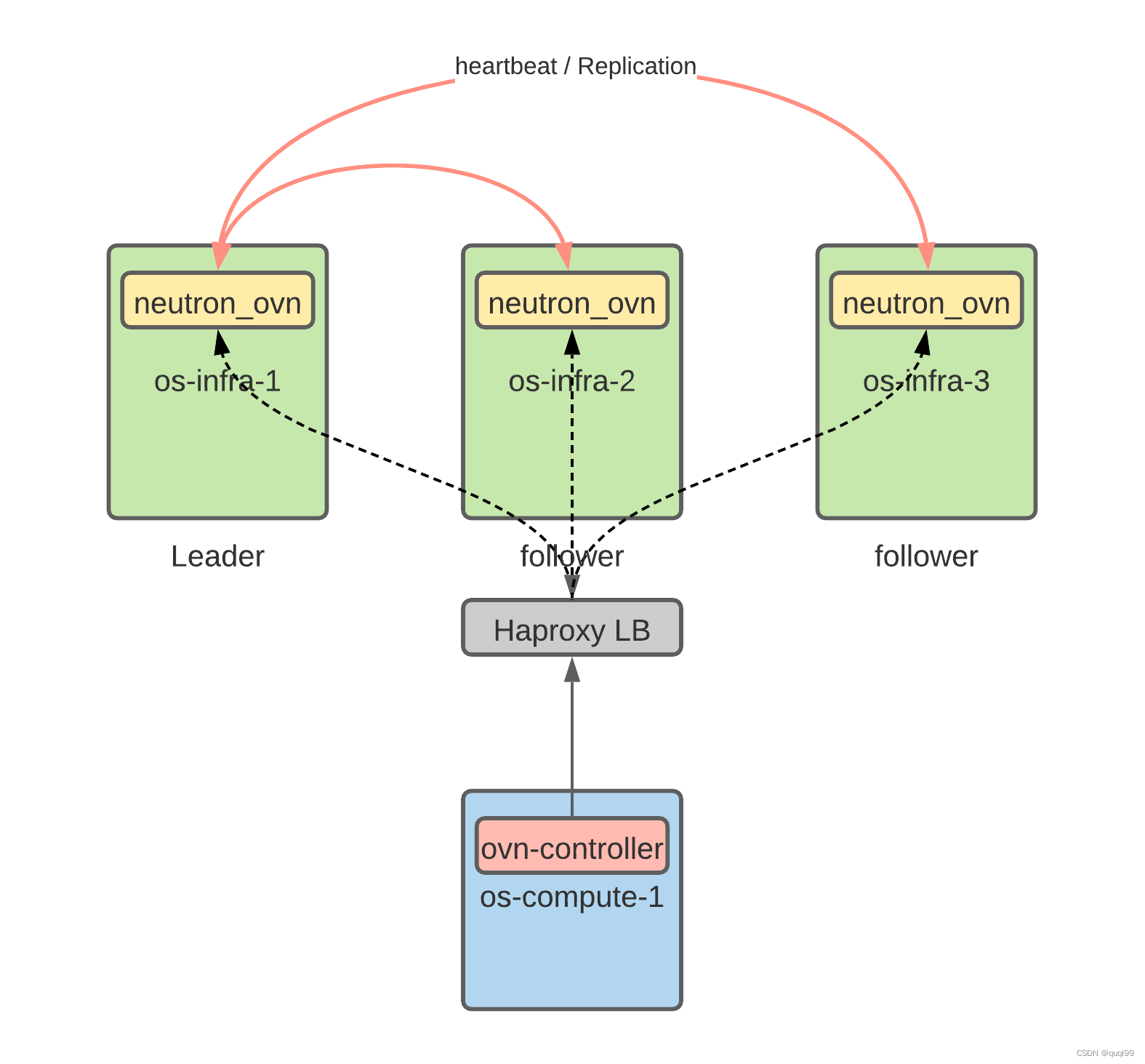

Using Raft for ovn-central HA plays the same role that haproxy or pacemaker would (see https://bugs.launchpad.net/neutron/+bug/1969354/comments/3 ).

Set up an ovn-central Raft HA env

Quickly build an ovn-central Raft HA environment from 3 LXD containers. Alternatively, see: https://bugzilla.redhat.com/show_bug.cgi?id=1929690#c9

cd ~ && lxc launch faster:ubuntu/focal v1

lxc launch faster:ubuntu/focal v2

lxc launch faster:ubuntu/focal v3

#the subnet is 192.168.121.0/24

lxc config device override v1 eth0 ipv4.address=192.168.121.2

lxc config device override v2 eth0 ipv4.address=192.168.121.3

lxc config device override v3 eth0 ipv4.address=192.168.121.4

lxc stop v1 && lxc start v1 && lxc stop v2 && lxc start v2 && lxc stop v3 && lxc start v3

#on v1

lxc exec `lxc list |grep v1 |awk -F '|' '{print $2}'` bash

sudo apt install ovn-central -y

```bash
cat << EOF |tee /etc/default/ovn-central
OVN_CTL_OPTS= \
--db-nb-addr=192.168.121.2 \
--db-sb-addr=192.168.121.2 \
--db-nb-cluster-local-addr=192.168.121.2 \
--db-sb-cluster-local-addr=192.168.121.2 \
--db-nb-create-insecure-remote=yes \
--db-sb-create-insecure-remote=yes \
--ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
--ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642
EOF
```

rm -rf /var/lib/ovn/* && rm -rf /var/lib/ovn/.ovn*

systemctl restart ovn-central

root@v1:~# ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

6943

Name: OVN_Northbound

Cluster ID: 51b9 (51b9f953-989f-4f90-9add-73dbabe3fe06)

Server ID: 6943 (69432f05-2d37-44fd-8869-2ec365bb0b4c)

Address: tcp:192.168.121.2:6643

Status: cluster member

Role: leader

Term: 2

Leader: self

Vote: self

Election timer: 1000

Log: [2, 5]

Entries not yet committed: 0

Entries not yet applied: 0

Connections: <-0000 <-0000

Servers:

6943 (6943 at tcp:192.168.121.2:6643) (self) next_index=4 match_index=4

#on v2

lxc exec `lxc list |grep v2 |awk -F '|' '{print $2}'` bash

sudo apt install ovn-central -y

```bash
cat << EOF |tee /etc/default/ovn-central
OVN_CTL_OPTS= \
--db-nb-addr=192.168.121.3 \
--db-sb-addr=192.168.121.3 \
--db-nb-cluster-local-addr=192.168.121.3 \
--db-sb-cluster-local-addr=192.168.121.3 \
--db-nb-create-insecure-remote=yes \
--db-sb-create-insecure-remote=yes \
--ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
--ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 \
--db-nb-cluster-remote-addr=192.168.121.2 \
--db-sb-cluster-remote-addr=192.168.121.2
EOF
```
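The three /etc/default/ovn-central files differ only in the local IP, plus the two `--db-*-cluster-remote-addr` options on the joining nodes (v2, v3) that point at the node that created the cluster (v1). As a sketch, the file content can be generated per node in Python; `ovn_ctl_opts()` and `PEERS` are hypothetical names, and the helper simply reproduces the option layout used in this article (TCP, insecure remotes, ports 6641/6642).

```python
PEERS = ["192.168.121.2", "192.168.121.3", "192.168.121.4"]


def ovn_ctl_opts(local_ip, peers, bootstrap_ip=None):
    """Render /etc/default/ovn-central content for one node (sketch).

    bootstrap_ip is the node that created the cluster; every other node
    gets --db-*-cluster-remote-addr options pointing at it.
    """
    nb_dbs = ",".join(f"tcp:{ip}:6641" for ip in peers)
    sb_dbs = ",".join(f"tcp:{ip}:6642" for ip in peers)
    opts = [
        f"--db-nb-addr={local_ip}",
        f"--db-sb-addr={local_ip}",
        f"--db-nb-cluster-local-addr={local_ip}",
        f"--db-sb-cluster-local-addr={local_ip}",
        "--db-nb-create-insecure-remote=yes",
        "--db-sb-create-insecure-remote=yes",
        f"--ovn-northd-nb-db={nb_dbs}",
        f"--ovn-northd-sb-db={sb_dbs}",
    ]
    if bootstrap_ip is not None and bootstrap_ip != local_ip:
        opts += [f"--db-nb-cluster-remote-addr={bootstrap_ip}",
                 f"--db-sb-cluster-remote-addr={bootstrap_ip}"]
    # Same layout as the heredocs in this article: one option per line, " \" continuations.
    return "OVN_CTL_OPTS= \\\n" + " \\\n".join(opts) + "\n"


if __name__ == "__main__":
    print(ovn_ctl_opts("192.168.121.4", PEERS, bootstrap_ip="192.168.121.2"))
```

Run as a script, it prints the file content for v3; the output can be written to /etc/default/ovn-central on that node.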

rm -rf /var/lib/ovn/* && rm -rf /var/lib/ovn/.ovn*

systemctl restart ovn-central

root@v2:~# ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

158b

Name: OVN_Northbound

Cluster ID: 51b9 (51b9f953-989f-4f90-9add-73dbabe3fe06)

Server ID: 158b (158b0aea-ba5d-42e0-b69b-2fc05204f622)

Address: tcp:192.168.121.3:6643

Status: cluster member

Role: follower

Term: 2

Leader: 6943

Vote: unknown

Election timer: 1000

Log: [2, 7]

Entries not yet committed: 0

Entries not yet applied: 0

Connections: ->0000 <-6943

Servers:

6943 (6943 at tcp:192.168.121.2:6643)

158b (158b at tcp:192.168.121.3:6643) (self)

#on v3

lxc exec `lxc list |grep v3 |awk -F '|' '{print $2}'` bash

sudo apt install ovn-central -y

```bash
cat << EOF |tee /etc/default/ovn-central
OVN_CTL_OPTS= \
--db-nb-addr=192.168.121.4 \
--db-sb-addr=192.168.121.4 \
--db-nb-cluster-local-addr=192.168.121.4 \
--db-sb-cluster-local-addr=192.168.121.4 \
--db-nb-create-insecure-remote=yes \
--db-sb-create-insecure-remote=yes \
--ovn-northd-nb-db=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 \
--ovn-northd-sb-db=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 \
--db-nb-cluster-remote-addr=192.168.121.2 \
--db-sb-cluster-remote-addr=192.168.121.2
EOF
```

rm -rf /var/lib/ovn/* && rm -rf /var/lib/ovn/.ovn*

systemctl restart ovn-central

root@v3:~# ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

298d

Name: OVN_Northbound

Cluster ID: 51b9 (51b9f953-989f-4f90-9add-73dbabe3fe06)

Server ID: 298d (298de33b-1b92-47c4-95aa-ebcf8e80f567)

Address: tcp:192.168.121.4:6643

Status: cluster member

Role: follower

Term: 2

Leader: 6943

Vote: unknown

Election timer: 1000

Log: [2, 8]

Entries not yet committed: 0

Entries not yet applied: 0

Connections: ->0000 ->158b <-6943 <-158b

Servers:

6943 (6943 at tcp:192.168.121.2:6643)

158b (158b at tcp:192.168.121.3:6643)

298d (298d at tcp:192.168.121.4:6643) (self)
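When scripting checks against the cluster, it helps to turn the `cluster/status` output into structured data. The parser below is a minimal sketch that assumes the `Key: value` header lines and the `Servers:` section look exactly like the outputs above (other OVS versions may print extra fields); `parse_cluster_status()` is a hypothetical name. The full UUID inside `Cluster ID` is the value `ovsdb-tool --cid=...` expects when joining nodes later.

```python
import re


def parse_cluster_status(text):
    """Parse `ovs-appctl ... cluster/status DB` output (sketch).

    Header lines of the form "Key: value" become dict entries; lines after
    "Servers:" become a list of {"sid", "address", "self"} dicts.
    """
    status = {"servers": []}
    in_servers = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if in_servers:
            # e.g. "6943 (6943 at tcp:192.168.121.2:6643) (self) next_index=4"
            m = re.match(r"(\w+) \(\w+ at (\S+)\)(.*)", line)
            if m:
                status["servers"].append({
                    "sid": m.group(1),
                    "address": m.group(2),
                    "self": "(self)" in m.group(3),
                })
        elif line == "Servers:":
            in_servers = True
        elif ": " in line:
            key, value = line.split(": ", 1)
            status[key] = value
    # "Cluster ID: 51b9 (51b9f953-...)": keep the full UUID for --cid.
    m = re.search(r"\(([0-9a-f-]{36})\)", status.get("Cluster ID", ""))
    if m:
        status["cluster_uuid"] = m.group(1)
    return status
```

The input text can be captured from the same `ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound` command used above, e.g. via `subprocess.run(..., capture_output=True, text=True).stdout`.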

OVN_NB_DB=tcp:192.168.121.2:6641,tcp:192.168.121.3:6641,tcp:192.168.121.4:6641 ovn-nbctl show

OVN_SB_DB=tcp:192.168.121.2:6642,tcp:192.168.121.3:6642,tcp:192.168.121.4:6642 ovn-sbctl show

Cluster Failover Testing

Run a failover test: stop container v1 (lxc stop v1). The following logs appear on v2 and v3: v2 has now become the leader, and the Term has changed from 2 to 3.

root@v2:~# tail -f /var/log/ovn/ovsdb-server-nb.log

2022-10-12T03:38:18.092Z|00085|raft|INFO|received leadership transfer from 6943 in term 2

2022-10-12T03:38:18.092Z|00086|raft|INFO|term 3: starting election

2022-10-12T03:38:18.095Z|00088|raft|INFO|term 3: elected leader by 2+ of 3 servers

root@v3:~# tail -f /var/log/ovn/ovsdb-server-nb.log

2022-10-12T03:38:18.095Z|00021|raft|INFO|server 158b is leader for term 3

root@v2:~# ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

158b

Name: OVN_Northbound

Cluster ID: 51b9 (51b9f953-989f-4f90-9add-73dbabe3fe06)

Server ID: 158b (158b0aea-ba5d-42e0-b69b-2fc05204f622)

Address: tcp:192.168.121.3:6643

Status: cluster member

Role: leader

Term: 3

Leader: self

Vote: self

Election timer: 1000

Log: [2, 9]

Entries not yet committed: 0

Entries not yet applied: 0

Connections: (->0000) <-298d ->298d

Servers:

6943 (6943 at tcp:192.168.121.2:6643) next_index=9 match_index=0

158b (158b at tcp:192.168.121.3:6643) (self) next_index=8 match_index=8

298d (298d at tcp:192.168.121.4:6643) next_index=9 match_index=8

Set inactivity-probe for the Raft port (6644)

ovn-sbctl list connection

#ovn-sbctl --no-leader-only list connection

ovn-sbctl --inactivity-probe=30000 set-connection pssl:6642 pssl:6644 pssl:16642

ovn-sbctl --inactivity-probe=30000 set-connection pssl:6642 pssl:6644 pssl:16642 punix:/var/run/ovn/ovnsb_db.sock

ovn-sbctl --inactivity-probe=30000 set-connection read-write role="ovn-controller" pssl:6642 read-write role="ovn-controller" pssl:6644 pssl:16642

Or use the following (it is equivalent to: ovn-nbctl --inactivity-probe=57 set-connection pssl:6642 pssl:6644)

#https://mail.openvswitch.org/pipermail/ovs-discuss/2020-February/049743.html

#https://opendev.org/x/charm-ovn-central/commit/9dcd53bb75805ff733c8f10b99724ea16a2b5f25

ovn-sbctl -- --id=@connection create Connection target="pssl\:6644" inactivity_probe=55 -- set SB_Global . connections=@connection

ovn-sbctl set connection . inactivity_probe=56

#the 'set SB_Global' above deletes all existing connections and then creates a new one, while 'add SB_Global' here only adds a new one

ovn-sbctl -- --id=@connection create Connection target="pssl\:6648" -- add SB_Global . connections @connection

ovn-sbctl --inactivity-probe=30000 set-connection pssl:6648

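NOTE: 20221019 update - the 'ovn-sbctl --inactivity-probe=30000 set-connection pssl:6648' above overwrites the configuration of every port other than 6648, which led a customer to open an L1 case. Painful. The correct way to set it is one of the following two: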

ovn-sbctl --inactivity-probe=60001 set-connection read-write role="ovn-controller" pssl:6644 pssl:6641 pssl:6642 pssl:16642

or

ovn-sbctl -- --id=@connection create Connection role=ovn-controller target="pssl\:6644" inactivity_probe=6000 -- add SB_Global . connections @connection
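Because `set-connection` replaces every connection referenced by `SB_Global`, the safe pattern is to always pass the full list of targets in one call. A minimal sketch of building that call in Python; the helper name `set_connection_argv()` is hypothetical, and the target list is an assumption that should be taken from `ovn-sbctl list connection` on your deployment. The `read-write`/`role=` modifiers go before the targets, as in the commands above. The Raft port 6644 is left out of the example on purpose, for the reason explained further below.

```python
import shlex


def set_connection_argv(targets, inactivity_probe_ms, role=None, read_only=False):
    """Build a single `ovn-sbctl set-connection` command listing every target.

    set-connection replaces all existing connections, so any target
    missing from `targets` would be dropped.
    """
    argv = ["ovn-sbctl", f"--inactivity-probe={inactivity_probe_ms}",
            "set-connection", "read-only" if read_only else "read-write"]
    if role:
        # No shell quoting needed: argv is meant to be passed as a list.
        argv.append(f"role={role}")
    argv.extend(targets)
    return argv


if __name__ == "__main__":
    # Assumed existing targets; read the real ones from `ovn-sbctl list connection`.
    existing = ["pssl:6642", "pssl:16642"]
    print(shlex.join(set_connection_argv(existing, 60000, role="ovn-controller")))
```

Building the whole list in one place makes it hard to repeat the mistake from the NOTE, where a single-target `set-connection` silently removed the other ports.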

Use ovsdb-tool to set up the cluster

https://mail.openvswitch.org/pipermail/ovs-discuss/2020-February/049743.html

When I first used the commands below to create the cluster with ovsdb-tool, it did not work: on v2, 'ovs-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound' showed 'Remotes for joining: tcp:192.168.121.4:6643 tcp:192.168.121.3:6643' and v2 could not join the cluster. The reason is that when running 'join-cluster' on v2, v2's own address (192.168.121.3:6644) must come first, so for v2 the arguments should be " tcp:192.168.121.3:6644 tcp:192.168.121.2:6644 tcp:192.168.121.4:6644", not " tcp:192.168.121.2:6644 tcp:192.168.121.3:6644 tcp:192.168.121.4:6644".
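The ordering rule can be captured in a small helper: `ovsdb-tool join-cluster DB NAME LOCAL REMOTE...` takes the joining server's own address first, followed by the addresses of the other members. The sketch below (hypothetical helper `join_cluster_argv()`) moves the local address to the front of a shared peer list and optionally adds `--cid`, as the recovery steps further below do.

```python
def join_cluster_argv(db_file, schema_name, local, peers, cid=None):
    """Build an `ovsdb-tool join-cluster` command line (sketch).

    The local Raft address must be the first address argument; the rest
    of the shared peer list follows as remotes.
    """
    remotes = [p for p in peers if p != local]
    argv = ["ovsdb-tool"]
    if cid:
        argv.append(f"--cid={cid}")
    argv += ["join-cluster", db_file, schema_name, local, *remotes]
    return argv


if __name__ == "__main__":
    peers = ["tcp:192.168.121.2:6644", "tcp:192.168.121.3:6644", "tcp:192.168.121.4:6644"]
    # On v2: its own address ends up first, as required.
    print(" ".join(join_cluster_argv("/var/lib/openvswitch/ovnsb_db.db",
                                     "OVN_Southbound", "tcp:192.168.121.3:6644", peers)))
```

Using the same peer list on every node and letting the helper reorder it avoids hand-editing the address order per host.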

#reset env in all nodes(v1, v2, v3)

systemctl stop ovn-central

rm -rf /var/lib/ovn/* && rm -rf /var/lib/ovn/.ovn*

rm -rf /etc/default/ovn-central

# on v1

rm -rf /var/lib/openvswitch/ovn*b_db.db

ovsdb-tool create-cluster /var/lib/openvswitch/ovnsb_db.db /usr/share/ovn/ovn-sb.ovsschema tcp:192.168.121.2:6644

ovsdb-tool create-cluster /var/lib/openvswitch/ovnnb_db.db /usr/share/ovn/ovn-nb.ovsschema tcp:192.168.121.2:6643

# on v2

rm -rf /var/lib/openvswitch/ovn*b_db.db

ovsdb-tool join-cluster /var/lib/openvswitch/ovnsb_db.db OVN_Southbound tcp:192.168.121.3:6644 tcp:192.168.121.2:6644 tcp:192.168.121.4:6644

ovsdb-tool join-cluster /var/lib/openvswitch/ovnnb_db.db OVN_Northbound tcp:192.168.121.3:6643 tcp:192.168.121.2:6643 tcp:192.168.121.4:6643

# on v3

rm -rf /var/lib/openvswitch/ovn*b_db.db

ovsdb-tool join-cluster /var/lib/openvswitch/ovnsb_db.db OVN_Southbound tcp:192.168.121.4:6644 tcp:192.168.121.2:6644 tcp:192.168.121.3:6644

ovsdb-tool join-cluster /var/lib/openvswitch/ovnnb_db.db OVN_Northbound tcp:192.168.121.4:6643 tcp:192.168.121.2:6643 tcp:192.168.121.3:6643

# then put the following options into OVN_CTL_OPTS in /etc/default/ovn-central (the file was removed above), and finally restart ovn-central

--db-nb-file=/var/lib/openvswitch/ovnnb_db.db --db-sb-file=/var/lib/openvswitch/ovnsb_db.db

Another example is the method for recovering ovn-central given in this LP bug - https://bugs.launchpad.net/charm-ovn-central/+bug/1948680

1. Stop all units:

$ juju run-action ovn-central/0 pause --wait

$ juju run-action ovn-central/1 pause --wait

$ juju run-action ovn-central/2 pause --wait

2. Create standalone on ovn-central/0

# ovsdb-tool cluster-to-standalone /tmp/standalone_ovnsb_db.db /var/lib/ovn/ovnsb_db.db

# ovsdb-tool cluster-to-standalone /tmp/standalone_ovnnb_db.db /var/lib/ovn/ovnnb_db.db

3. Create the cluster

# mv /var/lib/ovn/ovnsb_db.db /var/lib/ovn/ovnsb_db.db.old -v

# mv /var/lib/ovn/ovnnb_db.db /var/lib/ovn/ovnnb_db.db.old -v

# ovsdb-tool create-cluster /var/lib/ovn/ovnsb_db.db /tmp/standalone_ovnsb_db.db ssl:<ovn-central-0-ip>:6644

# ovsdb-tool create-cluster /var/lib/ovn/ovnnb_db.db /tmp/standalone_ovnnb_db.db ssl:<ovn-central-0-ip>:6643

4. Resume ovn-central/0

$ juju run-action ovn-central/0 resume --wait

5. Get New Cluster UUIDs

$ juju ssh ovn-central/0 'sudo ovn-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound'

$ juju ssh ovn-central/0 'sudo ovn-appctl -t /var/run/ovn/ovnsb_db.ctl cluster/status OVN_Southbound'

6. Join cluster from ovn-central/1

# mv /var/lib/ovn/ovnsb_db.db /var/lib/ovn/ovnsb_db.db.old -v

# mv /var/lib/ovn/ovnnb_db.db /var/lib/ovn/ovnnb_db.db.old -v

# ovsdb-tool --cid=SB-UUID join-cluster /var/lib/ovn/ovnsb_db.db OVN_Southbound ssl:<ovn-central-1-ip>:6644 ssl:<ovn-central-0-ip>:6644

# ovsdb-tool --cid=NB-UUID join-cluster /var/lib/ovn/ovnnb_db.db OVN_Northbound ssl:<ovn-central-1-ip>:6643 ssl:<ovn-central-0-ip>:6643

7. Resume ovn-central/1

$ juju run-action ovn-central/1 resume --wait

8. Join cluster from ovn-central/2

# mv /var/lib/ovn/ovnsb_db.db /var/lib/ovn/ovnsb_db.db.old -v

# mv /var/lib/ovn/ovnnb_db.db /var/lib/ovn/ovnnb_db.db.old -v

# ovsdb-tool --cid=SB-UUID join-cluster /var/lib/ovn/ovnsb_db.db OVN_Southbound ssl:<ovn-central-2-ip>:6644 ssl:<ovn-central-1-ip>:6644 ssl:<ovn-central-0-ip>:6644

# ovsdb-tool --cid=NB-UUID join-cluster /var/lib/ovn/ovnnb_db.db OVN_Northbound ssl:<ovn-central-2-ip>:6643 ssl:<ovn-central-1-ip>:6643 ssl:<ovn-central-0-ip>:6643

9. Resume ovn-central/2

$ juju run-action ovn-central/2 resume --wait

A big problem

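Setting an inactivity-probe on the Raft port 6644 as above causes a big problem: setting a value requires creating a Connection for that port, so when ovn-ovsdb-server-sb.service is restarted you see the error "6644:10.5.3.254: bind: Address already in use" (the Raft listener already owns 6644). The SB DB then does not work, and as a result both neutron list and nova list hang.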

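In the end, the following commands were used to a) convert the SB DB from the Raft (clustered) format to a standalone one, b) start it from the command line (starting it from systemd would still start it in cluster mode), and c) delete the 6644 connection: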

ovsdb-tool cluster-to-standalone /var/lib/ovn/ovnsb_db.db_standalone /var/lib/ovn/ovnsb_db.db

cp /var/lib/ovn/ovnsb_db.db /var/lib/ovn/ovnsb_db.db_bk2

cp /var/lib/ovn/ovnsb_db.db_standalone /var/lib/ovn/ovnsb_db.db

ovsdb-server --remote=punix:/var/run/ovn/ovnsb_db.sock --pidfile=/var/run/ovn/ovnsb_db.pid --unixctl=/var/run/ovn/ovnsb_db.ctl --remote=db:OVN_Southbound,SB_Global,connections --private-key=/etc/ovn/key_host --certificate=/etc/ovn/cert_host --ca-cert=/etc/ovn/ovn-central.crt --ssl-protocols=db:OVN_Southbound,SSL,ssl_protocols --ssl-ciphers=db:OVN_Southbound,SSL,ssl_ciphers /var/lib/ovn/ovnsb_db.db > temp 2>&1
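A cheap guard against this is to check the SB Connection targets before restarting ovn-ovsdb-server-sb: any passive target (`ptcp:`/`pssl:`) on a Raft port (6643 for NB, 6644 for SB in this article) collides with the Raft listener on the same host. The Python sketch below is a hypothetical helper, not part of OVN; it expects target strings as printed by `ovn-sbctl list connection` (quotes allowed) and only understands the `ptcp:PORT[:IP]` and `pssl:PORT[:IP]` forms.

```python
import re

# Raft ports used in this article (NB 6643, SB 6644); adjust for your deployment.
RAFT_PORTS = {6643, 6644}


def conflicting_targets(targets, raft_ports=RAFT_PORTS):
    """Return passive connection targets that would bind a Raft port."""
    bad = []
    for target in targets:
        t = target.strip().strip('"')
        m = re.match(r"p(?:tcp|ssl):(\d+)(?::.*)?$", t)
        if m and int(m.group(1)) in raft_ports:
            bad.append(t)
    return bad


if __name__ == "__main__":
    print(conflicting_targets(['"pssl:6642"', '"pssl:6644"', '"pssl:16642"']))
```

Any non-empty result means the Connection row should be removed before the restart, not after.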

The 6644 heartbeat is somewhat different from the ones on 6641 and 6642: it is only the heartbeat between the Raft members on the 3 ovn-central nodes, so 5 seconds should normally be enough (for Raft ports the only things transmitted are consensus messages and the DB diffs, which should not take more than 5 seconds). If it is increased, it will take longer to see whether any of the members has issues, and you could end up with a split brain/DB corruption, so changing the inactivity probe for Raft ports is discouraged.

https://bugs.launchpad.net/ubuntu/+source/openvswitch/+bug/1990978

https://bugs.launchpad.net/openvswitch/+bug/1985062

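Raft heartbeats are one-way. When the inactivity probe is disabled, the leader keeps sending heartbeats to the followers, and a follower that stops receiving them starts an election; but if the leader receives no replies, it does not do anything until there is a quorum, so it keeps blindly sending packets and wastes CPU. To avoid this, the inactivity probe was reintroduced: if the leader has not received any response from a follower for a long time, it drops the TCP connection to that follower.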
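The probe logic itself is simple. The sketch below is a simplified model that assumes the behaviour of the OVS reconnect state machine: after `interval` ms with nothing received, send a probe; if still nothing arrives within another `interval` ms, drop the connection. `ProbeState` and its return strings are made up for illustration. For a leader talking to a silent follower, `received()` is never called even though heartbeats keep going out, so the connection is eventually dropped; with the probe disabled (`interval <= 0`) it never is.

```python
class ProbeState:
    """Simplified inactivity-probe model for one connection (times in ms)."""

    def __init__(self, interval, now=0):
        self.interval = interval
        self.last_rx = now          # last time anything was received
        self.probe_sent_at = None   # set while waiting for a probe reply

    def received(self, now):
        """Any incoming message (reply, heartbeat ack, probe reply) counts."""
        self.last_rx = now
        self.probe_sent_at = None

    def tick(self, now):
        """Return 'ok', 'send_probe' or 'disconnect'."""
        if self.interval <= 0:  # probe disabled: never gives up
            return "ok"
        if self.probe_sent_at is None:
            if now - self.last_rx >= self.interval:
                self.probe_sent_at = now
                return "send_probe"
            return "ok"
        if now - self.probe_sent_at >= self.interval:
            return "disconnect"
        return "ok"
```

This also shows the trade-off in the previous paragraph: a larger interval means a dead peer is noticed later, roughly after twice the interval in this model.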

Running the iptables rules below on all ovn-central nodes makes the following connections one-way:

iptables -A INPUT -i eth0 -p tcp --match multiport --dports 6641:6644 -j DROP

iptables -t mangle -A POSTROUTING -p tcp --match multiport --dports 6641:6644 -j DROP

ovn1:<random port> <------ ovn3:6641

ovn1:6641 ------> ovn3:<random port>

20221122 - Some analysis

1, worker.BaseWorker#start in the neutron_lib project publishes an event:

registry.publish(resources.PROCESS, events.AFTER_INIT, self.start)

2, In the neutron project, NeutronBaseWorker in ./neutron/worker.py inherits from neutron_lib's worker.BaseWorker; wsgi#WorkerService inherits from NeutronBaseWorker, and UnixDomainWSGIServer inherits from wsgi#WorkerService. UnixDomainMetadataProxy uses UnixDomainWSGIServer, and the metadata agent uses UnixDomainMetadataProxy.

3, ./neutron/agent/ovn/metadata/server.py in the neutron project consumes the event:

```python
class MetadataProxyHandler(object):
    ...
    def subscribe(self):
        registry.subscribe(self.post_fork_initialize,
                           resources.PROCESS,
                           events.AFTER_INIT)
```

4, post_fork_initialize creates an IDL connection to the SB DB for each worker:

```python
    def post_fork_initialize(self, resource, event, trigger, payload=None):
        # We need to open a connection to OVN SouthBound database for
        # each worker so that we can process the metadata requests.
        self._post_fork_event.clear()
        self.sb_idl = ovsdb.MetadataAgentOvnSbIdl(
            tables=('Port_Binding', 'Datapath_Binding', 'Chassis'),
            chassis=self.chassis).start()

        # Now IDL connections can be safely used.
        self._post_fork_event.set()
```

5, In ovsdb.MetadataAgentOvnSbIdl, the connection is re-created by calling ovsdbapp.schema.open_vswitch.impl_idl again, with retries governed by ovsdb_retry_max_interval=180 seconds. The standalone script below shows how such an ovsdbapp IDL connection is built (here against the local Open_vSwitch DB, which is served by the local ovsdb-server rather than by the SB DB on 6642):

```python
import logging

from ovs.db import idl
from ovsdbapp.backend.ovs_idl import connection
from ovsdbapp.backend.ovs_idl import idlutils
from ovsdbapp.schema.open_vswitch import impl_idl as idl_ovs

TIMEOUT_SEC = 3600
# The Open_vSwitch schema lives in the local OVS DB, not in the OVN SB DB.
CONNECTION_STRING = "unix:/var/run/openvswitch/db.sock"


class NotifyingIdl(idl.Idl):
    # idl.Idl calls notify() for every row change. Note that the third
    # positional argument of idl.Idl() is probe_interval, so a notification
    # backend cannot be passed there.
    def notify(self, event, row, updates=None):
        print('New event "%s", table "%s"' % (event, row._table.name))


def connect():
    helper = idlutils.get_schema_helper(CONNECTION_STRING, 'Open_vSwitch')
    for table in ('Open_vSwitch', 'Bridge', 'Port', 'Interface'):
        helper.register_table(table)
    ovs_idl = NotifyingIdl(CONNECTION_STRING, helper)
    conn = connection.Connection(ovs_idl, timeout=TIMEOUT_SEC)
    return idl_ovs.OvsdbIdl(conn)


def get_port_name_list(ovs_idl):
    return ovs_idl.list_ports('br-int').execute(check_error=False)


def main():
    ovs_idl = connect()
    port_names = get_port_name_list(ovs_idl)
    rows = ovs_idl.db_list('Interface', port_names,
                           columns=['name', 'external_ids', 'ofport'],
                           if_exists=False).execute(check_error=False)
    print(rows)


if __name__ == "__main__":
    logging.basicConfig()
    main()
```

6, ./neutron/agent/ovn/metadata/agent.py also uses ovsdb.MetadataAgentOvnSbIdl, and calls self.sb_idl.db_add and similar methods to do its work.

When self.sb_idl is accessed, it waits on self._post_fork_event:

```python
    @property
    def sb_idl(self):
        if not self._sb_idl:
            self._post_fork_event.wait()
        return self._sb_idl
```
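The `_post_fork_event` gate only protects the *first* access: once the IDL object exists, `sb_idl` returns it immediately, whether or not that IDL is currently connected to an SB leader. The self-contained sketch below reproduces just the gating pattern with `threading.Event`; `LazyIdlHolder` and the string standing in for the IDL are placeholders, not neutron code.

```python
import threading
import time


class LazyIdlHolder:
    """Readers block until post_fork_initialize() publishes the IDL."""

    def __init__(self):
        self._sb_idl = None
        self._post_fork_event = threading.Event()

    @property
    def sb_idl(self):
        if not self._sb_idl:
            self._post_fork_event.wait()
        return self._sb_idl

    def post_fork_initialize(self, make_idl):
        self._post_fork_event.clear()
        self._sb_idl = make_idl()  # stands in for MetadataAgentOvnSbIdl(...).start()
        self._post_fork_event.set()


if __name__ == "__main__":
    def slow_idl():
        time.sleep(0.1)  # simulate connecting to the SB DB
        return "fake-idl"

    holder = LazyIdlHolder()
    worker = threading.Thread(target=holder.post_fork_initialize, args=(slow_idl,))
    worker.start()
    print(holder.sb_idl)  # blocks until the worker has set the event
    worker.join()
```

Nothing in this pattern re-checks the connection after the event is set, which matches the failure described next: a query issued while the SB DB is changing leaders simply sees no rows.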

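After self._post_fork_event.set() has run, it also calls self.sync(), which calls self.ensure_all_networks_provisioned to create the metadata tap for each namespace. During this,

it calls ports = self.sb_idl.get_ports_on_chassis(self.chassis). If the SB DB is doing a snapshot leadership transfer at that moment, the connection fails, so 0 Port_Binding rows are returned from the SB DB,

and the metadata tap cannot be created for the VM.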

7, So after an OVN leader change, should neutron set resync=true and rerun self.agent.sync()? (Currently only PortBindingChassisEvent, PortBindingChassisCreatedEvent, PortBindingChassisDeletedEvent and ChassisCreateEventBase rerun sync().) After reconnecting, neutron does indeed do a sync, as the following log shows:

2022-08-18 09:15:49.887 75556 INFO ovsdbapp.backend.ovs_idl.vlog [-] ssl:100.94.0.158:6642: clustered database server is disconnected from cluster; trying another server

2022-08-18 09:15:49.888 75556 INFO ovsdbapp.backend.ovs_idl.vlog [-] ssl:100.94.0.158:6642: connection closed by client

2022-08-18 09:15:49.892 75556 INFO ovsdbapp.backend.ovs_idl.vlog [-] ssl:100.94.0.99:6642: connecting...

2022-08-18 09:15:49.910 75556 INFO ovsdbapp.backend.ovs_idl.vlog [-] ssl:100.94.0.99:6642: connected

2022-08-18 09:15:50.726 75556 INFO neutron.agent.ovn.metadata.agent [-] Connection to OVSDB established, doing a full sync

8, Adding records to OVN tables (such as Chassis_Private) is always retried, e.g.: https://review.opendev.org/c/openstack/neutron/+/764318/3/neutron/agent/ovn/metadata/agent.py#260
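Retrying a write while the SB DB is changing leaders usually means retrying with a growing delay. Below is a generic capped exponential backoff sketch; it is not neutron's implementation (the linked review may use a different retry helper), and borrowing 180 s from `ovsdb_retry_max_interval` as the cap is only an assumption for illustration. `retry_with_backoff()` is a hypothetical name, and `sleep` is injectable so the behaviour can be tested without waiting.

```python
import time


def retry_with_backoff(fn, max_interval=180.0, initial=1.0, max_attempts=None,
                       sleep=time.sleep):
    """Call fn() until it succeeds, doubling the delay up to max_interval seconds.

    Any exception is treated as retryable here; real code should narrow this
    to connection/leadership errors.
    """
    delay = initial
    attempt = 0
    while True:
        attempt += 1
        try:
            return fn()
        except Exception:
            if max_attempts is not None and attempt >= max_attempts:
                raise
            sleep(delay)
            delay = min(delay * 2, max_interval)
```

With the defaults, the waits between attempts are 1, 2, 4, ... seconds, never exceeding 180 seconds.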

9, Later, at 2022-08-18 12:30, 100.94.0.204:6644 became the leader because of a snapshot leadership transfer:

5-lxd-23: f801 100.94.0.99:6644 follower term=297

6-lxd-24: 0f3c 100.94.0.158:6644 follower term=297

7-lxd-27: 9b15 100.94.0.204:6644 leader term=297 leader

$ find sosreport-juju-2752e1-*/var/log/ovn/* |xargs zgrep -i -E 'received leadership transfer from'

sosreport-juju-2752e1-6-lxd-24-00341190-2022-08-18-entowko/var/log/ovn/ovsdb-server-sb.log:2022-08-18T12:30:35.610Z|80967|raft|INFO|received leadership transfer from 9b15 in term 295

sosreport-juju-2752e1-7-lxd-27-00341190-2022-08-18-hhxxqci/var/log/ovn/ovsdb-server-sb.log:2022-08-18T12:30:35.322Z|92695|raft|INFO|received leadership transfer from 0f3c in term 294

sosreport-juju-2752e1-7-lxd-27-00341190-2022-08-18-hhxxqci/var/log/ovn/ovsdb-server-sb.log:2022-08-18T17:52:53.025Z|92893|raft|INFO|received leadership transfer from 0f3c in term 296

# according to the timestamps, the re-election was caused by snapshotting

$ find sosreport-juju-2752e1-*/var/log/ovn/* |xargs zgrep -i -E 'Transferring leadership'

sosreport-juju-2752e1-6-lxd-24-00341190-2022-08-18-entowko/var/log/ovn/ovsdb-server-sb.log:2022-08-18T12:30:35.322Z|80962|raft|INFO|Transferring leadership to write a snapshot.

sosreport-juju-2752e1-6-lxd-24-00341190-2022-08-18-entowko/var/log/ovn/ovsdb-server-sb.log:2022-08-18T17:52:53.024Z|82382|raft|INFO|Transferring leadership to write a snapshot.

sosreport-juju-2752e1-7-lxd-27-00341190-2022-08-18-hhxxqci/var/log/ovn/ovsdb-server-sb.log:2022-08-18T12:30:35.330Z|92698|raft|INFO|Transferring leadership to write a snapshot.
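Matching the two kinds of log lines by timestamp, as done above with `zgrep`, can also be scripted. The sketch assumes the `YYYY-MM-DDTHH:MM:SS.mmmZ|seq|raft|INFO|message` format shown in these logs and accepts grep output that is prefixed with the file path; `leadership_events()` is a hypothetical helper.

```python
import re
from datetime import datetime

RAFT_LINE = re.compile(
    r"(?P<ts>\d{4}-\d\d-\d\dT\d\d:\d\d:\d\d\.\d{3})Z\|\d+\|raft\|INFO\|(?P<msg>.*)")
TRANSFER = re.compile(r"received leadership transfer from (\w+) in term (\d+)")


def leadership_events(lines):
    """Yield (timestamp, kind, detail) for Raft leadership-transfer log lines."""
    for line in lines:
        # grep output is "path:logline", so search instead of match.
        m = RAFT_LINE.search(line)
        if not m:
            continue
        ts = datetime.strptime(m.group("ts"), "%Y-%m-%dT%H:%M:%S.%f")
        msg = m.group("msg")
        if msg.startswith("Transferring leadership to write a snapshot"):
            yield ts, "snapshot_transfer", None
        else:
            t = TRANSFER.match(msg)
            if t:
                yield ts, "received_transfer", (t.group(1), int(t.group(2)))
```

Feeding it the combined output of both `zgrep` commands and sorting by timestamp shows each "Transferring leadership to write a snapshot" line followed, within a fraction of a second, by a "received leadership transfer" line on another member.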

10, An error occurred at 2022-08-18 12:30:36:

$ find sosreport-srv1ibm00*/var/log/neutron/* |xargs zgrep -i -E 'OVSDB Error'

sosreport-srv1ibm002d-00341190-2022-08-18-cuvkufw/var/log/neutron/neutron-ovn-metadata-agent.log:2022-08-18 12:30:36.103 75556 ERROR ovsdbapp.backend.ovs_idl.transaction [-] OVSDB Error: no error details available

When metadata works, the log looks like this:

2022-08-18 00:01:37.607 75810 INFO eventlet.wsgi.server [-] 100.94.99.243,<local> "GET /latest/meta-data/ HTTP/1.1" status: 200 len: 360 time: 0.0124910

After the OVSDB Error, only the following log was seen, until metadata-agent was restarted:

2022-08-18 13:13:55.508 75810 ERROR neutron.agent.ovn.metadata.server [-] No port found in network 63e2c276-60dd-40e3-baa1-c16342eacce2 with IP address 100.94.99.107

This is logged by neutron/agent/ovn/metadata/server.py#_get_instance_and_project_id: when it calls self.sb_idl.get_network_port_bindings_by_ip, self.sb_idl can no longer reach the SB DB.

[1] reference: https://medoc.readthedocs.io/en/latest/docs/ovs/ovn/idl/1_ovn_driver_2_ovn_client.html

Test code

```python
import threading

from neutron.agent.ovn.metadata import ovsdb
from neutron.common import config
from neutron.conf.agent.metadata import config as meta
from neutron.conf.agent.ovn.metadata import config as ovn_meta
from oslo_config import cfg
from oslo_log import log as logging

LOG = logging.getLogger(__name__)


class MetadataProxyHandler(object):

    def __init__(self, conf, chassis):
        self.conf = conf
        self.chassis = chassis
        self._sb_idl = None
        self._post_fork_event = threading.Event()

    @property
    def sb_idl(self):
        if not self._sb_idl:
            self._post_fork_event.wait()
        return self._sb_idl

    @sb_idl.setter
    def sb_idl(self, val):
        self._sb_idl = val

    def post_fork_initialize(self):
        self._post_fork_event.clear()
        self.sb_idl = ovsdb.MetadataAgentOvnSbIdl(
            tables=('Port_Binding', 'Datapath_Binding', 'Chassis'),
            chassis=self.chassis).start()
        # Now IDL connections can be safely used.
        self._post_fork_event.set()

    def get_instance_and_project_id(self):
        # ports = self.sb_idl.get_network_port_bindings_by_ip(network_id, remote_address)
        ports = self.sb_idl.get_ports_on_chassis(self.chassis)
        num_ports = len(ports)
        if num_ports == 0:
            LOG.error("No port found")
            return None
        return ports


if __name__ == '__main__':
    ovn_meta.register_meta_conf_opts(meta.SHARED_OPTS)
    ovn_meta.register_meta_conf_opts(meta.UNIX_DOMAIN_METADATA_PROXY_OPTS)
    ovn_meta.register_meta_conf_opts(meta.METADATA_PROXY_HANDLER_OPTS)
    ovn_meta.register_meta_conf_opts(ovn_meta.OVS_OPTS, group='ovs')
    config.init(['--config-file=/etc/neutron/neutron.conf',
                 '--config-file=/etc/neutron/neutron_ovn_metadata_agent.ini'])
    config.setup_logging()
    # chassis name as shown by 'ovn-sbctl list chassis'
    # import rpdb;rpdb.set_trace()
    proxy = MetadataProxyHandler(cfg.CONF, chassis='juju-402d04-xena-8.cloud.sts')
    proxy.post_fork_initialize()
    ports = proxy.get_instance_and_project_id()
    print(ports)
```

Trying to reproduce 1996594

I tried to reproduce https://bugs.launchpad.net/neutron/+bug/1996594 , but failed.

```bash
#!/bin/bash

for i in {1..5000}
do
    ovn-nbctl ls-add sw$i
    if [[ $? -ne 0 ]] ; then
        echo "Failed on ls-add i: $i"
        exit 1
    fi
    for j in {1..2000}
    do
        echo "Iteration i: $i and j:$j"
        # separator avoids duplicate port names (e.g. i=1,j=11 vs i=11,j=1)
        ovn-nbctl lsp-add sw$i sw${i}-${j}
        if [[ $? -ne 0 ]] ; then
            echo "Failed on lsp-add i: $i and j: $j"
            exit 1
        fi
    done
done

for i in {1..5000}
do
    echo "Delete iteration i: $i"
    ovn-nbctl ls-del sw$i
    if [[ $? -ne 0 ]] ; then
        echo "Failed on ls-del i: $i"
        exit 1
    fi
done
```

Stressing the DB with the script above (from LP bug 1990978) eventually pushed the NB DB into a snapshot, but not the SB DB:

Iteration i: 3 and j:822

Iteration i: 3 and j:823

2022-11-22T01:24:24Z|00002|timeval|WARN|Unreasonably long 1030ms poll interval (895ms user, 19ms system)

2022-11-22T01:24:24Z|00003|timeval|WARN|faults: 1634 minor, 0 major

2022-11-22T01:24:24Z|00004|timeval|WARN|context switches: 0 voluntary, 638 involuntary

Iteration i: 3 and j:824

Iteration i: 3 and j:825

Iteration i: 3 and j:826

Iteration i: 3 and j:827

2022-11-22T01:24:28Z|00002|timeval|WARN|Unreasonably long 1010ms poll interval (966ms user, 10ms system)

2022-11-22T01:24:28Z|00003|timeval|WARN|faults: 1394 minor, 0 major

2022-11-22T01:24:28Z|00004|timeval|WARN|context switches: 0 voluntary, 723 involuntary

Iteration i: 3 and j:828

Iteration i: 3 and j:829

2022-11-22T01:24:30Z|00002|timeval|WARN|Unreasonably long 1019ms poll interval (970ms user, 9ms system)

2022-11-22T01:24:30Z|00003|timeval|WARN|faults: 1284 minor, 0 major

2022-11-22T01:24:30Z|00004|timeval|WARN|context switches: 0 voluntary, 746 involuntary

Iteration i: 3 and j:830

Iteration i: 3 and j:831

Iteration i: 3 and j:832

2022-11-22T01:24:33Z|00002|timeval|WARN|Unreasonably long 1015ms poll interval (969ms user, 9ms system)

2022-11-22T01:24:33Z|00003|timeval|WARN|faults: 1283 minor, 0 major

2022-11-22T01:24:33Z|00004|timeval|WARN|context switches: 0 voluntary, 728 involuntary

Iteration i: 3 and j:833

Iteration i: 3 and j:834

Iteration i: 3 and j:835

2022-11-22T01:24:36Z|00002|timeval|WARN|Unreasonably long 1029ms poll interval (908ms user, 19ms system)

2022-11-22T01:24:36Z|00003|timeval|WARN|faults: 1257 minor, 0 major

2022-11-22T01:24:36Z|00004|timeval|WARN|context switches: 0 voluntary, 675 involuntary

Iteration i: 3 and j:836

Iteration i: 3 and j:837

Iteration i: 3 and j:838

Iteration i: 3 and j:839

2022-11-22T01:24:41Z|00004|timeval|WARN|Unreasonably long 1125ms poll interval (907ms user, 37ms system)

2022-11-22T01:24:41Z|00005|timeval|WARN|faults: 1359 minor, 0 major

2022-11-22T01:24:41Z|00006|timeval|WARN|context switches: 0 voluntary, 667 involuntary

ovn-nbctl: unix:/var/run/ovn/ovnnb_db.sock: database connection failed ()

Failed on lsp-add i: 3 and j: 839

$ juju run -a ovn-central -- grep -r -i 'leadership transfer' /var/log/ovn/

- ReturnCode: 1

Stdout: ""

UnitId: ovn-central/0

- ReturnCode: 1

Stdout: ""

UnitId: ovn-central/1

- Stdout: |

/var/log/ovn/ovsdb-server-nb.log:2022-11-22T01:24:40.535Z|00890|raft|INFO|received leadership transfer from 42bc in term 1

UnitId: ovn-central/2

After a while, the SB DB started a snapshot on its own:

$ juju run -a ovn-central -- grep -r -i 'Transferring leadership to write a snapshot' /var/log/ovn/

- Stdout: |

/var/log/ovn/ovsdb-server-nb.log:2022-11-22T01:43:16.695Z|00908|raft|INFO|Transferring leadership to write a snapshot.

UnitId: ovn-central/0

- Stdout: |

/var/log/ovn/ovsdb-server-nb.log:2022-11-22T01:24:40.534Z|00901|raft|INFO|Transferring leadership to write a snapshot.

/var/log/ovn/ovsdb-server-sb.log:2022-11-22T02:11:02.547Z|01586|raft|INFO|Transferring leadership to write a snapshot.

UnitId: ovn-central/1

- Stdout: |

/var/log/ovn/ovsdb-server-nb.log:2022-11-22T01:43:11.186Z|00905|raft|INFO|Transferring leadership to write a snapshot.

UnitId: ovn-central/2

However, metadata for newly created VMs still worked at this point, and nothing connection-related was found in /var/log/neutron/neutron-ovn-metadata-agent.log:

2022-11-22 04:23:34.386 45409 INFO neutron.agent.ovn.metadata.agent [-] Port fa4d9821-6ed3-4c7e-95a5-a68b08c95b9f in datapath 946aa920-137f-42f1-a4a8-8d772f2af3cd bound to our chassis

All patches from the LP bug https://bugs.launchpad.net/ubuntu/+source/neutron/+bug/1975594 are already in this environment.

neutron also supports connecting to a non-leader - https://review.opendev.org/c/openstack/neutron/+/829486

monitor_cond_since is probably needed as well - https://openii.cn/openvswitch/ovs/commit/9167cb52fa8708ff524be9fc41a8f5ccdfa0a15d

This one is probably needed as well - https://patchwork.ozlabs.org/project/openvswitch/patch/[email protected]/

20230529 - Converting ovn-central from standalone to Raft HA

For example, suppose a standalone ovn-central was previously installed on n1 (10.5.21.11). Then run the following on n1:

#on n1, stop ovn-central, OVN_NB, OVN_SB

systemctl stop ovn-central.service

systemctl stop ovn-ovsdb-server-nb.service

systemctl stop ovn-ovsdb-server-sb.service

#it's already standalone, so a plain cp is enough (no cluster-to-standalone needed)

#ovsdb-tool cluster-to-standalone /tmp/standalone_ovnsb_db.db /var/lib/ovn/ovnsb_db.db

#ovsdb-tool cluster-to-standalone /tmp/standalone_ovnnb_db.db /var/lib/ovn/ovnnb_db.db

sudo cp /var/lib/ovn/ovnsb_db.db /tmp/standalone_ovnsb_db.db

sudo cp /var/lib/ovn/ovnnb_db.db /tmp/standalone_ovnnb_db.db

#convert standalone db to cluster db, use tcp rather than ssl here

mv /var/lib/ovn/ovnsb_db.db /var/lib/ovn/ovnsb_db.db.old -v

mv /var/lib/ovn/ovnnb_db.db /var/lib/ovn/ovnnb_db.db.old -v

ovsdb-tool create-cluster /var/lib/ovn/ovnsb_db.db /tmp/standalone_ovnsb_db.db tcp:10.5.21.11:6644

ovsdb-tool create-cluster /var/lib/ovn/ovnnb_db.db /tmp/standalone_ovnnb_db.db tcp:10.5.21.11:6643

#resume ovn-central, OVN_NB, OVN_SB on n1

systemctl start ovn-central.service

systemctl start ovn-ovsdb-server-nb.service

systemctl start ovn-ovsdb-server-sb.service

#Get the cluster UUIDs (the 'Cluster ID' line of each output)

ovn-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

ovn-appctl -t /var/run/ovn/ovnsb_db.ctl cluster/status OVN_Southbound

NB_UUID=a8dddb43-62e8-4383-9c59-b1b4b68d5196

SB_UUID=96ab9d1a-f31b-4e60-bedf-8232ffae5407

Run the following on n2 (10.5.21.12) and n3 (on n3, replace 10.5.21.12 with 10.5.21.13). First set NB_UUID and SB_UUID to the values obtained on n1:

apt install ovn-central openvswitch-switch net-tools -y

systemctl stop ovn-central.service

systemctl stop ovn-ovsdb-server-nb.service

systemctl stop ovn-ovsdb-server-sb.service

rm -rf /var/lib/ovn/ovnsb_db.db

rm -rf /var/lib/ovn/ovnnb_db.db

ovsdb-tool --cid=$NB_UUID join-cluster /var/lib/ovn/ovnnb_db.db OVN_Northbound tcp:10.5.21.12:6643 tcp:10.5.21.11:6643

ovsdb-tool --cid=$SB_UUID join-cluster /var/lib/ovn/ovnsb_db.db OVN_Southbound tcp:10.5.21.12:6644 tcp:10.5.21.11:6644

systemctl start ovn-central.service

systemctl start ovn-ovsdb-server-nb.service

systemctl start ovn-ovsdb-server-sb.service

ovn-appctl -t /var/run/ovn/ovnnb_db.ctl cluster/status OVN_Northbound

ovn-appctl -t /var/run/ovn/ovnsb_db.ctl cluster/status OVN_Southbound