1. Common HTTP status codes

1xx: informational

2xx: success

3xx: redirection

4xx: client errors

5xx: server errors

404: page not found

403: access denied (forbidden)

200: request succeeded
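The class of a status code is decided by its first digit alone, so it can be computed with integer division. A minimal sketch; `status_class` is a hypothetical helper, not a library function:

```python
def status_class(code: int) -> str:
    """Map an HTTP status code to its class using the first digit."""
    classes = {
        1: "informational",
        2: "success",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    # code // 100 keeps only the first digit, e.g. 404 -> 4
    return classes.get(code // 100, "unknown")


for code in (200, 302, 404, 503):
    print(code, status_class(code))
```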

1xx Informational

100 Continue

101 Switching Protocols

102 Processing

2xx Success

200 OK

201 Created

202 Accepted

203 Non-Authoritative Information

204 No Content

205 Reset Content

206 Partial Content

207 Multi-Status

3xx Redirection

300 Multiple Choices

301 Moved Permanently

302 Found (named Moved Temporarily in HTTP/1.0)

303 See Other

304 Not Modified

305 Use Proxy

306 Switch Proxy (no longer used)

307 Temporary Redirect

4xx Client errors

400 Bad Request

401 Unauthorized

402 Payment Required

403 Forbidden

404 Not Found

405 Method Not Allowed

406 Not Acceptable

407 Proxy Authentication Required

408 Request Timeout

409 Conflict

410 Gone

411 Length Required

412 Precondition Failed

413 Request Entity Too Large

414 Request-URI Too Long

415 Unsupported Media Type

416 Requested Range Not Satisfiable

417 Expectation Failed

421 Misdirected Request

422 Unprocessable Entity

423 Locked

424 Failed Dependency

425 Too Early (an earlier WebDAV draft used 425 for Unordered Collection)

426 Upgrade Required

449 Retry With (Microsoft extension, non-standard)

451 Unavailable For Legal Reasons

5xx Server errors

500 Internal Server Error

501 Not Implemented

502 Bad Gateway

503 Service Unavailable

504 Gateway Timeout

505 HTTP Version Not Supported

506 Variant Also Negotiates

507 Insufficient Storage

509 Bandwidth Limit Exceeded (non-standard, used by some hosting software)

510 Not Extended

600 Unparseable Response Headers (non-standard; outside the 1xx to 5xx classes defined by HTTP)
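Python's standard library ships an enum of the standard codes, `http.HTTPStatus`, whose `phrase` attribute gives the reason phrase. A small sketch of looking up a code and falling back for codes the enum does not know, such as the non-standard 449 and 600 above; `describe` is a hypothetical helper:

```python
from http import HTTPStatus


def describe(code: int) -> str:
    """Return 'code phrase', or a note when http.HTTPStatus does not know the code."""
    try:
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        # HTTPStatus(code) raises ValueError for values that are not enum members
        return f"{code} (unknown to http.HTTPStatus)"


for code in (200, 404, 449, 503, 600):
    print(describe(code))
```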

2. Catching urllib exceptions

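```python
import socket
from urllib import request
from urllib import error

try:
    url = 'http://www.baidu.com/hello.html'
    # timeout: seconds to wait for the server; 0.01 s is so short that the request will very likely time out
    response = request.urlopen(url, timeout=0.01)
except error.HTTPError as e:
    # The server answered, but with an error status (e.g. 404)
    print(e.code, e.headers, e.reason)
except error.URLError as e:
    # No HTTP response at all (DNS failure, refused connection, connect timeout, ...)
    print(e.reason)
except socket.timeout:
    # A timeout while waiting for the response headers is raised unwrapped, not as URLError
    print('timed out')
else:
    content = response.read().decode('utf-8')
    print(content)
```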
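The order of the `except` clauses matters: `HTTPError` is a subclass of `URLError`, so if `URLError` were listed first it would also swallow every HTTP error. The sketch below checks this offline by building the exceptions by hand instead of making a request; `classify` is a hypothetical helper and the URL is illustrative:

```python
from urllib import error


def classify(exc: Exception) -> str:
    """Re-raise exc and report which except clause catches it (same order as above)."""
    try:
        raise exc
    except error.HTTPError as e:
        return f"HTTPError {e.code}: {e.reason}"
    except error.URLError as e:
        return f"URLError: {e.reason}"


print(issubclass(error.HTTPError, error.URLError))  # True
print(classify(error.HTTPError("http://example.com/hello.html", 404, "Not Found", {}, None)))
print(classify(error.URLError("timed out")))
```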

3. URL parsing

```python
from urllib.parse import urlparse

doubanUrl = 'https://movie.douban.com/subject/4864908/comments?sort=new_score&status=P'

# Split the URL into scheme, netloc, path, params, query and fragment
info = urlparse(doubanUrl)
print(info)
print(info.scheme)  # https
```
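The `query` field is still a single string. `parse_qs` turns it into a dict of lists, and `urlencode` plus `urlunparse` rebuild a URL after a parameter changes. A sketch using the same Douban URL; the new `sort` value is only illustrative:

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse

douban_url = 'https://movie.douban.com/subject/4864908/comments?sort=new_score&status=P'
info = urlparse(douban_url)
print(info.netloc, info.path)

# Each value is a list because a key may appear more than once in a query string
query = parse_qs(info.query)
print(query)

# Change one parameter (illustrative value) and rebuild the full URL;
# doseq=True expands the list values back into key=value pairs
changed = dict(query, sort=['time'])
new_url = urlunparse(info._replace(query=urlencode(changed, doseq=True)))
print(new_url)
```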

4. Fetching web pages with the requests module

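```python
import requests
# requests raises its own HTTPError, not urllib.error.HTTPError
from requests.exceptions import HTTPError, RequestException


def get_content(url):
    try:
        response = requests.get(url, timeout=10)
        # Raise HTTPError if the status code is 4xx or 5xx
        response.raise_for_status()
        # Guess the encoding from the response body itself
        response.encoding = response.apparent_encoding
    except HTTPError as e:
        print(e)
    except RequestException as e:
        # Other failures: DNS errors, refused connections, timeouts, ...
        print(e)
    else:
        print(response.status_code)
        # print(response.headers)
        return response.text


if __name__ == '__main__':
    url = 'http://www.baidu.com'
    get_content(url)
```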
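`raise_for_status()` does not raise for every status other than 200: it raises only for 4xx and 5xx codes, so a 204 or a 3xx response passes through. A stdlib sketch of that rule; `raises_for_status` is a hypothetical helper written for illustration, not part of requests:

```python
def raises_for_status(status_code: int) -> bool:
    """Sketch of the check behind requests' raise_for_status():
    4xx -> client error, 5xx -> server error, anything else does not raise."""
    return 400 <= status_code < 600


for code in (200, 204, 301, 404, 503):
    print(code, raises_for_status(code))
```

Note that requests follows redirects by default, so a 301 is usually not the final status you see.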

5. Submitting data to a server with requests

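5.1 Common HTTP request methods:

GET: the submitted data is appended to the URL as a query string, so it is visible in the address bar, browser history and server logs (avoid it for passwords and other private data).

POST: the data is sent in the request body instead of the URL. It is hidden from the URL but not encrypted; only HTTPS protects it in transit.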

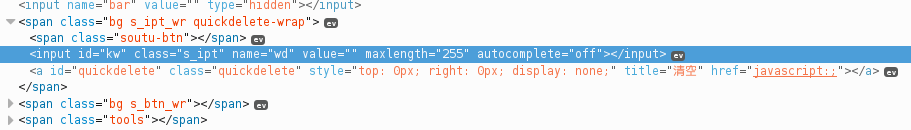

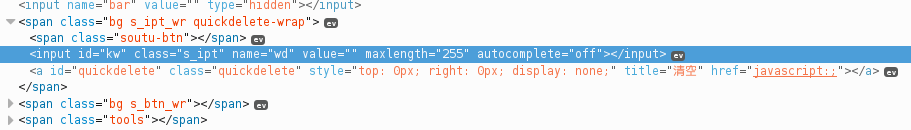
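The difference can be seen without sending anything by building `urllib.request.Request` objects: passing `data=` turns the request into a POST and keeps the form data out of the URL. The endpoint and form fields below are hypothetical:

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint and form fields, for illustration only
url = "https://example.com/login"
form = {"user": "alice", "password": "secret"}
encoded = urlencode(form)

# GET: the form data becomes part of the URL itself
get_req = Request(url + "?" + encoded)
# POST: the same data travels in the request body; the URL stays clean
post_req = Request(url, data=encoded.encode("ascii"))

print(get_req.get_method(), get_req.full_url)
print(post_req.get_method(), post_req.full_url, post_req.data)
```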

5.2 Submitting a search keyword to Baidu:

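```python
import requests


# keyword_post is not defined in these notes; this is an assumed helper.
# Baidu's search page reads the keyword from the wd query parameter of /s,
# so the data is sent with GET (params=) rather than POST.
def keyword_post(url, data):
    response = requests.get(url, params=data, timeout=10)
    print(response.url)
    return response.text


def baidu():
    url = "https://www.baidu.com/s"
    keyword = input("Enter a search keyword: ")
    # wd is the name of Baidu's keyword input field
    data = {
        'wd': keyword
    }
    keyword_post(url, data)


if __name__ == '__main__':
    baidu()
```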
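A non-ASCII keyword cannot appear in a URL as-is; it is percent-encoded as UTF-8 bytes, which is what requests does for you when you pass `params=`. A stdlib sketch, assuming the search endpoint `https://www.baidu.com/s` and the `wd` field:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

keyword = "爬虫"
# Assumed endpoint; urlencode percent-encodes the keyword as UTF-8
search_url = "https://www.baidu.com/s?" + urlencode({"wd": keyword})
print(search_url)  # https://www.baidu.com/s?wd=%E7%88%AC%E8%99%AB

# Decoding the query string recovers the original keyword
decoded = parse_qs(urlsplit(search_url).query)["wd"][0]
print(decoded)
```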

6. Parsing JSON data with requests

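```python
import requests

# Query an IP lookup service that answers in JSON
ip = input('IP:')
url = "http://ip.taobao.com/service/getIpInfo.php"
data = {
    'ip': ip
}
# params= appends data to the URL as a query string (?ip=...)
response = requests.get(url, params=data, timeout=10)

# Decode the JSON body into Python objects (dict, list, str, ...)
content = response.json()
print(content)
print(type(content))

country = content['data']['country']
print(country)
```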
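`response.json()` roughly amounts to running `json.loads` on the body. The sketch below does that offline on a hypothetical body shaped like the structure the code above indexes into (`content['data']['country']`); it is not real output from the Taobao service:

```python
import json

# Hypothetical response body, for illustration only
raw_body = '{"code": 0, "data": {"ip": "1.2.3.4", "country": "中国"}}'.encode("utf-8")

content = json.loads(raw_body)  # json.loads accepts UTF-8 bytes as well as str
print(type(content))            # <class 'dict'>

country = content["data"]["country"]
print(country)

# .get() avoids a KeyError if the service returns an error payload without "data"
print(content.get("data", {}).get("country"))
```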

response.content: the body as raw bytes, e.g. when downloading images or videos.

response.text: the body as str, decoded from the bytes using response.encoding.
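Because `response.text` is just `response.content` decoded with `response.encoding`, a wrong encoding produces garbled text rather than an error. requests falls back to ISO-8859-1 when a `text/*` Content-Type header carries no charset, which is why section 4 sets `response.encoding = response.apparent_encoding`. A stdlib sketch of the effect:

```python
# A UTF-8 body, as it might appear in response.content (bytes)
raw = "百度一下".encode("utf-8")

right = raw.decode("utf-8")       # correct encoding -> readable text
wrong = raw.decode("iso-8859-1")  # wrong encoding -> mojibake, but no exception
print(right)
print(wrong)
```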

7. Uploading files

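```python
import requests

# Hypothetical extra form fields sent alongside the file
data = {'description': 'movie poster'}

# Binary files must be opened in 'rb' mode; the with block closes the file afterwards
with open('doc/movie.jpg', 'rb') as f:
    files = {'file': f}
    response = requests.post(url='http://httpbin.org/post', data=data, files=files)

print(response.text)
```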
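When `files=` is given, the request body is sent as `multipart/form-data`: each form field and file becomes a part separated by a boundary string. The sketch below builds such a body by hand to show its shape; `encode_multipart` is a hypothetical helper, the file bytes are fake, and requests' actual output will differ in details such as the boundary value:

```python
import uuid


def encode_multipart(fields, files):
    """Build a multipart/form-data body by hand.

    fields: {name: str value}
    files:  {name: (filename, content_bytes, content_type)}
    Returns (body_bytes, content_type_header).
    """
    boundary = uuid.uuid4().hex  # random marker that must not occur in the data
    parts = []
    for name, value in fields.items():
        parts.append((
            f'--{boundary}\r\n'
            f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
            f'{value}\r\n'
        ).encode("utf-8"))
    for name, (filename, content, ctype) in files.items():
        header = (
            f'--{boundary}\r\n'
            f'Content-Disposition: form-data; name="{name}"; filename="{filename}"\r\n'
            f'Content-Type: {ctype}\r\n\r\n'
        ).encode("utf-8")
        parts.append(header + content + b"\r\n")
    # The closing boundary has two extra dashes
    parts.append(f"--{boundary}--\r\n".encode("utf-8"))
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


body, content_type = encode_multipart(
    {"description": "movie poster"},
    {"file": ("movie.jpg", b"\xff\xd8\xff fake jpeg bytes", "image/jpeg")},
)
print(content_type)
print(body.decode("latin-1"))
```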